Did you know that the future of transportation might be shaped by algorithms that learn from trial and error? Welcome to the world of reinforcement learning (RL) in autonomous vehicles, where machines are taught to make decisions just like humans do – through experience.

Reinforcement learning is transforming the development of self-driving cars. But what exactly is RL, and why is it so crucial for autonomous vehicles? Let’s explore this fascinating intersection of artificial intelligence and automotive technology.

At its core, RL is a type of machine learning where an agent (in this case, an autonomous vehicle) learns to make decisions by interacting with its environment. Imagine a car that improves its driving skills with each journey, learning from its successes and mistakes. That’s the power of reinforcement learning.

In the context of autonomous vehicles, RL algorithms enable cars to learn optimal behaviors for various driving scenarios. From navigating complex intersections to merging onto highways, these algorithms help vehicles make split-second decisions based on their learned experiences.

Throughout this article, we’ll explore the methodologies that make RL in autonomous vehicles possible, delve into the challenges faced by researchers and engineers, and peek into the future directions of this groundbreaking technology. We’re in for an exciting ride!

Main Takeaways:

- Reinforcement learning enables autonomous vehicles to learn and improve through interaction with their environment

- RL algorithms help self-driving cars make optimal decisions in various driving scenarios

- We’ll explore RL methodologies, challenges, and future directions in autonomous driving

As we navigate this topic, we’ll uncover how RL is transforming autonomous driving, potentially reshaping the future of transportation. Are you ready to explore the cutting edge of automotive AI? Let’s hit the road!

How Reinforcement Learning Works in Autonomous Vehicles

Reinforcement learning (RL) enables autonomous vehicles to make intelligent decisions in complex environments. RL allows self-driving cars to learn optimal behaviors through trial-and-error interactions with the world around them.

The process of making ethical decisions in uncertain situations becomes challenging when safety needs to be prioritized over traffic rules because it cannot be fully tested in controlled environments.

– Linda Chavez, Founder & CEO, Seniors Life Insurance Finder

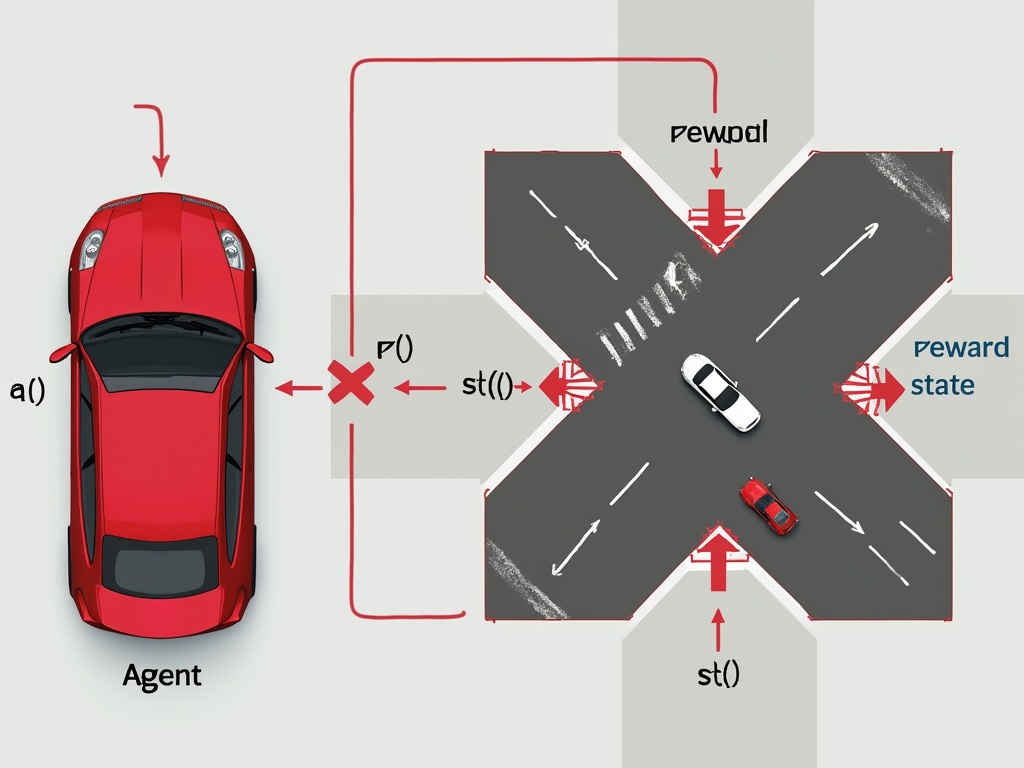

The process begins with the vehicle, acting as an agent, observing its current state. This state might include factors like its position on the road, speed, and the location of nearby objects. Based on this information, the agent selects an action, such as accelerating, braking, or steering.

After taking an action, the environment responds, transitioning the agent to a new state. The agent also receives a reward signal, indicating how good or bad the chosen action was. For example, safely navigating a turn might yield a positive reward, while veering off the road would result in a negative one.
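To make the reward signal concrete, here is a minimal sketch of a hand-written reward function for a simplified lane-keeping scenario. The state fields (`lateral_offset_m`, `speed_mps`, `gap_to_lead_m`), the lane width, and every weight and penalty value are illustrative assumptions rather than values from any real system. Production reward design is considerably more involved.

```python
from dataclasses import dataclass

LANE_HALF_WIDTH_M = 1.75     # assumed 3.5 m lane
MIN_SAFE_GAP_M = 2.0         # assumed: closer than this counts as a collision


@dataclass(frozen=True)
class DrivingState:
    lateral_offset_m: float  # distance from the lane centre (sign = side)
    speed_mps: float         # forward speed in metres per second
    gap_to_lead_m: float     # distance to the vehicle ahead


def reward(state: DrivingState, target_speed_mps: float = 25.0) -> float:
    """Illustrative reward; all weights and penalties are assumptions."""
    if abs(state.lateral_offset_m) > LANE_HALF_WIDTH_M:
        return -100.0        # veered out of the lane: large negative reward
    if state.gap_to_lead_m < MIN_SAFE_GAP_M:
        return -100.0        # (near-)collision with the lead vehicle
    progress = min(state.speed_mps, target_speed_mps) / target_speed_mps
    centring = 1.0 - abs(state.lateral_offset_m) / LANE_HALF_WIDTH_M
    overspeed = max(0.0, state.speed_mps - target_speed_mps)
    return progress + centring - 0.5 * overspeed


print(reward(DrivingState(0.0, 25.0, 40.0)))   # centred, at target speed: 2.0
print(reward(DrivingState(2.0, 25.0, 40.0)))   # out of the lane: -100.0
```

Small positive rewards for progress and staying centred, combined with large negative rewards for leaving the lane or closing too tightly on the car ahead, are one common way to express "good" and "bad" outcomes numerically.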

Through repeated interactions, the autonomous vehicle aims to learn a policy—a strategy for choosing actions that maximizes long-term rewards. This learning process allows the car to gradually improve its decision-making capabilities, optimizing its driving behavior over time.
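The loop described above (observe a state, act, receive a reward, update) can be sketched with tabular Q-learning, one of the simplest RL algorithms, on a toy lane-keeping task. Everything here is a hypothetical simplification: the road is seven lateral cells, the car can steer left, keep straight, or steer right, and an assumed crosswind occasionally pushes it one cell to the right. The discount factor `gamma` is what makes the agent value long-term reward instead of only the next step.

```python
import random

N_CELLS = 7                 # lateral positions; cells 0 and 6 are off the road
CENTER = 3                  # lane centre
ACTIONS = (-1, 0, 1)        # steer left, keep straight, steer right
WIND_PROB = 0.2             # assumed chance of a gust pushing the car one cell right


def step(pos, action_idx, rng):
    """Toy environment: returns (next_position, reward, episode_done)."""
    nxt = pos + ACTIONS[action_idx]
    if rng.random() < WIND_PROB:
        nxt += 1
    nxt = max(0, min(N_CELLS - 1, nxt))
    if nxt in (0, N_CELLS - 1):
        return nxt, -10.0, True               # left the road: penalty, episode ends
    return nxt, (1.0 if nxt == CENTER else 0.0), False


def greedy(q_row):
    """Index of the highest-valued action (ties go to the first index)."""
    return max(range(len(ACTIONS)), key=lambda a: q_row[a])


def train(episodes=5000, alpha=0.1, gamma=0.9, epsilon=0.1, max_steps=20, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_CELLS)]
    for _ in range(episodes):
        pos = rng.randint(1, N_CELLS - 2)        # start anywhere on the road
        for _ in range(max_steps):
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore
            a = rng.randrange(len(ACTIONS)) if rng.random() < epsilon else greedy(q[pos])
            nxt, r, done = step(pos, a, rng)
            # Q-learning target: immediate reward plus discounted best future value
            target = r if done else r + gamma * max(q[nxt])
            q[pos][a] += alpha * (target - q[pos][a])
            pos = nxt
            if done:
                break
    return q


def policy(q):
    """Greedy steering direction for each on-road cell."""
    return {cell: ACTIONS[greedy(q[cell])] for cell in range(1, N_CELLS - 1)}


print(policy(train()))
```

With these settings the learned greedy policy steers back toward the centre cell from either side and keeps straight in the centre. Real driving agents replace the table with neural networks and the seven cells with rich sensor input, but the update follows the same idea.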

One key advantage of reinforcement learning is its potential to handle the unpredictability of real-world driving. Unlike rule-based systems, which depend on engineers anticipating each situation in advance, RL algorithms learn behavior from experience. This lets them adapt to new situations and, when trained on sufficiently varied experience, generalize to unfamiliar environments.

Consider a self-driving car approaching an intersection. Using RL, it can learn to recognize traffic patterns, anticipate the actions of other vehicles, and make safe decisions about when to proceed. The more the car interacts with various intersection scenarios, the better it becomes at navigating them efficiently and safely.

Importantly, reinforcement learning in autonomous vehicles often takes place in simulated environments before being deployed on real roads. This allows for extensive training without risking safety. Companies like Wayve have demonstrated how RL-trained models can successfully transfer their skills to real-world driving conditions.

Despite simulations, it is difficult to train an autonomous vehicle on when it should react if a street bulges, a person stops in the middle of the road with a wave to continue the ride, or makes eye contact to show intentions.

– Harkamaljeet Singh, CEO, Silver Taxi Melbourne

While reinforcement learning shows great promise for autonomous driving, it’s not without challenges. Ensuring safe exploration during learning, dealing with partial observability of the environment, and scaling to the complexity of urban driving are active areas of research in the field.

As technology advances, reinforcement learning continues to play an increasingly important role in the development of safer, more capable autonomous vehicles. By harnessing the power of learning from experience, self-driving cars are getting closer to matching and potentially surpassing human-level driving performance.

Challenges of Reinforcement Learning in Autonomous Vehicles

Reinforcement learning (RL) holds great promise for developing autonomous vehicles, but several key challenges emerge when applying RL in this domain. Let’s explore the major hurdles facing researchers and engineers as they work to create effective RL-based driving models.

Data Bias

One of the foremost challenges is data bias. RL algorithms learn from the data they’re trained on, but this data may not fully represent all driving scenarios. For example, if the training data primarily consists of highway driving in clear weather, the model may struggle in urban environments or adverse conditions.

Even quite elaborate simulated experiences are not capable of capturing the serendipity or subtlety of such human deliberation, either in chaotic or ambiguously labeled situations, which further restricts the generalization of the learned behaviors and then when applied to reality.

– Aryan Bhardwaj, Owner, Spartan Equipment

This bias can lead to dangerous situations where the vehicle makes incorrect decisions in unfamiliar scenarios. Imagine an autonomous car trained mostly on data from countries that drive on the right trying to navigate a country that drives on the left – the consequences could be disastrous.

To address this, researchers must carefully curate diverse datasets that cover a wide range of driving conditions, locations, and scenarios. However, collecting such comprehensive data is time-consuming and expensive.
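One simple starting point for curation is to audit how well existing data covers combinations of conditions. The sketch below assumes that each recorded scenario carries hypothetical `weather` and `road` tags, and it reports every combination with too few examples. The tag vocabularies and the `min_count` threshold are placeholders.

```python
from collections import Counter
from itertools import product

WEATHER = ("clear", "rain", "fog", "snow")     # assumed tag vocabulary
ROAD_TYPES = ("highway", "urban", "rural")


def coverage_gaps(scenarios, min_count=50):
    """List (weather, road) combinations with fewer than min_count recorded scenarios."""
    counts = Counter((s["weather"], s["road"]) for s in scenarios)
    return [combo for combo in product(WEATHER, ROAD_TYPES) if counts[combo] < min_count]


# A hypothetical dataset skewed toward clear-weather highway driving
dataset = ([{"weather": "clear", "road": "highway"}] * 900
           + [{"weather": "rain", "road": "urban"}] * 60
           + [{"weather": "snow", "road": "rural"}] * 5)
print(coverage_gaps(dataset))
```

Only two of the twelve combinations pass here, which makes the skew visible before any training begins. The gap list can then guide targeted data collection or simulation.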

Safety Concerns

Safety is paramount in autonomous driving, but traditional RL algorithms aren’t inherently safe. They often learn through trial and error, which is unacceptable when errors could lead to accidents or fatalities.

Researchers are developing safe reinforcement learning (SRL) techniques to address this issue. These methods incorporate constraints and safety considerations into the learning process. For instance, some algorithms use risk estimation to avoid actions deemed too dangerous.
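As a minimal sketch of risk-aware action selection (a simplified illustration, not any specific published SRL algorithm), the agent below estimates each action's collision risk from hypothetical counts of past outcomes. It then chooses only among actions whose estimated risk falls under a threshold. The action names, statistics, and the 5% threshold are assumptions. Laplace smoothing makes rarely tried actions look risky by default, which is a deliberately conservative choice.

```python
def estimated_risk(collisions: int, trials: int) -> float:
    """Laplace-smoothed collision rate; an untried action starts at 0.5 (risky)."""
    return (collisions + 1) / (trials + 2)


def select_safe_action(q_values, outcome_stats, max_risk=0.05, fallback="brake"):
    """Highest-value action whose estimated risk is at most max_risk."""
    safe = [a for a in q_values
            if estimated_risk(*outcome_stats.get(a, (0, 0))) <= max_risk]
    if not safe:
        return fallback        # nothing passes the risk check: fall back to braking
    return max(safe, key=lambda a: q_values[a])


q_values = {"accelerate": 2.0, "change_lane_left": 2.5, "keep_speed": 1.5, "brake": 0.5}
outcome_stats = {                 # hypothetical (collisions, trials) per action
    "accelerate": (3, 20),
    "change_lane_left": (0, 0),
    "keep_speed": (0, 98),
    "brake": (0, 198),
}
print(select_safe_action(q_values, outcome_stats))   # keep_speed
```

The highest-valued action (`change_lane_left`) is rejected because it has never been tried, and `accelerate` is rejected because of its collision history. This leaves `keep_speed` as the best acceptable choice.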

Despite these advances, ensuring absolute safety in all possible scenarios remains a significant challenge. The unpredictable nature of real-world traffic and the potential for sensor failures or unexpected obstacles make this a complex problem to solve.

As I’m bouncing from bike to bike or zipping from rental pick-up to rental drop-off, I see the odd way driver-assistance cars react to motorcycles. They’ll brake check me while I’m just lane positioning regularly, or they don’t realize that motorcycles can get up to speed way more quickly than cars when merging.

– Carlos Nasillo, CEO, Riderly

Computational Demands

RL algorithms, especially those using deep learning, require substantial computational resources. Training a model to handle the complexities of driving can take weeks or even months on high-performance hardware.

This computational intensity poses challenges for both development and deployment. During development, it slows down the iteration process, making it difficult to quickly test and refine models. In deployment, it may require powerful onboard computers, increasing the cost and energy consumption of autonomous vehicles.

Researchers are exploring ways to make RL more efficient, such as transfer learning and meta-learning approaches. However, balancing computational efficiency with performance remains an ongoing challenge.

Integration with Existing Systems

Autonomous vehicles need to integrate with existing automotive systems and infrastructure. This integration presents its own set of challenges for RL-based models.

For example, an RL algorithm might learn an optimal driving behavior that conflicts with traditional rules-based systems handling things like traction control or emergency braking. Resolving these conflicts and ensuring smooth interaction between RL components and other vehicle systems is crucial.

Moreover, RL models need to interface with a variety of sensors, each with its own quirks and potential failure modes. Ensuring robust performance across this complex system of systems is a significant engineering challenge.

Despite these hurdles, the potential of reinforcement learning in autonomous driving is immense. As researchers continue to tackle these challenges, we move closer to a future where AI can navigate our roads as safely and efficiently as the best human drivers – perhaps even better.

Solutions to Overcome Reinforcement Learning Challenges

Reinforcement learning (RL) holds immense potential for autonomous vehicles, but implementing it effectively comes with several hurdles. Researchers have proposed a range of innovative solutions to address these challenges and push the boundaries of what’s possible in self-driving technology.

Improving Data Diversity

One of the key issues in RL for autonomous vehicles is the lack of diverse, real-world training data. To combat this, researchers are exploring creative data augmentation techniques.

Simulation environments like CARLA allow for the generation of vast amounts of synthetic data, exposing AI models to a wide range of driving scenarios. This approach helps autonomous vehicles learn to handle rare or dangerous situations without real-world risk.

Additionally, techniques like domain randomization introduce deliberate variations in visual elements, weather conditions, and traffic patterns. This trains the AI to be more robust and adaptable to real-world unpredictability.
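A minimal sketch of domain randomization: before each training episode, a fresh set of environment parameters is sampled so the agent never sees exactly the same conditions twice. The `EpisodeConfig` fields and their ranges are hypothetical, and the code does not use CARLA's actual API. In a real pipeline, each sampled config would be applied to the simulator before the episode runs.

```python
import random
from dataclasses import dataclass

WEATHER_OPTIONS = ("clear", "rain", "fog", "snow")


@dataclass(frozen=True)
class EpisodeConfig:
    """Hypothetical per-episode simulator settings."""
    weather: str
    road_friction: float       # 1.0 = dry asphalt, lower = slippery (assumed scale)
    traffic_density: float     # fraction of spawn points occupied
    sun_altitude_deg: float    # affects glare and shadows in camera images


def sample_episode_config(rng: random.Random) -> EpisodeConfig:
    """Draw a fresh, randomized environment for one training episode."""
    return EpisodeConfig(
        weather=rng.choice(WEATHER_OPTIONS),
        road_friction=rng.uniform(0.3, 1.0),
        traffic_density=rng.uniform(0.0, 0.8),
        sun_altitude_deg=rng.uniform(-10.0, 90.0),
    )


rng = random.Random(42)
configs = [sample_episode_config(rng) for _ in range(1000)]
print(sorted({c.weather for c in configs}))
```

Seeding the generator keeps training runs reproducible while still exposing the agent to a wide spread of conditions.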

Implementing Safe RL Algorithms

Safety is paramount in autonomous driving, and traditional RL algorithms often fall short in this critical area. Enter safe reinforcement learning (SRL), a game-changing approach to developing trustworthy AI drivers.

SRL algorithms, like Parallel Constrained Policy Optimization (PCPO), incorporate safety constraints directly into the learning process. The policy is optimized subject to limits on safety-related costs, such as collisions or unsafe following distances. The aim is to keep the AI within those limits even during the exploration phase of learning.
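PCPO itself is too involved for a short example. The core idea behind many constrained RL methods can be shown with the simpler Lagrangian (primal-dual) approach, which is a different technique from PCPO. In this toy sketch, the "policy" is just a target cruise speed, and the reward and safety-cost functions are invented closed-form stand-ins for quantities a real system would estimate from rollouts. A multiplier `lam` acts as a price on constraint violation: it rises while the expected cost exceeds the limit and stops changing once the constraint is met.

```python
SPEEDS = range(10, 41)      # candidate "policies": target cruise speed in m/s (toy)
COST_LIMIT = 0.25           # assumed bound on expected safety cost


def expected_reward(speed: float) -> float:
    return speed                        # toy assumption: faster means more progress


def expected_cost(speed: float) -> float:
    return (speed / 40.0) ** 2          # toy assumption: risk grows with speed


def lagrangian_solve(iterations: int = 200, step_size: float = 10.0):
    lam = 0.0                           # Lagrange multiplier: price of violating the limit
    speed = max(SPEEDS)
    for _ in range(iterations):
        # Primal step: best policy for the current penalty on safety cost
        speed = max(SPEEDS, key=lambda s: expected_reward(s) - lam * (expected_cost(s) - COST_LIMIT))
        # Dual step: raise the price while the constraint is violated, lower it otherwise
        lam = max(0.0, lam + step_size * (expected_cost(speed) - COST_LIMIT))
    return speed, lam


speed, lam = lagrangian_solve()
print(speed, round(lam, 2))
```

Without the constraint, the fastest speed (40 m/s) would win. With it, the selection settles at 20 m/s, the fastest candidate whose cost, (20/40)² = 0.25, stays within the limit.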

Another promising technique is the use of ‘shielding’ mechanisms. These act as a safety net, intervening to prevent the AI from taking potentially dangerous actions in uncertain situations.
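A shield can be as simple as a hand-written rule that checks each action proposed by the learned policy before it reaches the vehicle. The sketch below uses time-to-collision with the car ahead as the safety test. The 3-second threshold, the action names, and "brake" as the override are illustrative assumptions, not a standard.

```python
import math


def time_to_collision(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """Seconds until contact if both vehicles keep their current speeds."""
    closing_speed = ego_speed_mps - lead_speed_mps
    return math.inf if closing_speed <= 0 else gap_m / closing_speed


def shield(proposed_action: str, gap_m: float, ego_speed_mps: float,
           lead_speed_mps: float, min_ttc_s: float = 3.0) -> str:
    """Let the learned policy act unless time-to-collision is too short."""
    if time_to_collision(gap_m, ego_speed_mps, lead_speed_mps) < min_ttc_s:
        return "brake"         # override: the proposed action is not trusted here
    return proposed_action


print(shield("accelerate", gap_m=20.0, ego_speed_mps=25.0, lead_speed_mps=15.0))   # brake
print(shield("accelerate", gap_m=100.0, ego_speed_mps=25.0, lead_speed_mps=15.0))  # accelerate
```

Because the shield sits outside the learned policy, it can be reviewed and tested like conventional safety code, regardless of how the policy was trained.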

Optimizing Computational Methods

The sheer complexity of autonomous driving demands significant computational power. Researchers are developing innovative ways to make RL more efficient and practical for real-world deployment.

| Technique | Description | Benefits | Challenges |
|---|---|---|---|
| Hierarchical Reinforcement Learning | Breaks down the driving task into manageable sub-tasks. | Faster convergence on optimal policies. | Implementation complexity. |
| Transfer Learning | Enables AI models to apply knowledge gained in one driving scenario to new, similar situations. | Reduces the amount of training data needed. | Potential for negative transfer, where the transferred knowledge is not relevant. |
| Safe Reinforcement Learning | Incorporates constraints and safety considerations into the learning process. | Helps the AI prioritize safety. | Balancing exploration with safety constraints. |
| Simulation Environments | Generate vast amounts of synthetic data for training AI models. | Allows training without real-world risk. | Simulated data may not fully capture real-world complexities. |

Developing Robust Integration Techniques

For autonomous vehicles to become a reality, RL systems need to seamlessly integrate with existing automotive technologies and infrastructure. This presents a unique set of challenges that researchers are actively tackling.

One approach involves creating hybrid systems that combine the adaptability of RL with the reliability of traditional rule-based control methods. This allows for a gradual transition as RL models prove their effectiveness in specific driving tasks.
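One way to picture this gradual transition is a controller that routes each driving task to the RL policy only after that task has passed validation, and keeps every other task on the rule-based controller. In the sketch below, `HybridController`, the task names, the success rates, and the 0.99 threshold are all hypothetical. The two policies are stand-in functions.

```python
from typing import Callable, Dict

Policy = Callable[[dict], str]   # maps an observation to an action name


class HybridController:
    """Route each driving task to the RL policy only once it has been validated."""

    def __init__(self, rl_policy: Policy, rule_policy: Policy,
                 validated_success: Dict[str, float], threshold: float = 0.99):
        self.rl_policy = rl_policy
        self.rule_policy = rule_policy
        self.validated_success = validated_success   # task -> success rate in validation
        self.threshold = threshold

    def uses_rl(self, task: str) -> bool:
        # Unvalidated tasks default to 0.0, so they stay on the rule-based controller
        return self.validated_success.get(task, 0.0) >= self.threshold

    def act(self, task: str, observation: dict) -> str:
        policy = self.rl_policy if self.uses_rl(task) else self.rule_policy
        return policy(observation)


controller = HybridController(
    rl_policy=lambda obs: "rl_action",
    rule_policy=lambda obs: "rule_action",
    validated_success={"lane_keeping": 0.995, "unprotected_left_turn": 0.93},
)
for task in ("lane_keeping", "unprotected_left_turn", "parking"):
    print(task, controller.act(task, {}))
```

As validation results improve, more tasks cross the threshold and move to the RL policy, without changing the code path for the others.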

Researchers are also exploring ways to make RL models more interpretable. This is crucial for building trust with regulators and the public, as well as for debugging and improving the systems over time.

By addressing these core challenges, the future of RL in autonomous vehicles looks incredibly promising. As these solutions continue to evolve, we move closer to a world where safe, efficient self-driving cars are a common sight on our roads.

Case Studies of RL in Autonomous Driving

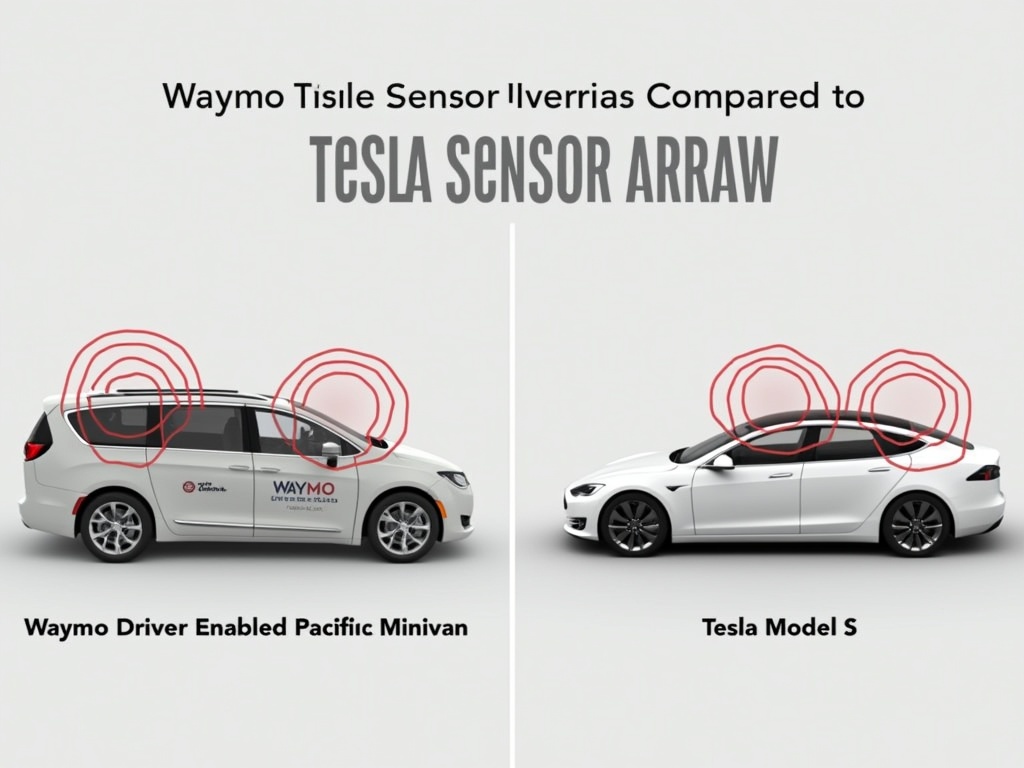

Waymo vs Tesla sensor array comparison

Major players like Waymo and Tesla are embracing reinforcement learning (RL) to develop fully autonomous vehicles. These companies have made significant strides in applying RL algorithms to tackle the complex challenges of self-driving cars.

Waymo, formerly the Google self-driving car project, has been at the forefront of autonomous vehicle development and has explored learning-based techniques, including RL, to improve its vehicles’ decision-making in unpredictable traffic scenarios. A related research innovation is ‘dynamic confidence-aware reinforcement learning’ (DCARL), developed by researchers at Tsinghua University and the University of Michigan. It is designed to let a self-driving system keep improving its performance over time.

Tesla has taken a different approach with what CEO Elon Musk has called ‘shadow mode’, in which driving software runs in the background of customer cars and its decisions are compared with the human driver’s, without the software controlling the vehicle. This lets Tesla learn from millions of miles of real-world driving data collected from its customer fleet, a massive dataset that helps train its neural networks to handle a wide variety of driving situations.

Both companies have seen successes and faced challenges in their RL implementations. Waymo’s vehicles have shown impressive capabilities in complex urban environments, while Tesla’s Autopilot system has demonstrated the potential for widespread deployment of semi-autonomous features.

However, these case studies also highlight ongoing challenges. Ensuring safety and reliability in all possible scenarios remains a significant hurdle. Additionally, the computational demands of processing vast amounts of sensory data in real-time continue to push the boundaries of current hardware capabilities.

The integration of reinforcement learning in autonomous vehicles represents a significant leap forward, but it’s clear that we’re still in the early stages of this technology’s potential.

– Dr. Huei Peng, Director of Mcity at the University of Michigan

As these companies continue to refine their RL algorithms and gather more real-world data, we can expect to see further advancements in the capabilities of self-driving vehicles. The lessons learned from these pioneering efforts will undoubtedly shape the future of autonomous transportation.

Future Prospects of Reinforcement Learning in Autonomous Vehicles

The road ahead for reinforcement learning in autonomous vehicles looks exceptionally promising. AI is evolving rapidly, bringing us to the brink of a transportation revolution that could redefine mobility and road safety. One of the most exciting developments is the integration of reinforcement learning with more powerful computational resources. As processors become faster and more efficient, autonomous vehicles will be able to make split-second decisions with unprecedented accuracy, potentially avoiding accidents.

Enhanced data collection methods are set to boost the learning capabilities of self-driving cars. By accessing vast pools of real-world driving data, these vehicles will become increasingly adept at navigating complex traffic scenarios. Imagine a future where your car has effectively ‘seen’ millions of miles of road before you even sit in the driver’s seat.

The true game-changer may lie in collaborative learning. Experts predict that autonomous vehicles will soon share their learned experiences with each other, creating a collective intelligence that evolves in real-time. This could lead to rapid improvements in safety and efficiency across entire fleets of vehicles.
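One established way to share what vehicles learn without pooling their raw sensor data is federated averaging (FedAvg). Each vehicle trains locally and sends only its model parameters, which a server averages, weighting each vehicle by how much data it trained on. The sketch below shows only the averaging step, with plain lists of floats standing in for real model weights. Treating fleet learning as FedAvg is an assumption about how such collaboration might work, not a description of any deployed system.

```python
from typing import List, Sequence


def federated_average(vehicle_weights: Sequence[Sequence[float]],
                      sample_counts: Sequence[int]) -> List[float]:
    """FedAvg aggregation: weight each vehicle's parameters by its local data size."""
    total = sum(sample_counts)
    n_params = len(vehicle_weights[0])
    return [
        sum(w[i] * n for w, n in zip(vehicle_weights, sample_counts)) / total
        for i in range(n_params)
    ]


# Two hypothetical vehicles; the second trained on three times as much data.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))   # [2.5, 3.5]
```

The averaged parameters would then be sent back to every vehicle as the starting point for its next round of local training.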

However, with great power comes great responsibility. As these technologies advance, we must address important ethical and regulatory questions. How do we ensure that AI-driven decisions align with human values? What happens when an autonomous vehicle must choose between two potentially harmful outcomes?

Despite these challenges, the future of reinforcement learning in autonomous vehicles is undeniably bright. As we stand on the brink of this new era, one thing is clear: the cars of tomorrow will be smarter, safer, and more efficient than anything we’ve seen before. It’s going to be an exciting ride.

Leveraging SmythOS for Enhanced Autonomous Driving


SmythOS is transforming autonomous driving with its powerful toolkit and robust platform. By integrating reinforcement learning capabilities, it empowers developers to create more intelligent and responsive self-driving systems.

One key advantage of SmythOS is its comprehensive support for major graph databases. This feature allows teams to efficiently model complex road networks and traffic patterns, enabling vehicles to make more informed decisions in real-time.

The platform’s semantic technologies advance autonomous driving by helping vehicles understand context and meaning beyond raw sensor data, improving their ability to interpret their environment and predict potential hazards.

Importantly, SmythOS offers an intuitive visual workflow builder for developing driving agents. This tool democratizes AI development, allowing even those without deep coding expertise to contribute to autonomous vehicle projects.

Real-time debugging is another crucial feature. Developers can monitor and troubleshoot systems on the fly, significantly reducing development time and improving safety outcomes.

With enterprise-grade security controls, SmythOS ensures that sensitive data and algorithms remain protected, essential for building public trust in self-driving technology.

The scalability of SmythOS is particularly valuable for autonomous driving applications. As self-driving fleets grow, the platform can easily accommodate increased demands without compromising performance.

By leveraging SmythOS, teams can focus on innovation rather than backend infrastructure, accelerating the development of safer, more efficient self-driving vehicles.

SmythOS transforms autonomous vehicle development from a complex challenge into a manageable, secure, and scalable solution.

In summary, SmythOS provides a comprehensive ecosystem for autonomous driving development. Its integration of reinforcement learning, graph databases, and semantic technologies, combined with powerful development and debugging tools, positions it as a game-changer in the race towards fully autonomous vehicles.

| Feature | Benefit |
|---|---|
| Graph Database Support | Efficient modeling of complex road networks and traffic patterns |
| Semantic Technologies | Better interpretation of environment and prediction of potential hazards |
| Visual Workflow Builder | Allows non-experts to contribute to autonomous vehicle projects |
| Real-time Debugging | Monitor and troubleshoot systems on the fly, reducing development time |
| Enterprise-Grade Security | Protection of sensitive data and algorithms |
| Scalability | Accommodates increased demands without compromising performance |

Conclusion

Reinforcement learning is set to transform the autonomous vehicle industry, enhancing decision-making capabilities for self-driving cars. Despite challenges, advancements in AI technology and methodologies indicate a promising future for transportation.

Reinforcement learning algorithms enable autonomous vehicles to learn from experience, improving their ability to navigate complex road scenarios. This iterative problem-solving approach holds great promise for creating safer and more reliable self-driving systems.

The evolution of autonomous vehicles is likely to accelerate as researchers refine techniques for handling real-world traffic dynamics. Improved sensor technologies, sophisticated simulation environments, and innovative behavior planning are all contributing to this progress.

Platforms like SmythOS are crucial in this transformation. By providing robust tools for developing, testing, and deploying AI agents, SmythOS empowers researchers and engineers to push the boundaries of autonomous driving. Its focus on explainable AI and real-time monitoring addresses key challenges in building public trust and ensuring regulatory compliance.

The future of transportation will be shaped by the convergence of reinforcement learning, advanced sensors, and powerful development platforms. While the road ahead may have twists and turns, the destination—a world of safer, more efficient, and truly autonomous vehicles—is becoming clearer.