AI agents are changing the game in machine learning. These smart software programs work on their own to tackle complex tasks, learn from their surroundings, and get better over time. In this article, we’ll take a deep dive into what makes AI agents tick and why they’re so important for developers and tech leaders.

AI agents in machine learning are like digital workers that never sleep. They can handle jobs that would take humans hours or even days to complete. These agents use advanced algorithms to make decisions, solve problems, and adapt to new situations without constant human input.

As we explore AI agents, we’ll cover several key areas:

- The building blocks that power AI agents

- How these agents make our work easier and better

- The different kinds of AI agents you might encounter

- Real-world examples of AI agents in action

Whether you’re a seasoned developer or just starting to learn about AI, understanding AI agents is crucial in today’s tech landscape. They’re not just tools – they’re partners that can help us push the boundaries of what’s possible in machine learning.

So, let’s roll up our sleeves and dive into the world of AI agents. By the end of this article, you’ll have a solid grasp of how these digital helpers are shaping the future of technology and how you can put them to work in your own projects.

Key Components of AI Agents

AI agents rely on several crucial components working in harmony to accomplish tasks intelligently and autonomously. Let’s explore the key building blocks that enable these artificial entities to perceive, think, and learn:

Perception Mechanisms

An AI agent’s eyes and ears are its perception mechanisms. These allow the agent to gather data about its environment, whether that’s visual information from cameras, audio from microphones, or other sensory inputs. Without accurate perception, an agent would be flying blind.

For example, a self-driving car uses cameras and sensors to constantly monitor the road, other vehicles, and obstacles. This steady stream of perceptual data forms the foundation for the agent’s decision-making.

Reasoning Capabilities

Raw data isn’t enough – AI agents need the ability to make sense of what they perceive. This is where reasoning capabilities come in. Reasoning allows agents to analyze information, identify patterns, and make logical decisions based on their goals and current situation.

Developers are training AI so that they understand what things are, not what things aren’t, to put it simply. So, when there is nuance or when things just aren’t as straightforward, AI agents can really struggle with proper reasoning.

– Edward Tian, CEO, GPTZero

Imagine a chess-playing AI. Its reasoning module evaluates potential moves, anticipates the opponent’s strategy, and selects the most promising course of action. This cognitive processing is what separates a truly intelligent agent from a simple programmed response.
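A real chess engine is far too large to show here, but the core idea of evaluating moves while anticipating the opponent can be sketched with minimax on a much smaller game. The sketch below assumes a toy take-away game as a stand-in for chess: players alternately remove 1 to 3 stones, and whoever takes the last stone wins. `minimax` and `best_move` are illustrative names, not any engine's API.

```python
from functools import lru_cache

# Toy stand-in for chess (an assumption for illustration):
# players alternately remove 1-3 stones; whoever takes the last stone wins.
MOVES = (1, 2, 3)


@lru_cache(maxsize=None)
def minimax(stones: int, maximizing: bool) -> int:
    """Return +1 if the maximizing player wins under perfect play, else -1."""
    if stones == 0:
        # The previous player took the last stone, so the player to move has lost.
        return -1 if maximizing else 1
    # Look one move ahead for every legal move, assuming the opponent plays its best reply.
    scores = [minimax(stones - m, not maximizing) for m in MOVES if m <= stones]
    return max(scores) if maximizing else min(scores)


def best_move(stones: int) -> int:
    """Pick the move whose resulting position scores best for the player to move."""
    legal = [m for m in MOVES if m <= stones]
    # After our move it is the opponent's (minimizing) turn.
    return max(legal, key=lambda m: minimax(stones - m, False))
```

For example, `best_move(5)` returns 1, leaving the opponent facing 4 stones, a losing position under perfect play. Real chess engines add depth limits, heuristic evaluation functions, and pruning such as alpha-beta, because the full chess game tree is far too large to search exhaustively.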

Memory Storage

Just like humans, AI agents benefit immensely from the ability to remember and learn from past experiences. Memory components allow agents to store important information, recall relevant facts, and build up a knowledge base over time.

A customer service chatbot, for instance, might remember details from previous conversations with a user. This allows it to provide more personalized and context-aware responses in future interactions.
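One simple way to give a chatbot this kind of memory is a per-user store that keeps a bounded window of recent messages plus a few durable facts. The class below is a minimal sketch under that assumption; `ConversationMemory` and its methods are hypothetical names, and a production system would typically persist this data and apply retention limits.

```python
from collections import defaultdict, deque


class ConversationMemory:
    """Hypothetical per-user memory: a bounded window of recent messages plus key/value facts."""

    def __init__(self, max_turns: int = 5):
        # Each user gets a deque that silently drops the oldest message once full.
        self._history = defaultdict(lambda: deque(maxlen=max_turns))
        self._facts = defaultdict(dict)

    def remember_turn(self, user_id: str, message: str) -> None:
        self._history[user_id].append(message)

    def remember_fact(self, user_id: str, key: str, value: str) -> None:
        self._facts[user_id][key] = value

    def recall(self, user_id: str) -> dict:
        """Return the context an agent could feed into its next reply."""
        return {
            "recent": list(self._history[user_id]),
            "facts": dict(self._facts[user_id]),
        }


memory = ConversationMemory(max_turns=2)
memory.remember_fact("u42", "preferred_name", "Sam")
for msg in ["My order is late", "It was order #1001", "Any update?"]:
    memory.remember_turn("u42", msg)
print(memory.recall("u42"))
```

With `max_turns=2`, the oldest message is dropped, so the recalled context holds only the last two messages plus the stored preferred name.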

When we built memory systems that mirrored these qualities into our REBL Labs marketing automation, our retention rates increased by 35% within six months.

– REBL Risty, CEO, REBL Marketing

Learning Modules

Perhaps the most exciting aspect of AI agents is their capacity for improvement. Learning modules enable agents to adapt their behavior based on successes, failures, and new information. This allows them to tackle novel situations and become more effective over time.

Consider a recommendation system on a streaming platform. As it observes user behavior and receives feedback, its learning module helps it refine its suggestions, leading to increasingly accurate and satisfying recommendations.
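A common, simple way to turn feedback into better suggestions is a multi-armed bandit. The sketch below uses epsilon-greedy selection with a running average of feedback per item. It is one illustrative technique, not a description of how any particular streaming platform works, and the item names and click rates in the demo are made up.

```python
import random


class EpsilonGreedyRecommender:
    """Mostly recommend the best-rated item so far, occasionally explore others."""

    def __init__(self, items, epsilon=0.1, seed=None):
        self.items = list(items)
        self.epsilon = epsilon                          # share of picks spent exploring
        self.counts = {item: 0 for item in self.items}
        self.values = {item: 0.0 for item in self.items}  # running mean of feedback
        self.rng = random.Random(seed)

    def recommend(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.items)          # explore: try something new
        return max(self.items, key=lambda item: self.values[item])  # exploit

    def update(self, item, reward):
        """Fold one piece of feedback (e.g. 1.0 = clicked, 0.0 = skipped) into the average."""
        self.counts[item] += 1
        n = self.counts[item]
        self.values[item] += (reward - self.values[item]) / n  # incremental mean


if __name__ == "__main__":
    true_click_rates = {"drama": 0.2, "comedy": 0.6, "documentary": 0.1}  # hypothetical
    env = random.Random(0)
    rec = EpsilonGreedyRecommender(true_click_rates, epsilon=0.1, seed=1)
    for _ in range(2000):
        item = rec.recommend()
        clicked = 1.0 if env.random() < true_click_rates[item] else 0.0
        rec.update(item, clicked)
    print({k: round(v, 2) for k, v in rec.values.items()})
```

The small exploration rate is the design trade-off: without it, the recommender could lock onto whichever item happened to get early clicks and never discover that another one is better.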

These core components – perception, reasoning, memory, and learning – don’t operate in isolation. They form an interconnected system, each enhancing the others. An agent’s perceptions inform its reasoning, which in turn updates its memory. This stored knowledge then influences future perceptions and decisions, creating a cycle of continuous improvement.
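The cycle can be made concrete with a toy agent whose four components are plain methods. Everything here is a hypothetical illustration: a thermostat that perceives a temperature reading, reasons about whether to heat or cool, remembers each step along with any user feedback, and learns a new target temperature from that stored feedback.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Hypothetical toy agent wiring perception, reasoning, memory, and learning together."""
    base_target: float = 21.0   # starting preference in degrees Celsius (assumed)
    tolerance: float = 0.5
    memory: list = field(default_factory=list)
    target: float = field(init=False)

    def __post_init__(self):
        self.target = self.base_target

    def perceive(self, reading) -> float:
        # Perception: normalize a raw sensor value.
        return float(reading)

    def reason(self, temp: float) -> str:
        # Reasoning: compare the observation with the current goal.
        if temp < self.target - self.tolerance:
            return "heat"
        if temp > self.target + self.tolerance:
            return "cool"
        return "idle"

    def remember(self, temp: float, action: str, feedback) -> None:
        # Memory: keep a record of what happened and how the user reacted.
        self.memory.append((temp, action, feedback))

    def learn(self) -> None:
        # Learning: recompute the target from all remembered feedback, so each
        # complaint is counted once no matter how many steps follow it.
        cold_complaints = sum(1 for *_, fb in self.memory if fb == "too_cold")
        warm_complaints = sum(1 for *_, fb in self.memory if fb == "too_warm")
        self.target = self.base_target + 0.25 * (cold_complaints - warm_complaints)

    def step(self, reading, feedback=None) -> str:
        temp = self.perceive(reading)
        action = self.reason(temp)
        self.remember(temp, action, feedback)
        self.learn()
        return action
```

Each call to `step` runs the full perceive, reason, remember, learn cycle, so feedback stored during one step changes the decision made in the next.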

We solved this by embedding continuous performance monitoring that tracks not just rankings but actual user engagement metrics. This approach allowed our systems to learn from real-world interactions rather than relying solely on initial training data.

– Craig Flickinger, CEO, SiteRank

As AI technology advances, we can expect these fundamental components to become even more sophisticated, leading to agents capable of tackling increasingly complex real-world challenges.

Benefits of AI Agents in Machine Learning

AI agents are revolutionizing machine learning, delivering game-changing advantages for businesses. These intelligent systems boost productivity, slash costs, and supercharge decision-making. Let’s explore how AI agents are transforming the ML landscape:

Turbocharging Productivity

AI agents tirelessly handle repetitive tasks, freeing up human brainpower for more creative work. They can process vast datasets at lightning speed, accelerating insights and innovation. For example, AI agents in manufacturing can monitor production lines 24/7, flagging issues before they become costly problems.
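Flagging issues on a production line often starts with something as simple as watching a sensor for readings that break from recent behavior. The function below is a minimal sketch of that idea using a rolling z-score; the window size and the 3-standard-deviation threshold are assumed defaults, not industry standards.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=20, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations away
    from the mean of the previous `window` readings (a rolling z-score)."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        # Only score a reading once a full window of history is available.
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(i)
        history.append(value)
    return flagged
```

In this sketch, flagged readings still enter the history, which widens the window's spread for a while afterward; a real monitor might exclude them or use a more robust statistic such as the median.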

Slashing Costs Through Efficiency

By automating complex processes, AI agents can dramatically cut operational expenses. They reduce human error, minimize waste, and optimize resource allocation. In finance, AI-powered trading algorithms can execute thousands of transactions per second, with the aim of improving returns while keeping risk within set limits.

Smarter, Faster Decisions

AI agents analyze real-time data streams, spotting patterns humans might miss. This leads to more informed, timely choices across industries. Retailers use AI agents to adjust pricing and inventory in real time, responding to sudden shifts in demand.

Personalization at Scale

Machine learning AI agents excel at tailoring experiences to individual users. They can analyze customer behavior, preferences, and history to deliver hyper-personalized recommendations. Streaming services like Netflix leverage AI agents to suggest content you’ll love, keeping viewers engaged.


As AI agents continue to evolve, their impact on machine learning will only grow. From healthcare to finance, these intelligent systems are ushering in a new era of efficiency, accuracy, and innovation. How might AI agents transform your industry or daily work?

Implementing AI Agents: Best Practices

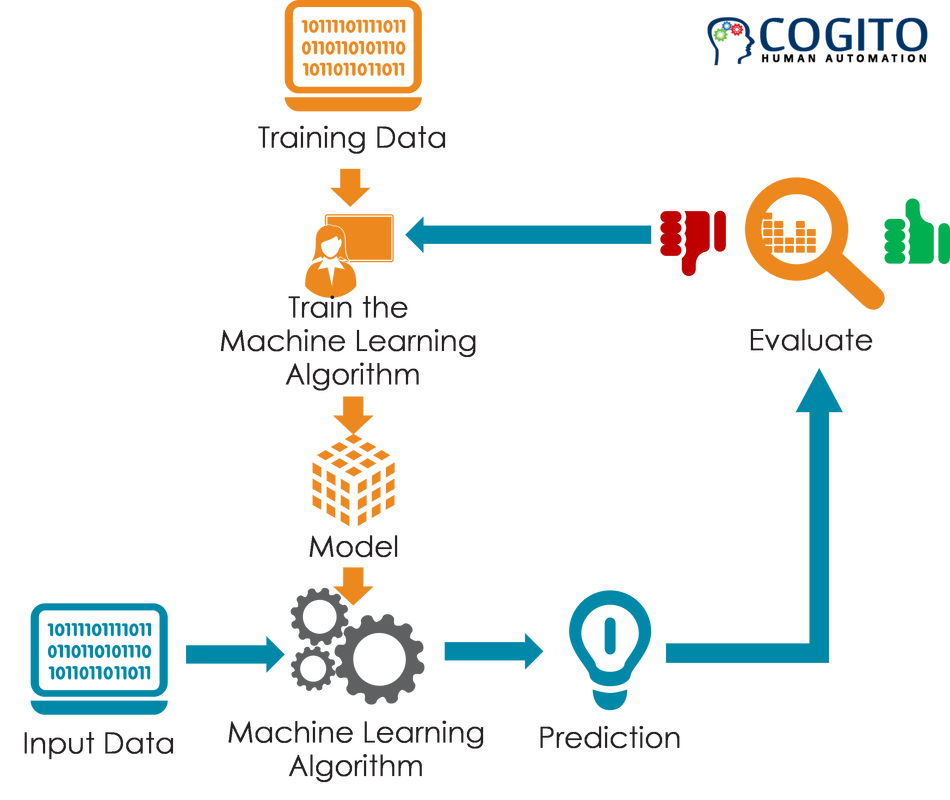

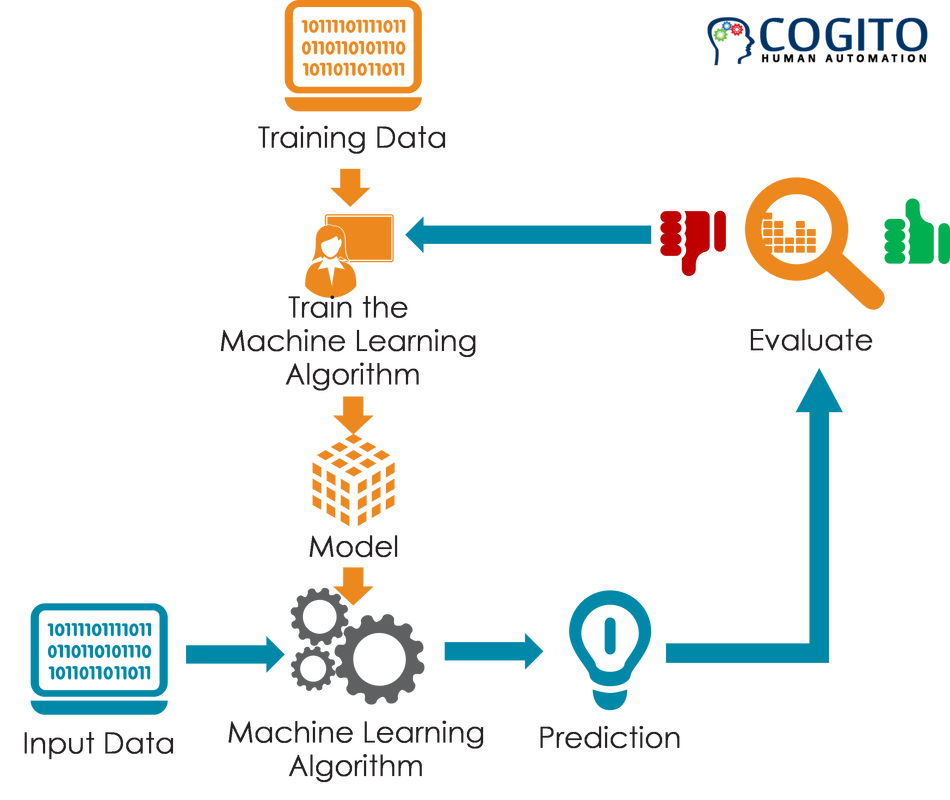

Illustration of AI training components by Cogito. – Via weebly.com

Successfully deploying AI agents requires careful planning and execution. By following these best practices, you can maximize the effectiveness of your AI implementations:

1. Define Clear Objectives

Start by clearly outlining what you want your AI agents to achieve. Specific, measurable goals will guide your entire implementation process.

Set concrete targets like “Reduce customer service response time by 50%” rather than vague goals like “Improve customer service”.
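A goal phrased this way can be checked mechanically. The sketch below assumes response times are logged in minutes and that the median is the agreed metric; that is a hypothetical choice (the mean or 90th percentile would also work), but whichever metric is used should be fixed before the project starts.

```python
from statistics import median


def response_time_reduction(baseline_minutes, current_minutes):
    """Fractional drop in median response time (0.5 means responses are 50% faster)."""
    before, after = median(baseline_minutes), median(current_minutes)
    if before <= 0:
        raise ValueError("baseline median must be positive")
    return (before - after) / before


def target_met(baseline_minutes, current_minutes, target_reduction=0.5):
    """Check the objective 'reduce customer service response time by 50%'."""
    return response_time_reduction(baseline_minutes, current_minutes) >= target_reduction
```

For instance, a baseline median of 12 minutes and a current median of 6 minutes is exactly a 50% reduction, so the target counts as met.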

2. Select Appropriate Tools and Frameworks

Choose AI tools and frameworks that align with your objectives and technical requirements. Consider factors like:

- Scalability needs

- Integration with existing systems

- Team expertise

- Budget constraints

3. Ensure High-Quality Training Data

The performance of your AI agents depends heavily on the quality of their training data. Focus on:

- Data relevance and accuracy

- Sufficient volume and diversity

- Proper labeling and annotation

- Regular data cleansing and updates

Implement rigorous data governance practices to maintain data quality over time.
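Some of these checks are easy to automate before every training run. The function below is a minimal sketch that assumes labeled text records shaped like `{"text": ..., "label": ...}`; the record format, the label set, and the name `audit_training_data` are hypothetical.

```python
from collections import Counter


def audit_training_data(records, allowed_labels):
    """Count common data-quality problems in a list of {"text": ..., "label": ...} dicts."""
    report = Counter()
    seen = set()
    for rec in records:
        text = (rec.get("text") or "").strip()
        label = rec.get("label")
        if not text:
            report["empty_text"] += 1
        if label is None:
            report["missing_label"] += 1
        elif label not in allowed_labels:
            report["unknown_label"] += 1
        # Treat case- and whitespace-only differences as duplicates.
        key = (text.lower(), label)
        if key in seen:
            report["duplicate"] += 1
        seen.add(key)
    report["total"] = len(records)
    return dict(report)
```

A report like this can gate a training pipeline, for example by refusing to retrain when more than a set share of records have missing or unknown labels.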

4. Implement Continuous Monitoring

Set up robust monitoring systems to track your AI agents’ performance. This allows you to:

- Identify and address issues quickly

- Measure progress toward objectives

- Detect unexpected behaviors or biases
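A basic version of such monitoring tracks a quality metric over a sliding window and raises an alert when it drops below an agreed floor. The class below is a sketch under the assumption that each prediction's correct answer eventually becomes known; the window size and the 90% floor are illustrative defaults.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` predictions and alert on drops."""

    def __init__(self, window=100, alert_below=0.9):
        self.outcomes = deque(maxlen=window)  # True = correct, False = wrong
        self.alert_below = alert_below

    def record(self, prediction, actual) -> str:
        self.outcomes.append(prediction == actual)
        return self.status()

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def status(self) -> str:
        # Wait for a full window so a few early mistakes don't trigger false alarms.
        if len(self.outcomes) < self.outcomes.maxlen:
            return "warming_up"
        return "alert" if self.accuracy() < self.alert_below else "ok"
```

The same pattern works for other signals, such as latency, refusal rate, or the share of outputs a human reviewer overrides.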

5. Refine and Iterate

AI implementation is an ongoing process. Continuously refine your agents by:

- Analyzing performance metrics

- Gathering user feedback

- Retraining models with new data

- Adjusting algorithms as needed

I’d strongly recommend getting your hands on actual production data samples early, even if limited, rather than building on synthetic or historical datasets that might not reflect reality.

– Runbo Li, CEO, Magic Hour

6. Prioritize Ethical Considerations

Ensure your AI agents operate ethically and responsibly. This includes:

- Addressing potential biases

- Protecting user privacy

- Ensuring transparency in decision-making

Table: Summary of the Key Benefits of AI Best Practices

By following these best practices, you’ll be well-positioned to implement AI agents that drive real value for your organization. Remember, successful AI implementation is a journey of continuous learning and improvement.


Challenges and Ethical Considerations

Symbolizing AI governance and data privacy processes. – Via freepik.com

As AI agents proliferate across industries, organizations face a minefield of complex challenges. Data privacy tops the list of concerns, with the massive datasets required to train these systems raising red flags about user consent and information security. How can companies build robust AI without compromising individual privacy?

Bias management presents another thorny issue. AI agents trained on historical data risk perpetuating and amplifying existing societal biases. A hiring algorithm that favors male candidates or a credit scoring system that disadvantages minority applicants could have far-reaching consequences. Developers must proactively audit their systems for unfair outcomes.
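One simple starting point for such an audit is to compare selection rates across groups. In U.S. employment contexts, the "four-fifths rule" from the Uniform Guidelines on Employee Selection Procedures treats a group's selection rate below 80% of the highest group's rate as a sign of possible adverse impact. The sketch below computes that ratio; it is a screening heuristic under the assumption that decisions are logged as `(group, was_selected)` pairs, not a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> selection rate per group."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(bool(was_selected))
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no rate gap to measure
    return min(rates.values()) / highest


def fails_four_fifths(decisions, threshold=0.8):
    """True when some group's selection rate is below 80% of the top group's rate."""
    return disparate_impact_ratio(decisions) < threshold
```

For example, if a hiring model selects 50% of applicants from one group but 30% from another, the ratio is 0.6, well under the 0.8 line, and the model deserves a closer look.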

Transparency and accountability also demand attention. The ‘black box’ nature of many AI models makes it difficult to explain their decision-making processes. This opacity can erode trust and make it challenging to assign responsibility when things go wrong. Companies need to prioritize interpretable AI and establish clear chains of accountability.
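Interpretable models make that explanation step straightforward. With a linear scoring model, each feature's contribution is simply its weight times its value, so every decision can be broken down and ranked. The weights and applicant below are hypothetical and for illustration only.

```python
def explain_linear_score(weights, bias, features):
    """Score = bias + sum(weight * value). Also return each feature's contribution,
    largest absolute effect first, so a reviewer can see what drove the decision."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical credit-scoring weights and applicant, for illustration only.
weights = {"income": 0.5, "debt": -1.0, "years_employed": 0.2}
bias = 0.1
applicant = {"income": 4.0, "debt": 1.5, "years_employed": 5.0}
score, ranked = explain_linear_score(weights, bias, applicant)
print(round(score, 2), ranked)
```

More complex models need dedicated attribution methods, but the goal is the same: a per-decision breakdown that a human can check and challenge.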

To address these ethical quandaries, organizations should:

- Implement strict data governance policies, including data minimization and purpose limitation

- Conduct regular bias audits and employ diverse teams in AI development

- Develop explainable AI models and maintain human oversight of critical decisions

- Establish ethics boards to guide AI deployment and address emerging concerns
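Data minimization and purpose limitation can be enforced in code as well as in policy. The sketch below assumes a hypothetical mapping from each declared processing purpose to the fields it may use: anything not on the list is dropped, and an undeclared purpose is rejected outright.

```python
# Hypothetical purpose-to-fields policy; real policies come from governance review.
ALLOWED_FIELDS = {
    "support_chat": {"user_id", "order_id", "message"},
    "churn_model": {"user_id", "plan", "tenure_months"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields the declared purpose is allowed to use."""
    try:
        allowed = ALLOWED_FIELDS[purpose]
    except KeyError:
        # Purpose limitation: refuse processing that no policy covers.
        raise ValueError(f"no declared purpose {purpose!r}; refusing to process") from None
    return {key: value for key, value in record.items() if key in allowed}
```

Applied before data reaches a training pipeline, a filter like this keeps fields such as email addresses out of models that have no need for them.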

While these steps can mitigate risks, they’re not silver bullets. As AI capabilities grow, we’ll need ongoing dialogue between technologists, ethicists, and policymakers to navigate the shifting ethical landscape. The goal isn’t to halt progress, but to ensure AI agents serve humanity’s best interests.

By far the greatest danger of Artificial Intelligence is that people conclude too early that they understand it.

– Eliezer Yudkowsky, AI researcher

By tackling these challenges head-on, organizations can harness the power of AI agents while upholding ethical standards and protecting individual rights. The path forward requires vigilance, adaptability, and a commitment to responsible innovation.

Conclusion: Leveraging AI Agents with SmythOS

AI agents represent a transformative force in the realm of machine learning, offering immense potential to revolutionize how businesses operate and innovate. As we’ve explored, these digital assistants can dramatically enhance efficiency, reduce costs, and unlock new possibilities across various industries. At the forefront of this AI revolution stands SmythOS, a platform that truly democratizes the power of AI agents.

SmythOS sets itself apart with its user-friendly approach to AI agent development. The platform’s visual debugging environment is a game-changer, allowing developers to fine-tune their agents with ease and precision. This feature alone can substantially shorten development time, a boon for businesses racing to implement AI solutions.

Perhaps one of the most compelling aspects of SmythOS is its free runtime environment. This offering empowers developers to run their AI agents on their own infrastructure, providing both flexibility and significant cost savings. In fact, organizations leveraging SmythOS have reported slashing their infrastructure costs by up to 70% compared to traditional development methods. The impact of SmythOS extends beyond mere cost savings; by simplifying the creation and deployment of AI agents, the platform opens up a world of possibilities for businesses of all sizes.

Whether you’re a startup looking to automate customer service or an enterprise seeking to optimize complex data analysis, SmythOS provides the tools to bring your AI visions to life. As we look to the future, it’s clear that AI agents will play an increasingly crucial role in shaping our digital landscape. By embracing platforms like SmythOS, businesses can position themselves at the cutting edge of this technological revolution.

The ability to rapidly develop, deploy, and scale AI agents will be a key differentiator in the competitive landscape of tomorrow.

In conclusion, the synergy between AI agents and SmythOS represents a powerful combination for driving innovation and efficiency. As you consider your organization’s AI strategy, remember that with SmythOS, you’re not just adopting a tool; you’re unlocking a new realm of possibilities. The future of AI is here, and it’s more accessible than ever before.