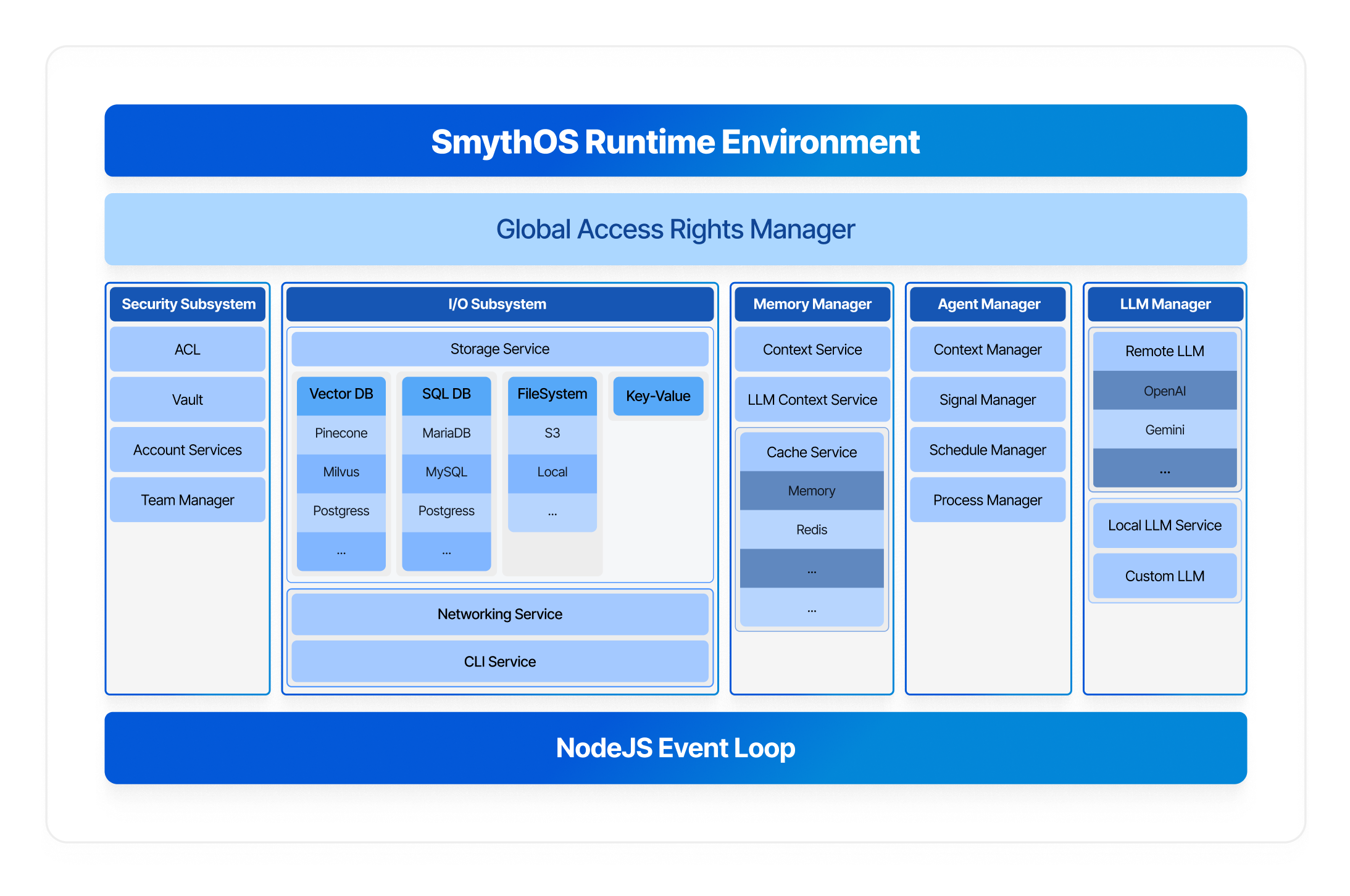

SRE Architecture

The SmythOS Runtime Environment (SRE) is inspired by operating system kernels. It provides a modular, secure foundation for AI agents, with all infrastructure complexity abstracted behind clear interfaces and extensible connectors.

Boot Sequence Steps

When SRE starts, it follows a consistent initialization flow. Each step ensures the environment is secure and ready for agents to run.

- Initialize core services, including storage, cache, vault, and logging

- Register the agent manager and load available components

- Set up HTTP endpoints and routing, if needed

- Connect to LLM providers and vector databases

- Load account and access control configuration

- Signal readiness so agents can execute

```typescript
import { SRE } from 'smyth-runtime';

// Each service is configured by naming a connector and passing its settings.
const sre = SRE.init({
  Storage: { Connector: 'S3', Settings: { bucket: 'my-bucket' } },
  Cache: { Connector: 'Redis', Settings: { url: 'redis://prod-cluster' } },
  Vault: { Connector: 'HashicorpVault', Settings: { url: 'https://vault.company.com' } },
  LLM: { Connector: 'OpenAI', Settings: { apiKey: '...' } },
});

// Wait until the runtime signals readiness before running agents.
await sre.ready();
```
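To make the ordering in the boot list concrete, here is a minimal sketch of a sequencer that runs steps strictly in order and stops at the first failure. It is not SRE's internal implementation: `BootStep`, `runBoot`, and the step labels are hypothetical names chosen for illustration, and the steps are synchronous no-ops, whereas real initialization is likely asynchronous.

```typescript
// Hypothetical boot sequencer. Step names mirror the boot list;
// none of these identifiers come from the SRE API.
type BootStep = { name: string; run: () => void };

// Runs steps strictly in order and stops at the first failure,
// so "ready" is never reached with a half-initialized runtime.
function runBoot(steps: BootStep[]): string[] {
  const completed: string[] = [];
  for (const step of steps) {
    try {
      step.run();
    } catch (err) {
      const reason = err instanceof Error ? err.message : String(err);
      throw new Error(`Boot failed at "${step.name}": ${reason}`);
    }
    completed.push(step.name);
  }
  return completed;
}

const stepNames = [
  'core services',
  'agent manager',
  'http router',
  'llm and vector db',
  'access control',
  'ready',
];

// Each step is a no-op here; a real step would open connections or load config.
const bootOrder = runBoot(stepNames.map((name) => ({ name, run: () => {} })));
console.log(bootOrder.join(' -> '));
```

Failing fast in this way means a later step, such as signalling readiness, cannot run if an earlier dependency did not initialize.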

Subsystem Architecture

SRE is organized into several subsystems, each managing a distinct aspect of the runtime. Here’s how each one works:

IO Subsystem

The IO subsystem is the agent's gateway to external resources. Each service in the table below is exposed through one or more connectors.

| Service | Purpose | Example Connectors |
|---|---|---|
| Storage | File and data persistence | Local, S3, Smyth |
| VectorDB | Vector storage and retrieval | Pinecone, SmythManaged |
| Log | Activity and debug logging | Console, Smyth |
| Router | HTTP API endpoints | Express |
| NKV | Key-value storage | Redis |
| CLI | Command-line interface | CLI |

```typescript
// Example: Using multiple storage connectors
const localStorage = ConnectorService.getConnector('Storage', 'Local');
const s3Storage = ConnectorService.getConnector('Storage', 'S3');
```
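The `getConnector` lookup suggests a registry keyed by service and connector name. The sketch below shows that pattern under stated assumptions: `StorageConnector`, `InMemoryStorage`, and `ConnectorRegistry` are hypothetical stand-ins, not SRE classes, and both connectors are backed by memory so the example runs without external services.

```typescript
// Hypothetical stand-ins: this is not the SRE ConnectorService implementation.
interface StorageConnector {
  write(key: string, data: string): void;
  read(key: string): string | undefined;
}

// In-memory backend used for both "Local" and "S3" so the sketch runs anywhere.
class InMemoryStorage implements StorageConnector {
  private files = new Map<string, string>();

  write(key: string, data: string): void {
    this.files.set(key, data);
  }

  read(key: string): string | undefined {
    return this.files.get(key);
  }
}

class ConnectorRegistry {
  private connectors = new Map<string, StorageConnector>();

  register(service: string, name: string, connector: StorageConnector): void {
    this.connectors.set(`${service}:${name}`, connector);
  }

  get(service: string, name: string): StorageConnector {
    const connector = this.connectors.get(`${service}:${name}`);
    if (!connector) throw new Error(`No ${service} connector named "${name}"`);
    return connector;
  }
}

const registry = new ConnectorRegistry();
registry.register('Storage', 'Local', new InMemoryStorage());
registry.register('Storage', 'S3', new InMemoryStorage());

// Calling code depends only on the StorageConnector interface.
const localStore = registry.get('Storage', 'Local');
const remoteStore = registry.get('Storage', 'S3');
localStore.write('notes.txt', 'draft');
remoteStore.write('notes.txt', 'published');
console.log(localStore.read('notes.txt'), remoteStore.read('notes.txt'));
```

Because callers see only the shared interface, swapping one backend for another is a configuration change, not a code change.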

LLM Manager Subsystem

This subsystem powers your agent’s AI capabilities by providing a unified interface to multiple LLM providers. It supports smart inference routing, usage tracking, and custom model configurations.

Supported providers include OpenAI, Anthropic, Google AI, AWS Bedrock, Groq, Perplexity, and Hugging Face.

```typescript
// Example: Multi-provider LLM usage
const openai = ConnectorService.getLLMConnector('OpenAI');
const claude = ConnectorService.getLLMConnector('Anthropic');
```
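One way to picture a unified interface with inference routing and usage tracking is a small router that maps model names to providers and counts calls. This is only an illustrative sketch: `LLMProvider`, `StubProvider`, `LLMRouter`, and the model names `model-a` and `model-b` are hypothetical, and the stub providers make no network calls.

```typescript
// Hypothetical sketch: provider classes are stubs and make no network calls.
interface LLMProvider {
  readonly id: string;
  complete(prompt: string): string;
}

class StubProvider implements LLMProvider {
  readonly id: string;

  constructor(id: string) {
    this.id = id;
  }

  complete(prompt: string): string {
    return `[${this.id}] reply to: ${prompt}`;
  }
}

class LLMRouter {
  private providers = new Map<string, LLMProvider>();
  private modelRoutes = new Map<string, string>(); // model name -> provider id
  private callCounts = new Map<string, number>(); // provider id -> calls made

  addProvider(provider: LLMProvider, models: string[]): void {
    this.providers.set(provider.id, provider);
    for (const model of models) this.modelRoutes.set(model, provider.id);
  }

  // Picks the provider registered for the model and records the call.
  complete(model: string, prompt: string): string {
    const providerId = this.modelRoutes.get(model);
    const provider = providerId ? this.providers.get(providerId) : undefined;
    if (!provider) throw new Error(`No provider registered for model "${model}"`);
    this.callCounts.set(provider.id, (this.callCounts.get(provider.id) ?? 0) + 1);
    return provider.complete(prompt);
  }

  usage(providerId: string): number {
    return this.callCounts.get(providerId) ?? 0;
  }
}

const llmRouter = new LLMRouter();
llmRouter.addProvider(new StubProvider('OpenAI'), ['model-a']);
llmRouter.addProvider(new StubProvider('Anthropic'), ['model-b']);

console.log(llmRouter.complete('model-a', 'hello'));
console.log(llmRouter.complete('model-b', 'hello'));
```

Keeping the call counter inside the router means usage is tracked in one place regardless of which provider serves the request.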

Security Subsystem

Trust is enforced at every step through secure credential storage, identity and authentication management, granular access control, and managed vault integration. Supported vault connectors include HashiCorp Vault, AWS Secrets Manager, and JSON files.
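As a rough illustration of how a vault connector keeps raw credentials out of agent configuration, the sketch below resolves a secret by scope and key from a JSON-backed vault. Everything here is an assumption made for the example: the `VaultConnector` interface, the `JsonVault` class, the `{ scope: { key: secret } }` layout, and the sample secret value are hypothetical and do not describe SRE's actual vault format.

```typescript
// Hypothetical vault sketch; the JSON layout and method names are assumptions.
interface VaultConnector {
  getSecret(scope: string, key: string): string | undefined;
}

// Backed by a parsed JSON document shaped as { scope: { key: secret } }.
class JsonVault implements VaultConnector {
  private secrets = new Map<string, Map<string, string>>();

  constructor(json: string) {
    const parsed = JSON.parse(json) as Record<string, Record<string, string>>;
    for (const [scope, entries] of Object.entries(parsed)) {
      this.secrets.set(scope, new Map(Object.entries(entries)));
    }
  }

  getSecret(scope: string, key: string): string | undefined {
    return this.secrets.get(scope)?.get(key);
  }
}

// Callers refer to secrets by key; the raw value is resolved only at call time.
function resolveSecret(vault: VaultConnector, scope: string, key: string): string {
  const secret = vault.getSecret(scope, key);
  if (secret === undefined) {
    throw new Error(`Secret "${key}" not found in scope "${scope}"`);
  }
  return secret;
}

const jsonVault = new JsonVault('{"team-a": {"openai": "sk-example"}}');
const resolvedKey = resolveSecret(jsonVault, 'team-a', 'openai');
console.log(`resolved a secret of length ${resolvedKey.length}`);
```

Scoping lookups this way means a request made under one scope cannot read a secret stored under another, even when both use the same key name.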

Memory Manager Subsystem

This subsystem tracks agent state and manages caching and memory resources.

- Provides multi-tier caching (RAM, Redis, S3, Local)

- Maintains runtime context and agent state

- Stores conversation history and LLM context

- Monitors memory usage and optimizes resources
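Multi-tier caching is commonly implemented as a read-through chain: check the fastest tier first, fall back to slower tiers, and copy hits upward. The sketch below shows that general technique, not SRE's cache. `MapTier` and `TieredCache` are hypothetical names, and both tiers are plain in-memory maps standing in for RAM and Redis.

```typescript
// Hypothetical tiers backed by Maps; real tiers would be RAM, Redis, S3, or local disk.
class MapTier {
  readonly label: string;
  private entries = new Map<string, string>();

  constructor(label: string) {
    this.label = label;
  }

  get(key: string): string | undefined {
    return this.entries.get(key);
  }

  set(key: string, value: string): void {
    this.entries.set(key, value);
  }
}

class TieredCache {
  private tiers: MapTier[]; // ordered fastest first

  constructor(tiers: MapTier[]) {
    this.tiers = tiers;
  }

  // Returns the value and the tier that served it, promoting hits to faster tiers.
  get(key: string): { value: string; servedBy: string } | undefined {
    for (let i = 0; i < this.tiers.length; i++) {
      const value = this.tiers[i].get(key);
      if (value !== undefined) {
        for (let j = 0; j < i; j++) this.tiers[j].set(key, value);
        return { value, servedBy: this.tiers[i].label };
      }
    }
    return undefined;
  }

  // Writes through to every tier so all of them stay consistent.
  set(key: string, value: string): void {
    for (const tier of this.tiers) tier.set(key, value);
  }
}

const ramTier = new MapTier('RAM');
const redisTier = new MapTier('Redis');
const tieredCache = new TieredCache([ramTier, redisTier]);

redisTier.set('session:42', 'context'); // value exists only in the slower tier
const firstRead = tieredCache.get('session:42');
const secondRead = tieredCache.get('session:42');
console.log(firstRead?.servedBy, secondRead?.servedBy);
```

The first read is served by the slower tier and promoted, so the second read is served from the faster one.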

Agent Manager Subsystem

This subsystem is the heart of agent execution.

- Manages agent process lifecycles

- Provides real-time monitoring and logging

- Supports async operations and component workflows

- Enables visual programming with over 40 components

- Streams updates to clients in real time
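Lifecycle management and real-time updates can be pictured as a small state machine that notifies listeners on every transition. The sketch below is an assumption-laden illustration: the state names, `AgentProcess`, and `onUpdate` are hypothetical and not taken from the SRE API, and listeners are called synchronously instead of streaming over a network connection.

```typescript
// Hypothetical lifecycle model; state names are illustrative, not SRE's.
type AgentState = 'created' | 'running' | 'completed' | 'failed';

const allowedTransitions: Record<AgentState, AgentState[]> = {
  created: ['running'],
  running: ['completed', 'failed'],
  completed: [],
  failed: [],
};

type UpdateListener = (agentId: string, state: AgentState) => void;

class AgentProcess {
  readonly agentId: string;
  private state: AgentState = 'created';
  private listeners: UpdateListener[] = [];

  constructor(agentId: string) {
    this.agentId = agentId;
  }

  // Registers a client that wants to be told about every state change.
  onUpdate(listener: UpdateListener): void {
    this.listeners.push(listener);
  }

  // Rejects illegal moves, then notifies all listeners of the new state.
  transition(next: AgentState): void {
    if (!allowedTransitions[this.state].includes(next)) {
      throw new Error(`Illegal transition ${this.state} -> ${next}`);
    }
    this.state = next;
    for (const listener of this.listeners) listener(this.agentId, next);
  }

  current(): AgentState {
    return this.state;
  }
}

const streamedUpdates: string[] = [];
const agentProcess = new AgentProcess('agent-1');
agentProcess.onUpdate((id, state) => {
  streamedUpdates.push(`${id}:${state}`);
});
agentProcess.transition('running');
agentProcess.transition('completed');
console.log(streamedUpdates);
```

Encoding the legal transitions in a table keeps terminal states terminal: a completed or failed agent cannot be moved back to running by accident.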

Security by Design

SRE is secure by default. Its Candidate and ACL model ensures that all access is authorized and logged. Secrets are stored in secure vaults, and access control is enforced at every layer.
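A deny-by-default check with an audit trail is one way to read "authorized and logged". The following sketch assumes that a candidate is an identity (user, agent, or team) requesting access and that an ACL maps candidates to access levels. The types `Candidate`, `ResourceAcl`, `AuditEntry`, and the `authorize` function are hypothetical illustrations, not SRE's actual security API.

```typescript
// Hypothetical Candidate/ACL sketch; type and function names are assumptions.
type CandidateRole = 'user' | 'agent' | 'team';
type AccessLevel = 'read' | 'write';

interface Candidate {
  role: CandidateRole;
  id: string;
}

interface AuditEntry {
  candidate: string;
  resource: string;
  level: AccessLevel;
  allowed: boolean;
}

class ResourceAcl {
  private grants = new Map<string, AccessLevel[]>();

  private static keyFor(candidate: Candidate): string {
    return `${candidate.role}:${candidate.id}`;
  }

  grant(candidate: Candidate, level: AccessLevel): void {
    const key = ResourceAcl.keyFor(candidate);
    const levels = this.grants.get(key) ?? [];
    if (!levels.includes(level)) levels.push(level);
    this.grants.set(key, levels);
  }

  // Anything not explicitly granted is denied.
  allows(candidate: Candidate, level: AccessLevel): boolean {
    return (this.grants.get(ResourceAcl.keyFor(candidate)) ?? []).includes(level);
  }
}

const auditLog: AuditEntry[] = [];

// Records every decision, allowed or not, before returning it.
function authorize(
  acl: ResourceAcl,
  candidate: Candidate,
  resource: string,
  level: AccessLevel,
): boolean {
  const allowed = acl.allows(candidate, level);
  auditLog.push({ candidate: `${candidate.role}:${candidate.id}`, resource, level, allowed });
  return allowed;
}

const reportAcl = new ResourceAcl();
const writerAgent: Candidate = { role: 'agent', id: 'writer' };
const guestUser: Candidate = { role: 'user', id: 'guest' };
reportAcl.grant(writerAgent, 'read');

const agentCanRead = authorize(reportAcl, writerAgent, 'report.pdf', 'read');
const agentCanWrite = authorize(reportAcl, writerAgent, 'report.pdf', 'write');
const guestCanRead = authorize(reportAcl, guestUser, 'report.pdf', 'read');
console.log(agentCanRead, agentCanWrite, guestCanRead, auditLog.length);
```

Logging denied requests alongside allowed ones gives the audit trail the full picture of who attempted what.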

For security details, visit Security Model.