It’s raining bananas, but the wildly creative kind.

Google has launched its state-of-the-art image model, Gemini 2.5 Flash Image, aka nano-banana.

This new model allows developers to edit and blend images using plain language. It’s fast, consistent, and focused on clean visual edits like background blur, posture correction, and object removal.

What’s the deal with nano-banana, and why is it all over the internet? Here’s what developers need to know.

Capabilities That Matter

Gemini 2.5 Flash Image is a tool built for controlled visual editing with minimal guesswork. Image generation has become the norm in the AI industry; what sets this model apart is its instruction-following.

Early users have also praised Flash Image for preserving key visual elements and integrating smoothly into structured workflows.

But what are the features that hit the mark?

Edit Images with Precision Using Natural Language

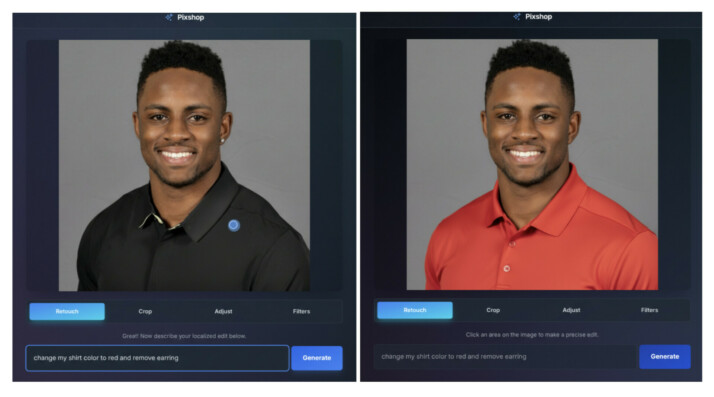

You don’t need bounding boxes or masks. Simply describe what you want, and the model makes targeted changes without touching the rest of the image.

This includes tasks like:

- Blurring backgrounds while keeping the subject in focus

- Removing stains or shadows from clothing or surfaces

- Adjusting posture, lighting, or facial angle

- Changing specific object colors

Developers testing Flash Image through OpenRouter and fal.ai have consistently noted that it responds to edits with minimal overcorrection.

For example, when asked to remove a shadow, it does so without deleting surrounding features. The output is clean and localized.

That makes it reliable for production use cases where consistency matters and manual retouching downstream should be unnecessary, such as marketing visuals, product catalogs, or user-generated content pipelines.

Supports Multi-image Input and Merges Them Coherently

Flash Image allows you to input several reference images. It then blends them into a single, coherent result.

What matters is that the output isn’t abstract or surreal. Instead, it aligns with the inputs and keeps the structural layout intact.

This is useful for cases where you’re combining product angles, sketch references, or stylistic targets.

For example, you could merge a line drawing, a real object photo, and a brand style sample into one visual output, and expect the layout and key visual details to survive the merge.
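If you’re calling the model through an OpenAI-compatible API such as OpenRouter’s, a multi-image request is just one user message that carries the instruction plus several image parts. The sketch below assumes the standard chat-completions message shape with base64 data URLs and the model slug `google/gemini-2.5-flash-image-preview`; both are assumptions to check against your provider’s docs, and the helper names are hypothetical.

```python
import base64
import json

# Assumed model slug; confirm the exact identifier on your provider's model page.
MODEL = "google/gemini-2.5-flash-image-preview"


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_merge_payload(images: list[tuple[bytes, str]], instruction: str) -> dict:
    """Hypothetical helper: one text instruction followed by several
    reference images, all in a single user turn."""
    content = [{"type": "text", "text": instruction}]
    for image_bytes, mime_type in images:
        content.append(
            {"type": "image_url", "image_url": {"url": to_data_url(image_bytes, mime_type)}}
        )
    return {"model": MODEL, "messages": [{"role": "user", "content": content}]}


if __name__ == "__main__":
    # Placeholder bytes stand in for real files read with open(path, "rb").read().
    refs = [
        (b"<line drawing bytes>", "image/png"),
        (b"<product photo bytes>", "image/jpeg"),
        (b"<brand style sample bytes>", "image/png"),
    ]
    payload = build_merge_payload(
        refs,
        "Combine these: keep the layout of the line drawing, the object from the "
        "photo, and the color palette of the style sample.",
    )
    print(json.dumps(payload)[:120])
```

Keeping the instruction first and the references after it is a design choice, not a requirement; some providers may also need extra parameters to request image output, so check their docs.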

Keeps the Subject Recognizable Across Edits

Where many models falter, especially during repeated edits, Flash Image retains subject identity. That includes face shape, skin tone, clothing textures, and spatial orientation.

If you edit a product image five different ways, the object remains visually consistent across all versions. For developers working on e-commerce, AI-powered photo tools, or avatar systems, that kind of visual memory is critical.

Understands Real-world Context

The model can interpret vague but grounded prompts like “make it look like a passport photo,” “reframe like a movie poster,” or “place on a white background.” It also works well with diagrams, sketches, or layout references.

This is possible because the model inherits Gemini 2.5’s combined vision–language architecture, enabling it to connect visual structure with textual intent. That’s why it delivers grounded and task-aware output.

Cost, Speed, and How It Fits Into Your Workflow

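Flash Image is priced at $30 per million output tokens for generated images, while other input and output modalities follow standard Gemini 2.5 Flash pricing. Each generated image counts as roughly 1,290 output tokens, which comes out to about $0.039 per image (1,290 × $30 / 1,000,000 ≈ $0.0387).

There are no separate surcharges for edit complexity or number of instructions. Your prompt and input images are billed as ordinary input tokens, but that cost is small next to the per-image output charge, so whether you’re removing a shadow or merging multiple references, the cost per image stays roughly consistent.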
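To sanity-check the math for your own volumes, here’s a small estimator. The two constants come from the figures quoted above. The optional input-token rate is left as a parameter for you to fill in from Google’s pricing page, and `image_cost` is a hypothetical helper, not part of any SDK.

```python
# Figures quoted in this article; verify against Google's pricing page before budgeting.
OUTPUT_PRICE_PER_MILLION = 30.00  # USD per 1M output tokens for image output
TOKENS_PER_IMAGE = 1_290          # approximate output tokens per generated image


def image_cost(num_images: int = 1,
               input_tokens_per_request: int = 0,
               input_price_per_million: float = 0.0) -> float:
    """Estimate USD cost for num_images edits.

    Input cost is optional because its rate comes from a separate price
    list; pass your own figure if you want it included.
    """
    output_cost = num_images * TOKENS_PER_IMAGE * OUTPUT_PRICE_PER_MILLION / 1_000_000
    input_cost = num_images * input_tokens_per_request * input_price_per_million / 1_000_000
    return output_cost + input_cost


if __name__ == "__main__":
    print(f"1 image:       ${image_cost():.4f}")         # $0.0387
    print(f"10,000 images: ${image_cost(10_000):,.2f}")  # $387.00
```

At these rates, a catalog of 10,000 product edits lands in the high hundreds of dollars, which is the kind of number worth knowing before wiring the model into a batch pipeline.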

Fast Enough for Production Use

On platforms like OpenRouter and fal.ai, Flash Image returns results in under two seconds for most edits. That includes both single-shot transformations and more complex chained instructions like:

“Blur the background, brighten the lighting, and adjust the angle of the face.”

This speed puts it in the same class as other Gemini 2.5 Flash-tier models. It’s usable in real-time tools, batch processing flows, or on-demand UIs without stalling your pipeline.
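Latency varies with provider, region, and load, so it’s worth timing the model inside your own pipeline before committing to a real-time UI. Here’s a minimal harness, assuming a hypothetical `edit_fn(image, prompt)` callable that wraps whichever client you use. The stub in the example simply sleeps in place of a real API call.

```python
import math
import statistics
import time
from typing import Callable


def measure_latency(edit_fn: Callable[[bytes, str], bytes],
                    image: bytes,
                    prompt: str,
                    runs: int = 10) -> dict:
    """Call edit_fn `runs` times and report wall-clock latency in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        edit_fn(image, prompt)
        samples.append(time.perf_counter() - start)
    samples.sort()
    # Nearest-rank 95th percentile: the ceil(0.95 * n)-th smallest sample.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "runs": runs,
        "median_s": statistics.median(samples),
        "p95_s": samples[p95_index],
        "max_s": samples[-1],
    }


if __name__ == "__main__":
    def fake_edit(image: bytes, prompt: str) -> bytes:
        time.sleep(0.01)  # stand-in for a real API call
        return image

    chained = "Blur the background, brighten the lighting, and adjust the angle of the face."
    print(measure_latency(fake_edit, b"<image bytes>", chained, runs=5))
```

Tracking the 95th percentile alongside the median matters for interactive tools, since occasional slow responses are what users notice.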

Works Inside Standard Dev Tools

In Google AI Studio, nano-banana can be used inside the new Build Mode, which supports:

- Prompt templates

- Edit history and replays

- One-click API and GitHub deployment

This makes it easy to test edits, store versions, and hand them off to production workflows. Whether you’re building internal design tools or public-facing content systems, the model integrates without friction.

If you’re using third-party platforms like OpenRouter or fal.ai, the experience is equally direct: upload an image, send a prompt, and receive the edited output, with nothing more to configure.
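For a sense of how little there is to wire up, here’s a sketch that packages one image and one instruction as a raw HTTP request using only the Python standard library. It assumes OpenRouter’s OpenAI-compatible `chat/completions` endpoint, the model slug `google/gemini-2.5-flash-image-preview`, and an `OPENROUTER_API_KEY` environment variable. `build_edit_request` and `product.png` are hypothetical. Where the edited image shows up in the response varies by provider, so the example just prints the top-level keys.

```python
import base64
import json
import os
import urllib.request

# Assumptions: OpenRouter's OpenAI-compatible endpoint and this model slug.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL = "google/gemini-2.5-flash-image-preview"


def build_edit_request(image_bytes: bytes, prompt: str, api_key: str,
                       mime_type: str = "image/png") -> urllib.request.Request:
    """Package one image plus one edit instruction as a POST request (not sent)."""
    data_url = f"data:{mime_type};base64," + base64.b64encode(image_bytes).decode("ascii")
    body = {
        "model": MODEL,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("OPENROUTER_API_KEY")
    if key:
        with open("product.png", "rb") as f:  # hypothetical input file
            req = build_edit_request(f.read(), "Remove the shadow under the shoe.", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        # The location of the edited image in the reply is provider-specific.
        print(list(reply.keys()))
```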

Where Gemini Flash Image Falls Short (and Why)

Gemini 2.5 Flash Image isn’t built for stylization or imagination. It’s built for structure. That makes it reliable for edits, but limited for anything that requires creative variation.

Poor Performance on Style Transfer

The model struggles with requests like:

- “Make this look like a watercolor painting.”

- “Apply Van Gogh style.”

- “Turn this into a Pixar-style scene.”

In these cases, the output often ignores the style prompt entirely or makes minor changes that don’t match the expected result.

According to user feedback, Gemini 2.0 Flash Image, an earlier model, handles stylization more reliably in some scenarios.

This makes Flash Image a weak choice for creative workflows where look and feel matter more than fidelity.

Doesn’t Generalize Well Outside Realism

Flash Image is tuned for photorealism and structural edits. While that makes it ideal for e-commerce, brand assets, or structured content, it limits use in entertainment, concept design, or illustration workflows.

There’s no mode switch or configuration to improve this. If you need expressive variation or texture-based creativity, this model likely won’t meet the bar.

What Google Doesn’t Highlight

These limitations aren’t mentioned in the official blog post or documentation. They’ve surfaced through developer tests, benchmark platforms like LM Arena, and community discussions on Reddit, where users are running side-by-side comparisons with other models.

In summary, for edits that need accuracy and control, Flash Image is a strong choice. For artistic direction or creative reinvention, it’s not.

Model Comparison: Gemini vs GPT-4o, Qwen, and Flux

As mentioned earlier, Gemini 2.5 Flash Image is not meant for abstract creation or artistic style transfer. Its strengths lie in dependable editing, identity preservation, and practical use.

With that in mind, here’s how it stacks up against GPT-4o, Qwen Image, and Flux Kontext.

| Feature | Gemini 2.5 Flash Image | GPT-4o | Qwen Image | Flux Kontext |
|---|---|---|---|---|
| Prompt Editing | Literal and controlled | Often loosely interpreted | Varies | Loosely guided |
| Style Consistency | Maintains subject identity | Inconsistent | Often changes across outputs | Weak |
| Style Transfer | Weak | Mixed | Strong | Strong |
| Layout Accuracy | High | Moderate | Moderate | Low |
| Multi-Image Support | Yes | No | No | Not documented |
| Scene Control | High | Moderate | Moderate | Low |
| Character Retention | Strong | Variable | Weak | Weak |
| Business Use Readiness | Yes | Limited | Limited | No |
| Branding/Visual Fidelity | Preserved | Not consistent | Not preserved | Often distorted |
| Speed | Fast | Slower for image tasks | Slower | Moderate |
| Cost per Image | ~$0.039 | Higher | Varies | Unknown |
| Use Case Fit | Editing, branded assets, structured edits | Multimodal reasoning, image Q&A | Visual storytelling, stylization | Experimental design, creative exploration |

Summary:

Nano-banana outperforms the others in identity retention, prompt accuracy, and brand safety, but it trades off creative flexibility.

GPT-4o is more versatile for reasoning over images. Qwen and Flux are better choices for generative art and exploratory design, but less predictable in practical workflows.

Who This Model Is For and Where It Doesn’t Fit

While it offers precise editing control, Gemini 2.5 Flash Image isn’t trying to be the most creative model. It’s trying to be the most reliable one, especially for edits that matter in workflows that can’t tolerate surprises.

It handles real-world editing tasks with clarity. Nano-banana removes background clutter, blends inputs, preserves identity across prompts, and delivers consistent results. It’s fast, priced simply, and built to integrate into tools developers already use.

The tradeoff is clear: you lose out on stylistic range and visual imagination. But for teams building editing pipelines, asset generators, or branded content tools, Gemini 2.5 Flash Image is a practical choice. It does exactly what you ask, and nothing you didn’t.

That kind of precision is often more useful than flashy output. Which one matters more depends on what you’re building.