And Why That Needs to Change

Here’s a strange contradiction at the heart of AI development right now. You can download Meta’s Llama 4, Mistral’s latest, or DeepSeek R1 in minutes. Open weights, largely permissive licenses, and the freedom to run them anywhere. The foundation models powering the AI revolution have never been more accessible.

But try to actually run an AI agent in production, and that’s where things get complicated.

The models are open. The infrastructure to make them useful is mostly closed. And that gap is quietly costing enterprises billions of dollars.

The 95% Graveyard

According to MIT’s 2025 State of AI in Business report, 95% of generative AI pilots fail to deliver measurable business impact. Not because the models aren’t capable. They clearly are. The problem lies somewhere else entirely.

IDC research paints a similar picture. For every 33 AI proofs of concept a company launches, only four make it to production. That’s an 88% failure rate before these projects ever see real users.

What’s going wrong?

The culprit isn’t the “brain” of these systems. Today’s LLMs reason, plan, and generate responses with remarkable sophistication. The breakdown happens in the nervous system: the orchestration layers, security controls, memory management, and deployment infrastructure that agents need to function reliably at scale.

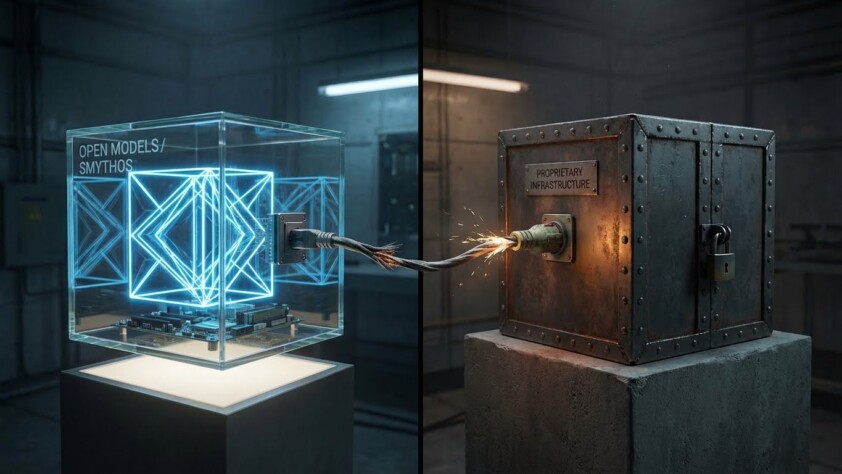

Open Models, Closed Plumbing

The open-source movement has transformed foundation models. Hugging Face hosts well over a million models that anyone can download. Companies like Meta, Mistral, and Alibaba release state-of-the-art weights under permissive licenses. A Stack Overflow survey found 82% of developers now have significant experience with open-source technology.

But when you look at agent infrastructure, the picture shifts dramatically.

Most agent runtimes remain proprietary. Security controls come from closed vendors. Deployment pipelines lock you into specific clouds. The tools for orchestration, memory, credential management, and observability are fragmented across dozens of services, each with its own contracts and constraints.

This creates a peculiar situation. You have the engine. What you lack is the road.

Organizations often obsess over model selection while overlooking the infrastructure that determines whether those models can actually perform useful work.

What Production Actually Requires

Running an AI agent in a demo is easy. Running one that handles real customer data, integrates with enterprise systems, maintains audit trails, and recovers gracefully from failures requires infrastructure that most teams have to build from scratch.

Consider what a production agent needs:

- Stateful execution. Agents don’t just respond to prompts. They maintain context across sessions, remember previous interactions, and adapt based on accumulated knowledge. Without durable state management, every conversation starts from zero.

- Security from the ground up. API keys scattered through code. Credentials exposed in logs. Agents with access to systems they shouldn’t touch. These aren’t hypothetical risks. A June 2025 study found that 69% of organizations cite AI-powered data leaks as their top security concern, yet nearly half have no AI-specific security controls in place.

- Modular connectors. Enterprise systems don’t speak a common language. Agents must simultaneously interface with CRMs, databases, legacy APIs, and cloud services. Building custom integrations for each becomes an engineering tax that never ends.

- Observability. When an agent makes a questionable decision at 3 AM, you need to understand why. Step-level debugging, audit trails, and monitoring aren’t optional. They’re what separate demos from deployable systems.
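To make the stateful-execution and observability requirements a little more concrete, here is a minimal Python sketch. It assumes a single process writing JSON files to local disk; `SessionStore`, `audit_step`, the secret-key list, and the file layout are hypothetical names chosen for illustration, not part of any particular framework. Session state is written to a temporary file and atomically renamed into place, so a crash mid-write cannot leave a corrupt state file, and each agent step is appended to a JSON-lines audit log with known secret fields masked, so credentials do not end up in logs.

```python
import json
import os
import tempfile
import time
from pathlib import Path

# Hypothetical helpers for illustration only; not any framework's API.

class SessionStore:
    """Durable per-session agent state, stored as one JSON file per session."""

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, session_id: str) -> Path:
        # Assumes session IDs are trusted, filename-safe strings.
        return self.root / f"{session_id}.json"

    def load(self, session_id: str) -> dict:
        path = self._path(session_id)
        if not path.exists():
            return {"history": []}  # only a brand-new session starts from zero
        return json.loads(path.read_text())

    def save(self, session_id: str, state: dict) -> None:
        # Write to a temp file in the same directory, then atomically rename it
        # over the real file so readers never see a half-written state.
        fd, tmp = tempfile.mkstemp(dir=self.root, suffix=".tmp")
        try:
            with os.fdopen(fd, "w") as f:
                json.dump(state, f)
            os.replace(tmp, self._path(session_id))
        except BaseException:
            os.unlink(tmp)  # clean up the temp file if anything failed
            raise


# Assumed list of field names whose values must never be logged.
SECRET_KEYS = {"api_key", "password", "token"}


def audit_step(log_path: Path, session_id: str, step: str, detail: dict) -> dict:
    """Append one step-level audit record as a JSON line, masking top-level secrets."""
    safe = {k: ("***" if k in SECRET_KEYS else v) for k, v in detail.items()}
    record = {"ts": time.time(), "session": session_id, "step": step, "detail": safe}
    with log_path.open("a") as f:
        f.write(json.dumps(record) + "\n")
    return record


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        store = SessionStore(Path(d) / "sessions")
        state = store.load("alice")
        state["history"].append("user asked about invoice 42")
        store.save("alice", state)
        audit_step(Path(d) / "audit.jsonl", "alice", "tool_call",
                   {"tool": "crm_lookup", "api_key": "sk-test"})
        # A fresh store (as after a process restart) still sees the history.
        print(SessionStore(Path(d) / "sessions").load("alice")["history"])
        # prints ['user asked about invoice 42']
```

A production system would more likely back this with a database or object store and pull credentials from a real secrets manager; the sketch only shows the two properties the list asks for: state that survives a restart, and a per-step record you can inspect after the fact.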

Most frameworks address one or two of these requirements. Few handle all of them. And almost none do so while remaining open and portable.

The Vendor Lock-In Problem

Closed infrastructure creates dependencies that compound over time. Today’s integration becomes tomorrow’s migration nightmare. WorkOS research on enterprise AI failures found that organizations launching pilots in isolated sandboxes often fail to design clear paths to production. The technology works in isolation, but integration challenges pile up unaddressed until executives ask for a go-live date.

It’s not just about switching costs. Closed systems limit your ability to adapt as requirements evolve. When your orchestration layer is proprietary, every architectural decision must be routed through someone else’s roadmap.

This matters especially in regulated industries. Finance, healthcare, and government organizations must audit their AI systems to demonstrate regulatory compliance. Black-box infrastructure makes that nearly impossible.

What an Open Agent Stack Looks Like

The solution isn’t to reject infrastructure entirely. Agents need runtimes, security, and orchestration. The question is whether those components remain under your control.

An open agent stack provides:

- Portability. Run the same agent locally, on your cloud, at the edge, or in a managed environment. No rewriting required when deployment needs change.

- Transparency. When something goes wrong, you can inspect exactly what happened. Source code is available, audit logs are comprehensive, and there are no proprietary black boxes hiding decisions.

- Flexibility. Swap components without rebuilding the system. Use one LLM provider today, switch tomorrow. Connect to Redis or your own caching layer. Select the vector database that best suits your needs.

- Security by default. Credential vaults that encrypt secrets at rest. Sandboxed components that prevent data leakage. Permission systems that enforce zero-trust principles without manual configuration.
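The flexibility point largely comes down to coding against interfaces rather than vendors. The Python sketch below assumes hypothetical `ChatModel` and `Cache` interfaces, defined with `typing.Protocol`; `EchoModel` and `InMemoryCache` are illustrative stand-ins, where a real deployment would plug in adapters around an actual LLM provider's SDK or a Redis client. None of these names come from the article or from any specific product.

```python
from typing import Optional, Protocol


class ChatModel(Protocol):
    """Anything that turns a prompt into text: a hosted API, a local model, etc."""
    def complete(self, prompt: str) -> str: ...


class Cache(Protocol):
    """Minimal key-value cache interface."""
    def get(self, key: str) -> Optional[str]: ...
    def set(self, key: str, value: str) -> None: ...


class EchoModel:
    """Stand-in provider; a real adapter would call an LLM API here."""
    def __init__(self, name: str) -> None:
        self.name = name
        self.calls = 0

    def complete(self, prompt: str) -> str:
        self.calls += 1
        return f"[{self.name}] {prompt}"


class InMemoryCache:
    """Stand-in cache; an adapter wrapping a Redis client could replace it."""
    def __init__(self) -> None:
        self._data: dict = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


class Agent:
    """Depends only on the interfaces, so components are chosen at construction."""
    def __init__(self, model: ChatModel, cache: Cache) -> None:
        self.model = model
        self.cache = cache

    def answer(self, prompt: str) -> str:
        cached = self.cache.get(prompt)
        if cached is not None:
            return cached  # skip the model call on a cache hit
        result = self.model.complete(prompt)
        self.cache.set(prompt, result)
        return result


if __name__ == "__main__":
    agent_a = Agent(EchoModel("provider-a"), InMemoryCache())
    print(agent_a.answer("summarize Q3"))  # prints [provider-a] summarize Q3
    # Switching providers changes a constructor argument, not the Agent code.
    agent_b = Agent(EchoModel("provider-b"), InMemoryCache())
    print(agent_b.answer("summarize Q3"))  # prints [provider-b] summarize Q3
```

Because `Agent` never imports a vendor SDK directly, replacing the model or the caching layer is a configuration change rather than a rebuild, which is the kind of swap the Flexibility item describes.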

These aren’t aspirational features. They’re table stakes for enterprise deployment. However, achieving them with proprietary tools means relying on vendors to prioritize your needs indefinitely.

Why Open Source Matters for the Agent Economy

Some predict an agent-to-agent economy where AI systems transact with each other at massive scale: trillions of daily interactions, with agents booking, purchasing, analyzing, and executing tasks on behalf of humans and other agents.

If that future materializes on closed infrastructure, it concentrates power among those who control the rails. The internet succeeded because open standards, such as HTTP and TCP/IP, allowed anyone to participate. The same principle should apply to AI agents.

Open protocols, such as the Agent2Agent (A2A) protocol and the Model Context Protocol (MCP), promote interoperability. But protocols alone aren’t enough. The runtimes that execute agents, the security that governs them, and the infrastructure that deploys them also need to be open.

This isn’t idealism. It’s practical engineering. Closed systems eventually encounter problems their vendors can’t or won’t solve. Open systems let you solve them yourself.

Why This Matters

Frameworks like LangChain and LangGraph are excellent for prototypes, and workflow tools such as Zapier and n8n make automation accessible. But none of these are true runtimes. None provides the kernel-level guarantees enterprises demand.

SmythOS SRE does. By embedding orchestration to remove fragility, security to enforce governance, memory to guarantee durability, and interoperability to avoid lock-in, SRE delivers the missing backbone enterprises have been waiting for. Released under the MIT license and inspired by the elegance of Linux architecture, it’s designed for a future where a trillion agents create a new global economy.

That’s how we move beyond the 95% graveyard and into production at scale. And when that shift takes hold, today’s demo-to-production struggles will feel as outdated as dial-up modems.

Now it’s your turn. Don’t let your agents stall in proof-of-concept limbo. Give them the durability, governance, and portability they need with SmythOS SRE. Whether you’re an executive ready to de-risk pilots, a developer looking for production-grade architecture, or a pioneer eager to help shape the Internet of Agents, the path forward is clear. Schedule a demo today! Star us on GitHub to support open agent infrastructure. Start building with SmythOS and join the community moving from fragile demos to lasting impact. Our team is standing by; let us know how we can help with your agentic AI needs.