Picture this: It’s 3 AM, and you urgently need to check your bank balance. Instead of waiting for business hours, you simply message your bank’s chatbot and get an instant response. Welcome to the era of chatbots, where customer service never sleeps.

Chatbots are transforming how businesses engage with their customers. These AI-powered digital assistants are not just answering queries; they’re reshaping entire industries, from retail and healthcare to finance and travel.

What exactly makes chatbots so impactful? How are companies leveraging this technology to stay ahead? In this article, we’ll dive into real-world chatbot examples that are setting new standards in customer interactions. We will explore their functionalities, uncover the benefits they bring to both businesses and consumers, and address the challenges they face.

From Siri’s witty responses to Domino’s pizza-ordering bot, we’ll see how chatbots are becoming the frontline of customer engagement. We’ll also look into the future, examining how emerging technologies like natural language processing are pushing the boundaries of what chatbots can do.

Whether you’re a business owner looking to streamline operations or a tech enthusiast curious about AI’s impact on customer service, this article is for you. We’re about to embark on a journey through the fascinating world of chatbots—where convenience meets innovation, and the customer is always just a message away.

Main Takeaways:

- Chatbots are transforming customer interactions across industries

- We’ll explore real-life examples of chatbots in action

- The article will cover chatbot functionalities, benefits, and challenges

- We’ll examine how chatbots are shaping the future of customer engagement

Customer Service Chatbot Examples

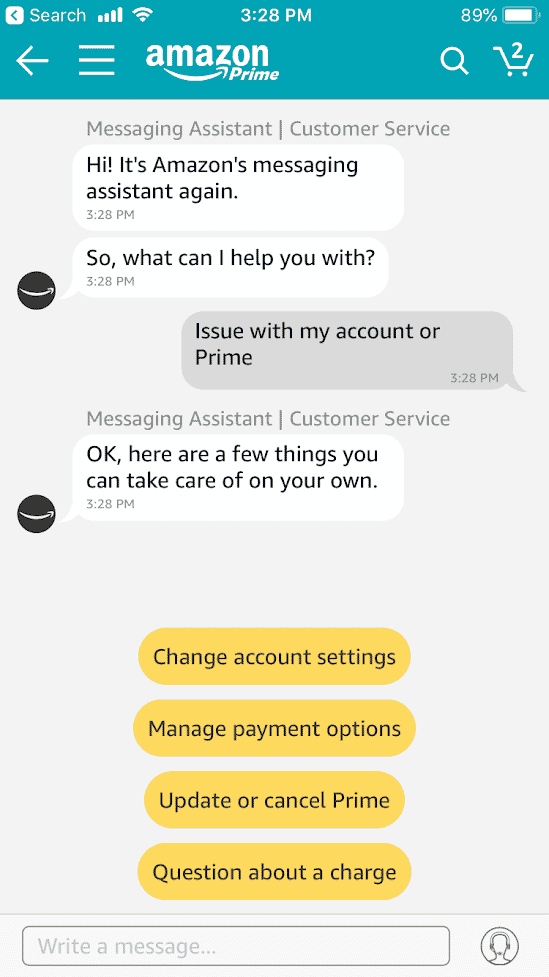

Businesses are increasingly turning to customer service chatbots to provide efficient, round-the-clock support. These AI-powered assistants are transforming how companies interact with their customers, offering quick solutions and personalized experiences. Here are some compelling examples of how chatbots are enhancing customer service across various industries.

Banking on AI: Erica from Bank of America

Imagine having a personal financial advisor available 24/7, right at your fingertips. That’s what Bank of America offers with Erica, their intelligent virtual assistant. Erica goes beyond simple account inquiries, providing nuanced financial guidance to millions of customers. Whether you’re looking to budget better, understand your credit score, or get insights on your spending habits, Erica’s there to help.

For instance, if you’re contemplating a major purchase, Erica can analyze your financial history and offer tailored advice on whether it’s a wise decision given your current financial situation. This level of personalized financial guidance was once reserved for those who could afford human financial advisors. Now, thanks to AI chatbots like Erica, it’s accessible to anyone with a Bank of America account.

Health at Your Fingertips: Babylon Health’s Symptom Checker

Quick and accurate information can be crucial for health concerns. Babylon Health’s Symptom Checker chatbot is changing the game in medical self-diagnosis. This innovative AI doesn’t just regurgitate WebMD articles; it uses advanced algorithms to interpret symptoms and provide potential diagnoses.

Picture this: It’s 2 AM, and you wake up with an unusual rash. Instead of panicking or waiting hours for a doctor’s office to open, you can chat with Babylon’s AI. The chatbot will ask you a series of questions, much like a real doctor would, to narrow down possible causes. It might suggest it’s an allergic reaction and recommend over-the-counter antihistamines, or it could advise you to seek immediate medical attention if the symptoms seem more serious.

How Chatbots Are Elevating Customer Service

These examples highlight the transformative power of customer service chatbots. They’re not just answering simple queries; they’re providing sophisticated, personalized assistance that was once the domain of human experts.

By offering 24/7 availability, these chatbots ensure that customers get help exactly when they need it, without the frustration of waiting on hold or navigating complex phone menus.

Moreover, chatbots like Erica and Babylon’s Symptom Checker are continually learning from interactions, improving their responses over time. This means the quality of service they provide is constantly evolving, becoming more accurate and helpful with each conversation.

As AI technology continues to advance, we can expect even more impressive capabilities from customer service chatbots. From predicting customer needs before they arise to seamlessly integrating with other smart devices, the future of customer service is looking increasingly automated, efficient, and surprisingly personal.

AI Chatbot Innovations: The Rise of Meena and BlenderBot

Artificial intelligence is evolving rapidly, with chatbots revolutionizing our interactions with machines. Two notable contenders in this space, Google’s Meena and Facebook’s BlenderBot, are pushing the boundaries of conversational AI.

Meena, Google’s end-to-end neural conversational model, is built to hold human-like dialogue on virtually any topic. Its strength lies in understanding context and nuance, allowing for more natural conversations. Meena’s ability to make jokes and engage in witty banter showcases advancements in natural language processing. However, Meena relies on what it learned during training and doesn’t perform real-time web searches.

Facebook’s BlenderBot takes a different approach. Now in its second iteration, BlenderBot 2.0 combines long-term memory capabilities with real-time internet searches. This blend allows it to recall previous interactions and stay updated with current information. In Facebook’s evaluations of the original BlenderBot, 75% of human evaluators said they would rather have a long conversation with it than with Meena.

BlenderBot’s ability to build and retain long-term memory addresses a critical limitation in many AI chatbots—the ‘goldfish memory’ problem. By remembering context from past conversations, it provides more personalized and coherent responses. This feature is particularly valuable for customer service and personal assistance applications.
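To make the idea of long-term memory concrete, here is a minimal sketch in Python. It is not how BlenderBot works internally; it assumes a simple per-user store with keyword-overlap retrieval, and the class and method names (`ConversationMemory`, `remember`, `recall`) are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class ConversationMemory:
    """Toy long-term memory: stores short facts per user and recalls
    the ones that share words with the current message."""

    def __init__(self) -> None:
        self._facts: Dict[str, List[str]] = defaultdict(list)

    def remember(self, user_id: str, fact: str) -> None:
        """Save a fact learned in an earlier conversation."""
        self._facts[user_id].append(fact)

    def recall(self, user_id: str, message: str, limit: int = 3) -> List[str]:
        """Return up to `limit` stored facts ranked by word overlap with the message."""
        words = set(message.lower().split())
        scored = []
        for fact in self._facts.get(user_id, []):
            overlap = len(words & set(fact.lower().split()))
            if overlap:
                scored.append((overlap, fact))
        # Highest overlap first; ties keep the order in which facts were stored.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [fact for _, fact in scored[:limit]]


memory = ConversationMemory()
memory.remember("user-1", "prefers vegetarian pizza")
memory.remember("user-1", "lives in Berlin")
print(memory.recall("user-1", "recommend a pizza place"))
```

A production system would more likely use learned embeddings rather than raw word overlap, but the shape is the same: write facts down during one conversation and look them up in the next.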

The ability to search the internet in real-time is a game-changer. It allows BlenderBot 2.0 to provide up-to-date information and engage in more informed discussions on current events.

Jason Weston, Research Scientist at Facebook

While both chatbots represent significant advancements, they also highlight ongoing challenges in AI development. Issues such as bias, privacy concerns, and the potential for generating false information remain at the forefront of ethical considerations.

As these technologies continue to evolve, we can expect even more sophisticated chatbots that blur the line between human and artificial intelligence. The race between tech giants like Google and Facebook is driving innovation at an unprecedented pace, promising a future where our digital interactions become increasingly natural and intuitive.

Chatbots in Sales and Lead Generation

AI-powered chatbots are transforming how businesses engage with potential customers and generate leads. These intelligent virtual assistants, like VainuBot, make the sales funnel more dynamic and efficient.

Sales chatbots engage website visitors in meaningful conversations, often initiating contact with a friendly greeting or contextual prompt. This proactive approach captures attention and opens the door to lead qualification. By leveraging natural language processing and machine learning algorithms, these bots understand user intent and respond with relevant questions, mimicking the conversational flow of a skilled sales representative.

The power of chatbots in lead generation lies in their ability to qualify prospects through interactive dialogues. They ask targeted questions about a visitor’s needs, budget, or timeline, gathering crucial information that helps sales teams prioritize their efforts. For instance, VainuBot might inquire, “Are you looking to improve your sales figures with company data?” followed by more specific queries based on the user’s response.
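As a rough illustration of how answers about need, budget, and timeline could be turned into a priority for the sales team, consider the Python sketch below. The field names, weights, and thresholds are assumptions made for this example and do not describe VainuBot or any specific product.

```python
from dataclasses import dataclass


@dataclass
class LeadAnswers:
    """Answers a visitor gave to the bot's qualifying questions (hypothetical fields)."""
    has_stated_need: bool
    budget_usd: float
    timeline_months: int


def score_lead(answers: LeadAnswers) -> int:
    """Return a score from 0 to 100; weights and cut-offs are illustrative only."""
    score = 0
    if answers.has_stated_need:
        score += 40
    if answers.budget_usd >= 10_000:
        score += 30
    elif answers.budget_usd >= 1_000:
        score += 15
    if answers.timeline_months <= 3:
        score += 30
    elif answers.timeline_months <= 12:
        score += 15
    return score


def priority(score: int) -> str:
    """Map a score to a label a sales team could sort by."""
    if score >= 70:
        return "hot"
    if score >= 40:
        return "warm"
    return "cold"


lead = LeadAnswers(has_stated_need=True, budget_usd=2_000, timeline_months=6)
print(score_lead(lead), priority(score_lead(lead)))
```

In practice the weights would be tuned against historical conversion data rather than chosen by hand.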

One of the most significant advantages of implementing chatbots for lead generation is their 24/7 availability. Unlike human sales representatives, these tireless digital assistants can engage potential customers at any hour, ensuring that no lead falls through the cracks due to time zone differences or off-hours inquiries. This constant presence dramatically expands a company’s ability to capture and nurture leads without increasing staffing costs.

Moreover, chatbots streamline the lead generation process by automating repetitive tasks. They can schedule appointments, provide product information, and offer personalized recommendations based on user interactions. This efficiency saves time for sales teams and enhances the customer experience by providing instant, relevant information to prospects.

AI chatbots can engage customers with interactive and relevant content. By analyzing personal data and preferences, lead generation chatbots offer customized recommendations, guiding customers through the decision-making process in a more unique and constructive manner.

Master of Code Global

The impact of chatbots on lead generation can be substantial. Companies implementing these AI-driven tools often report significant improvements in lead quality and quantity. For example, some businesses have seen conversion rates increase by up to 40% after deploying chatbots, with a notable reduction in the cost per lead. These results underscore the powerful role that chatbots can play in modern sales strategies.

As AI technology continues to advance, sales chatbots will become even more sophisticated in their ability to engage prospects and qualify leads. The future may bring chatbots that can analyze tone and sentiment, providing even more nuanced interactions. For businesses that want to stay competitive in an increasingly automated marketplace, integrating AI chatbots into sales and lead generation processes is becoming a necessity.

Chatbots Enhancing User Experience

Leading companies are turning to chatbots to improve their interactions with customers and job seekers. These AI-powered tools are transforming user experiences across industries, from hospitality to beauty.

Marriott International, the world’s largest hotel chain, has embraced chatbots as virtual concierges. These digital assistants help guests with everything from room service orders to local restaurant recommendations. Available 24/7 through platforms like Facebook Messenger, Marriott’s chatbots provide instant, personalized support that enhances the guest experience.

Meanwhile, cosmetics giant L’Oréal is using chatbots to simplify its recruiting process. The company partnered with Mya Systems to create an AI recruiter named Mya. This chatbot engages with job applicants, answering their questions and collecting key information. For L’Oréal, which receives over 1 million applications annually, Mya has been a game-changer.

Here’s how Mya improves the hiring process:

- Screens candidates quickly and efficiently

- Provides instant responses to applicant questions

- Collects relevant data to help human recruiters

- Reduces bias in early-stage candidate evaluations

The results speak for themselves. L’Oréal reports that Mya engages with 92% of candidates and achieves near 100% satisfaction rates. By automating initial screenings, L’Oréal has cut recruiter time spent on each application by 40 minutes.

Whether it’s booking a hotel room or applying for a job, chatbots are making user experiences smoother, faster, and more enjoyable. As AI technology continues to advance, we can expect even more innovative uses of chatbots to enhance how we interact with brands.

Challenges in Chatbot Development

Chatbots have significantly changed customer service and engagement, but developing effective AI assistants is a complex task. Developers encounter several major challenges when building chatbots that seamlessly integrate into existing systems and interact naturally with users.

One pressing issue is addressing bias in chatbot responses. AI models learn from large datasets that often contain societal biases and stereotypes. Without careful oversight, chatbots can perpetuate or even amplify these biases. For example, a customer service bot might provide different levels of support based on a user’s perceived gender or ethnicity. To tackle this challenge, companies like FairNow are developing tools to assess and mitigate chatbot bias. These evaluations analyze how bots respond to prompts associated with different demographic groups, helping developers identify and correct any unfair treatment before the bots go live. Eliminating bias in chatbot development is crucial for creating AI assistants that treat all users equitably.
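The kind of evaluation described above can be sketched as a counterfactual test: send the bot prompts that are identical except for a name, then compare how it responds across groups. The Python below is a minimal sketch under that assumption. It is not FairNow's method, and the toy bots, the template, the group labels, and the `offers_refund` metric are all hypothetical.

```python
from typing import Callable, Dict, List


def average_metric_by_group(
    bot: Callable[[str], str],
    template: str,
    names_by_group: Dict[str, List[str]],
    metric: Callable[[str], float],
) -> Dict[str, float]:
    """Average a metric over replies to prompts that differ only in the name used."""
    averages: Dict[str, float] = {}
    for group, names in names_by_group.items():
        if not names:
            raise ValueError(f"group {group!r} has no names to test")
        scores = [metric(bot(template.format(name=name))) for name in names]
        averages[group] = sum(scores) / len(scores)
    return averages


def max_disparity(averages: Dict[str, float]) -> float:
    """Largest gap between any two groups; 0.0 means equal treatment on this metric."""
    return max(averages.values()) - min(averages.values())


def offers_refund(reply: str) -> float:
    """Example metric: 1.0 if the reply offers a refund, else 0.0."""
    return 1.0 if "refund" in reply.lower() else 0.0


def fair_bot(prompt: str) -> str:
    return "Sorry about that. We will issue a refund."


def biased_bot(prompt: str) -> str:
    # Deliberately unfair toy bot so the check has something to detect.
    if "Alex" in prompt or "Sam" in prompt:
        return "Sorry about that. We will issue a refund."
    return "Please contact support during business hours."


TEMPLATE = "Hi, my name is {name} and my order arrived damaged."
NAMES = {"group_a": ["Alex", "Sam"], "group_b": ["Jordan", "Riley"]}

for bot in (fair_bot, biased_bot):
    averages = average_metric_by_group(bot, TEMPLATE, NAMES, offers_refund)
    print(bot.__name__, averages, max_disparity(averages))
```

A real audit would use many templates, larger name lists, and several metrics, then flag any disparity above an agreed threshold for human review.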

Another major obstacle is integrating chatbots with existing enterprise systems. Many organizations rely on legacy software that wasn’t designed to communicate with modern AI, leading to data synchronization issues and fragmented user experiences. Successful integration often requires middleware—software that acts as a translator between old and new systems. This approach allows chatbots to access necessary data and functionalities without needing a complete overhaul of existing infrastructure.
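The translator role that middleware plays can be shown with a small Python sketch. It assumes a legacy order system that returns pipe-delimited text records; the class names, the record layout, and the sample order are invented for illustration.

```python
from typing import Dict, Optional


class LegacyOrderSystem:
    """Stand-in for an older backend that returns pipe-delimited records (hypothetical)."""

    def fetch(self, order_id: str) -> str:
        records = {"1001": "1001|SHIPPED|2024-05-01"}
        return records.get(order_id, "")


class OrderMiddleware:
    """Translates legacy records into dictionaries the chatbot can use."""

    def __init__(self, legacy: LegacyOrderSystem) -> None:
        self._legacy = legacy

    def get_order_status(self, order_id: str) -> Optional[Dict[str, str]]:
        raw = self._legacy.fetch(order_id)
        if not raw:
            return None  # Unknown order: let the chatbot decide how to respond.
        found_id, status, updated = raw.split("|")
        return {"order_id": found_id, "status": status.lower(), "updated": updated}


def chatbot_reply(middleware: OrderMiddleware, order_id: str) -> str:
    """The chatbot only talks to the middleware, never to the legacy system."""
    order = middleware.get_order_status(order_id)
    if order is None:
        return f"I could not find order {order_id}."
    return f"Order {order['order_id']} is {order['status']} (last updated {order['updated']})."


middleware = OrderMiddleware(LegacyOrderSystem())
print(chatbot_reply(middleware, "1001"))
print(chatbot_reply(middleware, "9999"))
```

Because the chatbot depends only on `get_order_status`, the legacy backend can later be replaced without changing the conversation logic.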

Scaling chatbot interactions presents its own set of challenges. As more users engage with an AI assistant, performance may decline. Organizations must invest in robust cloud infrastructure and implement strategies such as load balancing to maintain responsiveness during high-traffic periods. Furthermore, improving natural language understanding remains an ongoing challenge. While modern chatbots have made significant progress, they still struggle with context, nuance, and handling unexpected queries. Continuous training and refinement of language models are essential for creating bots that can engage in truly natural conversations.
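Load balancing, mentioned above as one way to stay responsive under heavy traffic, can be illustrated with a round-robin sketch in Python. The worker names are hypothetical, and real deployments usually rely on a managed load balancer rather than application code like this.

```python
from itertools import cycle
from typing import List


class RoundRobinBalancer:
    """Hands each incoming message to the next chatbot worker in turn."""

    def __init__(self, workers: List[str]) -> None:
        if not workers:
            raise ValueError("at least one worker is required")
        self._workers = cycle(workers)

    def route(self, message: str) -> str:
        """Return the name of the worker that should handle this message."""
        return next(self._workers)


balancer = RoundRobinBalancer(["bot-1", "bot-2", "bot-3"])
for text in ["hi", "where is my order?", "reset password", "thanks"]:
    print(text, "->", balancer.route(text))
```

Round-robin spreads requests evenly but ignores how busy each worker is; strategies such as least-connections routing trade that simplicity for better behavior under uneven load.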

Overcoming these hurdles requires a multifaceted approach. Companies must invest in diverse training data, robust testing methodologies, and flexible integration strategies. By addressing these challenges directly, developers can create chatbots that are functional, fair, reliable, and genuinely helpful to users across all demographics.

Conclusion: Future Directions of Chatbots

We’ve explored the rapidly evolving landscape of chatbot technology, and it’s clear we’re on the cusp of a new era in human-computer interaction. The challenges we face today are stepping stones to more sophisticated and intuitive chatbot solutions. As AI capabilities advance, chatbots will become increasingly adept at understanding context, tone, and the subtleties of human emotion.

The future of chatbots lies in their seamless integration across multiple platforms and industries. From healthcare to finance, education to customer service, chatbots will revolutionize how we access information and assistance. The rise of voice-based applications and the integration of chatbots with social media platforms will further expand their reach and utility.

One of the most exciting prospects is the development of chatbots with enhanced emotional intelligence. Imagine interacting with a virtual assistant that not only understands your words but also picks up on your mood and responds with genuine empathy. This level of sophisticated interaction could transform fields like mental health support and personalized customer experiences.

It’s crucial to address the ethical considerations surrounding AI and data privacy as we look to the future. Developing responsible AI practices will be paramount in ensuring chatbots remain trustworthy tools that enhance user experiences without compromising them.

The future of chatbots is bright and full of potential. As AI capabilities are refined and systems are further integrated, we can anticipate more natural, efficient, and meaningful chat interactions. These advancements will streamline processes for businesses and enrich our daily lives in ways we’re only beginning to imagine. The journey of chatbot evolution is far from over. It’s just getting started.