Ever wondered how artificial intelligence systems work under the hood? At their heart lies something fascinating called agent architectures – the frameworks that give AI its ability to think and act. Like the blueprint of a brain, these architectures define how machines understand their world and make decisions.

An AI agent architecture is like a nervous system. Just as our brains process information from our senses and tell our bodies how to respond, agent architectures help machines handle input from their environment and figure out what actions to take. This could be anything from a self-driving car processing road conditions to a virtual assistant understanding your questions.

The building blocks of these architectures are straightforward. You have sensory components that act as the machine’s eyes and ears, processing units that work like its brain to make sense of information, and action modules that allow it to affect its environment – similar to how we use our hands and feet to interact with the world.
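To make these three building blocks concrete, here is a minimal sketch of a sense, decide, act loop. The `ThermostatAgent` class, its thresholds, and the dictionary-based environment are all hypothetical, chosen only to keep the illustration small; real agents are far more elaborate.

```python
from dataclasses import dataclass


@dataclass
class ThermostatAgent:
    """Hypothetical agent used only to illustrate sensing, processing, and acting."""
    target_c: float = 21.0
    tolerance_c: float = 0.5

    def sense(self, environment: dict) -> float:
        # Sensory component: read a raw value from the environment.
        return environment["temperature_c"]

    def decide(self, temperature_c: float) -> str:
        # Processing unit: turn the reading into a decision.
        if temperature_c < self.target_c - self.tolerance_c:
            return "heat"
        if temperature_c > self.target_c + self.tolerance_c:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Action module: change the environment according to the decision.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature_c"] += delta

    def step(self, environment: dict) -> str:
        # One full pass through the sense -> decide -> act cycle.
        action = self.decide(self.sense(environment))
        self.act(action, environment)
        return action


room = {"temperature_c": 18.0}
agent = ThermostatAgent()
history = [agent.step(room) for _ in range(4)]
```

Each call to `step` runs the full cycle once, so `history` records the decision the agent made at every tick.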

Understanding agent architectures matters because they shape how intelligent systems are built. Whether we’re talking about a simple chatbot or a complex autonomous robot, the way these components work together determines how well the AI can achieve its goals. As AI becomes more integrated into our daily lives, knowing how these architectures function helps us build better, more reliable systems.

What makes these architectures remarkable is their adaptability. Like how we learn from experience, modern AI architectures can adjust and improve their behavior based on new information. This learning ability is what allows AI to tackle increasingly complex challenges and find innovative solutions to problems.

Fundamental Components of AI Agent Architecture

Modern AI agent architectures comprise several essential components that work in harmony to create intelligent, autonomous systems. Each module serves a distinct purpose in helping the agent perceive, understand, and interact with its environment.

The profiling module acts as the agent’s core identity system, defining its role and characteristics. For instance, in a software development team, an agent might be profiled as a code reviewer with specific expertise in security analysis. This module helps ensure the agent maintains consistent behaviors aligned with its designated role, much like how a human expert draws upon their professional training and experience.
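One simple way to picture a profile is as an immutable record that the rest of the agent consults before taking on work. The sketch below assumes a hypothetical `AgentProfile` class with `accepts` and `system_prompt` helpers; none of these names come from a specific framework.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical profile record: a role plus the topics the agent covers."""
    role: str
    expertise: FrozenSet[str]

    def accepts(self, task_topic: str) -> bool:
        # The agent only takes on tasks that match its designated expertise.
        return task_topic in self.expertise

    def system_prompt(self) -> str:
        # Render the profile as a short, stable instruction string.
        skills = ", ".join(sorted(self.expertise))
        return f"You are a {self.role}. Areas of expertise: {skills}."


reviewer = AgentProfile(
    role="code reviewer",
    expertise=frozenset({"security analysis", "python"}),
)
```

Freezing the dataclass is a deliberate choice here: a profile that cannot be mutated at runtime is one way to keep the agent's behavior consistent with its role.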

Working alongside the profiling module, the memory module functions as the agent’s knowledge repository. It combines short-term memory for immediate context awareness with long-term memory for retaining important experiences and learned patterns. For example, when an AI agent assists in customer service, its short-term memory tracks the current conversation while its long-term memory stores previous customer interactions and solutions, enabling more informed and consistent responses.

The planning module serves as the agent’s strategic center, breaking down complex tasks into manageable steps. Consider an autonomous driving agent; before changing lanes, its planning module evaluates traffic conditions, calculates timing, and sequences the necessary actions. This methodical approach allows agents to tackle intricate challenges through well-reasoned strategies rather than reactive responses.

The action module transforms the agent’s decisions into concrete outcomes, acting as the bridge between internal processing and external interaction. When a robotic agent receives instructions to organize items in a warehouse, the action module coordinates the physical movements required to pick up, transport, and place objects. This component ensures that the agent’s internal decisions manifest as meaningful changes in its environment.

Learning strategies represent the agent’s ability to improve over time through experience. Similar to how humans refine their skills through practice, AI agents employ various learning approaches to enhance their performance. For instance, a trading agent might learn from successful and failed transactions, gradually developing more sophisticated investment strategies based on market patterns and outcomes.

Profiling Module: The Eyes and Ears of the Agent

Beyond defining the agent’s role, the profiling module as described here also functions as the primary sensory system for an artificial intelligence agent, similar to how human senses help us understand and navigate our world. Just as our eyes, ears, and other senses gather information about our surroundings, this component continuously collects and processes data from the agent’s environment.

At its core, the profiling module acts as an information processing pipeline. Raw sensory input—such as visual data from cameras, audio from microphones, or readings from other sensors—flows in and is transformed into structured data that the AI agent can utilize for decision-making. This process resembles how our brains convert raw sensory signals into meaningful perceptions.

Functioning like a sophisticated filtering system, the profiling module separates relevant signals from background noise. For example, an autonomous vehicle’s profiling module might analyze camera feeds to identify important objects like pedestrians, traffic signs, and other vehicles while dismissing irrelevant details in the background. The module does not simply collect data; it actively processes and organizes sensory information into recognizable patterns that the AI agent can act upon. Think of it as a personal assistant that not only gathers facts but also organizes them in a way that enhances decision-making efficiency.

Just as our brains learn to prioritize relevant sensory information and ignore distractions, the profiling module enables AI agents to develop increasingly sophisticated methods for processing sensory inputs. This adaptive capability allows agents to become more effective at understanding and responding to their environments over time. The accuracy and efficiency of the profiling module directly impact an AI agent’s ability to make sound decisions. Just as human sensory impairments can hinder our ability to navigate the world safely, limitations in an agent’s profiling capabilities can restrict its effectiveness in performing designated tasks.

Integration of Memory Module

Imagine having a personal library that not only stores your experiences but actively helps you make better decisions. This is precisely what the memory module does for AI agents; it serves as a sophisticated repository that enables agents to learn from past interactions and apply that knowledge to future situations.

The memory module acts as both a chronicler and advisor, storing two critical types of information. First, it maintains a record of the agent’s direct experiences: interactions, decisions, and their outcomes. For example, when an AI agent encounters a new problem-solving scenario, it can reference similar past experiences stored in its memory to inform its approach. This ability to recall and learn from past experiences can substantially improve an agent’s decision-making.

Beyond experiential data, the memory module also maintains factual knowledge—fundamental rules, concepts, and information that help the agent understand its operating environment. This dual storage system allows agents to combine learned experiences with established knowledge, much like how humans integrate their personal experiences with formal education to make informed choices.

The memory module’s architecture employs both short-term and long-term memory components. Short-term memory handles immediate context and recent interactions, while long-term memory preserves crucial experiences and knowledge for future reference. This structured approach ensures that agents can maintain contextual awareness while building a comprehensive knowledge base over time.
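The split between short-term and long-term memory can be sketched in a few lines. This is a simplified illustration, not a production design: the `AgentMemory` class is hypothetical, short-term memory is a fixed-size buffer, and long-term recall uses naive word overlap where a real system would more likely use a database or embedding search.

```python
from collections import deque
from typing import Dict, List


class AgentMemory:
    """Hypothetical memory module with a bounded short-term buffer."""

    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term memory: only the most recent messages are kept.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term memory: past situations and how they turned out.
        self.long_term: List[Dict[str, str]] = []

    def observe(self, message: str) -> None:
        self.short_term.append(message)

    def remember(self, situation: str, outcome: str) -> None:
        self.long_term.append({"situation": situation, "outcome": outcome})

    def recall(self, query: str) -> List[Dict[str, str]]:
        # Rank stored situations by how many words they share with the query.
        words = set(query.lower().split())
        scored = []
        for record in self.long_term:
            overlap = len(words & set(record["situation"].lower().split()))
            if overlap:
                scored.append((overlap, record))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [record for _, record in scored]


memory = AgentMemory(short_term_size=2)
for message in ["hello", "my order is late", "can I get a refund?"]:
    memory.observe(message)
memory.remember("late delivery refund request", "issued refund")
memory.remember("password reset", "sent reset link")
matches = memory.recall("customer asks about refund for late delivery")
```

Because the buffer has `maxlen=2`, the oldest message drops out automatically, which mirrors how short-term context is limited while long-term records persist.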

The implementation of memory in AI agents has led to remarkable improvements in their autonomous capabilities. When an agent encounters a complex decision point, it can query its memory module to recall similar situations, analyze past outcomes, and select the most promising course of action. This deliberate integration of past experiences with current context enables more nuanced and effective decision-making.

Memory in AI agents is not just about storing information—it’s about creating a foundation for learning, adaptation, and increasingly sophisticated behavior.

Abu Sebastian, IBM Research Europe

The architectural design of memory modules continues to evolve, with newer systems incorporating advanced features like selective retention, priority-based recall, and dynamic knowledge updating. These developments are pushing the boundaries of what AI agents can achieve, moving us closer to systems that can truly learn and adapt from their experiences in meaningful ways.

Planning Module: The Strategist Behind Decisions

The planning module acts as the strategic command center for AI agents, coordinating complex decision-making processes that convert raw data into actionable plans. Similar to a chess grandmaster who plots multiple moves ahead, this component analyzes current situations, evaluates potential outcomes, and devises the best path toward specific goals.

At its core, the planning module utilizes various reasoning techniques. Through deductive reasoning, it arrives at specific conclusions based on general principles. For example, if a user’s account balance is overdue, it activates payment notification protocols. Additionally, the module employs inductive reasoning to derive broader insights from specific observations, enabling agents to make increasingly nuanced decisions over time.
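Deductive reasoning of this kind is often implemented as if-then rules applied until no new conclusions appear (forward chaining). The sketch below is one minimal way to do that; the rule set, the fact names, and the `deduce` function are illustrative assumptions built around the overdue-balance example.

```python
# Each rule is (premises, conclusion): if all premises hold, the conclusion holds.
# These rule and fact names are hypothetical.
RULES = [
    (frozenset({"balance_overdue"}), "send_payment_notification"),
    (frozenset({"send_payment_notification", "no_response"}), "escalate_to_support"),
]


def deduce(facts, rules):
    """Forward chaining: keep applying rules until nothing new is derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


first_pass = deduce({"balance_overdue"}, RULES)
second_pass = deduce({"balance_overdue", "no_response"}, RULES)
```

Note that the second rule only fires once the first has added its conclusion, which is why the loop repeats until the set of facts stops growing.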

The module’s effectiveness is enhanced by its integration with the agent’s memory systems. By accessing both short-term and long-term memory, it can incorporate historical data, user preferences, and outcomes from previous interactions into its strategic calculations. For instance, when managing customer service requests, the planning module might recall past shipping addresses and billing histories to streamline future transactions.

Advanced planning modules employ what experts refer to as “hierarchical planning,” which breaks down complex objectives into manageable steps while maintaining focus on the bigger picture. This approach allows agents to tackle sophisticated tasks without becoming overwhelmed.
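Hierarchical planning can be illustrated as a recursive expansion of a task tree: each high-level task is replaced by its subtasks until only primitive steps remain. The `TASK_TREE` contents and the `decompose` function below are hypothetical and far simpler than a real planner, which would also handle preconditions and alternatives.

```python
# Hypothetical task tree: keys are compound tasks, values are ordered subtasks.
# Anything that is not a key is treated as a primitive, directly executable step.
TASK_TREE = {
    "change lanes": ["assess traffic", "execute maneuver"],
    "assess traffic": ["check mirrors", "check blind spot"],
    "execute maneuver": ["signal", "steer into lane", "cancel signal"],
}


def decompose(task, tree):
    """Expand a task depth-first into an ordered list of primitive steps."""
    subtasks = tree.get(task)
    if subtasks is None:
        return [task]  # primitive step: nothing left to break down
    steps = []
    for subtask in subtasks:
        steps.extend(decompose(subtask, tree))
    return steps


plan = decompose("change lanes", TASK_TREE)
```

The top-level goal stays visible as the root of the tree, while the agent only ever executes the leaves.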

One of the module’s most impressive features is its adaptability. Instead of rigidly adhering to predetermined scripts, it can adjust strategies in real time based on new information or unforeseen challenges. This flexibility enables agents to navigate dynamic environments while making steady progress toward their defined objectives.

By decomposing goals into a series of actionable steps, AI agents can solve complex problems efficiently. Although the planning module operates autonomously, its decision-making can be designed to remain transparent and accountable: when each strategic choice is traceable to specific data points and logical principles, agents can act not only effectively but also responsibly in pursuit of their goals.

Action Module: From Plans to Execution

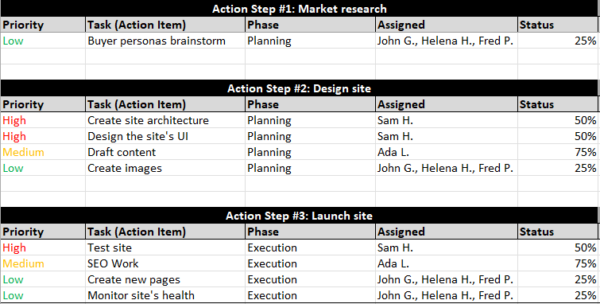

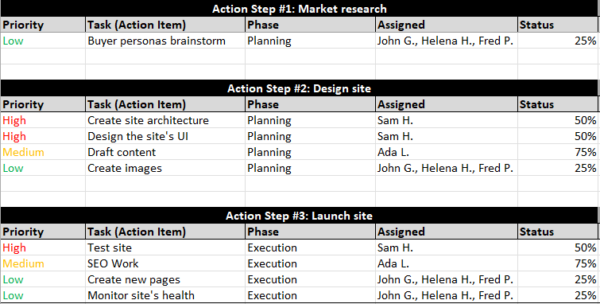

Overview of project tasks and their statuses – Via projectmanager.com

The action module serves as the critical bridge between strategic planning and real-world execution. Like a skilled orchestra conductor translating musical notation into harmonious sound, this component transforms abstract plans into concrete, executable tasks that autonomous agents can perform.

At its core, the action module operates through a sophisticated process of task decomposition and sequencing. When presented with a high-level strategy – for example, ‘engage with website visitors’ – the module breaks this down into specific executable actions such as monitoring user activity, identifying engagement opportunities, and initiating conversations at optimal moments.

The module’s effectiveness stems from its ability to maintain contextual awareness while executing tasks. For instance, when an agent needs to respond to a customer inquiry, the action module doesn’t just trigger a response – it considers factors like the customer’s history, current context, and optimal communication timing. This ensures that each action aligns precisely with the agent’s strategic objectives.

Task prioritization represents another crucial function of the action module. Rather than blindly executing tasks in sequence, it evaluates dependencies, urgency, and resource requirements. This means an agent can dynamically adjust its execution sequence based on changing conditions or emerging priorities.
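One common way to combine dependencies with urgency is a topological ordering that always picks the most urgent task among those whose prerequisites are done. The sketch below assumes a hypothetical task format of `name -> (urgency, dependencies)`, where a lower urgency number means the task should run sooner; resource requirements are left out for brevity.

```python
import heapq


def execution_order(tasks):
    """Order tasks so dependencies run first, preferring lower urgency numbers."""
    remaining = {name: set(deps) for name, (_, deps) in tasks.items()}
    # Tasks with no unmet dependencies are ready to run immediately.
    ready = [(tasks[name][0], name) for name, deps in remaining.items() if not deps]
    heapq.heapify(ready)
    order = []
    while ready:
        _, name = heapq.heappop(ready)
        order.append(name)
        del remaining[name]
        # Finishing a task may unblock tasks that were waiting on it.
        for other, deps in remaining.items():
            if name in deps:
                deps.discard(name)
                if not deps:
                    heapq.heappush(ready, (tasks[other][0], other))
    if remaining:
        raise ValueError(f"circular or missing dependencies: {sorted(remaining)}")
    return order


# Hypothetical customer-service tasks.
TASKS = {
    "reply to customer": (1, ["fetch history"]),
    "fetch history": (2, []),
    "log interaction": (3, ["reply to customer"]),
    "refresh dashboard": (5, []),
}
order = execution_order(TASKS)
```

Although "reply to customer" is the most urgent task, it cannot run until "fetch history" completes, so the ordering respects the dependency first and urgency second.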

The translation process itself involves three key steps: first, interpreting the strategic intent behind each plan; second, mapping available actions to achieve those intentions; and finally, orchestrating the execution sequence for maximum effectiveness. Consider an agent tasked with managing social media engagement – the action module would translate broad goals like ‘increase community engagement’ into specific actions like posting content, responding to comments, and analyzing interaction patterns.

Perhaps most importantly, the action module incorporates feedback mechanisms to ensure executed actions achieve their intended outcomes. When actions don’t produce expected results, the module can adapt its execution approach or signal the need for strategy refinement. This creates a continuous improvement loop that enhances the agent’s effectiveness over time.
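A feedback mechanism like this can be sketched as an execute, check, retry loop that escalates when retries run out. The `execute_with_feedback` function, its status strings, and the toy action below are assumptions for illustration only.

```python
def execute_with_feedback(action, check, max_attempts=3):
    """Run an action, verify its result, and retry or escalate on failure."""
    for attempt in range(1, max_attempts + 1):
        result = action(attempt)
        if check(result):
            # The outcome matched expectations, so execution is complete.
            return {"status": "ok", "attempts": attempt, "result": result}
    # Repeated failure is a signal that the strategy itself needs refinement.
    return {"status": "needs_replanning", "attempts": max_attempts, "result": None}


# Toy action: each attempt produces a stronger result (hypothetical).
succeeded = execute_with_feedback(lambda attempt: attempt * 10, lambda r: r >= 20)
escalated = execute_with_feedback(lambda attempt: attempt * 10, lambda r: r >= 100)
```

Returning a distinct `needs_replanning` status, rather than raising immediately, lets the planning side decide how to revise the strategy.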

The action module is essentially the bridge between what we want to accomplish and how we actually get it done. It’s the difference between having a brilliant strategy and actually seeing results.

Christopher Akin, PMP®

Learning Strategies: The Engine of Adaptation

Learning strategies serve as the foundation for how autonomous agents evolve and adapt, much like how a skilled chef refines their recipes through experimentation and feedback. These strategies enable agents to modify their behavior based on experiences and acquired knowledge, ultimately leading to more sophisticated and effective decision-making.

Supervised learning represents perhaps the most straightforward approach, functioning like an attentive teacher guiding a student. In this model, the agent receives labeled training data with clear examples of correct outputs for given inputs. This foundational strategy helps agents learn to recognize patterns and make predictions based on previously seen examples, similar to how a medical student learns to diagnose conditions by studying confirmed cases.
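As a small illustration of learning from labeled examples, the sketch below implements a nearest-centroid classifier in plain Python: it averages the training points for each label, then assigns new points to the closest average. This is just one simple supervised method, chosen for brevity; the data and function names are made up.

```python
def fit_centroids(samples, labels):
    """Compute the mean feature vector (centroid) for each label."""
    sums, counts = {}, {}
    for features, label in zip(samples, labels):
        accumulator = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            accumulator[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: [total / counts[label] for total in accumulator]
        for label, accumulator in sums.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical labeled training data: two features per sample.
samples = [[1, 1], [2, 1], [8, 9], [9, 8]]
labels = ["low", "low", "high", "high"]
centroids = fit_centroids(samples, labels)
```

Calling `predict(centroids, [2, 2])` then classifies a point the model has not seen before, based purely on the labeled examples.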

Unsupervised learning takes a more exploratory approach, allowing agents to discover hidden patterns and relationships within unlabeled data. Rather than relying on explicit correct answers, these algorithms group and categorize information based on inherent similarities. Think of it as an archaeologist examining artifacts without prior knowledge, gradually identifying patterns and relationships between different pieces.
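Clustering is a typical example of this kind of pattern discovery. The following sketch runs k-means on one-dimensional data with no labels at all; the function name, the starting centers, and the data are illustrative assumptions, and practical work would normally rely on an established library instead.

```python
def kmeans_1d(values, initial_centers, iterations=10):
    """Group numbers around centers by alternating assignment and averaging."""
    centers = list(initial_centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest center.
        clusters = [[] for _ in centers]
        for value in values:
            nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Update step: move each center to the mean of its cluster.
        centers = [
            sum(cluster) / len(cluster) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    return centers, clusters


centers, clusters = kmeans_1d([1, 2, 3, 10, 11, 12], initial_centers=[0, 5])
```

No correct answers are supplied; the two groups emerge only from how close the values are to each other.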

Reinforcement learning is the most interactive of the three approaches: agents learn through direct interaction with their environment. Like a child learning to ride a bicycle, the agent receives feedback in the form of rewards or penalties based on its actions. Through trial and error, it develops strategies to maximize positive outcomes while minimizing negative ones.
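Trial-and-error learning can be sketched with tabular Q-learning, one well-known reinforcement learning algorithm. The environment here is a hypothetical five-cell corridor in which the agent starts at cell 0 and is rewarded only for reaching cell 4; the hyperparameters are arbitrary illustrative values.

```python
import random

ACTIONS = ("left", "right")
GOAL = 4  # states are 0..4; reaching state 4 ends the episode


def step(state, action):
    """Move one cell; the only reward is for arriving at the goal."""
    next_state = max(0, state - 1) if action == "left" else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            values = [q[(state, a)] for a in ACTIONS]
            # Explore at random sometimes, and whenever both actions look equal.
            if rng.random() < epsilon or values[0] == values[1]:
                action = rng.choice(ACTIONS)
            else:
                action = ACTIONS[values.index(max(values))]
            next_state, reward = step(state, action)
            future = 0.0 if next_state == GOAL else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Pick the highest-valued action in every non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]


policy = greedy_policy(train())
```

The agent is never told that moving right is correct; the preference emerges from the rewards it collects across episodes.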

Each learning strategy offers unique advantages depending on the specific challenges and available data. While supervised learning excels at well-defined problems with clear right and wrong answers, unsupervised learning shines when exploring unknown patterns in data. Reinforcement learning proves particularly valuable in scenarios requiring continuous adaptation to changing conditions.

| Learning Strategy | Description | Advantages | Disadvantages |
|---|---|---|---|
| Supervised Learning | Learning from labeled training data with clear examples of correct outputs for given inputs. | Effective for pattern recognition and prediction based on past data. | Requires large amounts of labeled data; may not perform well on unseen data. |
| Unsupervised Learning | Discovering hidden patterns and relationships within unlabeled data. | Useful for exploring unknown patterns in data. | Less precise as it does not have clear correct answers; can be more complex to implement. |
| Reinforcement Learning | Learning through direct interaction with the environment, receiving feedback in the form of rewards or penalties. | Adaptable to changing conditions; effective in dynamic environments. | Can require extensive time and computational resources to train effectively. |

Learning is not a one-size-fits-all process – the key is selecting the right strategy for each unique challenge. The most effective autonomous systems often combine multiple learning approaches to achieve optimal results.

Michelle Connolly, Educational Consultant

The beauty of these learning strategies lies in their ability to mimic and even enhance natural learning processes. By understanding and implementing these approaches thoughtfully, we can create autonomous systems that not only adapt to their environment but continuously evolve to meet new challenges with increasing sophistication.

Real-World Applications of AI Agent Architectures

View of a self-driving Tesla car’s dashboard and city street.

Artificial intelligence agents have significantly impacted various industries through advanced decision-making architectures and autonomous control systems. These implementations highlight how AI agents can perceive, reason, and act in complex environments.

In robotics and autonomous vehicles, AI agent architectures have advanced from basic rule-based systems to sophisticated algorithms capable of real-time decision making. According to recent industry studies, modern autonomous driving systems use interconnected AI agents for environmental perception and vehicle control, ensuring safe navigation in complex scenarios.

The industrial automation sector has seen significant changes through AI agent implementations. These systems use distributed architectures where multiple agents collaborate to optimize manufacturing processes, monitor equipment health, and maintain quality control. For instance, embodied AI agents in mobile robotic platforms now navigate factory floors and interact with their environment using advanced sensors and actuators.

| Industry | AI Agent Application | Impact |
|---|---|---|
| Financial Services | Risk management, fraud detection, customer service | Enhanced risk management, real-time fraud detection, improved customer trust and satisfaction |
| Healthcare | Administrative tasks, patient care, diagnostic support | 30% increase in operational efficiency, streamlined administrative tasks, improved patient care |
| Retail | Inventory management, personalized customer experiences, sales optimization | 20% increase in sales, optimized stock levels, tailored product recommendations |
| Manufacturing | Production processes, quality control, predictive maintenance | 15% reduction in operational costs, reduced downtime, improved efficiency |
| Transportation | Autonomous vehicles, fleet management | Enhanced driving safety, efficient fleet operations |
| Agriculture | Precision farming, crop management | Optimized crop management, data-driven insights for farmers |

A fascinating development in smart laboratories shows AI agents coordinating complex experimental workflows. A groundbreaking demonstration connected two robotic labs in Cambridge and Singapore through a dynamic knowledge graph, enabling autonomous experimentation and real-time collaboration. This illustrates how AI architectures can bridge geographical boundaries to accelerate scientific discovery.

The practical impact of these AI agent architectures extends beyond single-domain applications. Modern implementations often integrate multiple AI agents specializing in different tasks while working cohesively. For example, in autonomous vehicles, separate agents handle perception (identifying objects and road conditions), planning (determining optimal routes), and control (managing vehicle operations), all while maintaining constant communication to ensure safe and efficient operation.
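The division of labor among perception, planning, and control agents can be sketched as a simple pipeline in which each agent consumes the previous agent's output. Everything below is a toy illustration: the function names, confidence threshold, distance limits, and command values are hypothetical and bear no relation to a real driving stack.

```python
def perception_agent(sensor_frame):
    """Keep only detections the (hypothetical) detector is confident about."""
    return [d for d in sensor_frame if d["confidence"] >= 0.5]


def planning_agent(obstacles):
    """Choose a maneuver based on the distance to the nearest obstacle."""
    nearest = min((o["distance_m"] for o in obstacles), default=float("inf"))
    if nearest < 10:
        return "brake"
    if nearest < 30:
        return "slow"
    return "cruise"


def control_agent(decision):
    """Translate the plan into actuator commands."""
    commands = {
        "brake": {"throttle": 0.0, "brake": 1.0},
        "slow": {"throttle": 0.2, "brake": 0.0},
        "cruise": {"throttle": 0.6, "brake": 0.0},
    }
    return commands[decision]


def drive_step(sensor_frame):
    # Each agent handles one concern and passes its result to the next.
    return control_agent(planning_agent(perception_agent(sensor_frame)))


frame = [
    {"label": "pedestrian", "distance_m": 8.0, "confidence": 0.9},
    {"label": "shadow", "distance_m": 2.0, "confidence": 0.2},
]
command = drive_step(frame)
```

Keeping the agents as separate functions with plain data passed between them makes it possible to test or replace one stage without touching the others.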

These real-world applications demonstrate the versatility and capability of AI agent architectures in solving complex problems across industries. As these systems evolve, there is increased emphasis on making them more robust, adaptable, and capable of handling the unpredictability of real-world environments.

Future Directions in AI Agent Development

The evolution of AI agents is reshaping our interaction with technology. Advanced learning algorithms are enabling more sophisticated decision-making capabilities that adapt and improve over time. These developments signal a shift from simple automation to intelligent collaboration between humans and machines.

The integration of AI agents with human activities represents an exciting frontier. We are moving beyond basic chatbots and automated tasks toward AI agents that can understand context, anticipate needs, and seamlessly participate in complex workflows. The ability of these systems to learn from human feedback while maintaining autonomous operation marks a significant leap in human-AI cooperation.

Autonomous decision-making capabilities are advancing quickly, with AI agents now capable of interpreting data, generating new content, and making choices informed by patterns in real-world data. This suggests a future where AI agents handle increasingly complex tasks with minimal human oversight while maintaining reliability and accountability.

SmythOS offers robust solutions that simplify the development and management of AI agents. Its visual workflow builder and reusable components democratize AI development, allowing organizations to create sophisticated agents without extensive coding knowledge. This accessibility is crucial as AI agent deployment becomes standard practice across industries.

The convergence of enhanced learning algorithms, seamless human integration, and advanced decision-making capabilities will likely accelerate the adoption of AI agents across sectors. As these technologies mature, platforms that can effectively orchestrate and manage AI agents while maintaining security and scalability will become vital to organizational success.