Imagine an AI agent as a master chess player, carefully observing the board, planning several moves ahead, and executing winning strategies. Just like a skilled player needs the right mental framework to excel, AI agents require thoughtfully designed architectures to effectively navigate their environment and accomplish goals. Agent architecture design has become the blueprint that determines whether an AI system will be a grandmaster or a novice.

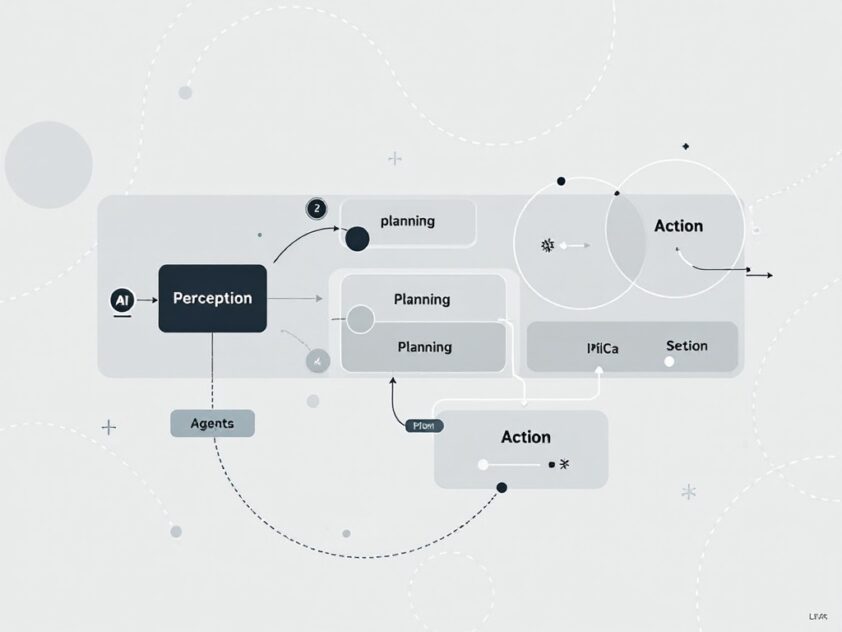

At its core, agent architecture design is the foundational framework that shapes how artificial intelligence systems perceive, analyze, and interact with their surroundings. Much like the human brain’s intricate neural pathways, these architectures consist of specialized modules working in harmony—from gathering sensory input to making complex decisions and taking action. The elegance of a well-designed agent architecture lies in how seamlessly these components collaborate to produce intelligent behavior.

The profiling module acts as the agent’s sensory system, gathering and interpreting data from the environment much like our eyes and ears process the world around us. This critical component helps the agent build an accurate understanding of its context and situation, forming the foundation for informed decision-making.

Working alongside perception is the planning module—the strategic center that evaluates options and charts optimal paths toward goals. Think of it as the agent’s inner strategist, analyzing various scenarios and their potential outcomes before committing to a course of action. This forward-thinking capability allows agents to navigate complex situations with remarkable sophistication.

The action module transforms plans into reality, serving as the bridge between decision and execution. Just as a pianist’s fingers translate musical intent into sound, this module converts the agent’s chosen strategies into concrete steps that effect change in the environment. The precision and reliability of the action module often determine whether carefully laid plans succeed or fail.

Behind all these components lies a powerful learning engine that continuously refines the agent’s capabilities through experience. By analyzing successes, failures, and everything in between, modern AI architectures can adapt and improve over time—a testament to how far we’ve come in replicating aspects of human cognition.
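To make the division of labor concrete, here is a minimal sketch of how the modules described above might be wired into a single loop. The `Agent` class, its field names, and the thermostat scenario are illustrative assumptions, not an API from any particular framework.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical four-module agent; each module is a plain callable."""
    profile: Callable[[dict], dict]        # raw input -> structured observation
    plan: Callable[[dict], list[str]]      # observation -> ordered steps
    act: Callable[[str], bool]             # step -> success flag
    history: list[tuple[str, bool]] = field(default_factory=list)

    def step(self, raw_input: dict) -> list[tuple[str, bool]]:
        observation = self.profile(raw_input)
        outcomes = [(s, self.act(s)) for s in self.plan(observation)]
        self.history.extend(outcomes)      # experience kept for a learning component
        return outcomes


# Toy modules (assumed for illustration): a thermostat-style agent.
agent = Agent(
    profile=lambda raw: {"too_cold": raw["temp_c"] < 18},
    plan=lambda obs: ["heater_on"] if obs["too_cold"] else [],
    act=lambda step: True,
)
result = agent.step({"temp_c": 15})
```

Keeping each module behind a narrow interface like this is one way to let a profiling, planning, or action component be swapped out without touching the others.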

Understanding Profiling Modules

The profiling module functions as the primary sensory system for an artificial intelligence agent, similar to how human senses help us understand and navigate our world. Just like our eyes, ears, and other senses gather information about our surroundings, this crucial component continuously collects and processes data from the agent’s environment.

At its core, the profiling module acts as an information processing pipeline. Raw sensory input—such as visual data from cameras, audio from microphones, or readings from other sensors—flows in and is transformed into structured data that the AI agent can utilize for decision-making. This process resembles how our brains convert raw sensory signals into meaningful perceptions.

Functioning like a sophisticated filtering system, the profiling module separates relevant signals from background noise. For example, an autonomous vehicle’s profiling module might analyze camera feeds to identify important objects like pedestrians, traffic signs, and other vehicles while dismissing irrelevant details in the background. The module does not simply collect data; it actively processes and organizes sensory information into recognizable patterns that the AI agent can act upon.
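The filtering step can be sketched in a few lines. This example assumes an upstream detector has already produced labeled detections with confidence scores; the label set, the 0.5 threshold, and the `Detection` type are hypothetical choices made for illustration.

```python
from dataclasses import dataclass

RELEVANT_LABELS = {"pedestrian", "traffic_sign", "vehicle"}  # assumed label set
MIN_CONFIDENCE = 0.5                                         # assumed threshold


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float
    distance_m: float


def profile(raw_detections: list[dict]) -> list[Detection]:
    """Keep confident, relevant detections and return them nearest first."""
    kept = [
        Detection(d["label"], d["confidence"], d["distance_m"])
        for d in raw_detections
        if d["label"] in RELEVANT_LABELS and d["confidence"] >= MIN_CONFIDENCE
    ]
    return sorted(kept, key=lambda det: det.distance_m)


frame = [
    {"label": "billboard", "confidence": 0.9, "distance_m": 30.0},
    {"label": "vehicle", "confidence": 0.8, "distance_m": 25.0},
    {"label": "pedestrian", "confidence": 0.7, "distance_m": 12.0},
    {"label": "pedestrian", "confidence": 0.2, "distance_m": 5.0},
]
observations = profile(frame)
```

Here the billboard and the low-confidence pedestrian are dropped, and the remaining detections reach the planner as structured objects sorted by distance.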

The profiling module is essentially the bridge between the raw environment and the decisions an agent makes about it. It’s the difference between collecting data and actually understanding a situation.

Think of it as a personal assistant that not only gathers facts but also organizes them in a way that enhances decision-making efficiency. Just as our brains learn to prioritize relevant sensory information and ignore distractions, the profiling module enables AI agents to develop increasingly sophisticated methods for processing sensory inputs. This adaptive capability allows agents to become more effective at understanding and responding to their environments over time.

The accuracy and efficiency of the profiling module directly impact an AI agent’s ability to make sound decisions. Just as human sensory impairments can hinder our ability to navigate the world safely, limitations in an agent’s profiling capabilities can restrict its effectiveness in performing designated tasks. Through continuous refinement and optimization of these sensory systems, AI agents can achieve higher levels of autonomy and intelligence in their operations.

The Role of Planning Modules

Planning modules serve as the strategic brain of autonomous AI agents, transforming raw data into actionable plans. These sophisticated components analyze information gathered by profiling modules to chart optimal paths toward achieving specific objectives. Much like a chess grandmaster evaluating possible moves, planning modules systematically process data to determine the most effective course of action.

Planning modules operate through a structured approach that breaks down complex goals into manageable subtasks. The process begins with analyzing the data collected from various sources, similar to how a self-driving car’s planning system processes sensor data to navigate safely through traffic. This initial analysis helps identify key patterns and priorities that inform the strategic planning process.
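One simple way to represent a goal that has been broken into subtasks is a dependency graph, which can then be ordered so that prerequisites always run first. The sketch below uses Python's standard `graphlib` module; the subtask names are invented for illustration and do not come from any real driving stack.

```python
from graphlib import TopologicalSorter

# Hypothetical decomposition: each subtask maps to the subtasks it depends on.
subtasks = {
    "read_sensors": set(),
    "detect_obstacles": {"read_sensors"},
    "choose_route": {"detect_obstacles"},
    "set_speed": {"choose_route"},
    "signal_turn": {"choose_route"},
}

# static_order() yields every subtask after all of its dependencies.
order = list(TopologicalSorter(subtasks).static_order())
```

The relative order of `set_speed` and `signal_turn` is not fixed, since neither depends on the other; a planner could run such independent subtasks in parallel.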

One of the most powerful features of planning modules is their ability to formulate dynamic strategies. Rather than following rigid, predetermined paths, these modules continuously adapt their plans based on new information and changing circumstances. For example, in a customer service AI agent, the planning module might adjust its response strategy based on analyzing the customer’s tone and previous interactions to provide more personalized solutions.

The importance of planning modules becomes particularly evident in complex environments where multiple variables must be considered simultaneously. These modules excel at balancing competing priorities and constraints to develop feasible plans. Consider how a supply chain AI agent’s planning module might weigh factors like inventory levels, shipping costs, and delivery deadlines to optimize logistics operations.
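Balancing competing priorities often reduces to two steps: discard options that violate hard constraints, then score what remains. The following is a minimal sketch under assumed weights and made-up plan data; a production planner would likely use a proper optimization method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShippingPlan:
    name: str
    cost: float           # shipping cost in an arbitrary currency unit
    days: int             # delivery time in days
    units_available: int  # stock on hand at the source warehouse


def choose_plan(plans, units_needed, deadline_days, cost_weight=1.0, day_weight=50.0):
    """Drop infeasible plans, then pick the lowest weighted cost-plus-time score."""
    feasible = [
        p for p in plans
        if p.units_available >= units_needed and p.days <= deadline_days
    ]
    if not feasible:
        return None  # no plan satisfies the hard constraints
    return min(feasible, key=lambda p: cost_weight * p.cost + day_weight * p.days)


plans = [
    ShippingPlan("air", cost=900.0, days=2, units_available=500),
    ShippingPlan("rail", cost=400.0, days=6, units_available=500),
    ShippingPlan("sea", cost=150.0, days=20, units_available=500),
    ShippingPlan("local", cost=300.0, days=1, units_available=50),
]
best = choose_plan(plans, units_needed=200, deadline_days=7)
```

With these numbers, `sea` misses the deadline and `local` lacks stock, so the choice is between `air` (score 1000) and `rail` (score 700), and `rail` wins. Changing `day_weight` shifts how strongly speed is favored over cost.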

Modern planning modules often incorporate advanced decision-making algorithms that can evaluate thousands of potential scenarios before selecting the optimal approach. This capability enables AI agents to make sophisticated choices that account for both immediate needs and long-term consequences. For instance, a financial trading AI’s planning module might analyze market trends, risk factors, and historical data to develop investment strategies that balance potential returns with risk management.

The effectiveness of planning modules hinges on their ability to translate complex data analysis into clear, executable actions. They bridge the gap between raw information and practical implementation, ensuring that AI agents can pursue their objectives with precision and purpose. This translation process is crucial for maintaining the autonomy and efficiency of AI systems across various applications and industries.

Executing Actions with Action Modules

Action modules serve as the hands and feet of AI agents, translating carefully crafted plans into real-world results. Like a conductor directing an orchestra, these modules coordinate multiple components simultaneously to execute tasks with precision and purpose. Their sophisticated capabilities allow AI agents to move beyond theoretical planning into meaningful action.

The action module operates through a methodical process of translating high-level directives into specific, executable steps. When an AI agent determines a course of action, the action module breaks down complex plans into discrete, manageable tasks that can be carried out sequentially or in parallel. For example, a smart home system’s action module might coordinate adjusting the thermostat, dimming lights, and activating security systems based on a single ‘Good Night’ command.
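The ‘Good Night’ example can be sketched as a command that fans out into independent steps run in parallel. The device functions below are placeholders that only return strings; real ones would call device APIs, which are outside the scope of this sketch.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical device actions; real ones would call device APIs.
def set_thermostat():
    return "thermostat set to 18C"


def dim_lights():
    return "lights dimmed"


def arm_security():
    return "security armed"


COMMANDS = {"good night": [set_thermostat, dim_lights, arm_security]}


def execute(command: str) -> list[str]:
    """Run every step for a command in parallel; results keep the step order."""
    steps = COMMANDS.get(command.lower(), [])
    with ThreadPoolExecutor(max_workers=max(1, len(steps))) as pool:
        futures = [pool.submit(step) for step in steps]
        return [f.result() for f in futures]


results = execute("Good Night")
```

Steps that depend on one another would instead be run sequentially; the mapping from command to steps is where that choice would be encoded.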

Timing and coordination play crucial roles in effective action execution. As noted by SmythOS researchers, action modules must carefully orchestrate multiple operations while maintaining awareness of system states and environmental conditions. This orchestration ensures that actions occur in the correct sequence and adapt to changing circumstances.

One of the most important features of action modules is their ability to provide execution feedback. When an action is taken, the module monitors its implementation and reports on success or failure. This feedback loop is essential for continuous improvement and allows the AI agent to adjust its strategies based on real-world outcomes. For instance, if an automated trading system’s action module detects that a particular trade execution failed, it can immediately notify the planning module to develop alternative approaches.
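A feedback loop of this kind can be sketched as an action runner that turns every outcome into a result object and hands it to the planner. The `ActionResult` and `Planner` types and the trade names are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionResult:
    action: str
    ok: bool
    detail: str = ""


class Planner:
    """Stand-in planner that records failures so it can replan around them."""

    def __init__(self):
        self.failed: list[ActionResult] = []

    def on_feedback(self, result: ActionResult) -> None:
        if not result.ok:
            self.failed.append(result)


def run_action(name, fn, planner: Planner) -> ActionResult:
    """Execute fn, convert the outcome into a result, and report it to the planner."""
    try:
        fn()
        result = ActionResult(name, ok=True)
    except Exception as exc:  # any error counts as a failed execution here
        result = ActionResult(name, ok=False, detail=str(exc))
    planner.on_feedback(result)
    return result


def reject_order():
    raise RuntimeError("order rejected")


planner = Planner()
first = run_action("buy_100_shares", lambda: None, planner)
second = run_action("sell_50_shares", reject_order, planner)
```

After these two calls, the planner holds one failed result for `sell_50_shares`, which it could use to choose an alternative approach.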

The efficiency of the action module can make or break an AI agent’s performance. A brilliantly conceived plan is worthless if it can’t be executed properly.

Action modules must also maintain flexibility in their execution strategies. They often incorporate contingency handling and error recovery mechanisms to deal with unexpected situations. This adaptability ensures that AI agents can continue functioning effectively even when faced with unforeseen circumstances or partial system failures. For example, a manufacturing robot’s action module might automatically adjust its movements if it encounters an obstruction, rather than simply halting operations.
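Contingency handling is often built from two simple ingredients: bounded retries and a fallback path. Here is a minimal sketch, assuming a hypothetical `Arm` class whose direct path is blocked for a set number of attempts.

```python
def execute_with_fallback(primary, fallback, max_attempts=3):
    """Try primary up to max_attempts times, then use fallback instead of halting."""
    for attempt in range(1, max_attempts + 1):
        try:
            return primary(), f"primary succeeded on attempt {attempt}"
        except RuntimeError:
            continue  # obstruction reported; try again
    return fallback(), "fallback used"


class Arm:
    """Hypothetical robot arm whose direct path is blocked for the first N tries."""

    def __init__(self, blocked_tries):
        self.blocked_tries = blocked_tries

    def direct_path(self):
        if self.blocked_tries > 0:
            self.blocked_tries -= 1
            raise RuntimeError("obstruction detected")
        return "moved via direct path"

    def detour_path(self):
        return "moved via detour"


arm = Arm(blocked_tries=1)
outcome = execute_with_fallback(arm.direct_path, arm.detour_path)

stuck = Arm(blocked_tries=10)
outcome_stuck = execute_with_fallback(stuck.direct_path, stuck.detour_path)
```

The first arm recovers on its second attempt, while the second arm exhausts its retries and takes the detour rather than stopping.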

| AI System | Task | Description |
|---|---|---|
| CogAgent | Visual Question Answering (VQA) | Answers questions based on GUI screenshots |
| Gorilla | API Calls | Executes API calls based on natural language queries |
| Rabbit R1 | Task Management | Manages tasks like controlling music and booking transportation |
| Smart Home System | Home Automation | Coordinates adjusting thermostat, lights, and security systems |
| Automated Trading System | Trade Execution | Executes trades and develops alternative strategies based on feedback |

Learning Strategies for Continuous Improvement

Modern AI agents employ sophisticated learning strategies that enable them to adapt and evolve, much like how a master chess player refines their game through experience. These intelligent systems leverage two primary approaches to achieve continuous improvement: reinforcement learning and supervised learning.

Reinforcement learning represents a dynamic approach where AI agents learn through trial and error, similar to how children master new skills. The agent interacts with its environment, receives feedback in the form of rewards or penalties, and adjusts its behavior accordingly. Performance often dips early on while the agent explores unfamiliar actions, a pattern that loosely resembles the Productivity J-Curve described for technology adoption, but the feedback gathered along the way typically leads to significant improvements over time.
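The reward-driven adjustment described above can be illustrated with tabular Q-learning, one common reinforcement learning method (the article does not name a specific algorithm, so this choice is an assumption). The learning rate, discount factor, and state names are arbitrary example values.

```python
from collections import defaultdict

ALPHA = 0.5   # learning rate (assumed value)
GAMMA = 0.9   # discount factor (assumed value)

q = defaultdict(float)  # maps (state, action) -> estimated value, default 0.0


def q_update(state, action, reward, next_state, actions):
    """One tabular Q-learning step: move Q(s, a) toward reward + gamma * max Q(s', a')."""
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + GAMMA * best_next
    q[(state, action)] += ALPHA * (target - q[(state, action)])
    return q[(state, action)]


actions = ["left", "right"]
v1 = q_update("s0", "right", reward=1.0, next_state="s1", actions=actions)
v2 = q_update("s0", "right", reward=1.0, next_state="s1", actions=actions)
```

Each rewarded experience moves the estimate for `("s0", "right")` halfway toward the target: from 0.0 to 0.5 on the first update, then to 0.75 on the second.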

Supervised learning, in contrast, follows a more structured path. The AI system learns from labeled examples, much like a student learning from solved problems. This approach excels in scenarios where clear right and wrong answers exist. The algorithm analyzes patterns in the training data to make increasingly accurate predictions over time.
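As a small illustration of learning from labeled examples, the sketch below implements a nearest-centroid classifier in plain Python. The technique, the two-feature data, and the risk labels are all chosen for brevity and are not drawn from the article.

```python
from collections import defaultdict


def fit_centroids(samples):
    """Average the feature vectors of each label; samples are (features, label) pairs."""
    sums, counts = {}, defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + x for s, x in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def dist(center):
        return sum((c - x) ** 2 for c, x in zip(center, features))
    return min(centroids, key=lambda label: dist(centroids[label]))


training = [
    ([1.0, 1.0], "low_risk"), ([2.0, 1.0], "low_risk"),
    ([8.0, 9.0], "high_risk"), ([9.0, 8.0], "high_risk"),
]
centroids = fit_centroids(training)
label = predict(centroids, [7.5, 8.0])
```

Training reduces the labeled examples to one average point per class, and prediction assigns a new input to the nearest of those points, so `[7.5, 8.0]` is classified as `high_risk`.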

The beauty of these learning strategies lies in their complementary nature. While reinforcement learning shines in dynamic environments where the best course of action isn’t always clear, supervised learning excels at pattern recognition and classification tasks. Together, they form a powerful toolkit for continuous improvement.

The impact of these learning strategies extends beyond mere performance enhancement. AI systems using these techniques can develop sophisticated decision-making capabilities that evolve with experience. For example, in healthcare diagnostics, AI models can continuously refine their ability to identify diseases by learning from new cases while maintaining accuracy on previously learned patterns.

The ability of AI to learn and adapt through these strategies represents one of the most promising aspects of artificial intelligence. It’s not just about getting better at specific tasks – it’s about developing systems that can autonomously improve over time.

Julie Cline, AI Transformation Expert

As these learning strategies continue to evolve, we’re seeing increasingly sophisticated applications across various industries. From optimizing supply chain operations to enhancing customer service interactions, AI agents equipped with these learning capabilities are transforming how businesses approach complex challenges.

Challenges in Agent Architecture Design

Building intelligent AI agents presents formidable challenges that developers must carefully navigate. Like assembling an orchestra in which every section must play in harmony, agent architecture design requires balancing multiple complex elements while maintaining system stability and performance.

One of the most pressing challenges lies in managing uncertainty. AI agents must make decisions in dynamic environments where information is often incomplete or ambiguous. For instance, an autonomous agent processing natural language requests needs to handle variations in user input, context shifts, and unclear intentions while still providing reliable responses.

Scalability poses another significant hurdle in agent architecture design. As research indicates, AI systems must be resilient to failures and capable of handling unexpected situations. When workloads increase or requirements change, the architecture should adapt seamlessly without compromising performance or reliability.

Integration complexities also create substantial obstacles. Modern agent architectures must connect smoothly with existing systems while maintaining security and performance—a delicate balancing act that becomes exponentially more challenging as system scope expands. This often requires breaking down traditional silos between specialties and fostering meaningful interdisciplinary collaboration.

Data bias represents a particularly insidious challenge in agent development. When autonomous agents learn from flawed or limited datasets, they risk perpetuating inequalities through their decision-making processes. Creating balanced, representative training data requires careful curation and continuous monitoring to ensure fair and effective agent behavior.

Training data biases pose a fundamental challenge in developing autonomous agents that operate fairly and effectively. These biases often emerge when datasets lack proper representation across different demographics, scenarios, and use cases.

Technical compatibility issues frequently arise when integrating agent architectures with legacy systems. Many existing infrastructures weren’t designed with AI agent integration in mind, creating significant communication barriers. Organizations must implement innovative strategies like middleware solutions and incremental integration techniques to bridge these technological divides.
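A middleware layer of the kind mentioned above is often just an adapter that hides a legacy wire format behind a cleaner interface. The sketch below is illustrative only: `LegacyInventory`, its pipe-delimited records, and the hard-coded quantity are invented stand-ins for a real legacy system.

```python
class LegacyInventory:
    """Stand-in for a legacy system that only speaks fixed-format text records."""

    def query(self, record: str) -> str:
        sku = record.split("|")[1]
        return f"OK|{sku}|0042"  # hard-coded reply for the sake of the example


class InventoryAdapter:
    """Middleware layer: gives the agent a dict-based call and hides the wire format."""

    def __init__(self, legacy: LegacyInventory):
        self._legacy = legacy

    def stock_level(self, sku: str) -> dict:
        status, found_sku, qty = self._legacy.query(f"GET|{sku}").split("|")
        return {"sku": found_sku, "quantity": int(qty), "ok": status == "OK"}


adapter = InventoryAdapter(LegacyInventory())
level = adapter.stock_level("A-100")
```

Because the agent only sees `stock_level`, the legacy system can later be replaced or wrapped differently without changes to the agent's planning or action code, which is what makes incremental integration practical.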

Despite these challenges, promising solutions are emerging. Advanced monitoring systems, visual debugging environments, and standardized development frameworks are transforming how developers approach agent architecture creation. Success lies in adopting a holistic approach that considers not just technical excellence, but also ethical implications, user needs, and long-term maintainability.

| Challenge | Solution |
|---|---|
| Managing Uncertainty | Develop adaptive decision-making algorithms that can handle incomplete or ambiguous information. |
| Scalability | Design resilient architectures that can seamlessly adapt to increased workloads and changing requirements. |
| Integration Complexities | Utilize middleware solutions and incremental integration techniques to ensure smooth connection with existing systems. |
| Data Bias | Curate balanced and representative training data and continuously monitor for fairness and effectiveness. |
| Technical Compatibility | Implement innovative strategies to bridge communication barriers between AI agents and legacy systems. |

Conclusion: Future of Agent Architecture Design

The evolution of AI agent architectures stands at a pivotal moment, where addressing current challenges promises to unlock unprecedented levels of autonomy and effectiveness. Enhanced reasoning capabilities and more sophisticated multi-agent collaboration are reshaping how AI systems operate and interact.

The industry’s shift toward more dynamic and adaptable architectures reflects a deeper understanding of what makes AI agents truly effective. Key developments in areas like state management, tool integration, and decision-making logic are pushing the boundaries of what autonomous systems can achieve. As these architectures mature, we’re witnessing the emergence of more robust and reliable AI systems capable of handling increasingly complex tasks.

Platforms like SmythOS are leading this transformation, offering comprehensive solutions that simplify the development and deployment of autonomous agents. By providing intuitive tools for building and orchestrating AI systems, such platforms democratize access to advanced AI capabilities while maintaining the high standards necessary for enterprise-grade applications.

The future of agent architecture design appears remarkably promising. We can expect more sophisticated collaboration between agents, enhanced learning capabilities, and improved integration with existing systems. These advancements will likely transform how businesses operate, making AI agents more accessible and effective across various industries.

The journey toward more capable and autonomous AI systems continues, driven by innovative architectural approaches and powerful development platforms. Moving forward, the focus remains on creating architectures that enhance AI capabilities and ensure responsible and effective deployment of these transformative technologies.