The rapid evolution of AI development tools has led to a variety of platforms for building intelligent applications. Among these, SmythOS and LangChain have emerged as notable solutions, each addressing the challenge of creating and deploying AI-driven agents in distinct ways.

SmythOS is a full-fledged AI automation and orchestration platform geared toward fast, enterprise-ready development with minimal coding, whereas LangChain is an open-source framework that provides building blocks for developers to create advanced AI applications from scratch. This article provides a structured comparison of SmythOS and LangChain, examining their architectures, capabilities, security measures, scalability, integration ecosystems, deployment options, and developer experiences. By analyzing these key aspects, we can understand the strengths and trade-offs of each platform and identify which scenarios favor one over the other.

As organizations increasingly seek to leverage large language models (LLMs) and AI agents in production, choosing the right platform is critical.

Since its launch in 2022, LangChain has gained popularity among developers for its flexibility and rich library of components.

SmythOS, on the other hand, is a newer entrant that aims to streamline AI agent development for businesses by offering an “AI operating system” with an intuitive interface and robust built-in features.

In the following sections, we delve into an overview of each platform and then present a detailed side-by-side comparison across various criteria.

The goal is to understand where each shines and how they differ – especially for teams looking to deploy AI in real-world business environments.

Feature Comparison at a Glance

The table below provides a quick feature overview:

| Capability | SmythOS | LangChain |
|---|---|---|
| No-Code Visual Workflow Builder | ✅ | ⚠️ |
| AI-Assisted Workflow Generation (Weaver) | ✅ | ❌ |
| Multi-Agent Orchestration (Concurrent Agents) | ✅ | ⚠️ |
| Dedicated Secure Execution Runtime | ✅ | ❌ |
| Alignment & Policy Guardrails | ✅ | ❌ |
| Extensive Integration Library (APIs & Models) | ✅ | ✅ |

✅ = supported natively; ❌ = not supported; ⚠️ = partially supported or requires custom work

As shown above, SmythOS offers comprehensive out-of-the-box capabilities across these categories, whereas LangChain often requires additional custom development or third-party tools to achieve similar functionality.

Extended Feature Comparison

The table below provides a high-level comparison of core features and capabilities between SmythOS and LangChain.

| Feature | SmythOS | LangChain |
|---|---|---|
| Core Development | | |
| Visual Drag-and-Drop Builder | ✅ Yes | ⚠️ Requires external software |
| No-Code Options for Workflow | ✅ Yes | ❌ No |
| Low-Code Extensibility | ✅ Yes | ⚠️ Partial |
| Python/API Programming | ⚠️ Limited | ✅ Yes |
| Autonomous Agents | | |
| Built-in Autonomous Agent Support | ✅ Yes | ✅ Yes |
| Multi-step Reasoning | ✅ Yes | ✅ Yes |
| Multi-Agent Collaboration | ✅ Yes | ⚠️ Partial |
| Agent Work Scheduler (Task Scheduling) | ✅ Yes | ⚠️ Requires external scheduler |
| Integrations & Data | | |
| Pre-built API Integrations (CRM, Slack, etc.) | ✅ Yes | ⚠️ Many via community, code required |
| Pre-built AI Model Integrations | ✅ 1M+ models | ⚠️ Many via libraries |
| Zapier Integration | ✅ Yes | ✅ Yes |
| Data Lake / Vector DB Included | ✅ Built-in | ❌ No (external required) |
| Document & Web Data Loaders | ✅ Yes (via component and data lake) | ✅ Yes (via loaders) |
| Knowledge Base (RAG) Support | ✅ Native | ✅ Via external vector store |
| Security & Compliance | | |
| Sandboxed Execution | ✅ Yes | ❌ No |
| Role-Based Access Control | ✅ Yes | ❌ No |
| Action/Tool Allow & Deny Lists | ✅ Yes | ❌ No (manual) |
| Constrained AI Alignment | ✅ Yes | ❌ No |
| Data Encryption in Platform | ✅ Yes | ❌ No |
| Audit Logging of Agent Actions | ✅ Yes | ❌ No (must implement) |
| Execution Architecture | | |
| Dedicated Runtime Engine | ✅ Yes | ❌ No (runs inside the host application) |
| Dynamic Code Generation | ✅ Yes | ✅ Yes (agent can generate code) |
| Parallel Task Execution | ✅ Yes (native async support) | ⚠️ Partial (requires threading/async) |
| Reliability (Auto-Retry/Failover) | ✅ Yes | ⚠️ Partial (`with_retry`/`with_fallbacks`, configured in code) |
| Performance Optimizations | ✅ Yes (optimized internals) | ⚠️ Depends on implementation |
| Monitoring & Debugging | | |
| Live Logs & Traces | ✅ Yes | ⚠️ Via LangSmith or custom |
| Error Alerts/Handling | ✅ Yes | ❌ Not built-in |
| Human-in-the-loop Controls | ✅ Yes (escalation possible) | ❌ No (custom code) |
| Deployment & DevOps | | |
| One-Click/Managed Deployment | ✅ Yes | ❌ No |
| Cloud Service Availability | ✅ Yes | ❌ N/A (self-deploy) |
| On-Premises Deployment | ✅ Yes | ✅ Yes (as a custom app) |
| Edge Deployment | ✅ Supported via runtime | ✅ Possible (with limitations) |
| REST API Endpoint (Webhook) | ✅ Yes | ✅ Yes (if coded) |
| Custom Domain Support | ✅ Yes | ⚠️ Yes (if deployed behind a custom domain) |
| Separate Staging/Prod Environments | ✅ Yes | ❌ No inherent support |
| Scheduling (Cron) | ✅ Built-in | ⚠️ Use external scheduler |
| CI/CD Integration | ⚠️ N/A (platform managed) | ✅ Yes (standard code CI/CD) |
| Support & Community | | |
| Official Support (Vendor) | ✅ Yes | ⚠️ Community support |
| Open-Source Community | ❌ Proprietary | ✅ Large OSS community |
| Frequent Updates | ✅ Planned/controlled | ✅ Rapid (community-driven) |
| Learning Resources | ✅ Docs, community, vendor help | ✅ Abundant community tutorials |

✅ = supported natively; ❌ = not supported; ⚠️ = partially supported or requires custom work

What’s an AI Agent?

Not all AI agents are created equal. Everyone talks about having AI agents, but that doesn’t mean we’re talking about the same thing.

Some are really, really basic: give an LLM a prompt, some skills (like web browsing), and some data. This is what most people call an agent, and it lacks safety: the model has unrestricted freedom to use its skills however it likes (including, say, deleting your drive), which is not enterprise-friendly. SmythOS is fundamentally different, providing security, control, and transparency.

Some are advanced, but coded: with code you can build anything, but development is slow, and the result is hard to follow, hard to debug, and hard to maintain. Code frameworks, meanwhile, are quick to get started with but can be too opinionated, and many developers dislike them.

Some are rebranded automation: some vendors take if-this-then-that automation, bolt on AI steps, and call the result “AI agents”. This kind of retrofit doesn’t fix the fundamental brittleness of RPA automations and barely meets the definition of an AI agent. SmythOS is truly agentic from the ground up.

Multi-agent frameworks: platforms that focus on orchestrating AI agents as teams are valuable, but a team is only as capable or safe as the individual agents in it, and these platforms expect you to build those agents in code first. Moreover, we expect the orchestration layer to be largely absorbed by AI reasoning models in 2025, which would leave such platforms with little moat. SmythOS instead focuses on building the safest, most aligned, and most powerful individual agents, so they can be orchestrated for maximum ROI.

SmythOS Overview

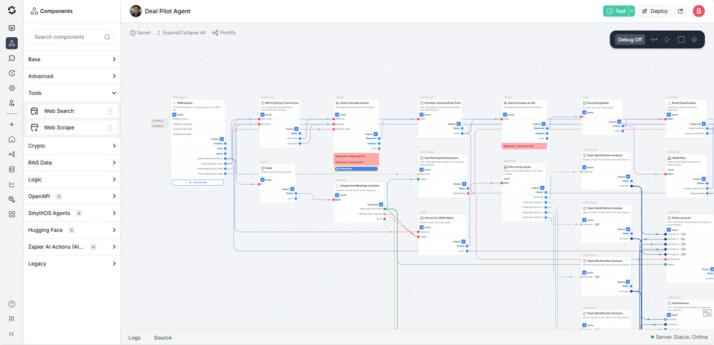

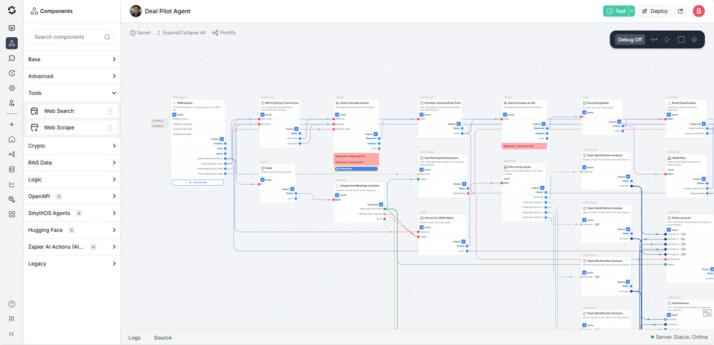

SmythOS is a comprehensive platform for building, deploying, and managing AI-driven agents and workflows. It combines advanced AI capabilities—such as multi-agent collaboration, long-term memory, and autonomous task execution—with an intuitive no-code/low-code interface. The goal of SmythOS is to democratize the creation of sophisticated AI solutions, enabling both software developers and non-technical users to design complex AI-driven workflows quickly. By abstracting away low-level complexities (like orchestrating multiple models or tools in code) while still allowing extensibility for developers, SmythOS lets teams focus on business logic and innovation rather than plumbing and integration.

In terms of real-world traction, SmythOS has already demonstrated significant adoption. The platform boasts over 11,500 users and 16,500 AI agents built using its tools, including deployments in development and marketing agencies. This early traction underscores SmythOS’s credibility and suggests that it addresses real needs across industries, from government to global enterprises. SmythOS advertises dramatic improvements in development speed and ease compared to traditional methods – reportedly enabling AI agent deployment up to 1000× faster than conventional approaches, thanks to features like real-time debugging and extensive pre-built integrations. In essence, SmythOS positions itself as an “AI operating system” for business, aiming to provide the flexibility and power of coding-centric frameworks (like LangChain) combined with the user-friendly experience of visual tools, while surpassing both in key enterprise features.

Some of SmythOS’s core features include a drag-and-drop workflow builder for creating AI agent logic, built-in memory and context handling for agents, support for orchestrating multiple agents that can collaborate on tasks, and a library of pre-integrated tools and APIs that agents can use (e.g. connecting to databases, applications, or web services). It also offers enterprise-oriented capabilities such as a hosted runtime environment for agents, audit logs of agent actions, security controls, and scheduling for autonomous agent tasks. These built-in features mean that many functionalities that would require custom development in other frameworks come out-of-the-box in SmythOS. Overall, SmythOS’s value proposition is to dramatically accelerate AI solution development (both in prototyping and production) while ensuring robustness, security, and scalability from the start.

LangChain Overview

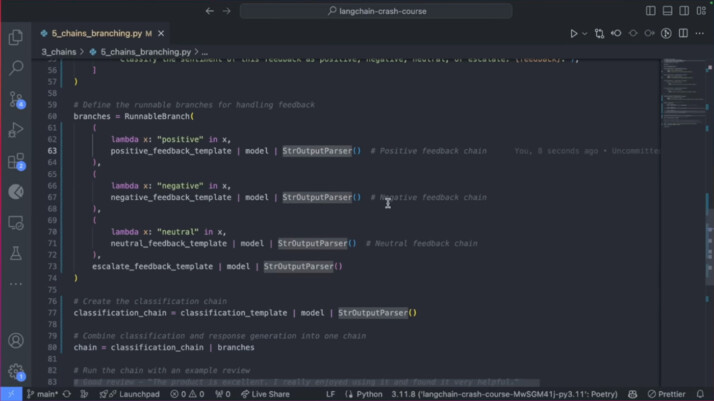

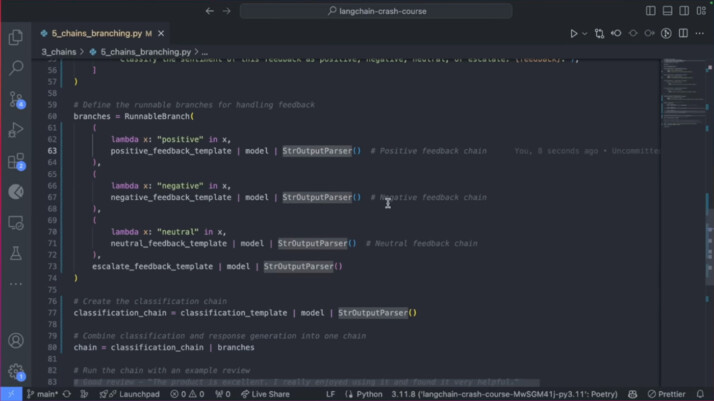

LangChain is an open-source framework that provides developers with a suite of tools and abstractions for building applications powered by large language models. Launched by Harrison Chase in October 2022, LangChain quickly rose to prominence in the AI developer community – by mid-2023 it was noted as one of the fastest-growing open-source projects on GitHub. LangChain’s primary strength lies in its flexibility and extensibility: it acts as a “glue” allowing LLMs to be connected with external data sources, APIs, and logical chains of prompts or actions. Essentially, LangChain offers modular components to handle various aspects of an LLM-powered application, such as prompt templates, memory (to store conversation context), chains for linking multiple steps or model calls, and “agents” that use LLMs to decide which actions (tools) to invoke next.

As a developer framework, LangChain requires coding in Python (or JavaScript, in its JS incarnation) to define the behavior of AI agents or applications. It does not provide a graphical interface – instead, developers write code to compose prompts, select language models, and integrate with tools. This code-first approach gives skilled developers fine-grained control over their AI systems and the ability to customize any part of the chain. However, it also means a steeper learning curve for those without programming expertise. In contrast to SmythOS’s out-of-the-box solutions, LangChain users often need to manually integrate external services or build additional infrastructure for features like monitoring, security, or deployment. LangChain “serves as a generic interface for nearly any LLM,” making it model-agnostic and versatile. Developers have used LangChain to build a wide range of LLM applications – from chatbots and intelligent assistants to query engines that connect language models with databases or documents.

One of LangChain’s key advantages is its vibrant open-source ecosystem. Being open-source, it has attracted a large community that contributes new modules and improvements. There are many community-driven integrations and extensions for LangChain, allowing it to connect with various APIs or services (for example, modules for web search, calculators, databases, etc., that an LLM agent can use). This means that while LangChain doesn’t come with a managed environment or a no-code UI, it benefits from community support and examples. It’s commonly used for rapid prototyping of LLM ideas in research and startups due to its flexibility. That said, using LangChain effectively in a production scenario typically requires significant software development effort and expertise in areas like prompt engineering, error handling, and system architecture. In short, LangChain provides the raw ingredients to build powerful AI-driven apps, but it’s up to the user to mix those ingredients correctly and safely.

Detailed Comparison Chart: SmythOS vs LangChain

To understand how SmythOS and LangChain stack up, the following table compares them across key aspects of functionality and enterprise readiness:

| Aspect | SmythOS | LangChain |

|---|---|---|

| Architecture & Design | Runtime-based orchestration – Agents run within an optimized SmythOS runtime environment (SRE) that ensures stable, low-latency execution without generating new code at runtime. SmythOS was built “agent-first,” supporting concurrent multi-agent workflows and structured execution flows out-of-the-box. | Framework/library approach – Agents are implemented by dynamically generating and executing code (Python) at runtime in user applications. LangChain itself is a library that developers incorporate into their code; it doesn’t provide a dedicated runtime, so execution flow and concurrency control must be handled by the developer’s infrastructure. |

| Security & Compliance | Built-in security guardrails – SmythOS enforces platform-level security policies such as sandboxed agent execution, role-based access control (RBAC), and audit logging of all action. Agents operate within strict boundaries to prevent unauthorized actions (no unrestricted code execution), and the platform is designed with enterprise compliance in mind. | Developer-managed security – LangChain leaves security to the application developer. By default, there are no integrated sandboxing or permission systems; an agent can execute whatever the LLM instructs if not manually constrained. This has led to known vulnerabilities (e.g., remote code execution exploits when LLMs generated dangerous code). Enterprises must build their own guardrails (access controls, logging, filtering) on top of LangChain to meet compliance and safety requirements. |

| Scalability & Performance | Concurrent, scalable execution – SmythOS supports parallel execution of multiple agents and is optimized for performance at scale. Its architecture handles concurrency, load balancing, and failure recovery internally. This means as workloads grow, SmythOS can manage distributing tasks across resources without requiring the user to write extra code. The runtime-first design avoids the overhead and unpredictability of on-the-fly code generation, resulting in more consistent performance. | Custom scalability required – LangChain by itself doesn’t scale automatically; it runs within whatever environment the developer provides. By default, LangChain chains run sequentially/synchronously (often on a single thread or process) unless one builds additional asynchronous or distributed systems around it. To scale a LangChain application to handle many requests or long-running agents, developers must implement their own infrastructure (e.g., using task queues, parallelism, or microservices). Performance can vary since each step involves dynamic LLM calls and possibly launching code, which adds latency compared to an optimized runtime. |

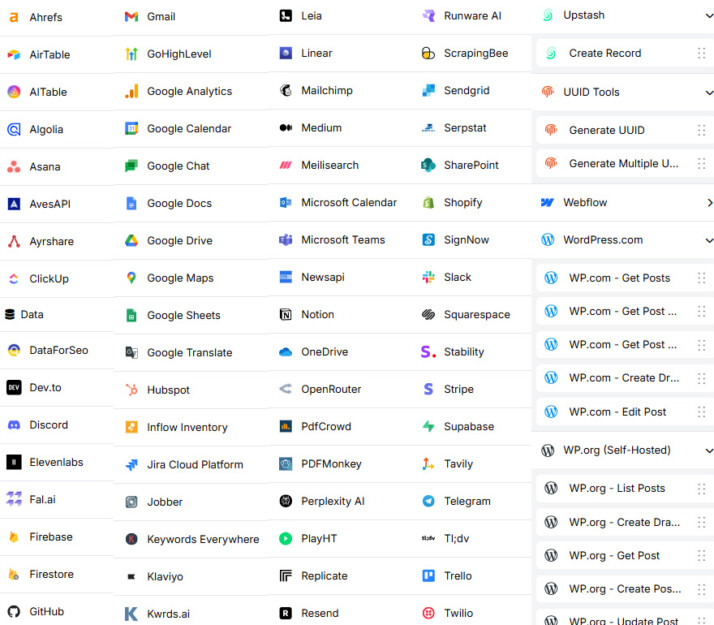

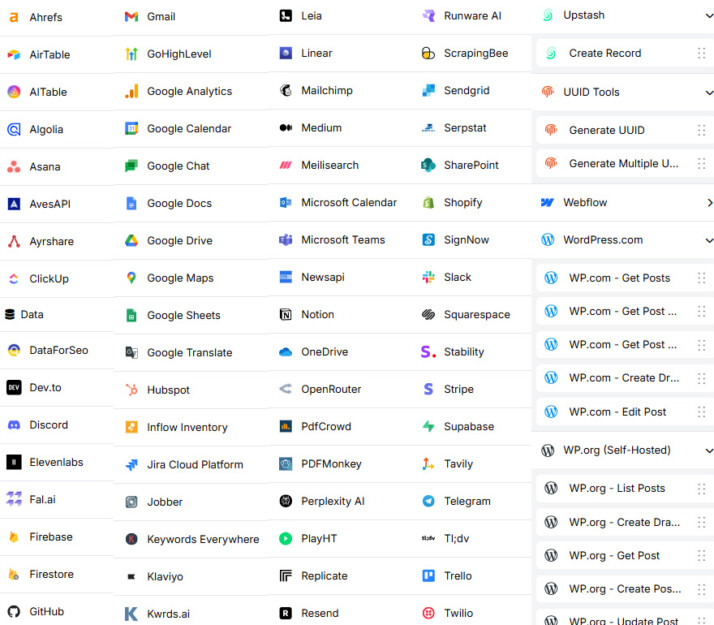

| Integrations & Ecosystem | Extensive pre-built integrations – SmythOS comes with a vast ecosystem of connectors and tools out-of-the-box. It natively supports integration with over 300,000 APIs and services (Slack, Stripe, databases, etc.) and offers thousands of pre-built actions/agent templates. This plug-and-play integration library means agents can immediately interact with enterprise applications or data sources without custom coding. The breadth of built-in integrations in SmythOS far exceeds what typical frameworks provide by default, enabling use-case-specific functionality (e.g., sending emails, querying databases, triggering RPA bots) with minimal setup. | Modular but manual integration – LangChain has a growing set of modules and community-contributed integrations, but nowhere near the comprehensive coverage of SmythOS’s library Common tools (web search, calculators, some databases) have LangChain wrappers, and developers can integrate any API or service by writing Python code or using community packages. However, each new integration may require additional coding and troubleshooting. LangChain provides the flexibility to connect to almost anything, but the process is not “one-click” – developers must handle the integration logic themselves or rely on third-party contributions, which can vary in quality and completeness. |

| Development Experience | No-code interface with optional coding – SmythOS emphasizes ease of development through its visual workflow builder and no-code interface. Non-technical users can design agent logic via drag-and-drop blocks and configuration, drastically lowering the barrier to creating AI agents. For developers, SmythOS allows custom code and model integration where needed, blending ease-of-use with extensibility. Features like real-time debugging dashboards, version control for agent flows, and built-in testing tools accelerate the development cycle. Teams report significantly faster prototyping and iteration (on the order of 10× faster to go from idea to a working agent) compared to coding from scratch. | Code-centric workflow – LangChain is designed for programmers. Building an application means writing code to assemble the chain of prompts, memory, and tools. This grants maximum flexibility to tweak logic, but requires proficiency in Python/JS and understanding of LLM behavior. There is no native GUI; debugging involves reading logs or printouts and manually adjusting code. For a developer experienced with AI, LangChain’s approach is powerful, but for non-programmers or fast iteration by cross-functional teams, it can be slow and impractical. The learning curve is steep for those not familiar with software development, as even simple adjustments require code changes. |

| Deployment & Infrastructure | Managed deployment options – SmythOS can be deployed in the cloud or on-premises, providing flexibility to meet enterprise IT requirements. The platform manages the runtime environment for agents (SmythOS Runtime Environment), meaning once an agent is designed, it can be deployed with one-click into a secure, scalable execution context without devops overhead. SmythOS agents can also be exported or embedded into other ecosystems (for example, packaged into a service for platforms like Azure or AWS, or integrated with tools like Vertex AI) without locking users into a single cloud. This “design once, deploy anywhere” capability, along with features like built-in monitoring and logging, simplifies moving from development to production. | User-managed deployment – LangChain, being a framework, does not offer deployment infrastructure. Applications built with LangChain must be deployed like any custom software project. Developers might wrap LangChain logic into a web service, container, or script and then host it on cloud platforms or on-prem servers as needed. This offers freedom – LangChain apps can run anywhere Python/JS runs – but also means the burden of setting up servers, scaling them, monitoring, and maintaining the runtime falls on the user. There is no dedicated support for deployment in LangChain itself; teams often integrate with other tools (like Flask/FastAPI for serving, or Kubernetes for scaling) to operationalize LangChain applications. |

| Features & Capabilities | Broad AI functionality out-of-the-box – SmythOS provides a rich set of AI capabilities natively. This includes support for multi-agent collaborations (agents communicating with each other), long-term memory storage for agents to accumulate knowledge, and even multimodal processing (handling text, images, audio within the same workflow). Specialized features like web crawling, document parsing, or scheduling recurring agent tasks are built into the platform, enabling complex workflows (e.g., an agent that reads a PDF and an image, then emails a summary) without external services. SmythOS’s philosophy is to cover the end-to-end needs of enterprise AI solutions (from data ingestion to action execution) in one unified interface. | Extensible via code libraries – LangChain’s capabilities are as broad as the code and models you integrate. It excels at chaining LLM calls and managing conversational state, and developers have extended it to many use cases by plugging in various tools. For example, LangChain can utilize computer vision or audio processing if the developer calls external libraries or APIs for those tasks. However, those are not part of LangChain itself. There is no inherent multimodal support or scheduling mechanism in the core LangChain library; such functionality must be added by the developer or by combining LangChain with other frameworks. Essentially, LangChain provides the basics for LLM applications (text in/out, tool usage, memory), but higher-level capabilities need to be custom-built or integrated from elsewhere. |

| Community & Support | Vendor support and documentation – SmythOS is a commercial platform (with a growing user base) and offers dedicated support, documentation, and service-level agreements for enterprise customers. Because it’s not open-source, new features and fixes are managed by the SmythOS team. This ensures a level of consistency and reliability in the platform’s roadmap. Users benefit from a guided experience and official channels for help, such as their Discord Channel. On the other hand, the community-driven third-party resources might be smaller compared to an open-source project, given SmythOS’s proprietary nature. | Large open-source community – LangChain’s open-source status has fostered an active community. There are extensive documentation, examples, and discussions available publicly. Users can find tutorials, ask questions on forums (or GitHub), and contribute to the codebase. This community innovation has rapidly expanded LangChain’s features. However, because it’s community-driven, support is best-effort (unless one opts for a commercial service around LangChain, if available). The fast pace of updates can be a double-edged sword: there’s a wealth of community extensions, but they may not all be production-grade, and breaking changes can occur as the project evolves quickly. |

The chart above shows how fundamentally the two platforms differ. Next, we’ll examine each of these points in more depth, highlighting the implications of each aspect.

Architecture and Design Philosophy

SmythOS and LangChain have very different foundational architectures. SmythOS is built around the idea of a controlled runtime specifically for AI agent execution, whereas LangChain is essentially a library that operates within the user’s application runtime.

SmythOS: The architecture of SmythOS is often described as “runtime-first.” Instead of generating agent code on the fly, SmythOS runs agents in a dedicated execution environment known as the SmythOS Runtime Environment (SRE). This runtime is optimized for AI workflows – it ensures that each agent follows a predefined logic flow that’s been configured (via the visual editor or code) and executes those steps reliably. By doing so, SmythOS avoids a class of issues associated with dynamic code generation: namely, unpredictability and potential errors each time an agent runs. The platform’s design treats agents somewhat like managed processes with scheduling, error handling, and resource management baked in. In practical terms, when you deploy an agent on SmythOS, the platform takes care of how and where it runs, how it scales, and how to monitor it.

This approach stems from SmythOS’s philosophy of making AI agents production-ready by default. The system coordinates all AI tasks, much like an operating system manages processes on a computer. SmythOS’s architecture was designed with multi-agent orchestration in mind from the start. Agents can call each other or work in parallel without the developer needing to manage threads or asynchronous loops – the platform’s runtime handles concurrency control and inter-agent communication safely. This is a notable contrast to frameworks where adding concurrency or multi-agent interactions can become complicated.

Another aspect of SmythOS’s design is the separation of configuration from execution. Users define what an agent should do (its logic, tools it can use, triggers, etc.) in a high-level manner. At runtime, SmythOS ensures those instructions are followed exactly, rather than letting an LLM arbitrarily execute new code. This structured execution model contributes to reliability and security, since the agents can’t do things outside of what they were explicitly designed to do. It’s a design choice that trades off a bit of flexibility (you operate within SmythOS’s provided structure) in exchange for consistency and safety.
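To make the configuration/execution split concrete, here is a minimal sketch in plain Python. It is not SmythOS’s actual workflow format or API; the `WORKFLOW` structure, the step names, and the `run_workflow` helper are all hypothetical. The workflow is declared as data, and a small runtime executes only the steps listed there, refusing anything that is not a registered tool.

```python
from typing import Any, Callable, Dict, List

# Hypothetical tool registry: the only actions this runtime can perform.
TOOLS: Dict[str, Callable[[Any], Any]] = {
    "fetch_report": lambda _: {"rows": 42},
    "summarize": lambda data: f"Report contains {data['rows']} rows",
    "send_email": lambda text: f"sent: {text}",
}

# Hypothetical declarative workflow: pure configuration, no executable code.
WORKFLOW: Dict[str, Any] = {
    "name": "daily_report",
    "steps": ["fetch_report", "summarize", "send_email"],
}


def run_workflow(workflow: Dict[str, Any], tools: Dict[str, Callable[[Any], Any]]) -> List[Any]:
    """Run the declared steps in order, passing each step's output to the next."""
    results: List[Any] = []
    value: Any = None
    for step in workflow["steps"]:
        if step not in tools:
            # Anything not registered with the runtime is refused, not improvised.
            raise PermissionError(f"step {step!r} is not a registered tool")
        value = tools[step](value)
        results.append(value)
    return results


print(run_workflow(WORKFLOW, TOOLS)[-1])  # sent: Report contains 42 rows
```

Because control flow lives in configuration rather than in model output, nothing at run time can add a step such as `delete_records`; changing the agent’s behavior means editing the workflow definition.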

LangChain: LangChain’s design is more free-form. It’s a lightweight framework that sits on top of LLMs and allows developers to compose chains of actions. In LangChain, an “agent” often means an LLM-driven loop that decides which tool to use next, where each tool might be a piece of code executed on the fly. For example, LangChain provides an agent that can use a Python REPL tool: the LLM, following a reasoning prompt, can output some Python code as a string, and LangChain will execute that code in a Python interpreter and return the result. This dynamic approach is powerful because it means the AI can effectively write and run new code during its reasoning process. However, it also means the system’s behavior can be less predictable and potentially unsafe if not controlled.
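The LLM-driven loop described above can be sketched without any library. This is not LangChain’s implementation: `scripted_model` is a hypothetical stand-in for an LLM that returns either a tool call or a final answer, and the tool names are illustrative. The point is that the model, not the developer, picks the next action at every step.

```python
from typing import Callable, Dict, List, Tuple

# A decision is ("tool", tool_name, tool_input) or ("final", answer, "").
Decision = Tuple[str, str, str]

AGENT_TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda query: f"top result for {query!r}",
    "word_count": lambda text: str(len(text.split())),
}


def scripted_model(history: List[str]) -> Decision:
    """Hypothetical stand-in for an LLM: chooses the next action from the history."""
    if len(history) == 1:
        return ("tool", "search", history[0])
    if len(history) == 2:
        return ("tool", "word_count", history[1])
    return ("final", f"The search result has {history[-1]} words.", "")


def run_agent(question: str, model: Callable[[List[str]], Decision], max_steps: int = 5) -> str:
    """Loop: ask the model for an action, execute it, feed the observation back."""
    history = [question]
    for _ in range(max_steps):
        kind, name_or_answer, tool_input = model(history)
        if kind == "final":
            return name_or_answer
        # The model decides which tool runs and with what input; nothing here
        # checks whether that choice is appropriate.
        observation = AGENT_TOOLS[name_or_answer](tool_input)
        history.append(observation)
    return "Stopped: step limit reached"


print(run_agent("LangChain agents", scripted_model))  # The search result has 5 words.
```

If one of the registered tools were a Python interpreter, the same loop would execute whatever code the model emitted, which is exactly the risk discussed in the security section.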

LangChain itself does not dictate any specific runtime environment; the developer’s application is the runtime. This means architecture-wise, LangChain is very flexible and can be integrated anywhere (any environment that can call an API to an LLM and run code). The design philosophy here is to provide building blocks – prompt templates, memory stores, tool interfaces, etc. – and let developers assemble them as needed. It’s akin to a toolkit, enabling creation of highly customized agent behaviors. The downside is that every LangChain-based app might reinvent certain wheels (like how to handle errors or long-running processes) unless common patterns or additional libraries are used.

In summary, SmythOS’s architecture is more opinionated and integrated – it provides a complete system to execute AI agents reliably – whereas LangChain’s is open-ended and modular, requiring developers to impose their own structure for execution. SmythOS’s approach reduces the burden on the user to manage the complexities of running AI agents, but one has to work within SmythOS’s platform. LangChain gives maximum freedom to design any kind of chain or agent, but the developer carries the responsibility for making it robust.

Security and Compliance

Security is a critical concern, especially as AI agents may be given access to sensitive data or allowed to perform actions in enterprise environments. Here the managed versus DIY nature of SmythOS and LangChain leads to very different security postures.

SmythOS: Security is a centerpiece of SmythOS’s design. Because it targets enterprise use cases, SmythOS includes built-in guardrails to ensure that AI agents do not perform unauthorized actions and that all activity can be monitored. The agents in SmythOS run in a sandboxed environment – meaning any code or tool usage they attempt is executed in isolation with restricted permissions. This prevents an agent from, say, directly accessing a file system or external network beyond what it is allowed to do via the platform’s connectors. SmythOS also implements role-based access control (RBAC) and policy enforcement at the platform level. For example, an organization using SmythOS can set which users or departments are allowed to deploy certain agents, or what external systems an agent is permitted to connect to. Every action an agent takes (like calling an API or writing to a database) is logged for audit purposes.
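The platform-level checks described above (tool allow-lists, role-based permissions, and an audit entry for every attempted action) can be illustrated with a small policy gateway. This is a generic sketch, not SmythOS’s API; the `Policy` and `Gateway` classes, the role names, and the example tools are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


@dataclass
class Policy:
    allowed_tools: Set[str]  # actions this agent may take
    allowed_roles: Set[str]  # user roles permitted to run this agent


@dataclass
class Gateway:
    tools: Dict[str, Callable[..., Any]]
    policies: Dict[str, Policy]
    audit_log: List[Dict[str, Any]] = field(default_factory=list)

    def dispatch(self, agent: str, role: str, tool: str, **kwargs: Any) -> Any:
        """Run `tool` for `agent` only if policy allows it; log every attempt."""
        policy = self.policies.get(agent)
        allowed = (
            policy is not None
            and role in policy.allowed_roles
            and tool in policy.allowed_tools
            and tool in self.tools
        )
        # Denied attempts are recorded too, so reviewers can see what was tried.
        self.audit_log.append({"agent": agent, "role": role, "tool": tool, "allowed": allowed})
        if not allowed:
            raise PermissionError(f"{agent} (role {role}) may not call {tool}")
        return self.tools[tool](**kwargs)


gateway = Gateway(
    tools={
        "read_sales": lambda region: {"region": region, "total": 1200},
        "delete_records": lambda table: f"deleted {table}",
    },
    policies={
        "report_agent": Policy(allowed_tools={"read_sales"}, allowed_roles={"analyst"}),
    },
)
```

Here `report_agent` can read sales data, but a call to `delete_records`, even if the model requests it, raises `PermissionError` and still lands in `audit_log`.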

Importantly, SmythOS is designed to meet enterprise security standards. Features like encryption of data in transit and at rest, audit trails, and integration with single sign-on or identity management likely support these compliance goals. Essentially, SmythOS tries to eliminate the security risks of open-ended AI agents by containing their behavior and providing oversight. There are also safety nets like anomaly detection and optional human-in-the-loop approvals for certain actions, which can catch if an AI starts doing something unexpected or potentially harmful.

The result is that an organization can trust SmythOS to enforce a security baseline, rather than having to implement all checks from scratch. For instance, if an agent is supposed to just read from a database and email a report, SmythOS would prevent it from doing something entirely different like deleting records or calling unrelated external APIs, unless those were explicitly part of its defined workflow.

LangChain: By contrast, LangChain does not inherently provide security mechanisms because it’s not a managed service – it’s a toolkit running within your code. If you create an agent with LangChain that has access to a Python execution tool (for example), that agent can effectively do anything that the Python environment allows. There are no default sandboxing or permission limits; it is up to the developer to sandbox the environment or restrict capabilities. The flexibility of LangChain can become a vulnerability if an LLM using the toolkit is prompted (or tricked via prompt injection) into performing malicious actions. Indeed, there have been real examples of this risk: in mid-2023, security researchers highlighted that certain LangChain configurations could allow arbitrary code execution through prompt injection if exposed to end-users. Essentially, if an agent built with LangChain is not carefully controlled, an attacker could potentially get it to execute harmful code by feeding it crafted inputs.

For enterprise use, this means when using LangChain, security must be designed and layered on by the development team. This might involve steps like: running the agent’s code in a restricted container or sandbox, validating or filtering LLM outputs that are going to be executed as code, limiting network access for the agent process, storing secrets securely and only providing necessary credentials with least privilege, and implementing detailed logging. LangChain does provide some guidelines on avoiding dangerous configurations, but it does not enforce them.
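The output-validation step in the list above can be as simple as never passing model text to `eval`. The sketch below uses a standard technique, not a LangChain API: it parses an arithmetic expression with Python’s built-in `ast` module and evaluates only numeric literals and a whitelist of operators, so a prompt-injected payload such as `__import__('os').system(...)` is rejected instead of run.

```python
import ast
import operator
from typing import Callable, Dict, Type, Union

Number = Union[int, float]

# Whitelisted operators. Pow is left out on purpose: "9**9**9" can exhaust CPU and memory.
BIN_OPS: Dict[Type[ast.operator], Callable[[Number, Number], Number]] = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
UNARY_OPS: Dict[Type[ast.unaryop], Callable[[Number], Number]] = {
    ast.UAdd: operator.pos,
    ast.USub: operator.neg,
}


def safe_eval(expression: str) -> Number:
    """Evaluate a model-produced arithmetic expression without eval() or exec()."""

    def _eval(node: ast.AST) -> Number:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in BIN_OPS:
            return BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in UNARY_OPS:
            return UNARY_OPS[type(node.op)](_eval(node.operand))
        # Calls, names, attributes, subscripts, etc. are all rejected.
        raise ValueError(f"disallowed element: {type(node).__name__}")

    return _eval(ast.parse(expression, mode="eval"))
```

An allow-list like this is safer than a deny-list because anything the parser produces that was not explicitly anticipated is refused by default.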

Another aspect is compliance and auditing. Out-of-the-box, an agent built with LangChain is just part of your application, so if you need audit logs of every action the agent took, you have to instrument that yourself (for example, by logging each tool invocation or model response). There’s no built-in compliance certification; it inherits whatever compliance your deployment environment has.
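Self-instrumented auditing can start as a decorator that records each tool invocation. The stdlib sketch below is illustrative only: the `audited` decorator, the record fields, and the `lookup_customer` tool are hypothetical, and in a real LangChain application you might hook the same logic into its callback system instead.

```python
import functools
import json
import time
from typing import Any, Callable, Dict, List

AUDIT_RECORDS: List[str] = []  # in production, an append-only store rather than a list


def audited(tool: Callable[..., Any]) -> Callable[..., Any]:
    """Record name, arguments, outcome, and duration of every call to `tool`."""

    @functools.wraps(tool)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        record: Dict[str, Any] = {
            "tool": tool.__name__,
            "args": repr(args),
            "kwargs": repr(kwargs),
            "ts": time.time(),
        }
        start = time.perf_counter()
        try:
            result = tool(*args, **kwargs)
            record["status"] = "ok"
            return result
        except Exception as exc:
            record["status"] = f"error: {exc}"
            raise
        finally:
            # Runs on success and failure alike, so every call leaves a trace.
            record["duration_s"] = round(time.perf_counter() - start, 6)
            AUDIT_RECORDS.append(json.dumps(record))

    return wrapper


@audited
def lookup_customer(customer_id: int) -> str:
    # Hypothetical tool an agent might call.
    if customer_id < 0:
        raise ValueError("invalid id")
    return f"customer-{customer_id}"
```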

In summary, SmythOS offers a secure-by-default environment for AI agents, which is a major advantage for enterprise deployment, whereas LangChain offers flexibility at the cost of requiring one’s own security architecture. A team choosing LangChain for a sensitive application should be prepared to invest in creating the necessary guardrails to reach the level of security and compliance that something like SmythOS provides out-of-the-box.

Scalability and Performance

When it comes to scaling up AI workflows and ensuring good performance under load, SmythOS and LangChain again take different paths due to their nature as a platform vs. a library.

SmythOS: Scalability was a design consideration for SmythOS from the beginning. Since it runs as a centralized orchestration platform, it can manage resources across many agents and handle a high volume of tasks. SmythOS supports parallel execution and multi-agent orchestration natively. This means if you have dozens of agents that need to run simultaneously, the SmythOS runtime can schedule them appropriately (likely spawning multiple worker processes or threads behind the scenes, or even distributing them across a cluster if the platform is deployed that way). Users of SmythOS do not have to explicitly code for concurrency; for example, if Agent A triggers Agents B and C, the system can run B and C in parallel and wait for both to finish.

Performance-wise, SmythOS aims for low-latency execution by optimizing the interaction between the agent logic and the underlying AI models. Because the agent logic is predefined and runs within the SRE, each step an agent executes carries less overhead than approaches that construct code and execute it anew. Alternatives that generate code dynamically can incur latency and inefficiencies, which SmythOS avoids by using a fixed, structured runtime. SmythOS may also use caching, persistent LLM connections, or model-hosting optimizations to improve performance at scale.

Another aspect of scalability is error handling and robustness. SmythOS’s controlled environment can catch errors in one agent without crashing others, and can perform automatic retries or failovers for tasks. When many agents are running, these management features prevent small issues from becoming large failures. The platform also monitors performance metrics (like response times and throughput) for each agent, so it can scale resources or alert developers if something slows down.

LangChain: LangChain itself is not a server or service; it is part of your application code. Scaling a LangChain-based application is therefore essentially the same as scaling any custom software. If you write a Python service using LangChain and need to handle more load, you might run multiple instances of that service behind a load balancer, or use asynchronous processing to handle more tasks concurrently. LangChain does not distribute work across machines; that is up to the developer or to external infrastructure.

Many common LangChain usage patterns are synchronous. For instance, if you build a sequential chain, your program executes its steps one after the other. LangChain's runnable interface does expose async methods (such as `ainvoke`) and a `batch` method for running several inputs concurrently. However, serving many user queries at once, letting an agent perform several tool actions simultaneously, or pushing work onto task queues is still largely the developer's job to design. LLM calls are mostly I/O-bound (the program spends its time waiting on the model's response), so asyncio or background workers can raise throughput considerably, but only with careful programming around concurrency limits, timeouts, and errors.
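
The asyncio pattern described above can be sketched as follows. To keep the example self-contained, `fake_llm_call` simulates a network-bound model request with `asyncio.sleep`. Both `fake_llm_call` and `answer_all` are hypothetical names; in a LangChain application, the awaited call would typically be a runnable's async method, such as `ainvoke`. The semaphore caps how many requests are in flight, which matters because LLM providers enforce rate limits.

```python
import asyncio
import time


async def fake_llm_call(prompt: str, latency: float = 0.2) -> str:
    """Stand-in for a network-bound LLM request (no real API is called)."""
    await asyncio.sleep(latency)  # simulates waiting on the model's response
    return f"answer to: {prompt}"


async def answer_all(prompts: list[str], max_concurrency: int = 5) -> list[str]:
    """Run many LLM calls concurrently, capped by a semaphore to respect rate limits."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(prompt: str) -> str:
        async with semaphore:
            return await fake_llm_call(prompt)

    # gather returns results in the same order as the input prompts
    return await asyncio.gather(*(bounded(p) for p in prompts))


if __name__ == "__main__":
    prompts = [f"question {i}" for i in range(10)]
    start = time.perf_counter()
    results = asyncio.run(answer_all(prompts))
    # Ten 0.2 s calls with concurrency 5 take roughly 0.4 s instead of 2 s sequentially.
    print(len(results), f"{time.perf_counter() - start:.2f}s")
```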

In terms of raw performance, when SmythOS and LangChain both call an external LLM (such as the OpenAI API), the latency of those calls will be similar. The differences come from overhead and from how well each handles concurrent operations. LangChain's dynamic nature can introduce overhead. For example, if an agent frequently starts fresh code-execution environments, that startup cost adds latency. SmythOS, by managing a persistent runtime, can streamline repeated operations.

Scaling LangChain often means leveraging standard cloud infrastructure: for example, deploying on serverless functions for concurrency or using Kubernetes to scale pods of a LangChain service. It’s feasible, but it requires DevOps work and careful architecture. Also, ensuring that all instances share necessary state (like memory or knowledge bases) is something the developer must plan (perhaps by using a shared database or vector store).
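
Here is a minimal sketch of the shared-state idea: conversation memory lives in a database keyed by session, so any instance behind the load balancer can pick up where another left off. `SharedMemoryStore` is a hypothetical helper. `sqlite3` is used only to keep the example self-contained; instances on separate machines would share a networked store such as PostgreSQL or Redis instead.

```python
import sqlite3


class SharedMemoryStore:
    """Conversation memory kept in a database rather than in process memory.

    Any service instance that opens the same database sees the same history.
    """

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " session_id TEXT NOT NULL,"
            " role TEXT NOT NULL,"
            " content TEXT NOT NULL)"
        )
        self.conn.commit()

    def append(self, session_id: str, role: str, content: str) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO messages (session_id, role, content) VALUES (?, ?, ?)",
                (session_id, role, content),
            )

    def history(self, session_id: str) -> list[tuple[str, str]]:
        """Return (role, content) pairs for one session, oldest first."""
        rows = self.conn.execute(
            "SELECT role, content FROM messages WHERE session_id = ? ORDER BY id",
            (session_id,),
        )
        return rows.fetchall()
```

Two instances that open the same database path see each other's writes, which is the property a horizontally scaled LangChain service needs from its memory layer.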

In conclusion, SmythOS provides built-in scalability – it can handle growing workloads with minimal additional effort from the user, whereas LangChain’s scalability is entirely user-implemented, meaning the engineering team must design and manage the environment for large-scale operation. For a small project or a proof-of-concept, LangChain’s lightweight approach is often sufficient, but for enterprise-scale deployment, SmythOS offers convenience and performance optimizations that can save considerable time and ensure reliability.

Integrations and Ecosystem

A critical factor in adopting an AI platform is how easily it connects with the rest of your technology stack – data sources, third-party services, databases, and other APIs. Here, SmythOS heavily emphasizes its integration ecosystem, whereas LangChain relies on community contributions and custom coding for integrations.

SmythOS: A standout feature of SmythOS is its extensive library of pre-built integrations. According to SmythOS, the platform can natively connect to 300,000+ APIs and tools across various domains. This covers everything from common enterprise applications (Salesforce, Slack, Stripe, GitHub, etc.) to databases and legacy systems. In practice, if your AI agent needs to interact with a particular service, for example to send a message on Microsoft Teams, query an ERP database, or fetch data from a CRM, SmythOS likely already has a connector or “action” available for that task.

Moreover, SmythOS provides thousands of pre-built actions and templates for agents. These can be thought of as ready-made blocks that perform specific tasks (e.g., “send an email report”, “create a support ticket”, “extract text from a PDF”) which can be dropped into an agent’s workflow. For a developer or business analyst building an agent, this drastically reduces the effort required – instead of writing code to integrate with a service or handle a file format, they can utilize a predefined action.

The breadth of integrations SmythOS advertises far exceeds what typical open-source frameworks offer out of the box. Open-source frameworks usually ship plugins for popular services, whereas a catalog on the order of 300,000 connectors suggests that SmythOS aims to work in virtually any enterprise IT environment without additional development. This is a major advantage for complex enterprise use cases where an AI workflow needs to touch many systems: SmythOS acts as a central hub that already “speaks” to those systems.

For example, consider a scenario: a company wants an AI agent to handle a customer support workflow. The agent needs to read a customer email, look up the customer’s purchase history in a database, cross-reference a shipping status via an external API, create a support ticket in ServiceNow, and send a summary back via email. With SmythOS, one could configure each of these steps using pre-built integrations (database connector, API call action, ServiceNow connector, email action) largely through configuration. With LangChain, a developer would have to write or use libraries for each of those steps and ensure the agent calls them appropriately.
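
To give a sense of the glue code involved on the LangChain side, the sketch below chains the five steps of that scenario in plain Python. Every function is a hypothetical stub standing in for a real client (mail server, SQL database, shipping API, ServiceNow). The LLM's part, such as interpreting the email and writing the summary, is omitted. In an actual LangChain agent, these functions would more likely be exposed as tools the model chooses between, rather than called in a fixed order.

```python
from dataclasses import dataclass


@dataclass
class SupportResult:
    ticket_id: str
    summary: str


# Hypothetical integration stubs; each would wrap a real client in production.
def read_customer_email(message_id: str) -> dict:
    return {"from": "ana@example.com", "order_id": "A-17", "body": "Where is my order?"}


def lookup_purchase_history(customer_email: str) -> list[str]:
    return ["A-17", "A-09"]  # would be a SQL query against the orders database


def get_shipping_status(order_id: str) -> str:
    return "in transit"  # would be an HTTP call to the carrier's API


def create_ticket(customer_email: str, description: str) -> str:
    return "INC0001"  # would be a call to the ServiceNow REST API


def send_email(to: str, body: str) -> None:
    print(f"to={to}: {body}")  # would go through an SMTP or email API client


def handle_support_email(message_id: str) -> SupportResult:
    """Run the five workflow steps in order and return the ticket and summary."""
    email = read_customer_email(message_id)
    orders = lookup_purchase_history(email["from"])
    status = get_shipping_status(email["order_id"])
    description = (f"Order {email['order_id']} is {status}; "
                   f"customer has {len(orders)} orders.")
    ticket_id = create_ticket(email["from"], description)
    summary = f"Ticket {ticket_id}: {description}"
    send_email(email["from"], summary)
    return SupportResult(ticket_id, summary)
```

Each stub is where real integration work (client libraries, credentials, error handling) would go, and that work is what pre-built connectors are meant to replace.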

Additionally, SmythOS’s integration ecosystem allows it to deploy or embed agents in various environments. As mentioned, agents can be exposed as chatbots (e.g., on Slack or a website), run on a schedule (cron-like automation), or even be integrated into cloud AI platforms like Google Vertex AI or Microsoft’s AI services. This “create once, deploy anywhere” flexibility means the logic you build in SmythOS isn’t confined to one channel – it can be reused in multiple contexts without rewriting.

LangChain: LangChain’s integrations and ecosystem come from the open-source community and the developer’s own code. LangChain provides interfaces and abstractions for tools, which means it’s relatively straightforward to create an integration by writing some Python code. For instance, LangChain can treat any Python function as a tool that an agent can call. This means if you want to integrate a new API, you can write a Python function to call that API (using requests or an SDK) and then add it as a tool in your LangChain agent’s toolkit.
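
The function-as-tool pattern can be sketched in plain Python. `Tool`, `TOOLS`, `dispatch`, and the `get_shipping_status` wrapper are hypothetical names for illustration. In LangChain itself, the `@tool` decorator (from `langchain_core.tools`) turns a function into a tool object, using the function's name and docstring as the tool's name and description, and the agent performs the selection step that `dispatch` stands in for here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    name: str
    description: str  # shown to the LLM so it can decide when to use the tool
    func: Callable[[str], str]


def get_shipping_status(order_id: str) -> str:
    """Hypothetical API wrapper; a real version would call the carrier's API."""
    fake_db = {"A-17": "in transit", "B-02": "delivered"}
    return fake_db.get(order_id, "unknown order")


# Registry of tools the agent is allowed to use, keyed by name.
TOOLS = {
    t.name: t
    for t in [
        Tool("shipping_status", "Look up shipping status by order ID.", get_shipping_status),
    ]
}


def dispatch(tool_name: str, argument: str) -> str:
    """Run the tool the model selected; unknown names are refused rather than guessed."""
    tool = TOOLS.get(tool_name)
    if tool is None:
        return f"error: no tool named {tool_name!r}"
    return tool.func(argument)
```

For example, `dispatch("shipping_status", "A-17")` returns `"in transit"`, while a request for an unregistered tool returns an error string that the model can read and react to.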

Many common integrations have been created by the community or by LangChain's maintainers. For example, there are modules for many vector databases, for web search, for math/calculator tools, and so on. However, LangChain's integration coverage is nowhere near the 300k figure SmythOS cites. If you need to connect to an enterprise system (like SAP or ServiceNow) or a less common service, you will typically either write that integration yourself or look for a wrapper someone has shared online.

Setting up integrations in LangChain is manual and code-driven. Each new integration might involve installing a library or SDK, writing the glue code to interface with LangChain’s tool format, handling authentication (API keys, OAuth), and testing it thoroughly. This gives you flexibility (you can integrate basically anything that has an API), but it requires time and expertise.
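
The authentication and glue-code work can be sketched with Python's standard library alone. The endpoint path, the `TICKETING_API_KEY` environment variable, and the Bearer-token scheme are assumptions for a hypothetical ticketing API; the real service's documentation dictates the actual details. The key is read from the environment rather than hard-coded. Network failures in the tool-facing wrapper come back as short strings, so a failed call gives the agent something it can reason about.

```python
import json
import os
import urllib.request


class MissingCredentialError(RuntimeError):
    """Raised when the API key for the hypothetical ticketing service is absent."""


def build_ticket_request(base_url: str, summary: str) -> urllib.request.Request:
    """Build an authenticated POST request for a hypothetical ticketing API."""
    api_key = os.environ.get("TICKETING_API_KEY")  # never hard-code secrets
    if not api_key:
        raise MissingCredentialError("TICKETING_API_KEY is not set")
    body = json.dumps({"summary": summary}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/tickets",
        data=body,
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def create_ticket_tool(summary: str) -> str:
    """Tool-facing wrapper: network errors become readable strings, not stack traces."""
    request = build_ticket_request("https://tickets.example.com", summary)
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return f"created ticket (HTTP {response.status})"
    except OSError as exc:  # URLError, HTTPError, and timeouts are all OSError subclasses
        return f"ticket creation failed: {exc}"
```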

The difference in approach is significant: SmythOS offers extensive, ready-made connectivity, which can save weeks of development time for integration-heavy projects, while LangChain offers the potential to connect to anything but requires you to do the connecting. If your use case only needs a few integrations and you have those skills available, LangChain might suffice. But if you foresee the need to integrate many systems or rapidly prototype connections to various services, SmythOS provides a big productivity advantage.

In summary, SmythOS provides an extensive, plug-and-play integration ecosystem that caters to enterprise needs with minimal additional coding, whereas LangChain relies on a modular but manual integration process, benefiting from community contributions but often requiring custom development for less common connections.

Development Experience and Ease of Use

The experience of building and maintaining AI agents on SmythOS versus LangChain is markedly different, and which platform feels more “user-friendly” can depend on the team’s skills and workflow preferences.

SmythOS: The development experience in SmythOS is geared towards rapid creation and iteration, often described as no-code or low-code. With its visual interface, many tasks that would normally require writing code are accomplished by configuring graphical elements. For example, designing an agent’s logic might involve drawing a workflow: you could have a node for an LLM prompt, followed by a node for a decision, then nodes for different actions depending on the decision outcome, all connected by arrows. This approach is highly accessible – business analysts or domain experts who aren’t experienced programmers could potentially build or modify an AI workflow themselves.

The visual nature also aids in clarity and collaboration: it’s easier for a team to discuss an agent’s logic by looking at a flowchart than by reading code. Changes can be made by tweaking configurations or rearranging nodes rather than rewriting code, which speeds up iteration.

For developers, SmythOS still provides extensibility. It allows injection of custom code or custom models where necessary. This is important because no visual platform can cover every possible need; advanced users will sometimes need to write code for specialized tasks or to integrate a new capability. SmythOS’s philosophy is to let you handle 90% of the work without code, and give you hooks to code the remaining 10% if needed. Crucially, developers only need to write code for the parts that truly require it – everything else (orchestration, standard integrations, etc.) is handled by the platform. This can lead to a significant reduction in development effort for complex projects.

Another major aspect of the development experience is debugging and monitoring. SmythOS provides real-time debugging and monitoring tools during agent execution. For instance, a developer could step through an agent’s run, seeing each action it takes, the responses from the LLM, and the data passing between steps. This is invaluable when refining prompts or fixing logic issues, because it provides immediate insight into the agent’s behavior. Instead of adding print statements or logs and re-running, you can watch the agent in action in a more interactive way. SmythOS also offers version control for workflows, so you can track changes, revert to earlier versions, and manage updates systematically (similar to how you’d use Git for code).

All these features contribute to what SmythOS claims is a much faster development cycle — possibly 10× faster to get to a working solution in some cases. While “10×” might be a bold claim, it’s clear that the combination of pre-built components, visual assembly, and integrated debugging can drastically reduce the time from idea to prototype to deployment.

LangChain: LangChain’s development experience is essentially traditional software development. You work in your IDE or notebook, write code, and use standard programming tools. For a developer, this can feel very natural – you have full control, and you’re not constrained by a graphical interface. If you want to do something unconventional, you just code it.

However, this means the speed of development and ease of use depends heavily on programming skill. There is no drag-and-drop interface or wizard to build your agent; you need to understand the LangChain library, write Python/JS code to construct your chains or agents, and handle the flow of data between components. This is fine for prototyping if you are comfortable coding, but it can slow down the process for more complex logic as you need to carefully implement and test each part.

Debugging in LangChain is done via logs or interactive debuggers. You might use print() statements to see intermediate outputs from the LLM or to trace which tool an agent selected. Some developers use Jupyter notebooks to iteratively test parts of their LangChain logic. The core library has no built-in visual debugger; step-by-step trace views come from LangSmith, a separate product from the LangChain team, or from tracing you set up yourself. Because you own the code, you can also instrument it as you wish or use standard debugging techniques.

Collaboration in a LangChain project is like any code project: you use version control (Git), code reviews, etc. Non-developers would find it difficult to directly contribute, since even a minor change (like adjusting a prompt or adding a new step) requires editing code. This can create a dependency on developers for every tweak, which might slow down iteration if, for example, a domain expert needs to fine-tune the agent’s behavior frequently.

The learning curve for LangChain specifically involves learning its abstractions and best practices. For developers already familiar with AI and APIs, LangChain is learnable, but those without a programming background will likely find it inaccessible. Even among developers, there’s a learning period to understand how to structure prompts, how memory works in LangChain, how to optimize chain calls, etc. The community provides a lot of examples and documentation, which helps, but it’s still more complex than using a dedicated platform like SmythOS.

In summary, SmythOS prioritizes ease and speed by providing a guided, visual development experience where much of the heavy lifting is handled by the platform. This enables faster prototyping and involvement from non-engineers in the development process. LangChain prioritizes flexibility and control, catering to developers who want to directly craft the solution. It allows ultimate customization at the cost of a steeper learning curve and a slower development cycle for complex tasks. Teams should consider whether they want a more turnkey development experience or a code-centric approach when choosing between these options.

Deployment and Infrastructure Considerations

Deploying an AI agent into a production environment involves considerations around where the agent runs, how it’s accessed by users or systems, and how it’s maintained over time. SmythOS and LangChain imply different deployment strategies given their nature.

SmythOS: As a platform, SmythOS provides deployment options as part of its offering. It can be deployed in the cloud, on-premises, or in hybrid environments to meet enterprise needs. This flexibility is important for organizations that have specific data security requirements (for example, banks might require on-prem or private cloud deployments).

When an agent is built in SmythOS, deploying it is typically straightforward. Because SmythOS manages the runtime, you might simply click “deploy” or enable a trigger for the agent (like an API endpoint or a schedule). The platform then takes care of provisioning the necessary compute, running the agent in its managed environment, and keeping it running. This managed deployment means that you don’t have to set up a separate server or container for each agent; SmythOS’s infrastructure runs the agents for you, either in their cloud service or within the installed platform in your environment.

SmythOS supports various execution modes for agents. For instance, you could expose an agent as a REST API endpoint for your applications to call, integrate it as a chatbot in Microsoft Teams or Slack, or schedule it to run nightly as a batch job. Because the platform handles these details, you configure how the agent should be available and SmythOS does the rest. SmythOS also states that agents can be embedded into other ecosystems (such as Azure's AI tools or AWS services), which suggests it is built to interoperate with cloud providers, possibly by packaging agents as microservices or through connectors.

Another benefit of platform-managed deployment is monitoring and maintenance. SmythOS's monitoring tools show the status, usage statistics, and errors of deployed agents, and the platform can likely scale the underlying resources when an agent sees heavy usage (especially in a cloud environment). Upgrading an agent after you improve its logic is managed through the platform: you update the workflow and redeploy, and the platform ensures the new version runs.

Because SmythOS can run on your own cloud or servers, using it does not necessarily mean giving up control over data. SmythOS emphasizes that there is no proprietary lock-in: you are not forced to use its cloud if you don't want to. You can keep control of the environment while still enjoying a managed deployment experience.

LangChain: With LangChain, deployment is entirely up to you because you are essentially writing a custom application. Once you’ve built an AI application or agent with LangChain, you need to decide how it will run in production. Common approaches include:

- Embedding in an application backend: If the LangChain agent is part of a larger application (e.g., a feature in a web app), you might integrate it into your existing server code (say, a Flask or FastAPI app) and deploy that application normally.

- As a standalone service: You could wrap your LangChain logic in a simple API (for example, an endpoint that takes a request, passes it to the LangChain agent, and returns the response) and then deploy that API service. This could be done on a VM, a container, or serverless function depending on needs.

- Serverless or cloud functions: For agents that are invoked on demand, something like AWS Lambda or Azure Functions could be used to execute the LangChain agent code in response to events or API calls.

- Batch jobs or scripts: If an agent is supposed to run on a schedule, you might deploy it as a cron job or a scheduled task in your environment.
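
As an illustration of the standalone-service option, here is a minimal sketch that uses only Python's standard library. `run_agent` is a hypothetical placeholder for whatever invokes the LangChain chain or agent, and the request/response shape (`question` in, `answer` out) is an assumption. Request parsing is kept in a plain function, `handle_ask`, so it can be tested without opening a socket. Python's `http.server` module is not intended for production use, so a real deployment would more likely use FastAPI or Flask, as in the first option, behind TLS and authentication.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def run_agent(question: str) -> str:
    """Hypothetical placeholder: replace with the LangChain chain or agent call."""
    return f"echo: {question}"


def handle_ask(raw_body: bytes) -> tuple[int, dict]:
    """Parse a request body and return (HTTP status, JSON-serializable payload)."""
    try:
        payload = json.loads(raw_body or b"{}")
        question = payload["question"]
    except (ValueError, KeyError, TypeError):  # bad JSON, missing field, non-object body
        return 400, {"error": "expected a JSON object with a 'question' field"}
    return 200, {"answer": run_agent(str(question))}


class AgentHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/ask":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_ask(self.rfile.read(length))
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host: str = "127.0.0.1", port: int = 8000) -> ThreadingHTTPServer:
    """Build (but do not start) a threaded server; call serve_forever() to run it."""
    return ThreadingHTTPServer((host, port), AgentHandler)
```

Calling `make_server().serve_forever()` starts the service on port 8000. A POST to `/ask` with a body such as `{"question": "..."}` returns `{"answer": "..."}`.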

In all cases, the burden of infrastructure (ensuring there is enough compute, that it is secure, that it scales, and that it stays up) falls on your engineering or DevOps team. You would use standard tools such as Docker, Kubernetes, cloud auto-scaling, and monitoring systems (Prometheus, CloudWatch, etc.) to manage it.

This approach offers maximum flexibility. You can optimize for your specific scenario: choose the programming language environment, optimize memory and CPU usage, place the service in a specific network for latency reasons, etc. If your company already has a robust cloud infrastructure, deploying a new microservice for a LangChain agent might be straightforward for your DevOps team.

However, it also means more work whenever the agent needs changes or usage grows. For example, if your LangChain-based chatbot suddenly becomes very popular, you will need to add instances or move to a larger server, either manually or through auto-scaling rules you set up yourself. Monitoring also has to be put in place to catch errors: if the LLM API fails or returns invalid data, your service should handle it gracefully or alert someone.
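
Handling LLM API failures gracefully usually starts with retries. The sketch below retries only errors marked as transient, using exponential backoff with random jitter. Once attempts run out, it re-raises the error so that monitoring can alert someone. `TransientLLMError` and `call_with_retries` are hypothetical names, and which errors count as transient (timeouts, HTTP 429 or 5xx responses, for example) depends on the provider. The `sleep` parameter is injectable so the logic can be tested without real delays.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientLLMError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, rate limits)."""


def call_with_retries(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry a flaky call with exponential backoff and full jitter.

    Only TransientLLMError is retried; other exceptions (bad input, auth
    failures) propagate immediately because retrying cannot fix them.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except TransientLLMError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error so alerting can fire
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))  # jitter avoids synchronized retry bursts
    raise AssertionError("unreachable: the loop always returns or raises")
```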

In the LangChain scenario, if something goes wrong in production (say, the agent enters a loop or a new input causes an error), your team is responsible for diagnosing and fixing it. In contrast, on SmythOS, some of those issues might be mitigated by the platform’s safeguards or at least be easier to observe with built-in monitoring.
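
Guarding against an agent that loops is also the developer's job. The sketch below caps the number of actions a hypothetical agent loop may take and raises an error once the cap is reached, so a stuck run fails loudly instead of consuming tokens indefinitely. `decide_next_action` stands in for the LLM's choice of action. LangChain's `AgentExecutor` offers a comparable `max_iterations` setting, but this code is a generic illustration, not LangChain's implementation.

```python
from typing import Callable, Optional


class AgentLoopLimitError(RuntimeError):
    """Raised when an agent exceeds its step budget."""


def run_agent_loop(
    decide_next_action: Callable[[list[str]], Optional[str]],
    execute: Callable[[str], str],
    max_steps: int = 10,
) -> list[str]:
    """Drive a hypothetical agent loop, stopping it after max_steps actions.

    decide_next_action sees the transcript so far and returns the next
    action, or None when the agent considers the task finished.
    """
    transcript: list[str] = []
    for _ in range(max_steps):
        action = decide_next_action(transcript)
        if action is None:
            return transcript
        transcript.append(f"{action} -> {execute(action)}")
    raise AgentLoopLimitError(f"agent did not finish within {max_steps} steps")
```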

To put it simply, deploying on SmythOS is like using a managed Platform-as-a-Service, while deploying LangChain is like deploying custom code on Infrastructure-as-a-Service. The former is easier and faster to get running; the latter is more customizable. Organizations without strong DevOps capacity might lean toward SmythOS to avoid that operational burden, whereas organizations with stringent control requirements or existing infrastructure may be comfortable deploying LangChain apps themselves.

Summary Comparison Table

To highlight the key differences and trade-offs between SmythOS and LangChain, below is a high-level summary:

| Key Dimension | SmythOS Strengths | LangChain Strengths |
|---|---|---|
| Ease of Use | No-code visual builder for agents; gentler learning curve for non-programmers. Built-in debugging and templates accelerate development. | Highly flexible for developers who code; fine-grained control via programming. No constraints from a platform’s UI: any logic can be implemented in code. |
| Security & Governance | Enterprise-grade security by default (sandboxing, RBAC, audit logs). Compliance features ready out of the box. | Open-source transparency (code can be reviewed). Security can be tailored by the developer to specific needs (at the cost of more work). No inherent restrictions if full control is required. |
| Scalability | Scales automatically with multi-agent orchestration and concurrency managed by the platform. Performance optimizations built-in for agent workloads. | Can be scaled in any environment using standard software scaling techniques. Lightweight library can be embedded in custom distributed systems. No platform overhead if integrated directly into existing infra. |
| Integrations | Large catalog of pre-built integrations for enterprise apps and services (SmythOS cites 300k+ APIs/tools), enabling immediate connectivity. “Plug and play” integrations save development time. | Many integration options via Python libraries and community modules. Virtually unlimited integration potential, since any API or service usable in code can be hooked in by the developer. |
| Features & AI Capabilities | Comprehensive feature set (multi-agent, memory, multimodal support, scheduling, etc.) available natively in one platform. Consistent environment for all AI tasks. | Rich ecosystem of LLM application patterns (chains, agents, tools) that can be combined creatively. Rapid evolution with community contributions adds cutting-edge ideas quickly. |
| Deployment | One-click or managed deployment to cloud/on-prem with monitoring. Less DevOps burden: the platform handles the execution environment and updates. | Total freedom to deploy anywhere, in any form (on-prem, cloud, serverless). Can be integrated seamlessly into existing software products or backends, since it’s just code. |
| Community & Support | Vendor-backed support, documentation, and reliability. Clear roadmap and less risk of breaking changes. | Large open-source community with abundant learning resources and examples. No license cost (MIT license) and community-driven improvements. |
| Overall Trade-off | High productivity & enterprise readiness: ideal for teams that want a ready-made platform to accelerate AI adoption with minimal friction, especially in corporate settings requiring security and integration breadth. | High flexibility & customization: ideal for developer-heavy teams that require full control over AI logic and infrastructure and are willing to build and maintain the surrounding systems to tailor their solution. |

(Table: High-level summary of SmythOS vs LangChain, emphasizing their respective strengths.)

Conclusion

SmythOS and LangChain represent two different paradigms for developing AI agent solutions. SmythOS offers a holistic, integrated platform that aims to cover end-to-end needs – from design and development through to deployment and oversight – with a focus on ease-of-use, speed, and enterprise-grade robustness. LangChain provides a flexible, open-source toolkit that gives developers the freedom to build anything they envision by writing code, leveraging a rapidly growing ecosystem of modules but requiring them to assemble and harden the solution themselves.

For organizations that prioritize rapid development, a lower barrier to entry for non-engineers, and built-in safeguards (security, compliance, reliability), SmythOS presents a compelling choice. Its ability to accelerate time-to-value – by offering visual development, out-of-the-box integrations, and managed runtime performance – means businesses can prototype and deploy AI applications faster, with fewer specialized resources. Moreover, in environments where governance is non-negotiable, SmythOS’s baked-in audit trails and policy enforcement reduce the risk inherent in AI-driven automation.

On the other hand, organizations with strong engineering capabilities or unique requirements might favor LangChain. As an open-source project, LangChain comes with no licensing cost and an open canvas for innovation. Developers comfortable with it can push the boundaries of AI agents, mixing and matching tools and models without platform constraints. LangChain’s thriving community ensures that it stays at the cutting edge of what’s possible with LLMs, and it integrates well with bespoke systems. However, these benefits come with the responsibility of managing complexity – ensuring security, building infrastructure for scale, and handling maintenance over time.

In many cases, the choice might also depend on the stage of the project. Teams could start experimenting with LangChain for initial proofs-of-concept and then transition to SmythOS when they need to scale up and harden their solution for production, or vice versa. Some may even use both: for example, using SmythOS for certain use cases where speed and enterprise features are paramount, and LangChain for others where complete control is needed.

Ultimately, both SmythOS and LangChain are powerful in their own right. SmythOS shines as a turnkey platform that brings together the best of AI orchestration in a unified experience, making it arguably greater than the sum of its parts. LangChain shines as a flexible framework that has democratized access to building with LLMs for developers worldwide. The decision between them should be guided by an organization’s specific needs: the expertise of its team, the requirements for security/compliance, the urgency of deployment, and the complexity of integrations required. By understanding the comparative strengths outlined in this article, decision-makers can better align their choice of platform with their strategic objectives in the AI domain.

To experience the transformative power of SmythOS for your business, explore our diverse range of AI-powered agent templates or create a free SmythOS account to start building your own AI solutions today. Unlock the full potential of AI with SmythOS and revolutionize your workflow.