Building and scaling AI agents often means wrestling with large code repositories, fragmented context, and unpredictable output—issues that cost developers time and stall business innovation. For many teams, these challenges translate into costly rework, inaccurate insights, and delayed project launches that hurt both efficiency and revenue.

Enter GPT‑4.1. The model addresses these stumbling blocks with stronger coding accuracy, more robust instruction following, and the capacity to handle up to one million tokens in a single prompt.

What does this mean for developers and business owners?

In practical terms, developers can now feed entire codebases or massive documents into the model without tedious chunking, freeing them to focus on innovation rather than manual overhead. Similarly, businesses gain faster time-to-market for new features, more reliable automation, and the confidence that their AI agents will perform at scale.

If you’re aiming to streamline development, boost agent reliability, and maximize value, GPT‑4.1 is the upgrade that delivers on every front.

What is GPT-4.1?

GPT‑4.1 is the newest addition to OpenAI’s GPT model family, building on the breakthroughs of GPT‑4o and GPT‑4.5 with an emphasis on real-world utility. Unlike its predecessors, GPT‑4.1 boasts a significantly larger context window—up to one million tokens. This means you can feed entire projects, large data sets, or multiple lengthy documents into a single prompt without breaking them into chunks.

It excels in coding tasks thanks to a 21% absolute improvement on SWE-bench Verified over GPT‑4o, as well as more robust diff-following capabilities. From a usability standpoint, GPT‑4.1 has been refined for improved instruction following. According to OpenAI, the model is:

- More precise when formatting its output

- More compliant with negative instructions

- Less likely to provide extraneous or unwanted edits

With strengthened long-context comprehension and advanced reasoning across large input volumes, GPT‑4.1 sets a new standard for AI agents that require in-depth, multi-document analysis and code-centric workflows.

So what are its ideal use cases?

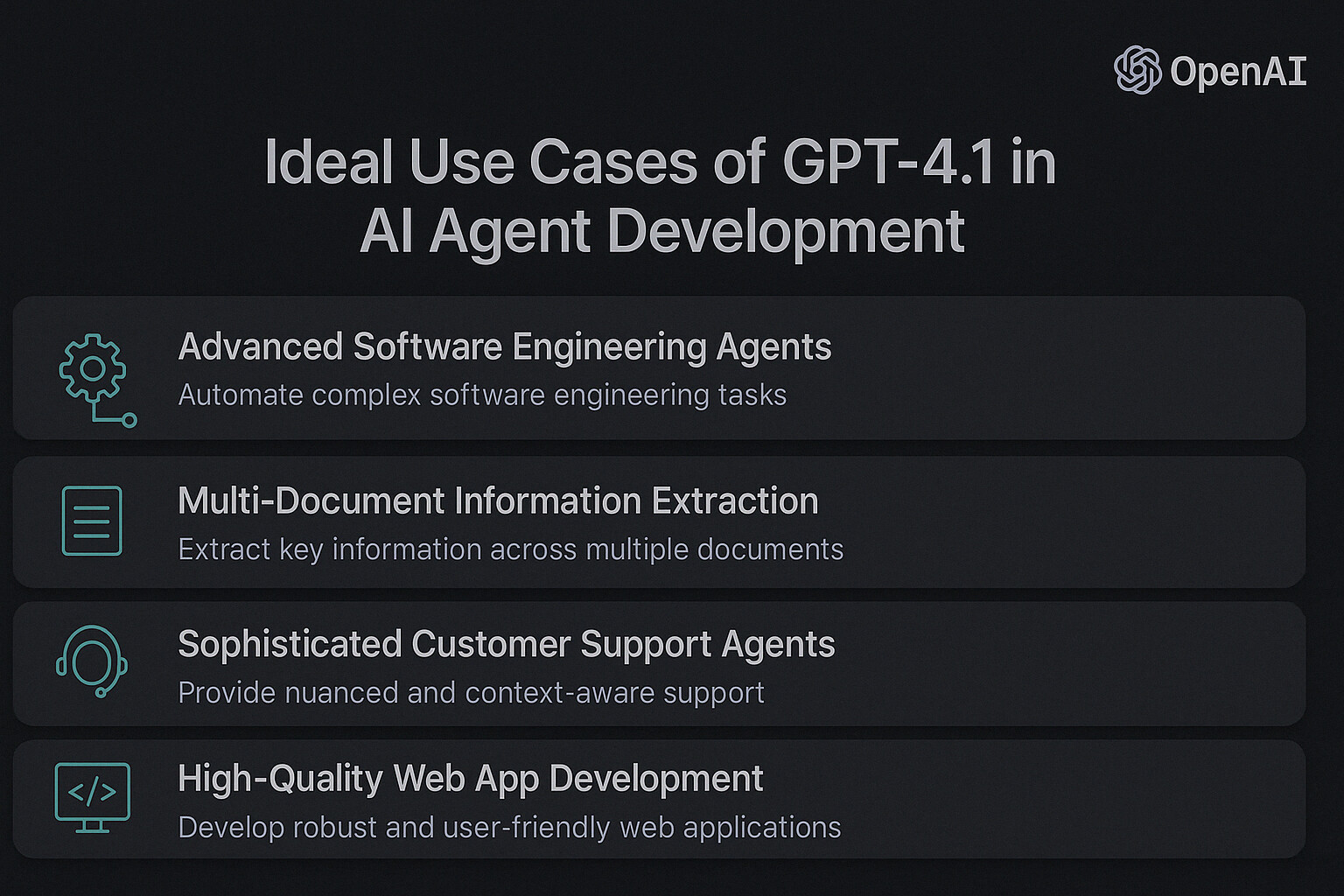

Ideal Use Cases of GPT-4.1 in AI Agent Development

GPT‑4.1’s strength in coding, high-level reasoning, and handling vast amounts of data makes it an excellent choice for developers seeking to build powerful, context-aware AI agents.

1. Advanced Software Engineering Agents

GPT‑4.1 stands out for coding and bug fixing. It can review pull requests, refactor code, and generate patches with fewer mistakes. Unlike older models, GPT‑4.1 handles large projects more easily thanks to its one-million-token context window. This means less back-and-forth and faster, more accurate solutions.
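Before sending a whole project in one prompt, it helps to check whether it plausibly fits. The sketch below is only a rough estimate: the four-characters-per-token ratio and the `reserved_for_output` value are assumptions for illustration, not figures from a real tokenizer, and the `repo` dictionary is a made-up stand-in for files read from disk.

```python
import math

CONTEXT_WINDOW_TOKENS = 1_000_000  # context size stated in this article
CHARS_PER_TOKEN = 4                # rough heuristic (assumption), not a tokenizer


def estimate_tokens(text: str) -> int:
    """Roughly estimate how many tokens a string will occupy."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(files: dict[str, str], reserved_for_output: int = 32_000) -> bool:
    """Return True if every file plus an output reserve fits in the window."""
    total = sum(estimate_tokens(body) for body in files.values())
    return total + reserved_for_output <= CONTEXT_WINDOW_TOKENS


# Hypothetical repository contents, keyed by file path.
repo = {
    "app.py": "print('hello')\n" * 1000,
    "README.md": "# Demo\n" * 200,
}
print(fits_in_context(repo))
```

For a real decision, swap the heuristic for an actual tokenizer count, since code and non-English text can tokenize very differently from plain English prose.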

2. Multi‑Document Information Extraction

Many fields—like law and finance—rely on huge amounts of text. GPT‑4.1 can scan and summarize dense reports or multiple files without losing important details. It goes beyond finding a single “needle” of information and excels at gathering multiple pieces of data.

In fact, OpenAI’s new OpenAI-MRCR test shows how GPT‑4.1 can pick the right answer from many similar prompts, even in very long conversations.
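One way to make multi-document prompts easier for the model to navigate is to wrap each document in clearly labeled delimiters. The following is a minimal sketch under assumptions: the `<doc>` tag format, the instruction wording, and the sample lease texts are all hypothetical choices for illustration, not a format this article or OpenAI prescribes.

```python
def build_multi_doc_prompt(documents: dict[str, str], question: str) -> str:
    """Combine several documents and one question into a single prompt string."""
    # Give every document a numeric id so answers can point back to a source.
    blocks = [
        f'<doc id="{index}" title="{title}">\n{body.strip()}\n</doc>'
        for index, (title, body) in enumerate(documents.items(), start=1)
    ]
    instructions = (
        "Answer using only the documents below. "
        "Cite the id of every document you rely on."
    )
    return "\n\n".join([instructions, *blocks, f"Question: {question}"])


# Hypothetical sample documents.
prompt = build_multi_doc_prompt(
    {
        "Lease A": "Rent is due on the 1st of each month.",
        "Lease B": "Rent is due on the 15th of each month.",
    },
    "Which lease has the later due date?",
)
print(prompt)
```

Asking for document ids in the answer makes it simpler to spot-check which source a given extracted detail came from.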

3. Sophisticated Customer Support Agents

GPT‑4.1 improves multi-turn chats by remembering what users said earlier. It can also follow brand guidelines or formatting rules. Whether it’s returning a correct answer to a technical question or keeping a conversation clear, GPT‑4.1 reduces errors and boosts customer satisfaction.

4. High‑Quality Web App Development

GPT‑4.1 isn’t just for back-end coding. It also does a great job on front-end tasks.

In OpenAI's head-to-head comparisons, human graders preferred the web apps GPT‑4.1 produced over GPT‑4o's 80% of the time, judging them more functional and visually appealing. This means faster prototypes, cleaner UI, and happier end users.

5. Context‑Heavy Knowledge Agents

When you need to handle complex tasks that require gathering many facts, GPT‑4.1 shines.

The model’s ability to track different requests hidden inside long prompts (as shown in OpenAI’s multi-round coreference test) proves it can manage tricky scenarios. It can tell a poem about tapirs from a story about frogs and deliver the exact response users want—even in very long chats.

Practical Guide: How to Use GPT-4.1 API in Your AI Agent

If you’re building agents that need to reason across large documents, follow structured formats, or generate complex outputs, GPT‑4.1 in SmythOS gives you a reliable and scalable starting point.

How to Set Up GPT‑4.1 in SmythOS Agent

Getting started with GPT‑4.1 in SmythOS is simple—no manual API setup required. SmythOS comes with native support for GPT‑4.1 built right into its LLM component, making it easy for teams to integrate this powerful model into their AI agents.

To begin, add an LLM component to your agent flow. From the dropdown list of available models, select gpt-4.1. That’s it—no need to copy API keys or configure endpoints manually. SmythOS handles the integration behind the scenes so you can focus on building intelligent behaviors.

This built-in support allows your agents to immediately take advantage of GPT‑4.1’s strengths. Since SmythOS is designed for real-world deployment, you can pair GPT‑4.1 with other components, such as Node.js, web search, and web scrape, to build powerful, multi-step agents without writing boilerplate code.

Crafting Effective Prompts for Smarter Agents

GPT‑4.1 is more literal than earlier models. That’s a good thing—if you know how to guide it. Small tweaks in how you word your prompt can change the entire outcome. Clear, step-by-step instructions help your AI agent behave the way you want, especially when the task gets complex.

To get the most out of GPT‑4.1 inside SmythOS, follow three proven prompt patterns:

1. Be Explicit About Agent Behavior

Let GPT‑4.1 know it’s part of a multi-turn task. Tell it not to stop until the job is done. Without this, the model may hand control back too early—even when there’s more to do.

Tip: Add something like:

“You are an autonomous agent. Continue working until the task is complete. Don’t stop unless the goal is met.”

This kind of persistence prompt helps GPT‑4.1 behave like a smart assistant, not a one-shot chatbot.

2. Encourage Tool Use Instead of Guessing

When GPT‑4.1 isn’t sure of something, don’t let it guess. Tell it to use available tools—like reading files, calling APIs, or checking databases.

Tip: Include a line like:

“Use your tools to look up information when uncertain. Do not make assumptions.”

This kind of prompt reduces hallucinations and makes your agent more trustworthy.
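For the model to use tools instead of guessing, the agent has to describe each tool and run it when the model asks. The sketch below assumes the JSON-Schema-style function definition used by OpenAI's Chat Completions API. The `read_file` tool, its stub implementation, and the `dispatch_tool_call` helper are hypothetical names for illustration. In SmythOS the equivalent wiring is done through components rather than code.

```python
import json


def read_file_stub(path: str) -> str:
    """Hypothetical tool: stands in for a real file reader."""
    fake_files = {"README.md": "# Demo project"}
    return fake_files.get(path, f"ERROR: {path} not found")


# Tool description the model would receive alongside the prompt.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "read_file",
            "description": "Read a project file instead of guessing its contents.",
            "parameters": {
                "type": "object",
                "properties": {"path": {"type": "string"}},
                "required": ["path"],
            },
        },
    }
]

# Maps tool names to the local functions that implement them.
HANDLERS = {"read_file": read_file_stub}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the tool the model requested and return its result as text."""
    handler = HANDLERS.get(name)
    if handler is None:
        return f"ERROR: unknown tool {name}"
    return handler(**json.loads(arguments_json))


print(dispatch_tool_call("read_file", '{"path": "README.md"}'))
```

Returning an explicit error string for unknown tools or missing files gives the model something concrete to react to, rather than leaving it to fill the gap with a guess.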

3. Ask It to Plan (Optional but Helpful)

Planning helps the model work smarter. Ask it to outline its steps before acting, then reflect afterward. This is especially helpful when chaining tool calls or making decisions.

Tip: Try adding:

“Before you act, describe your plan. After taking action, explain what happened.”

This small change can improve performance on complex, multi-step tasks.

Agents using this approach in OpenAI’s tests performed nearly 20% better on coding tasks. So if you’re building agents that code, search, summarize, or handle workflows—prompt structure really matters. And for repeated tasks? Use prompt caching to save on cost and speed up performance.
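The three reminders quoted above can be assembled into one reusable system prompt. This is a minimal sketch, not an official template: the helper names and the example role are hypothetical, and the message layout assumes the common system/user chat format. Keeping the static system prompt first and the changing task last is a design choice made here on the assumption that prompt caching rewards identical leading content.

```python
PERSISTENCE = (
    "You are an autonomous agent. Continue working until the task is complete. "
    "Don't stop unless the goal is met."
)
TOOL_USE = "Use your tools to look up information when uncertain. Do not make assumptions."
PLANNING = "Before you act, describe your plan. After taking action, explain what happened."


def build_system_prompt(role: str, include_planning: bool = True) -> str:
    """Join the agent's role with the persistence, tool-use, and planning reminders."""
    sections = [role, PERSISTENCE, TOOL_USE]
    if include_planning:  # planning is the optional third pattern
        sections.append(PLANNING)
    return "\n\n".join(sections)


def build_messages(system_prompt: str, user_task: str) -> list[dict[str, str]]:
    """Place the static prompt first and the variable task last."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_task},
    ]


system_prompt = build_system_prompt("You are a code review agent.")
messages = build_messages(system_prompt, "Review the latest pull request.")
print(messages[0]["content"])
```

Because the system message is identical across calls, only the final user message changes from one task to the next.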

The Challenge of Context Management

While a million-token window is powerful, efficiently managing this expanded context presents unique challenges. OpenAI’s internal testing shows that accuracy can decrease as inputs approach the upper limit of the context window, suggesting that maximum data input isn’t always optimal.

Effective context management requires strategic approaches to organizing, prioritizing, and structuring information. This includes determining which information is most relevant, how to compress or summarize less crucial details, and when to refresh certain context elements.
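One simple way to prioritize context is to rank items by relevance and pack them greedily into a token budget. The sketch below is illustrative only: the `priority` scores, the characters-per-token heuristic, and the sample items are assumptions, and a production agent would likely use a real tokenizer plus summarization for the items that do not fit.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    name: str
    text: str
    priority: int  # higher means more relevant (hypothetical score)


def rough_token_count(text: str) -> int:
    """Approximate tokens as one per four characters (assumption)."""
    return max(1, len(text) // 4)


def pack_context(items: list[ContextItem], budget_tokens: int) -> list[ContextItem]:
    """Greedily keep the highest-priority items that fit within the budget."""
    selected: list[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda entry: entry.priority, reverse=True):
        cost = rough_token_count(item.text)
        if used + cost <= budget_tokens:
            selected.append(item)
            used += cost
    return selected


# Hypothetical context items of different sizes and relevance.
items = [
    ContextItem("logs", "l" * 2000, priority=1),
    ContextItem("spec", "s" * 400, priority=3),
    ContextItem("notes", "n" * 800, priority=2),
]
print([item.name for item in pack_context(items, budget_tokens=350)])
```

Items that are skipped here are natural candidates for the compression or summarization step described above.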

The Real-World Impact of GPT‑4.1 on AI Agent Development

GPT‑4.1 marks a practical leap forward for how AI agents perform in real-world settings. Developers benefit from a model that follows instructions more reliably. At the same time, businesses gain AI agents that can scale with confidence—handling long documents, ambiguous data, and multi-turn tasks with ease.

According to OpenAI, here’s how it performs in production:

Blue J: Smarter Tax Research Agents

Blue J saw a 53% improvement in accuracy when testing GPT‑4.1 on their most complex tax scenarios.

The model followed detailed instructions more precisely and handled long-context legal prompts with greater consistency. This translated into faster, more dependable tax research and allowed professionals to shift focus from repetitive lookups to higher-value advisory work.

Hex: Improved SQL Understanding and Fewer Debug Loops

For Hex, GPT‑4.1 delivered nearly double the accuracy on their toughest SQL evaluation set. It was significantly more reliable in selecting the correct tables from large, complex database schemas—something even strong prompts couldn’t fix in previous models.

This reduced the need for manual debugging and accelerated the path to production-ready workflows.

Thomson Reuters: Better Multi-Document Legal Analysis

Thomson Reuters used GPT‑4.1 to improve CoCounsel, their AI legal assistant.

Compared to GPT‑4o, they achieved a 17% boost in multi-document review accuracy. GPT‑4.1 handled conflicting clauses, tracked details across long documents, and identified key legal relationships with much higher precision—critical for compliance, litigation, and risk evaluation.

Carlyle: High-Fidelity Financial Data Extraction

Carlyle relied on GPT‑4.1 to extract detailed financial insights from a mix of PDFs, Excel files, and dense reports.

The model outperformed others by 50% on retrieval tasks and succeeded where others failed—such as pulling information from the middle of documents or connecting data points across multiple files. This reliability helped streamline due diligence and unlock faster data-driven decisions.

GPT-4.1 Pricing and Cost Efficiency

One of GPT‑4.1’s standout benefits is its cost-effectiveness, a result of OpenAI’s optimization in both modeling and infrastructure.

Despite offering more robust performance than its predecessors, GPT‑4.1 is priced competitively. As a result, it’s an attractive model for teams looking to handle extensive, resource-intensive tasks—such as parsing large data sets or generating complex code—in a single prompt.

The introduction of a higher prompt caching discount (75% for repeated contexts) further slashes costs for developers repeatedly sending the same background data.

| Model (Prices are per 1M tokens) | Input | Cached input | Output | Blended Pricing* |
|---|---|---|---|---|
| gpt-4.1 | $2.00 | $0.50 | $8.00 | $1.84 |
| gpt-4.1-mini | $0.40 | $0.10 | $1.60 | $0.42 |
| gpt-4.1-nano | $0.10 | $0.025 | $0.40 | $0.12 |

Moreover, GPT‑4.1’s flexible pricing tiers empower developers to scale their usage as needed—whether you need a small, experimental deployment or a robust, production-grade agent with consistent usage. This can be useful to organizations handling frequent or high-volume queries, since each token-based operation becomes more affordable with caching and bulk operations.
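To see what caching does to a bill, the per-million-token prices from the table above can be turned into a small cost estimator. The prices are copied from the table. The `request_cost` helper and the token counts in the example are hypothetical, so treat this as back-of-the-envelope arithmetic rather than a billing tool.

```python
# USD per 1M tokens, copied from the pricing table in this article.
PRICES = {
    "gpt-4.1": {"input": 2.00, "cached_input": 0.50, "output": 8.00},
    "gpt-4.1-mini": {"input": 0.40, "cached_input": 0.10, "output": 1.60},
    "gpt-4.1-nano": {"input": 0.10, "cached_input": 0.025, "output": 0.40},
}


def request_cost(model: str, input_tokens: int, cached_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request, given how many input tokens hit the cache."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    price = PRICES[model]
    uncached_tokens = input_tokens - cached_tokens
    total = (
        uncached_tokens * price["input"]
        + cached_tokens * price["cached_input"]
        + output_tokens * price["output"]
    )
    return total / 1_000_000


# Hypothetical request: 100k input tokens, 2k output tokens.
cold = request_cost("gpt-4.1", 100_000, 0, 2_000)
warm = request_cost("gpt-4.1", 100_000, 80_000, 2_000)
print(f"no cache: ${cold:.3f}, 80% cached: ${warm:.3f}")
```

In this example the uncached request costs about $0.216 and the mostly cached one about $0.096, which is the 75% discount on cached input showing up in the total.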

Ultimately, GPT‑4.1’s combination of top-tier performance and minimized expenditure paves the way for cutting-edge AI agent functionality without breaking the bank.

Conclusion: Embracing GPT-4.1 for Advanced AI Agent Creation

GPT-4.1 marks a significant advancement in AI agent development. With its expanded million-token context window, improved coding performance, and enhanced instruction-following precision, it equips developers with exceptional tools to create sophisticated and efficient AI systems.

These enhancements lead to faster software development cycles, more effective legacy code analysis, and the ability to build complex agents capable of handling multi-step tasks with access to extensive knowledge bases.

The benefits of GPT-4.1 extend beyond technical capabilities. Its significantly reduced API pricing—up to 80% more cost-effective than previous models—makes advanced AI accessible to developers and businesses of all sizes. This democratization of AI technology fosters innovation across industries, from healthcare diagnostics to financial analysis to creative content production.

To fully leverage these capabilities, developers require a robust platform specifically designed for AI agent deployment and orchestration. SmythOS addresses this need with its no-code environment for building, deploying, and managing advanced AI agents.

Its visual builder, extensive integration ecosystem, and runtime-first architecture provide the secure, scalable foundation necessary for enterprise-grade AI implementation. By integrating GPT-4.1’s intelligence with SmythOS’s deployment capabilities, organizations can transform complex workflows into seamless, AI-driven processes that enhance productivity and innovation.