OpenAI just dropped GPT-5.2, and honestly? This one actually matters. Not because of marketing hype, but because the improvements land exactly where AI agent builders need them most: tool calling, long-context reasoning, and reliability.

If you’re building production agents on SmythOS, here’s what you need to know.

The Headline Numbers That Actually Matter

Let’s skip the benchmarks nobody uses and focus on what changes your day-to-day work.

According to OpenAI’s official announcement, GPT-5.2 Thinking scores 98.7% on Tau2-bench Telecom, a test that measures how well models use tools across long, multi-turn tasks. That’s not a small improvement. It means fewer broken workflows, less babysitting of your agents, and more reliable end-to-end task completion.

The model also hallucinates less. OpenAI reports that, on de-identified ChatGPT queries, responses containing errors were 30% less common than with GPT-5.1 Thinking. For anyone who’s had an agent confidently state wrong facts to customers, that number means something.

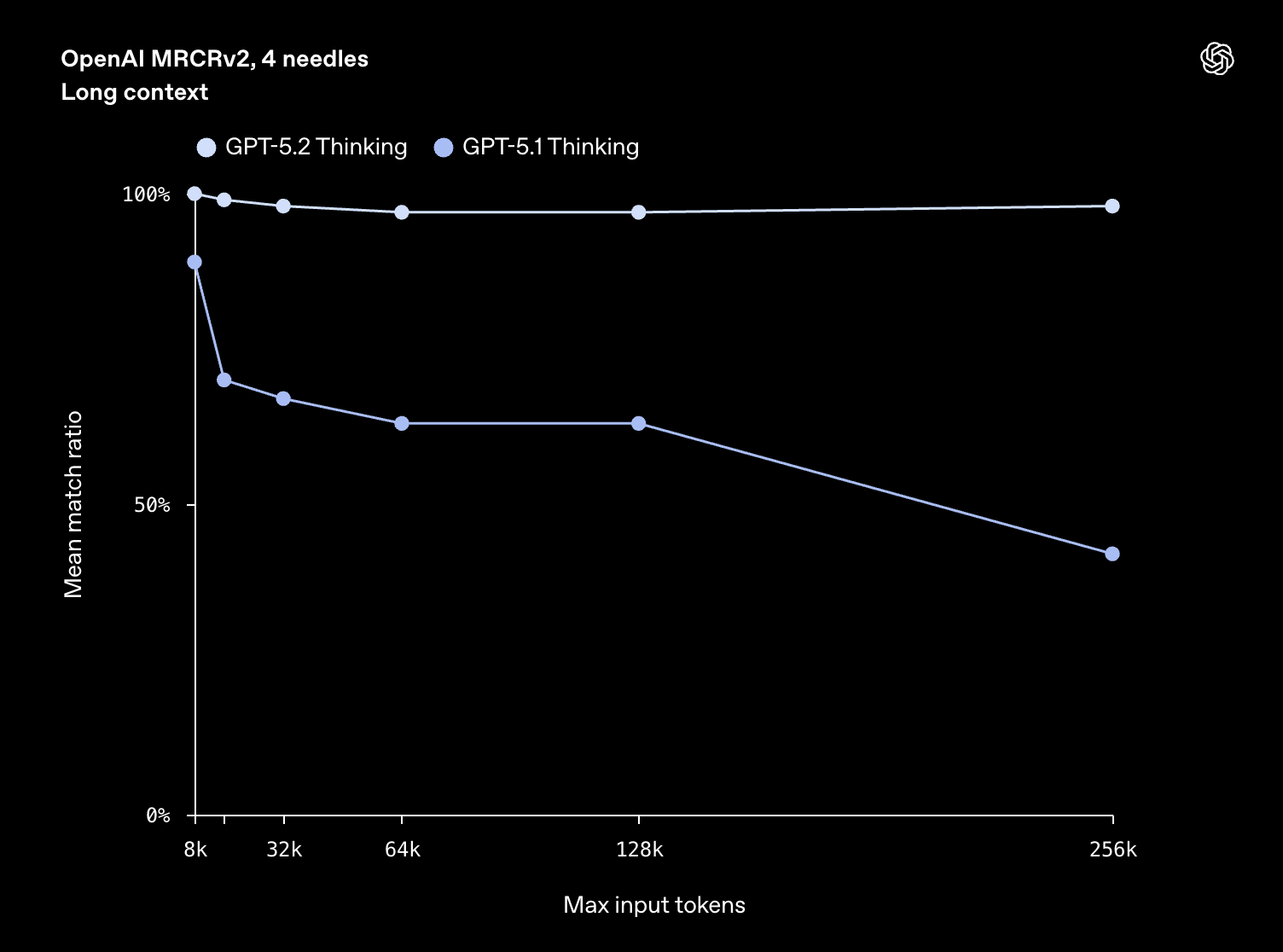

And here’s one that doesn’t get enough attention: GPT-5.2 is the first model to achieve near 100% accuracy on the 4-needle MRCR variant out to 256,000 tokens, according to OpenAI’s benchmarks. Your agents can now work with massive documents (contracts, research papers, multi-file codebases) without losing the thread.

What This Means for SmythOS Agent Builders

SmythOS already supports OpenAI models through its LLM Manager Subsystem. You can bring your own API key, swap models without rebuilding workflows, and run any supported provider through a unified interface. With GPT-5.2, that flexibility becomes even more powerful.

Better tool calling means more reliable agents. SmythOS workflows often chain together API calls, database queries, web scraping, and LLM reasoning. When the underlying model fumbles tool calls, the whole chain breaks. GPT-5.2’s 98.7% accuracy on complex tool-use tasks translates directly into agents that actually finish what they start.

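Consider a customer support workflow that needs to check order status, verify identity, process a refund, and send a confirmation. With previous models, you might see the agent trip over one of those steps in, say, 10-15% of runs (an illustrative figure, not a measured one). Because every step has to succeed for the workflow to finish, even a modest gain in per-step tool-calling reliability cuts the number of failed runs noticeably.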
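To see how per-step reliability compounds across a chain like this one, here is a minimal sketch. It assumes every step succeeds independently and with the same probability, which real workflows will not satisfy exactly, and the per-step rates are hypothetical inputs rather than measured figures for any model.

```python
def chain_success_rate(step_success: float, steps: int) -> float:
    """Chance that every step succeeds, assuming independent, equally reliable steps."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return step_success ** steps


# Four steps: check order status, verify identity, process refund, send confirmation.
# The per-step rates below are hypothetical, not measurements of any model.
for rate in (0.95, 0.97, 0.99):
    print(f"per-step {rate:.0%} -> full workflow {chain_success_rate(rate, 4):.1%}")
```

Under these assumptions, a step that succeeds 95% of the time gives a four-step workflow that completes only about 81% of the time, while 99% per step gives about 96%.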

Longer context windows mean smarter document agents. SmythOS components, such as RAG Remember, already help agents work with large knowledge bases. But when the LLM itself can maintain coherence across 256,000 tokens, your document analysis agents become genuinely useful for enterprise-scale work.

Think legal contract review. Think codebase analysis. Think processing a 200-page research report and answering specific questions about page 47 while considering context from page 12.
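As a rough sanity check on whether a document of that size fits, here is a back-of-the-envelope sketch. The 500 words per page and 1.3 tokens per word are rules of thumb assumed for illustration, the 16,000-token reserve for instructions and the answer is likewise an assumption, and 256,000 is the evaluation length quoted above rather than a stated maximum context size. Real token counts depend on the tokenizer and the document.

```python
def estimate_tokens(pages: int, words_per_page: int = 500,
                    tokens_per_word: float = 1.3) -> int:
    """Very rough token estimate; both defaults are assumed rules of thumb."""
    return round(pages * words_per_page * tokens_per_word)


def fits_in_context(pages: int, context_tokens: int = 256_000,
                    reserve_tokens: int = 16_000) -> bool:
    """True if the estimate leaves room for instructions and the model's answer."""
    return estimate_tokens(pages) <= context_tokens - reserve_tokens


report_tokens = estimate_tokens(200)
print(f"200 pages is roughly {report_tokens:,} tokens; fits: {fits_in_context(200)}")
```

With these assumptions, a 200-page report comes to roughly 130,000 tokens, comfortably inside the range where the benchmark reports near-perfect recall.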

Less hallucination means fewer embarrassing mistakes. Brand agents talking to customers need to get facts right. Process agents making decisions based on data can’t afford to fabricate information. The 30% reduction in response-level errors isn’t just a nice stat; it’s the difference between an agent you can trust and one you constantly have to supervise.

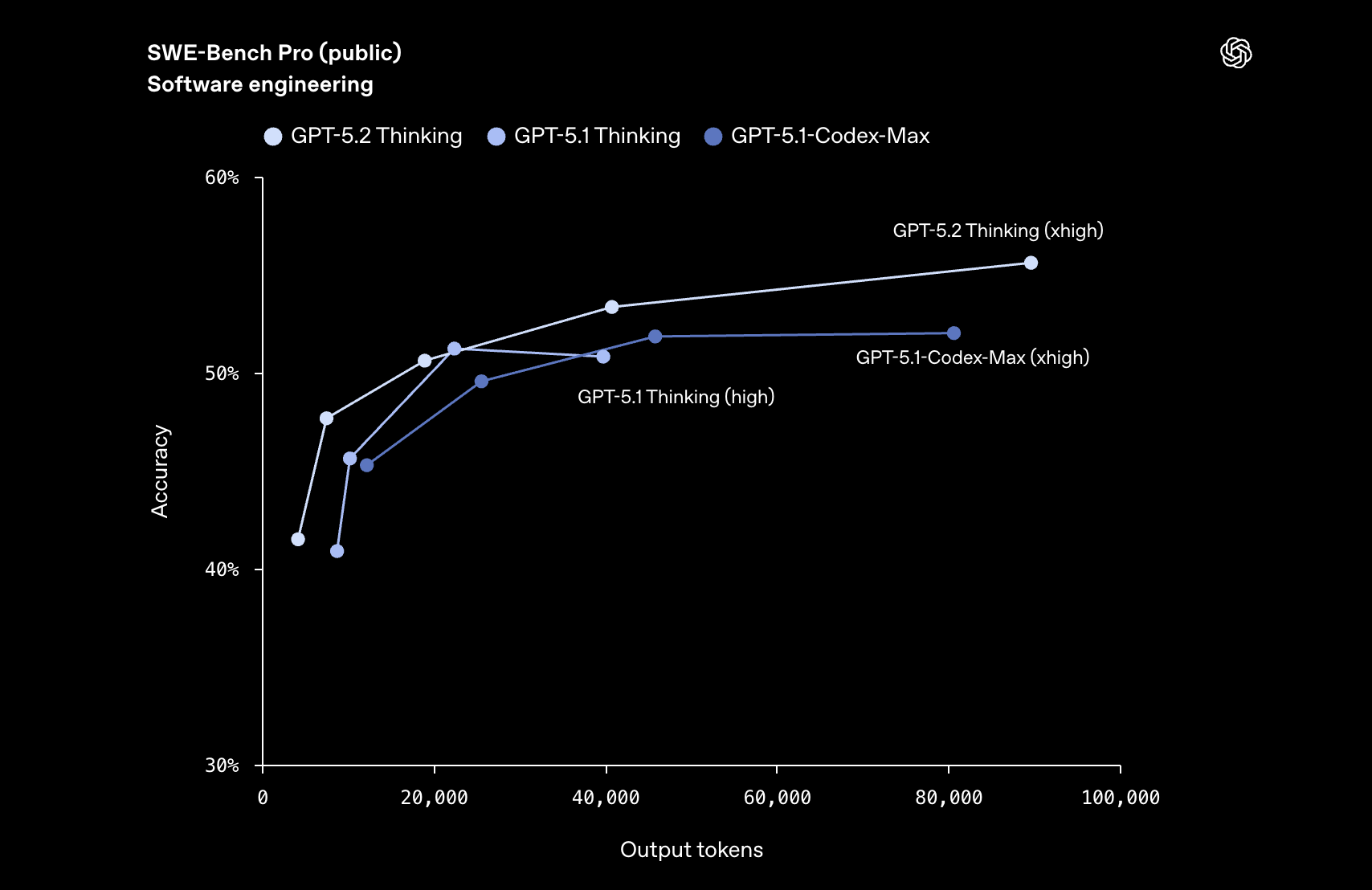

The Coding Improvements Hit Different

GPT-5.2 scores 80% on SWE-bench Verified, up from GPT-5.1’s 76.3%. More interestingly, it sets a new state of the art at 55.6% on SWE-Bench Pro, which tests real-world software engineering across multiple programming languages.

For SmythOS users, this matters in two ways.

First, the Node.js Component allows you to run custom Node.js code inside your agent workflows. When the underlying model writes better code, your custom logic components become more reliable. Fewer bugs. Cleaner implementations. Less time debugging auto-generated functions.

Second, if you’re using SmythOS to build agents that assist with software development tasks (code review, bug finding, refactoring), GPT-5.2’s improvements provide a significant upgrade for those agents. JetBrains, Augment Code, and Warp all reported measurable improvements in their early testing.

Vision Gets Practical

Here’s something that often gets overlooked: GPT-5.2’s vision capabilities cut error rates roughly in half on chart reasoning and interface understanding tasks, according to OpenAI’s benchmarks.

Specifically, accuracy on CharXiv Reasoning jumped from 80.3% to 88.7%. ScreenSpot-Pro scores, measuring UI understanding, improved from 64.2% to 86.3%.
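The "roughly in half" framing refers to error rates, not accuracy. A short worked calculation using only the scores quoted above makes the conversion explicit; the function name is just illustrative.

```python
def relative_error_reduction(old_accuracy_pct: float, new_accuracy_pct: float) -> float:
    """Fraction by which the error rate (100 - accuracy) shrank."""
    old_error = 100.0 - old_accuracy_pct
    new_error = 100.0 - new_accuracy_pct
    if old_error <= 0:
        raise ValueError("old accuracy must be below 100%")
    return (old_error - new_error) / old_error


# Accuracy figures as quoted in the text: (before, after).
benchmarks = {
    "CharXiv Reasoning": (80.3, 88.7),
    "ScreenSpot-Pro": (64.2, 86.3),
}
for name, (old, new) in benchmarks.items():
    print(f"{name}: error rate down {relative_error_reduction(old, new):.0%}")
```

The CharXiv Reasoning error rate falls by about 43% and the ScreenSpot-Pro error rate by about 62%, which averages out to the "roughly half" claim.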

If you’re building agents that process screenshots, analyze dashboards, or extract information from visual documents, that’s a significant improvement. SmythOS workflows that incorporate the GenAI LLM component with vision inputs become substantially more useful.

Consider an agent that monitors competitor pricing by analyzing screenshot captures of their websites. Or a quality assurance bot that verifies UI elements match design specs. Or a financial analysis agent that interprets charts and graphs from quarterly reports.

All of these become more viable with GPT-5.2’s improved spatial understanding.

The Professional Work Angle

OpenAI introduced a benchmark called GDPval that tests knowledge work tasks across 44 occupations. According to their official release, GPT-5.2 Thinking wins or ties against industry professionals 70.9% of the time on well-specified tasks like creating presentations, building spreadsheets, and producing reports.

More importantly, it does this at more than 11 times the speed and less than 1% of the cost of human professionals.

For SmythOS users building internal process automation, this frames the opportunity clearly. Agents powered by GPT-5.2 can handle legitimate knowledge work: not just chat responses, but actual artifacts like documents, spreadsheets, and presentations.

SmythOS already supports scheduled and bulk work, allowing you to give agents tasks that run automatically. Combine that with GPT-5.2’s improved professional capabilities, and you have a real path to meaningful automation of knowledge work.

Pricing and Access

According to OpenAI’s pricing page, GPT-5.2 is priced at $1.75 per million input tokens and $14 per million output tokens. That’s higher than GPT-5.1’s $1.25/$10 rates, but OpenAI claims improved token efficiency means the actual cost of achieving a given quality level is lower.

Cached inputs get a 90% discount at $0.175 per million tokens. The Pro tier runs $21 input and $168 output per million tokens for the most demanding workloads.

For context, that puts OpenAI roughly in line with Google’s Gemini 3 Pro at $2/$12 per million tokens. Anthropic’s Claude Opus 4.5 remains pricier at $5/$25.
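To make the GPT-5.2 versus GPT-5.1 comparison concrete, here is a small cost sketch built from the per-million-token rates quoted above. The request size is hypothetical, and no cached-input rate is given for GPT-5.1, so the sketch leaves it unset rather than guessing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Pricing:
    """Rates in USD per million tokens."""
    input_rate: float
    output_rate: float
    cached_input_rate: Optional[float] = None  # None when no rate is quoted


GPT_5_2 = Pricing(input_rate=1.75, output_rate=14.00, cached_input_rate=0.175)
GPT_5_1 = Pricing(input_rate=1.25, output_rate=10.00)


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int,
                 cached_input_tokens: int = 0) -> float:
    """Cost in USD of one request at the given rates."""
    if cached_input_tokens and pricing.cached_input_rate is None:
        raise ValueError("no cached-input rate known for this model")
    cached_rate = pricing.cached_input_rate or 0.0
    total = (input_tokens * pricing.input_rate
             + cached_input_tokens * cached_rate
             + output_tokens * pricing.output_rate)
    return total / 1_000_000


# Hypothetical request: 20,000 fresh input tokens and 2,000 output tokens.
new_cost = request_cost(GPT_5_2, 20_000, 2_000)
old_cost = request_cost(GPT_5_1, 20_000, 2_000)
break_even = old_cost / new_cost  # share of tokens GPT-5.2 may use to cost the same

print(f"GPT-5.2: ${new_cost:.3f}  GPT-5.1: ${old_cost:.3f}")
print(f"break-even token share: {break_even:.1%}")
```

Both rates rise by the same 40%, so GPT-5.2 would need to finish the same task in about 71% of the tokens (roughly 29% fewer) before the token-efficiency argument pays for itself.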

Getting Started

If you’re already building on SmythOS, the upgrade path is straightforward. SmythOS supports multiple LLM providers through connectors, and GPT-5.2 is available now through OpenAI’s API.

You can:

- Update your GenAI LLM components to use the new model

- Test existing workflows with the upgraded model to measure improvements

- Build new agents that leverage GPT-5.2’s enhanced tool calling and long-context capabilities

- Use the vision improvements for image-heavy workflows

- Deploy agents via multiple channels, including API, web chat, and voice assistants
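For the "test existing workflows" step, one simple approach is to run the same set of test cases through the current and the upgraded setup and compare pass rates. The sketch below is a generic harness, not a SmythOS API: `Runner`, `pass_rate`, and the two stand-in workflows are hypothetical names, and in practice each runner would call your deployed agent.

```python
from typing import Callable, Sequence, Tuple

Runner = Callable[[str], str]   # takes a prompt, returns the workflow's final output
Check = Callable[[str], bool]   # returns True when an output is acceptable


def pass_rate(run: Runner, cases: Sequence[Tuple[str, Check]]) -> float:
    """Share of test cases whose output passes its check; crashes count as failures."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = 0
    for prompt, check in cases:
        try:
            if check(run(prompt)):
                passed += 1
        except Exception:
            pass  # a crashed run is treated as a failed case
    return passed / len(cases)


# Stand-in workflows so the sketch runs offline; replace with real agent calls.
def current_workflow(prompt: str) -> str:
    return "refund issued" if "refund" in prompt else "unknown"


def candidate_workflow(prompt: str) -> str:
    return "refund issued" if "refund" in prompt else "order shipped"


cases = [
    ("refund order 123", lambda out: "refund" in out),
    ("where is order 456", lambda out: "shipped" in out),
]
print(f"current: {pass_rate(current_workflow, cases):.0%}")
print(f"candidate: {pass_rate(candidate_workflow, cases):.0%}")
```

Keeping the checks as plain functions means the same cases can be rerun unchanged whenever you swap the underlying model.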

Why This Matters

GPT-5.2 changes what’s possible with AI agents. But possibility and production are different things.

That 98.7% tool-calling accuracy? It only matters if your agent can actually chain those tools together, handle failures gracefully, and maintain state across long-running workflows. That’s not something the model provides. That’s infrastructure.
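As an illustration of what "handle failures gracefully" can mean at the smallest scale, here is a generic retry wrapper with exponential backoff. It is a sketch of the general technique, not how SmythOS implements it; `call_with_retries` and `flaky_lookup` are hypothetical names, and the sleep function is injectable so the example runs without waiting.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def call_with_retries(tool: Callable[[], T], attempts: int = 3,
                      base_delay: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call tool(), retrying on any exception with exponentially growing delays."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return tool()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the last error to the caller
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")


# Hypothetical tool that fails twice before succeeding.
calls = {"count": 0}


def flaky_lookup() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "order 123: shipped"


delays: List[float] = []
result = call_with_retries(flaky_lookup, attempts=3, sleep=delays.append)
print(result, delays)
```

A production version would usually retry only on errors known to be transient and add jitter to the delays; this sketch retries on everything to stay short.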

That near-perfect recall out to 256k tokens? Useful only if you have a system that can ingest documents, chunk them intelligently, and route the right context to the right agent at the right time. The model holds the context. Something else has to manage it.
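The chunking part of that pipeline can be sketched in a few lines. This is a deliberately naive version that splits on whitespace with a fixed overlap, all of which are assumptions for illustration; real systems typically split on tokens or on document structure such as headings and clauses.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into word-based chunks where neighbours share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


sample = " ".join(str(i) for i in range(10))
print(chunk_words(sample, chunk_size=4, overlap=1))
```

The overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks, at the cost of some duplicated tokens.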

And those 30% fewer hallucinations? Still means roughly 6% of responses could be wrong. In production, you need observability to catch those errors, audit trails to understand what happened, and guardrails to prevent bad outputs from reaching customers.
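To put those two figures side by side, here is a short worked calculation. It takes the roughly 6% figure and the 30% relative reduction from the text as given; the 10,000-response volume is a hypothetical workload.

```python
def implied_baseline(new_error_rate: float, relative_reduction: float) -> float:
    """Earlier error rate implied by a new rate and a relative reduction."""
    if not 0.0 <= relative_reduction < 1.0:
        raise ValueError("relative_reduction must be in [0, 1)")
    return new_error_rate / (1.0 - relative_reduction)


def expected_flawed_responses(volume: int, error_rate: float) -> float:
    """Expected number of responses containing an error at a given volume."""
    return volume * error_rate


new_rate = 0.06  # the "roughly 6%" figure from the text
baseline = implied_baseline(new_rate, 0.30)
flawed = expected_flawed_responses(10_000, new_rate)  # hypothetical volume

print(f"implied earlier error rate: {baseline:.1%}")
print(f"expected flawed responses per 10,000: {flawed:.0f}")
```

At that volume, about 600 responses would still contain some error, down from an implied rate of about 8.6%, which is why the monitoring layer matters.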

This is where SmythOS fits. Not as a replacement for GPT-5.2, but as the layer that makes GPT-5.2 actually usable at scale. The Smyth Runtime Environment handles the orchestration, memory, security, and monitoring that turn a capable model into a reliable system.

When you build in Agent Studio, you’re not just connecting GPT-5.2 to some APIs. You’re defining workflows that self-heal when tools fail, that persist state across sessions, that enforce access controls on sensitive operations. The model got smarter. SmythOS makes sure that intelligence actually ships.

Try It Yourself

GPT-5.2 is available now. If you want to see what 98.7% tool-calling accuracy and 256k context actually feel like in a production workflow, SmythOS is the fastest way to find out.

Build an agent in Agent Studio. Connect it to GPT-5.2. Deploy it. See what breaks, what works, and what you can actually ship.

The model is ready. The question is whether your infrastructure is.

Star us on GitHub.

Join our Discord community. Questions about integrating GPT-5.2 with your existing workflows? Our team can help.