In the industrial sector, operational expertise often outpaces digital presence. For a leading recycling company in waste management, this gap was becoming a liability: on the ground they were an industry leader, but online they were invisible.

Their website traffic was stagnant, and their small internal team could not sustain the content velocity required to compete for high-volume keywords. They didn’t just need a few blog posts; they needed a complete digital overhaul.

To solve this, they partnered with us to deploy a SmythOS-powered “SEO Heist” system. This wasn’t a standard automation script but a complex multi-agent system designed to handle the entire content lifecycle autonomously, from keyword discovery to final publication and ongoing maintenance.

From Content Bottlenecks to Automated Scale

The challenge was clear: search engines reward freshness, depth, and topical authority. Achieving this manually requires a coordinated team of researchers, writers, editors, and SEO specialists.

The client needed to bypass the limitations of human scaling. They required a system that could emulate a full marketing department, producing high-quality, media-rich, and interlinked content without human intervention.

Technical Deep Dive: Orchestrating the Agent Swarm

We utilized SmythOS to architect a modular system where specific “agents” handled distinct cognitive tasks. Instead of overloading a single LLM context window, we broke the workflow into specialized nodes.
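The case study does not show how the SmythOS workflow is wired internally. What follows is therefore only a minimal Python sketch of the general idea, built on stated assumptions. Each agent is modeled as a hypothetical `Agent` record that declares which pieces of shared state it reads and which single key it writes. A plain loop then runs the agents in order, and the lambdas stand in for real LLM calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ArticleState:
    """Hypothetical shared record that every stage reads from and writes to."""
    topic: str
    data: dict = field(default_factory=dict)


@dataclass
class Agent:
    name: str
    needs: tuple[str, ...]       # keys this agent reads from the shared state
    produces: str                # the one key this agent writes
    step: Callable[..., object]  # the agent's narrow task (an LLM call in practice)

    def run(self, state: ArticleState) -> ArticleState:
        # Hand the agent only the inputs it declared, not the whole history,
        # so each call works with a small, focused context.
        inputs = {key: state.data[key] for key in self.needs}
        state.data[self.produces] = self.step(state.topic, **inputs)
        return state


def run_pipeline(agents: list[Agent], state: ArticleState) -> ArticleState:
    for agent in agents:
        state = agent.run(state)
    return state


# Stub steps standing in for real model calls (illustrative only).
pipeline = [
    Agent("Keyword Researcher", (), "keywords",
          lambda topic: [topic, f"{topic} cost"]),
    Agent("Outliner", ("keywords",), "outline",
          lambda topic, keywords: [f"What is {k}?" for k in keywords]),
    Agent("Penn", ("outline",), "draft",
          lambda topic, outline: "\n".join(outline)),
]
result = run_pipeline(pipeline, ArticleState("scrap metal recycling"))
print(result.data["draft"])
```

Passing each agent only the keys it declares is what keeps every call's context small. A production system would also need retries, validation, and branching, and this sketch omits all three.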

The Creation Pipeline

The workflow begins with the Keyword Researcher, an agent that identifies high-potential search terms and passes them downstream to the Outliner. The Outliner analyzes the top-ranking pages on the search engine results pages (SERPs) for those keywords. It then takes the primary and secondary keywords and builds a comprehensive, topically relevant outline that ensures semantic coverage.
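The post does not specify how the Outliner turns SERP analysis into an outline. One plausible approach is sketched below as an assumption, not as the client's actual logic. It keeps the headings that several top-ranking pages share, then adds a section for any secondary keyword those headings miss. `build_outline`, `min_pages`, and the sample headings are all hypothetical.

```python
from collections import Counter


def normalize(heading):
    """Case- and whitespace-insensitive key for comparing headings."""
    return " ".join(heading.lower().split())


def build_outline(primary, secondary, serp_headings, min_pages=2):
    """Outline = headings shared across SERP pages, plus uncovered secondary keywords."""
    counts = Counter()
    original = {}  # first spelling seen for each normalized heading
    for page in serp_headings:
        for key in {normalize(h) for h in page}:  # count each heading once per page
            counts[key] += 1
        for heading in page:
            original.setdefault(normalize(heading), heading.strip())
    # Keep headings that several top-ranking pages agree on, most common first.
    shared = [key for key, n in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
              if n >= min_pages]
    sections = [original[key] for key in shared]
    # Add a section for each secondary keyword no shared heading already covers.
    for keyword in secondary:
        if not any(normalize(keyword) in key for key in shared):
            sections.append(keyword[:1].upper() + keyword[1:])
    return {"h1": primary, "h2": sections}


# Hypothetical headings scraped from three top-ranking pages.
serp_headings = [
    ["What Is Scrap Metal Recycling?", "Types of Scrap Metal", "Benefits"],
    ["Types of scrap metal", "How Scrap Metal Is Processed", "Benefits"],
    ["Benefits", "Types of Scrap Metal"],
]
outline = build_outline("Scrap Metal Recycling",
                        ["scrap metal prices", "types of scrap metal"],
                        serp_headings)
print(outline)
```

Requiring agreement across several pages filters out one-off headings. The secondary-keyword pass then guarantees that every requested term gets at least one section.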

Once the structure is set, Penn takes over. Penn is the dedicated writer agent that accepts the outline, drafts the full article, and interfaces with WordPress to create the initial post object.
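The post does not describe how Penn “interfaces with WordPress.” Suppose it uses the standard WordPress REST API with an Application Password (available since WordPress 5.6). In that case, creating the initial post amounts to an authenticated `POST` to `/wp-json/wp/v2/posts`. The sketch below only builds the request, so it can be inspected offline. The site URL, user name, and password are placeholders, and `build_create_post_request` is a hypothetical helper.

```python
import base64
import json
import urllib.request

# Post statuses accepted by the WordPress REST API.
VALID_STATUSES = {"publish", "future", "draft", "pending", "private"}


def build_create_post_request(site_url, username, app_password, title,
                              html_content, status="draft"):
    """Build (but do not send) an authenticated request that creates a WordPress post."""
    if status not in VALID_STATUSES:
        raise ValueError(f"unsupported status: {status!r}")
    endpoint = site_url.rstrip("/") + "/wp-json/wp/v2/posts"
    payload = {"title": title, "content": html_content, "status": status}
    # Application Passwords are sent with HTTP Basic authentication.
    token = base64.b64encode(f"{username}:{app_password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


# Placeholder site and credentials; nothing is sent over the network here.
request = build_create_post_request(
    "https://example.com", "penn-bot", "xxxx xxxx xxxx xxxx",
    title="How Scrap Metal Recycling Works",
    html_content="<p>Draft body from Penn...</p>",
)
print(request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` returns the created post as JSON, including its `id`, which later agents would need in order to update the same post. Defaulting to `"draft"` keeps anything Penn creates off the live site until a later review step changes its status.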

The Enrichment Layer

Text alone is rarely enough to capture modern search intent. To address this, we deployed a trio of enrichment agents:

- Artie: Scours the web for relevant stock photography or generates custom AI images to match the context.

- Tweety: Queries live social data to fetch relevant tweets, adding social proof and expert commentary to the piece.

- Tabby: Parses the article’s data points to construct HTML tables, improving readability and snippet capture potential.
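The sketch below illustrates only Tabby's final step. It renders data points that have already been extracted as an HTML table, escaping every cell so that stray characters such as `&` or `<` cannot break the markup. The post does not describe how Tabby extracts the data. `build_html_table` and the sample rows are hypothetical placeholders.

```python
from html import escape


def build_html_table(headers, rows, caption=None):
    """Render a header row plus data rows as an escaped HTML table."""
    lines = ["<table>"]
    if caption:
        lines.append(f"  <caption>{escape(caption)}</caption>")
    header_cells = "".join(f"<th>{escape(str(h))}</th>" for h in headers)
    lines.append(f"  <thead><tr>{header_cells}</tr></thead>")
    lines.append("  <tbody>")
    for row in rows:
        # Reject ragged rows instead of emitting a misaligned table.
        if len(row) != len(headers):
            raise ValueError(f"expected {len(headers)} cells, got {len(row)}: {row!r}")
        cells = "".join(f"<td>{escape(str(cell))}</td>" for cell in row)
        lines.append(f"    <tr>{cells}</tr>")
    lines.append("  </tbody>")
    lines.append("</table>")
    return "\n".join(lines)


# Placeholder rows for illustration only, not client data.
table = build_html_table(
    ["Material", "Example items"],
    [["Metals", "Cans & foil"], ["Electronics", "Phones, laptops"]],
    caption="Sample material categories",
)
print(table)
```

Using real `<thead>`/`<tbody>` markup, rather than styled paragraphs, is what makes the table easy for search engines to parse and potentially lift into a snippet.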

Orchestration and Optimization

The magic happens with Marshall, the orchestrator agent. Marshall accepts the raw text from Penn and the media assets from the enrichment layer, intelligently inserting the images, tweets, and tables into the article flow where they add the most value.
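The post does not say how Marshall decides where an asset “adds the most value.” Assume, for illustration, a deliberately simple stand-in: place each asset after the paragraph that shares the most words with the asset's description. `place_assets` and the word-overlap scoring are hypothetical. An LLM-based orchestrator could make this judgment with far more nuance.

```python
import re


def tokens(text):
    """Lower-cased word set, ignoring any HTML tags."""
    plain = re.sub(r"<[^>]+>", " ", text)
    return set(re.findall(r"[a-z0-9]+", plain.lower()))


def place_assets(paragraphs, assets):
    """Insert each (description, html) asset after its best-matching paragraph."""
    if not paragraphs:
        raise ValueError("need at least one paragraph")
    slots = [[] for _ in paragraphs]
    for description, html in assets:
        wanted = tokens(description)
        # max() returns the first index among ties, so earlier paragraphs win.
        best = max(range(len(paragraphs)),
                   key=lambda i: len(wanted & tokens(paragraphs[i])))
        slots[best].append(html)
    article = []
    for paragraph, extras in zip(paragraphs, slots):
        article.append(paragraph)
        article.extend(extras)
    return article


# Placeholder article text and assets.
paragraphs = [
    "<p>Aluminum cans can be recycled again and again.</p>",
    "<p>Copper wire is priced by grade.</p>",
]
assets = [
    ("Table of copper wire grades", "<table><!-- from Tabby --></table>"),
    ("Photo of crushed aluminum cans", '<img src="cans.jpg" alt="Crushed aluminum cans">'),
]
for block in place_assets(paragraphs, assets):
    print(block)
```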

Following assembly, Linc scans the draft and the client’s existing sitemap to inject internal and external links, building the site’s link graph. Finally, Finn acts as the Editor-in-Chief: Finn reviews the article for tone and accuracy, performs final SEO checks, and decides whether to publish it live or save it as a WordPress draft for human review.
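The sketch below illustrates two of those steps, each under explicit assumptions. For Linc, it reads URLs from a standard XML sitemap (the sitemaps.org 0.9 namespace) and derives an anchor phrase from each URL's slug. It then links the first plain-text mention of that phrase, skipping text that is already inside a link. For Finn, it reduces the publish-or-draft decision to one rule: publish only when every check passes. The slug-to-phrase heuristic, the check names, and all function names are hypothetical. The code also assumes simple paragraph HTML, with no target phrases hidden inside other tags' attributes.

```python
import re
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_targets(sitemap_xml):
    """Map an anchor phrase derived from each URL's slug to that URL (hypothetical heuristic)."""
    root = ET.fromstring(sitemap_xml)
    targets = {}
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = loc.text.strip()
        slug = urlparse(url).path.rstrip("/").rsplit("/", 1)[-1]
        if slug:
            targets[slug.replace("-", " ")] = url
    return targets


def link_first(text, phrase, url):
    """Wrap the first plain-text mention of `phrase` in a link, skipping existing <a> elements."""
    pattern = re.compile(r"<a\b[^>]*>.*?</a>|\b(" + re.escape(phrase) + r")\b",
                         re.IGNORECASE | re.DOTALL)
    for match in pattern.finditer(text):
        if match.group(1) is not None:  # matched the phrase, not an existing link
            start, end = match.span(1)
            linked = f'<a href="{url}">{match.group(1)}</a>'
            return text[:start] + linked + text[end:], True
    return text, False


def add_internal_links(text, targets, current_url=None, max_links=3):
    added = 0
    # Longer phrases first, so a specific page wins over a more general one.
    for phrase, url in sorted(targets.items(), key=lambda item: -len(item[0])):
        if added >= max_links or url == current_url:
            continue  # respect the link budget and never link a page to itself
        text, linked = link_first(text, phrase, url)
        added += int(linked)
    return text


def finn_status(checks):
    """Publish only when every review check passes; otherwise hold as a draft."""
    return "publish" if all(checks.values()) else "draft"


sitemap = ('<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
           "<url><loc>https://example.com/scrap-metal-prices/</loc></url>"
           "<url><loc>https://example.com/copper-recycling/</loc></url>"
           "</urlset>")
draft = ("Check current scrap metal prices before you sell. "
         "Copper recycling pays more than scrap metal prices suggest.")
linked = add_internal_links(draft, sitemap_targets(sitemap))
status = finn_status({"tone_ok": True, "title_has_keyword": True, "meta_description_ok": False})
print(linked)
print(status)
```

Linking only the first mention of each phrase, with a small per-article budget, avoids the over-linked look that a naive find-and-replace would produce.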

The Maintenance Loop

Content decay is a major SEO issue, so we introduced Refresher. This agent proactively fetches existing articles from WordPress and analyzes them against current search trends to identify content gaps. It then writes new sections to fill those gaps and updates the post, keeping the library evergreen.
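There are many ways to approximate “content gaps.” The following sketch assumes the trending subtopics have already been gathered, for example from current top-ranking pages. It then flags each subtopic that no H2/H3 heading in the existing article covers by at least half of its words. `find_content_gaps` and the 50% threshold are hypothetical choices, not the Refresher's documented behavior.

```python
import re
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect the text of every <h2> and <h3> in an HTML fragment."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._buffer = None  # None while outside a heading

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in ("h2", "h3") and self._buffer is not None:
            self.headings.append("".join(self._buffer).strip())
            self._buffer = None

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)


def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def find_content_gaps(article_html, trending_subtopics, threshold=0.5):
    """Return the subtopics that no existing heading covers (hypothetical heuristic)."""
    collector = HeadingCollector()
    collector.feed(article_html)
    collector.close()
    heading_words = [words(h) for h in collector.headings]
    gaps = []
    for topic in trending_subtopics:
        topic_words = words(topic)
        if not topic_words:
            continue
        # Share of the subtopic's words found in its best-matching heading.
        coverage = max((len(topic_words & hw) / len(topic_words) for hw in heading_words),
                       default=0.0)
        if coverage < threshold:
            gaps.append(topic)
    return gaps


article = ("<h2>Types of Scrap Metal</h2><p>Ferrous and non-ferrous metals...</p>"
           "<h2>How to Prepare Copper Wire</h2><p>Strip the insulation...</p>")
gaps = find_content_gaps(article, ["scrap metal types", "current copper prices",
                                   "e-waste drop-off rules"])
print(gaps)
```

Once the new sections are written, WordPress's REST API accepts updates to an existing post at `/wp-json/wp/v2/posts/<id>`.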

The Impact: Dominating the Niche

The deployment of this agent swarm fundamentally changed the client’s business trajectory, delivering results across traditional search and the new wave of AI search.

1. Traditional Search Dominance

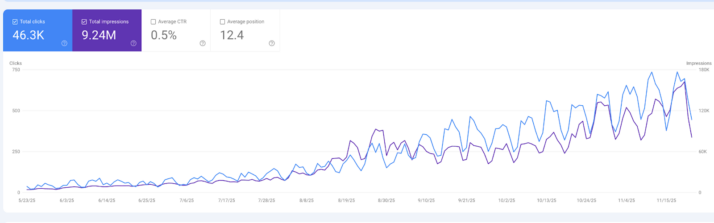

The data above illustrates a “hockey stick” growth curve. In just six months, the site exploded from negligible traffic to 9.24 million impressions and 46.3K clicks.

The agents’ content achieved an average position of 12.4, showing that it consistently ranked on the first or second page of Google.
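For context, the two headline figures imply an aggregate click-through rate of roughly 0.5%. The click count is itself reported in rounded form (46.3K), so the result is approximate:

```python
impressions = 9_240_000
clicks = 46_300  # reported as "46.3K", so already rounded
ctr = clicks / impressions
print(f"Aggregate CTR: {ctr:.2%}")  # → Aggregate CTR: 0.50%
```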

2. Future-Proofing with AI Citations

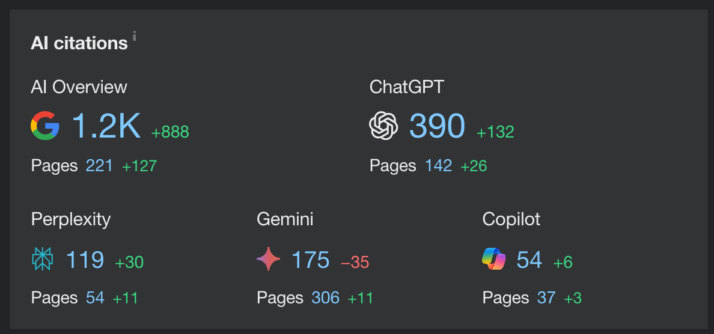

Crucially, the structured nature of the content, rich with tables, clear definitions, and schema data, made it easy for large language models (LLMs) to parse.

As the metrics above show, the site secured 1,200+ citations in Google AI Overviews (an increase of 888 pages). This positions the brand to capture the growing number of “zero-click” searches, in which users get their answers directly from the AI.
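“Schema data” typically means schema.org structured data, though the post does not say which types were used. As one assumed example, FAQ-style content can be described by a `FAQPage` object embedded as JSON-LD. `faq_jsonld` and the sample question are hypothetical. The `@context`, `@type`, `mainEntity`, and `acceptedAnswer` keys follow the schema.org vocabulary.

```python
import json


def faq_jsonld(pairs):
    """Wrap (question, answer) pairs in a schema.org FAQPage JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so answer text can never close the <script> element early;
    # "\/" is a valid JSON escape, so parsers still read it as "/".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


markup = faq_jsonld([
    ("Can aluminum cans be recycled?", "Yes. Placeholder answer text for illustration."),
])
print(markup)
```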

3. Real-World Business Outcomes

This digital visibility translated into tangible business results. As organic traffic climbed past 11,000 monthly visits, the recycling company reported a significant surge in direct inquiries.

More businesses and individuals began reaching out with specific recycling needs and technical questions, and many attributed their discovery of the firm directly to the informational articles the agents produced.

Final Takeaways

This project demonstrates that the future of SEO is agentic. By moving away from simple text generation and embracing a modular architecture with SmythOS, we were able to replicate the nuance of a human content team at a speed and scale that is simply impossible to match manually.

The success of the “SEO Heist” proves that when you combine specialized agents such as researchers, writers, orchestrators, and editors, you don’t just get more content; you get a smarter, more resilient digital footprint that drives real-world revenue.

Want to know how SmythOS can help your organization the way it helped this partner? Reach out to our team for more information. Want to get involved with the SmythOS community? Join us on Discord, check out our GitHub repos, and help build the future of agentic AI.