Moonshot AI open‑sourced Kimi K2 on 11 July 2025. The goal is simple: hand off work that usually requires whole teams, such as debugging code, running data pipelines, and wiring up tools, to a single model built for action.

Kimi K2 ships in two checkpoints, namely Kimi‑K2‑Base and Kimi‑K2‑Instruct. Let’s take a look at what this AI model has to offer.

What Is the Kimi K2 Open-Source Model? Inside the Stack

Moonshot promises “advanced agentic intelligence” with Kimi‑K2‑Base for custom fine‑tuning and Kimi‑K2‑Instruct for immediate production use. Both variants share the same architecture.

K2 is a Mixture‑of‑Experts transformer model with 1 trillion total parameters, but a router lights up only 32 billion on any token.

The network spans 61 transformer layers with 384 experts available to the router. The router picks eight experts per token, so each forward pass costs roughly what a 32‑billion‑parameter dense model would in latency and compute.
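To make the routing step concrete, here is a minimal sketch of generic top‑k gating using the 384‑expert, eight‑per‑token figures above. The softmax gating and the `route_token` helper are illustrative assumptions; Moonshot's actual router may score and normalize experts differently.

```python
import math
import random

NUM_EXPERTS = 384  # experts available to the router (figure from the article)
TOP_K = 8          # experts selected per token (figure from the article)

def route_token(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts.

    Generic top-k softmax gating; an illustration, not Kimi K2's real router.
    """
    # Indices of the top_k largest router scores.
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:top_k]
    # Softmax over only the selected scores so the weights sum to 1.
    max_logit = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - max_logit) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# Random scores stand in for the router's output on one token.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route_token(logits)
```

Only the eight experts in `selected` would run for this token; the other 376 are skipped, which is where the compute savings come from.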

The model keeps 64 attention heads, uses SwiGLU activations, and has a 160k‑token vocabulary. Thanks to Multi‑head Latent Attention (MLA), the context window stretches to 128k tokens, so the model can read an entire codebase or multi‑document brief in one go.

Training at this scale stayed stable because Moonshot swapped the usual AdamW for its Muon optimizer, paired with a MuonClip tweak that clips runaway attention logits and flattens loss spikes. What does this mean? No training crashes, even at trillion‑parameter scale.

Taken together, these design choices give K2 trillion‑parameter capacity while keeping inference costs in line with those of mid-sized dense models. That efficiency is what unlocks its “agentic” edge in coding, tool use, and long‑horizon reasoning, all without locking you into a black‑box vendor.

How Does It Differ from Other Open Source Models?

So what’s new here, and how does it compare to other heavyweight models on the market? Here’s the rundown of exactly what Kimi K2 offers, and why it matters.

Completely Open and Customizable

Unlike proprietary models such as GPT-4 or Claude, Kimi K2 arrives fully open under a permissive MIT-style license.

In other words, you can inspect every parameter and fine-tune it for your specific use cases. Or better still, host the model yourself. Moonshot also ensured compatibility by offering an OpenAI- and Anthropic-compatible API.
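As a rough illustration of what "OpenAI-compatible" means in practice, the sketch below builds (but does not send) a chat completion request in the OpenAI wire format using only the standard library. `BASE_URL`, `API_KEY`, and `MODEL_ID` are placeholders, not real values; substitute whatever your own server or provider documents.

```python
import json
import urllib.request

# Placeholders (assumptions): replace with the endpoint, key, and model id
# of whichever OpenAI-compatible server you are actually using.
BASE_URL = "https://example.invalid/v1"
API_KEY = "YOUR_API_KEY"
MODEL_ID = "your-kimi-k2-model-id"

def build_chat_request(prompt):
    """Build, without sending, an OpenAI-style chat completion request."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )

request = build_chat_request("Summarize this stack trace.")
# To send it against a real endpoint: urllib.request.urlopen(request)
```

Because the request shape is the same as for other OpenAI-style services, existing client code should in principle need only a different base URL and model name.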

Kimi K2 provides genuine transparency and complete ownership of your AI pipeline.

Mixture-of-Experts Architecture

As we said earlier, Kimi K2 incorporates a “Mixture-of-Experts” (MoE) approach with 1 trillion parameters. However, only 32 billion parameters activate per token, managed efficiently by an internal routing mechanism.

This means you gain the knowledge and reasoning abilities of a trillion-parameter model while incurring only a fraction of the typical computational cost. It’s roughly equivalent to running a much smaller, dense model.
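The arithmetic behind that "fraction" is short enough to write out. This snippet uses only the figures quoted in this article (1 trillion total parameters, 32 billion active, eight of 384 experts per token).

```python
TOTAL_PARAMS = 1_000_000_000_000  # 1 trillion total parameters
ACTIVE_PARAMS = 32_000_000_000    # 32 billion activated per token
NUM_EXPERTS = 384
EXPERTS_PER_TOKEN = 8

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS     # share of weights used per token
expert_fraction = EXPERTS_PER_TOKEN / NUM_EXPERTS  # share of experts used per token

print(f"Parameters active per token: {active_fraction:.1%}")  # 3.2%
print(f"Experts active per token:    {expert_fraction:.1%}")  # 2.1%
```

The active-parameter share is somewhat higher than the expert share, presumably because attention and other non-expert weights run on every token regardless of routing.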

This design differs from traditional “dense” models, which use every parameter for every token. Moonshot is offering large-model capabilities without an equally large infrastructure burden.

MuonClip Optimizer

Training AI at the trillion-parameter scale is fragile. Instabilities like exploding attention logits can crash training or corrupt model quality halfway through.

To address this, Moonshot introduced MuonClip, an optimizer that builds on their earlier work with Muon. Its core innovation is a technique called qk-clip, which rescales the query and key weight matrices after each optimizer step.

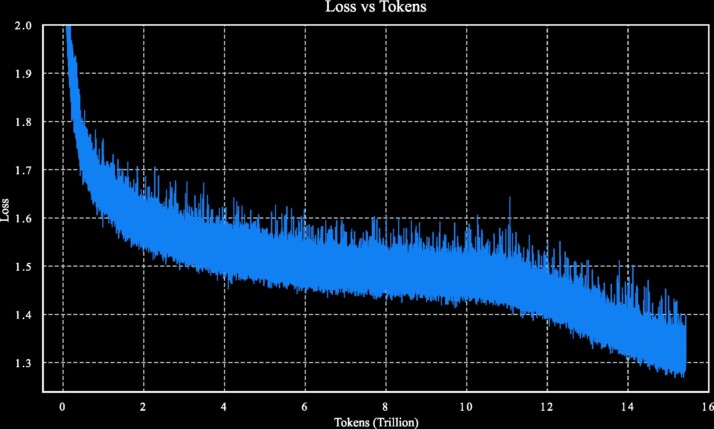

This keeps the attention logits from spiraling out of control during training, a common failure mode in large, sparse models. As a result, Kimi K2 was trained on 15.5 trillion tokens without a single loss spike, a notable technical win at this scale.
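The rescaling idea can be sketched on a toy attention head. This is a simplified illustration, not Moonshot's implementation: the even square-root split between the query and key matrices, the threshold `TAU`, and the tiny 2×2 weights are all assumptions chosen for readability.

```python
import math

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def max_attention_logit(W_q, W_k, tokens):
    """Largest raw q . k score over all token pairs (1/sqrt(d) scaling omitted)."""
    queries = [matvec(W_q, x) for x in tokens]
    keys = [matvec(W_k, x) for x in tokens]
    return max(sum(a * b for a, b in zip(q, k)) for q in queries for k in keys)

def qk_clip(W_q, W_k, observed_max, tau):
    """Shrink W_q and W_k so the largest logit falls back to tau."""
    if observed_max <= tau:
        return W_q, W_k  # logits are in range; leave the weights alone
    # Each matrix is scaled by sqrt(tau / max), so their product,
    # and therefore every logit, is scaled by tau / max.
    scale = math.sqrt(tau / observed_max)
    new_q = [[w * scale for w in row] for row in W_q]
    new_k = [[w * scale for w in row] for row in W_k]
    return new_q, new_k

W_q = [[3.0, 0.0], [0.0, 3.0]]
W_k = [[3.0, 0.0], [0.0, 3.0]]
tokens = [[1.0, 2.0], [2.0, 1.0]]
TAU = 20.0  # illustrative threshold, not Moonshot's setting

before = max_attention_logit(W_q, W_k, tokens)  # 45.0 with these toy weights
W_q, W_k = qk_clip(W_q, W_k, before, TAU)       # would run after an optimizer step
after = max_attention_logit(W_q, W_k, tokens)   # back down to about TAU
```

Because the fix is applied to the weights rather than to the logits themselves, the cap carries over to the forward pass without any change to the attention computation.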

128,000-Token Context

One of the most limiting factors in traditional AI models is context length. Kimi K2 addresses this with Multi-head Latent Attention (MLA), supporting up to 128,000 tokens in a single prompt.

That’s enough to feed an entire codebase, a legal case file, or multiple research papers without breaking things into chunks.
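Before sending a large bundle of files, it can help to check that it fits the window. The sketch below is a rough budget check only: the four-characters-per-token estimate is a common rule of thumb, not Kimi K2's tokenizer, and `fits_in_context` and its `reserve_for_output` default are hypothetical names and values introduced for illustration.

```python
CONTEXT_WINDOW = 128_000  # tokens, per the article

def rough_token_count(text):
    """Crude estimate: about 4 characters per token (an assumption)."""
    return len(text) // 4 + 1

def fits_in_context(documents, reserve_for_output=4_000,
                    count_tokens=rough_token_count):
    """Return (fits, total_tokens) for a list of document strings."""
    total = sum(count_tokens(doc) for doc in documents)
    return total + reserve_for_output <= CONTEXT_WINDOW, total

# Stand-in documents; in practice these would be file contents.
docs = ["def main():\n    pass\n" * 1_000, "README text " * 500]
fits, total = fits_in_context(docs)
```

For real use, pass the model's own tokenizer as `count_tokens` so the count matches what the server will see.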

This removes workarounds, reduces complexity, and makes large-scale reasoning much easier to deploy for developers and teams building real tools.

Deployment-Ready From Day One

Moonshot offers Kimi K2 in block‑FP8 format, optimized for inference and compatible with vLLM, SGLang, KTransformers, and TensorRT‑LLM. They don’t mandate specific hardware. However, the model’s architecture favors multi‑GPU server setups, typically using NVIDIA H100 or H200, for smooth, high‑throughput deployment.

Single‑GPU use is possible but demanding, given the model’s trillion‑parameter MoE design. Still, expert routing and quantization keep Kimi K2 far more efficient than dense models of similar scale. The focus here is practical. Kimi K2 is meant to run in the real world.
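A back-of-the-envelope calculation shows why multi‑GPU servers are the natural fit. It assumes one byte per parameter for FP8 and counts weights only, ignoring the KV cache, activations, and the scale factors that block quantization stores, so treat the result as a lower bound rather than a sizing guide.

```python
import math

TOTAL_PARAMS = 1_000_000_000_000  # 1 trillion parameters
BYTES_PER_PARAM_FP8 = 1           # FP8 stores one byte per weight
GPU_MEMORY_GB = {"H100": 80, "H200": 141}  # per-GPU memory capacity

weights_gb = TOTAL_PARAMS * BYTES_PER_PARAM_FP8 / 1e9  # decimal gigabytes

def min_gpus_for_weights(gpu_name):
    """Lower bound: GPUs needed to hold the weights alone, nothing else."""
    return math.ceil(weights_gb / GPU_MEMORY_GB[gpu_name])

for name in GPU_MEMORY_GB:
    print(f"{name}: at least {min_gpus_for_weights(name)} GPUs "
          f"for ~{weights_gb:.0f} GB of weights")
```

With these assumptions the weights alone come to about 1,000 GB, or at least 13 H100s or 8 H200s, so any single‑GPU setup would have to keep most of the weights outside GPU memory.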

Benchmarks: Kimi K2 Models vs. Others

Kimi K2 Instruct vs. Others

| Category | Benchmark (metric) | Kimi K2 Instruct | Claude Opus 4 | GPT‑4.1 |
|---|---|---|---|---|
| Coding | SWE‑Bench Verified · single‑attempt Pass @ 1 | 65.8 % | 72.5 % | 54.6 % |
| Coding | LiveCodeBench v6 · Pass @ 1 | 53.7 % | 47.4 % | 44.7 % |
| Coding | MultiPL‑E · Pass @ 1 | 85.7 % | 89.6 % | 86.7 % |
| Tool use | Tau2 (retail) · Avg @ 4 | 70.6 % | 81.8 % | 74.8 % |
| Tool use | AceBench · Accuracy | 76.5 % | 75.6 % | 80.1 % |
| Math & STEM | MATH‑500 · Accuracy | 97.4 % | 94.4 % | 92.4 % |
| Math & STEM | ZebraLogic · Accuracy | 89.0 % | 59.3 % | 58.5 % |
| Math & STEM | GPQA‑Diamond · Avg @ 8 | 75.1 % | 74.9 % | 66.3 % |
| General knowledge | MMLU · Exact‑Match | 89.5 % | 92.9 % | 90.4 % |

Let’s give the Base checkpoint its spotlight. For reference, here’s how the raw K2‑Base, before any instruction tuning, stacks up against the strongest open base checkpoints.

Kimi K2 Base vs. Flagship Open Models

| Benchmark (metric) | Kimi K2 Base | DeepSeek‑V3 Base | Qwen 2.5‑72B | Llama‑4 Maverick |
|---|---|---|---|---|
| MMLU · EM (5‑shot) | 87.8 % | 87.1 % | 86.1 % | 84.9 % |
| MMLU‑Pro · EM (5‑shot) | 69.2 % | 60.6 % | 62.8 % | 63.5 % |
| MATH · EM (4‑shot) | 70.2 % | 60.1 % | 61.0 % | 63.0 % |
| GSM8k · EM (8‑shot) | 92.1 % | 91.7 % | 90.4 % | 86.3 % |
| LiveCodeBench v6 · Pass @ 1 | 26.3 % | 22.9 % | 21.1 % | 25.1 % |
| EvalPlus · Pass @ 1 | 80.3 % | 65.6 % | 66.0 % | 65.5 % |
| TriviaQA · EM (5‑shot) | 85.1 % | 84.1 % | 76.0 % | 79.3 % |

Known Limitations and Trade-offs

Moonshot explicitly notes a few known limitations in the current Kimi K2 model:

Excessive Token Generation

For challenging reasoning tasks or situations where tool definitions are unclear, Kimi K2 sometimes generates too many tokens. This can lead to responses being truncated or tool calls being incomplete.
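One practical mitigation is to detect these cases in client code. The sketch below inspects an OpenAI-style chat completion response for a token-limit cutoff and for tool-call arguments that are not valid JSON. It assumes the server returns the OpenAI response shape; the sample response and the `write_file` tool name are hand-written for illustration, not real model output.

```python
import json

def diagnose_response(response):
    """Return a list of problems found in an OpenAI-style chat completion dict."""
    problems = []
    choice = response["choices"][0]
    # "length" means generation stopped because it hit the token limit.
    if choice.get("finish_reason") == "length":
        problems.append("truncated: hit max_tokens")
    for call in choice["message"].get("tool_calls") or []:
        try:
            json.loads(call["function"]["arguments"])
        except json.JSONDecodeError:
            # Arguments were cut off or malformed, so the call cannot be run.
            problems.append(f"incomplete tool call: {call['function']['name']}")
    return problems

# Hand-written example response with a cut-off tool call (hypothetical tool).
sample = {
    "choices": [{
        "finish_reason": "length",
        "message": {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "type": "function",
                "function": {"name": "write_file",
                             "arguments": '{"path": "README'},
            }],
        },
    }]
}

issues = diagnose_response(sample)
```

A caller could use the result to retry with a higher `max_tokens` or with tighter tool descriptions instead of executing a half-formed call.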

Performance Decline With Tool Use

In certain scenarios, enabling tool integration negatively affects the model’s overall performance, causing it to underperform compared to tool-free contexts.

Reduced Effectiveness of One-shot Prompting

When tasked with building complete software projects, Kimi K2 performs worse if given all instructions in a single prompt (one-shot prompting) rather than through a structured, step-by-step agentic workflow.

Hallucination & Stubborn Replying

Users on r/LocalLLaMA report Kimi K2 “frequently made things up… insisted it was correct” even when it wasn’t, refusing to reconsider until challenged. That suggests the model can cling to incorrect assumptions rather than self-correct reflexively.

Slow Token Generation

In production environments (e.g., Cursor + Fireworks), users observed that K2’s token generation was significantly slower than competitors.

One user claimed that requests like “creating two readme.md files” could take 15–17 minutes or even fail outright. This highlights a gap between theoretical throughput and real-world speed.

Note that Moonshot openly acknowledges some of these limitations and says it is working to resolve them in upcoming releases. It also welcomes continued feedback as it refines the model.

Bottom line

Kimi K2 swaps the comfort of a paid API for total control, stronger coding chops, and a genuine tool‑use brain. If you have the appetite for ownership, it’s ready to work hard for you.

If not, keep it on your radar. Open models evolve quickly, and the next minor release might hit your price‑performance sweet spot.