Knowledge Graphs and Natural Language Processing

Imagine having a vast network of interconnected information that helps computers understand and process human language with remarkable accuracy. That’s exactly what knowledge graphs bring to Natural Language Processing (NLP), transforming how machines interpret and respond to our communications.

Think of knowledge graphs as sophisticated digital maps that connect facts, concepts, and relationships in ways that mirror human understanding. When integrated with NLP systems, these graphs serve as powerful frameworks that enable everything from more intelligent search results to virtual assistants that understand context and nuance in our questions.

Knowledge graphs do far more than store data. They change how AI systems process language, making interactions more natural and responses more accurate.

The beauty of this integration lies in its practical applications. From helping healthcare systems understand patient records to enabling e-commerce platforms to provide better product recommendations, knowledge graphs power the AI revolution in ways that touch our daily lives.

At the intersection of data science and artificial intelligence, knowledge graphs are more than a technological advancement: they are a bridge between human language and machine understanding. Let’s look at how these systems work and how they are improving machine understanding.

Semantic Relationships in Knowledge Graphs

Knowledge graphs represent a significant leap forward in how machines understand and process human language. By establishing meaningful connections between entities, these structures move beyond simple word associations to capture the nuanced ways concepts relate to each other in the real world.

Take, for example, the relationship between a writer and their published works. A basic database might list authors and books separately, but a knowledge graph can express that Harper Lee wrote To Kill a Mockingbird, which was published in 1960 and takes place in Alabama. These semantic relationships provide crucial context that enhances natural language processing applications in multiple ways.
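As a minimal sketch, the Harper Lee example can be written as subject-predicate-object triples. The predicate names (`wrote`, `published_in`, `takes_place_in`) and the helper `objects_of` are illustrative choices, not a standard vocabulary or a specific graph database API.

```python
from collections import defaultdict

# Each fact is a (subject, predicate, object) triple from the example above.
triples = [
    ("Harper Lee", "wrote", "To Kill a Mockingbird"),
    ("To Kill a Mockingbird", "published_in", "1960"),
    ("To Kill a Mockingbird", "takes_place_in", "Alabama"),
]

# Index outgoing edges by subject so lookups do not scan the whole list.
outgoing = defaultdict(list)
for subject, predicate, obj in triples:
    outgoing[subject].append((predicate, obj))


def objects_of(subject, predicate):
    """Return every object linked to `subject` by `predicate`."""
    return [o for p, o in outgoing.get(subject, []) if p == predicate]


# Follow two hops: author -> book -> publication year and setting.
book = objects_of("Harper Lee", "wrote")[0]
print(book, objects_of(book, "published_in"), objects_of(book, "takes_place_in"))
```

Unlike two separate author and book tables, the link between them is itself a piece of data that can be queried.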

One of the most powerful applications of semantic relationships in knowledge graphs is their ability to improve search accuracy. According to research on knowledge graph applications, when users search for ‘budget-friendly laptops,’ systems can understand this is semantically equivalent to searches for ‘affordable computers’ or ‘cheap notebooks,’ delivering more relevant results.
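One simple way to picture this is query expansion over equivalence edges. The sketch below assumes the "semantically equivalent" links are stored as undirected edges and treated as transitive, which is a simplification. Production search systems may rely on embeddings or curated synonym sets instead.

```python
from collections import defaultdict, deque

# Equivalence edges taken from the search example above.
equivalences = [
    ("budget-friendly laptops", "affordable computers"),
    ("affordable computers", "cheap notebooks"),
]

# Store each edge in both directions because equivalence is symmetric.
neighbors = defaultdict(set)
for a, b in equivalences:
    neighbors[a].add(b)
    neighbors[b].add(a)


def expand_query(query):
    """Breadth-first search for every phrase reachable from `query`."""
    seen = {query}
    queue = deque([query])
    while queue:
        term = queue.popleft()
        for other in neighbors.get(term, ()):
            if other not in seen:
                seen.add(other)
                queue.append(other)
    return sorted(seen)


print(expand_query("budget-friendly laptops"))
```

A query with no known equivalents simply comes back unchanged, so the expansion step is safe to run on every search.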

Knowledge graphs also enable more sophisticated question-answering systems. Rather than just matching keywords, these systems can traverse the web of relationships to find indirect connections and implicit knowledge. This allows them to answer complex queries like ‘Which authors wrote about social justice in the American South during the 1960s?’ by following chains of semantic relationships between concepts.
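The chain of relationships behind that question can be sketched as a small multi-hop lookup. The `has_theme` and `part_of` facts below are assumptions added so the chain can be followed end to end, and "Example Author" is a hypothetical control entry. A real system would also need to parse the question into these constraints, which this sketch does not attempt.

```python
triples = {
    ("Harper Lee", "wrote", "To Kill a Mockingbird"),
    ("To Kill a Mockingbird", "published_in", "1960"),
    ("To Kill a Mockingbird", "takes_place_in", "Alabama"),
    # Assumed facts, added only to complete the chain for this illustration.
    ("To Kill a Mockingbird", "has_theme", "social justice"),
    ("Alabama", "part_of", "American South"),
    # Hypothetical control entry that should not match the query.
    ("Example Author", "wrote", "Example Novel"),
    ("Example Novel", "published_in", "1985"),
}


def objects(subject, predicate):
    """All objects reachable from `subject` over one `predicate` edge."""
    return {o for s, p, o in triples if s == subject and p == predicate}


def authors_matching(theme, region, decade_start):
    """Authors with a work on `theme`, set in `region`, published in the decade."""
    matches = set()
    for author, predicate, work in triples:
        if predicate != "wrote":
            continue
        has_theme = theme in objects(work, "has_theme")
        # Two hops: work -> place -> region.
        in_region = any(
            region in objects(place, "part_of")
            for place in objects(work, "takes_place_in")
        )
        in_decade = any(
            decade_start <= int(year) < decade_start + 10
            for year in objects(work, "published_in")
        )
        if has_theme and in_region and in_decade:
            matches.add(author)
    return sorted(matches)


print(authors_matching("social justice", "American South", 1960))
```

No single triple says that Harper Lee wrote about the American South. The answer only appears once the work, its setting, and the region are connected.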

| Entity | Relationship | Related Entity |
|---|---|---|
| Harper Lee | wrote | To Kill a Mockingbird |
| To Kill a Mockingbird | published in | 1960 |
| To Kill a Mockingbird | takes place in | Alabama |
| budget-friendly laptops | semantically equivalent to | affordable computers |
| affordable computers | semantically equivalent to | cheap notebooks |
| James Bond | played by | various actors |
| Barack Obama | family | Michelle Obama, Malia Obama, Sasha Obama |
| movie’s director | directed by | various directors |

The impact of semantic relationships extends to chatbots and virtual assistants as well. By understanding how entities and concepts connect meaningfully, these systems can maintain context across conversations and provide more natural, intelligent responses. When a user asks about a movie’s director and then follows up with ‘What else did they make?’, the system knows who ‘they’ refers to through stored semantic relationships.
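The follow-up question can be sketched with a tiny dialogue context that remembers the last entity the graph returned. The class `DialogueContext`, its methods, and the placeholder names "Director X" and "Movie A" are hypothetical. Real assistants use far richer coreference resolution than a single remembered entity.

```python
class DialogueContext:
    """Tracks the most recently mentioned entity so pronouns can be resolved."""

    def __init__(self, triples):
        self.triples = list(triples)
        self.focus = None  # entity that "they" currently refers to

    def director_of(self, movie):
        directors = [s for s, p, o in self.triples if p == "directed" and o == movie]
        if directors:
            self.focus = directors[0]  # remember the answer for follow-ups
        return directors

    def other_works(self, exclude=None):
        """Answer 'What else did they make?' using the remembered entity."""
        if self.focus is None:
            return []
        return [
            o
            for s, p, o in self.triples
            if s == self.focus and p == "directed" and o != exclude
        ]


# Placeholder data; the names are illustrative, not real films or people.
triples = [
    ("Director X", "directed", "Movie A"),
    ("Director X", "directed", "Movie B"),
    ("Director Y", "directed", "Movie C"),
]

context = DialogueContext(triples)
first = context.director_of("Movie A")
follow_up = context.other_works(exclude="Movie A")
print(first, follow_up)
```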

Knowledge graphs in NLP have undergone a remarkable evolution, spurred by the need to better understand and interpret human language at a deeper level than ever before.

Semantic Matching in NLP Techniques and Implementations

Perhaps most importantly, semantic relationships help address one of the fundamental challenges in natural language processing – ambiguity. Words often have multiple meanings depending on context. Knowledge graphs can disambiguate these meanings by examining the surrounding relationship patterns, much like humans use context clues to understand language in conversation.
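A rough sketch of this idea scores each candidate meaning by how many of its graph neighbors also appear in the sentence. The "jaguar" example, the identifiers `jaguar_animal` and `jaguar_car`, and the neighbor sets are assumptions made up for illustration. Real entity linkers use much stronger signals than word overlap.

```python
import re

# Hypothetical candidates for the surface form "jaguar", each with the
# labels of its neighboring nodes in an imagined knowledge graph.
CANDIDATES = {
    "jaguar": {
        "jaguar_animal": {"rainforest", "predator", "prey", "cat"},
        "jaguar_car": {"engine", "manufacturer", "vehicle"},
    }
}


def disambiguate(mention, sentence):
    """Pick the candidate whose graph neighbors overlap most with the context."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    best, best_score = None, 0
    for candidate, neighbor_labels in CANDIDATES.get(mention, {}).items():
        score = len(neighbor_labels & context)
        if score > best_score:
            best, best_score = candidate, score
    return best  # None when no candidate shares any context word


print(disambiguate("jaguar", "The jaguar prowled through the rainforest hunting prey"))
print(disambiguate("jaguar", "The jaguar has a powerful engine"))
```

Returning `None` when nothing overlaps keeps the sketch from guessing. A caller could then fall back to a default sense or ask for clarification.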

As natural language processing continues to advance, the role of semantic relationships in knowledge graphs becomes increasingly critical. They provide the foundational structure that allows AI systems to not just process language, but to truly understand it in ways that more closely mirror human comprehension.

Challenges in Integrating Knowledge Graphs with NLP

Knowledge graphs and Natural Language Processing (NLP) technologies share an intricate relationship, but their integration isn’t without significant hurdles. Understanding these challenges is crucial for data scientists and developers working to create more robust AI systems that can effectively reason over structured knowledge.

Data heterogeneity stands as one of the primary obstacles when merging knowledge graphs with NLP systems. As recent research highlights, the fundamental difference between structured graph data and unstructured natural language creates a complex impedance mismatch. NLP models expect fluid, contextual text input, while knowledge graphs rely on rigid, precisely defined relationships between entities.
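One common way to narrow this gap is to verbalize triples into sentences before handing them to a text model. The sketch below uses hand-written templates. The template strings and the fallback rule are assumptions, and templated text is only a rough stand-in for natural prose.

```python
# Hypothetical templates mapping each predicate to a sentence pattern.
TEMPLATES = {
    "wrote": "{s} wrote {o}.",
    "published_in": "{s} was published in {o}.",
    "takes_place_in": "{s} takes place in {o}.",
}


def verbalize(triple):
    """Turn one (subject, predicate, object) triple into a plain sentence."""
    subject, predicate, obj = triple
    # Fallback: use the predicate itself, with underscores as spaces.
    template = TEMPLATES.get(predicate, "{s} " + predicate.replace("_", " ") + " {o}.")
    return template.format(s=subject, o=obj)


facts = [
    ("Harper Lee", "wrote", "To Kill a Mockingbird"),
    ("To Kill a Mockingbird", "published_in", "1960"),
    ("To Kill a Mockingbird", "takes_place_in", "Alabama"),
]

# Join the sentences into a passage a text-based model could consume.
passage = " ".join(verbalize(fact) for fact in facts)
print(passage)
```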

Scale presents another formidable challenge. Modern knowledge graphs can contain millions or even billions of entities and relationships. Processing this vast amount of structured data alongside natural language queries requires significant computational resources and sophisticated architectural designs. This becomes particularly evident when attempting to maintain real-time performance in applications that need to traverse complex graph structures while processing natural language input.

Accuracy and Consistency Challenges

Maintaining accuracy when bridging knowledge graphs and NLP systems proves especially demanding. Language models can produce hallucinations or inconsistent outputs, which may corrupt the structured nature of knowledge graphs if not properly managed. The challenge intensifies when dealing with domain-specific knowledge that requires high precision.
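One guard against this kind of corruption is to check every model-proposed triple against a schema before it is written to the graph. The following is a minimal sketch with a hypothetical schema and type table. It catches type errors and unknown predicates, but not statements that are well-typed and still false.

```python
# Hypothetical schema: predicate -> (required subject type, required object type).
SCHEMA = {
    "wrote": ("Person", "Book"),
    "published_in": ("Book", "Year"),
    "takes_place_in": ("Book", "Place"),
}

# Hypothetical type assignments for entities already in the graph.
ENTITY_TYPES = {
    "Harper Lee": "Person",
    "To Kill a Mockingbird": "Book",
    "1960": "Year",
    "Alabama": "Place",
}


def check_triple(triple):
    """Return None if the triple fits the schema, otherwise a short reason."""
    subject, predicate, obj = triple
    if predicate not in SCHEMA:
        return f"unknown predicate: {predicate}"
    subject_type, object_type = SCHEMA[predicate]
    if ENTITY_TYPES.get(subject) != subject_type:
        return f"subject must be a {subject_type}"
    if ENTITY_TYPES.get(obj) != object_type:
        return f"object must be a {object_type}"
    return None


def filter_candidates(candidates):
    """Split candidate triples into accepted ones and (triple, reason) rejects."""
    accepted, rejected = [], []
    for triple in candidates:
        reason = check_triple(triple)
        if reason is None:
            accepted.append(triple)
        else:
            rejected.append((triple, reason))
    return accepted, rejected


# Imagined output of a language model, including two malformed statements.
candidates = [
    ("Harper Lee", "wrote", "To Kill a Mockingbird"),
    ("Alabama", "wrote", "1960"),
    ("Harper Lee", "teleported_to", "Alabama"),
]
accepted, rejected = filter_candidates(candidates)
print(accepted)
print(rejected)
```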

These accuracy concerns become particularly apparent in systems like ERNIE (Enhanced Language RepresentatioN with Informative Entities), which attempts to fuse knowledge graph information with language model capabilities. While such systems show promise, they must carefully balance the preservation of structured knowledge with the flexibility needed for natural language understanding.

The effectiveness of KG and LLM integration is an area that demands further research. Only a marginal amount of knowledge is successfully integrated into well-known knowledge-enhanced Language Models.

Time sensitivity represents another critical challenge. Knowledge graphs need regular updates to remain current, while language models typically operate with static training data. This temporal disconnect can lead to inconsistencies between the structured knowledge and the model’s understanding of recent developments or changes in relationships between entities.
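On the graph side, one way to handle change over time is to attach a validity interval to each fact so that queries can ask what was true on a given date. In this sketch the `TimedFact` class, the company, and the people are all hypothetical placeholders. Intervals are half-open, so a fact stops holding on its `valid_to` date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class TimedFact:
    subject: str
    predicate: str
    obj: str
    valid_from: date
    valid_to: Optional[date] = None  # None means the fact is still valid

    def holds_on(self, day: date) -> bool:
        """True if `day` falls inside the half-open interval [valid_from, valid_to)."""
        return self.valid_from <= day and (self.valid_to is None or day < self.valid_to)


# Hypothetical facts; the company and people are placeholders.
facts = [
    TimedFact("Acme Corp", "has_ceo", "Person A", date(2015, 1, 1), date(2020, 1, 1)),
    TimedFact("Acme Corp", "has_ceo", "Person B", date(2020, 1, 1)),
]


def facts_as_of(subject, predicate, day):
    """Objects of matching facts that were valid on `day`."""
    return [
        f.obj
        for f in facts
        if f.subject == subject and f.predicate == predicate and f.holds_on(day)
    ]


print(facts_as_of("Acme Corp", "has_ceo", date(2018, 6, 1)))
print(facts_as_of("Acme Corp", "has_ceo", date(2024, 1, 1)))
```

Closing an old fact and adding a new one updates the graph without retraining anything, which is the asymmetry with static model weights described above.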

The solution space for these challenges continues to evolve. Researchers are exploring hybrid approaches that leverage the strengths of both technologies while mitigating their respective weaknesses. These include innovative architectures that separate knowledge representation from language understanding, allowing each component to be updated and maintained independently while still working in concert.
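The separation of knowledge from language understanding can be sketched as a store interface plus a prompt builder. The names `KnowledgeStore`, `InMemoryStore`, and `build_prompt` are hypothetical, and no language model is called. The point is only that the store can be swapped or updated without touching the code that prepares text for the model.

```python
from typing import Protocol


class KnowledgeStore(Protocol):
    """Anything that can return plain-text facts about an entity."""

    def facts_about(self, entity: str) -> list[str]: ...


class InMemoryStore:
    """Toy store; a graph database client could expose the same method."""

    def __init__(self, triples):
        self._triples = list(triples)

    def facts_about(self, entity):
        return [
            f"{s} {p.replace('_', ' ')} {o}"
            for s, p, o in self._triples
            if entity in (s, o)
        ]


def build_prompt(question, entities, store: KnowledgeStore):
    """Collect facts for the mentioned entities and place them before the question."""
    facts = []
    for entity in entities:
        for fact in store.facts_about(entity):
            if fact not in facts:  # keep order, skip duplicates
                facts.append(fact)
    lines = ["Facts:"] + [f"- {fact}" for fact in facts] + ["", f"Question: {question}"]
    return "\n".join(lines)


store = InMemoryStore([
    ("Harper Lee", "wrote", "To Kill a Mockingbird"),
    ("To Kill a Mockingbird", "published_in", "1960"),
    ("To Kill a Mockingbird", "takes_place_in", "Alabama"),
])
prompt = build_prompt(
    "When was To Kill a Mockingbird published?",
    ["To Kill a Mockingbird"],
    store,
)
print(prompt)
```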

Best Practices for Building Knowledge Graphs for NLP

Building effective knowledge graphs for natural language processing requires methodical planning and rigorous execution. Following established best practices can significantly improve your results and help you avoid common pitfalls.

Data quality stands as the cornerstone of any successful knowledge graph implementation. According to ONTOFORCE research, adhering to FAIR principles (Findability, Accessibility, Interoperability, and Reusability) is essential for ensuring your knowledge graph remains valuable and maintainable over time. This means carefully validating data sources, standardizing formats, and implementing robust quality control measures from the start.

When selecting ontologies, resist the temptation to create custom frameworks without first examining established standards. Industry-standard ontologies not only save development time but also ensure better interoperability with existing systems. For instance, in the life sciences domain, switching from UMLS to Mondo proved crucial for avoiding drug-related synonym overlaps that could compromise machine learning analysis.

Data Integration and Validation Strategies

Effective knowledge graph construction demands a systematic approach to data integration. Start with a clearly defined scope and gradually expand rather than attempting to incorporate all available data at once. This measured approach helps maintain quality while preventing the introduction of noise that could compromise your NLP applications.

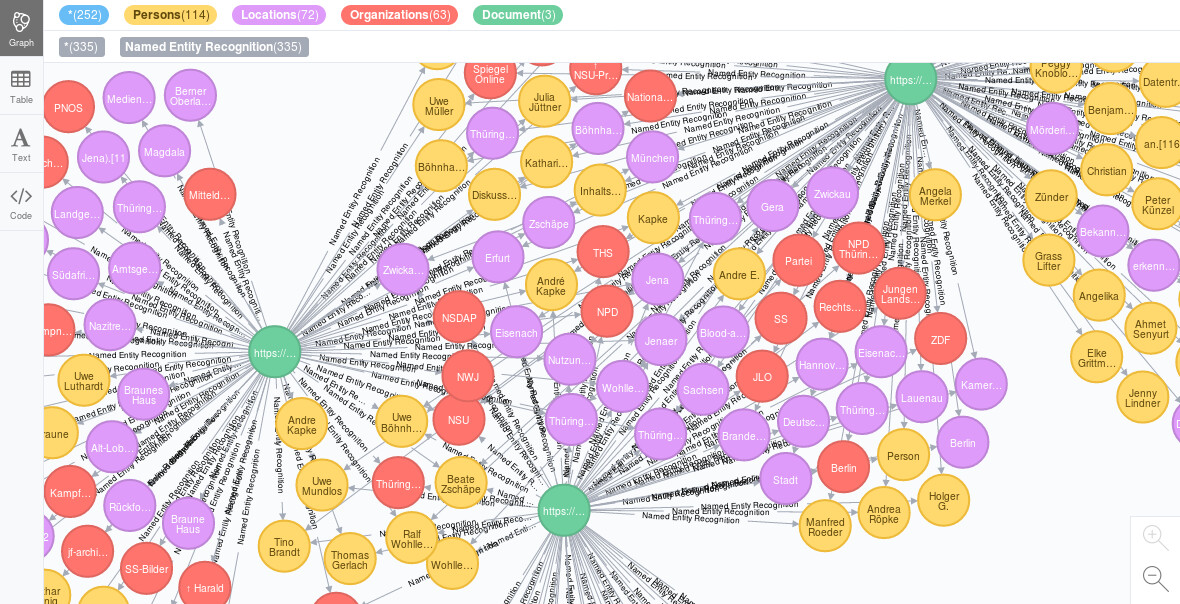

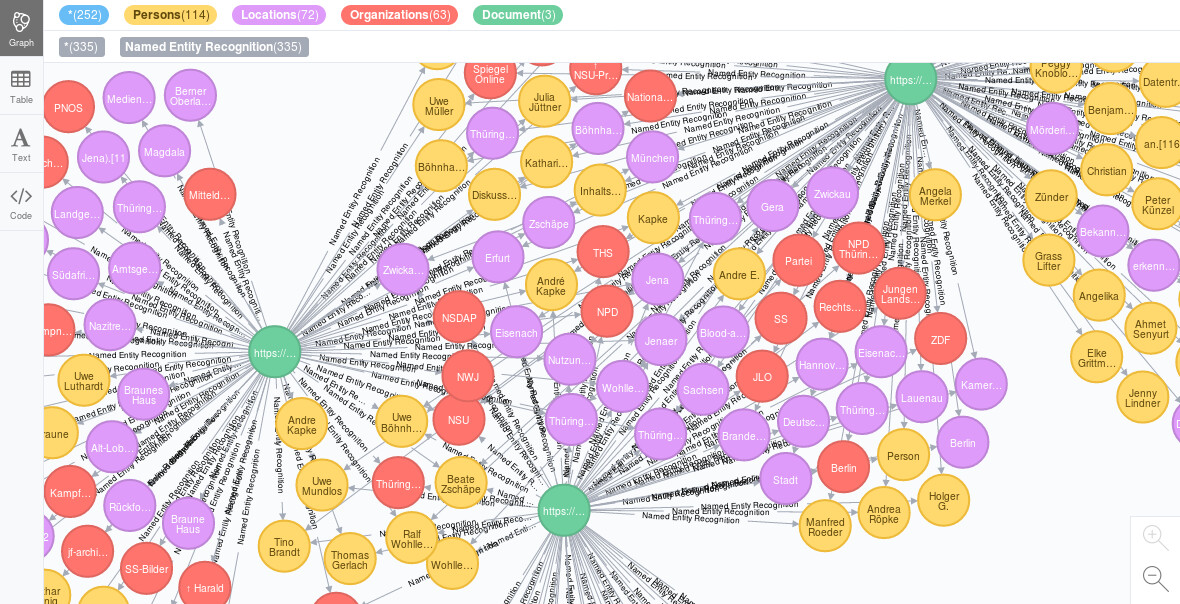

Implement thorough validation processes at multiple stages – during data ingestion, relationship mapping, and after any significant updates. Automated testing should check for logical consistency, relationship validity, and adherence to your chosen ontological framework. Consider implementing visualization tools to help spot anomalies and potential errors in your graph structure.
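Automated checks of this kind can start small. The sketch below flags two kinds of problems: edges that mention entities missing from the known set, and predicates meant to have a single value that have several. The function `find_issues` and the idea of a "functional predicate" list are assumptions for illustration. The conflicting 1961 date is deliberately wrong test data.

```python
from collections import defaultdict


def find_issues(triples, known_entities, functional_predicates):
    """Return human-readable descriptions of consistency problems."""
    issues = []
    values = defaultdict(set)
    for subject, predicate, obj in triples:
        if subject not in known_entities:
            issues.append(f"unknown subject: {subject}")
        if obj not in known_entities:
            issues.append(f"unknown object: {obj}")
        values[(subject, predicate)].add(obj)
    # A functional predicate may point to at most one object per subject.
    for (subject, predicate), objs in sorted(values.items()):
        if predicate in functional_predicates and len(objs) > 1:
            issues.append(f"{subject} has {len(objs)} values for {predicate}")
    return issues


known = {"Harper Lee", "To Kill a Mockingbird", "1960", "Alabama"}
triples = [
    ("Harper Lee", "wrote", "To Kill a Mockingbird"),
    ("To Kill a Mockingbird", "published_in", "1960"),
    ("To Kill a Mockingbird", "published_in", "1961"),  # deliberate error
]

for issue in find_issues(triples, known, functional_predicates={"published_in"}):
    print(issue)
```

Running a check like this at ingestion time and again after bulk updates matches the multi-stage validation described above.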

Quality control capabilities that allow you to quickly identify and respond to changes or errors in your data are essential. This is where visualization tools really help – they can reveal outliers and inconsistencies that might be missed in raw data.

ONTOFORCE VP of Engineering

Regular maintenance and updates are crucial for keeping your knowledge graph relevant and accurate. Establish clear protocols for adding new entities and relationships, and ensure proper documentation of any changes to maintain consistency across your development team.

| Task | Description |
|---|---|
| Define Data Quality Standards | Clearly define the standards and criteria for high-quality data relevant to the organization’s goals, including data accuracy, completeness, and consistency. |
| Establish Validation Rules | Set up validation rules to enforce data quality by ensuring data meets predefined standards and criteria. |
| Collect and Organize Datasets | Gather and organize datasets to facilitate easier validation and quality checks. |
| Verify Data Against Rules | Check data against the defined validation rules to identify errors or inconsistencies. |
| Identify and Address Errors | Identify errors or inconsistencies in data and take steps to correct them. |
| Conduct Regular Audits | Perform regular audits to ensure ongoing data quality and compliance with standards. |
| Implement Data Cleansing | Regularly clean data to remove errors, duplicates, and outdated information. |
| Train Staff | Provide training to staff on data quality standards and validation techniques. |
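The data cleansing task can be illustrated with a small deduplication pass. This sketch assumes that two triples are duplicates when they differ only in letter case or whitespace, which is a deliberately narrow rule. Merging genuinely different spellings or aliases of the same entity needs a proper entity resolution step.

```python
def normalize(label):
    """Collapse whitespace and ignore case for comparison purposes only."""
    return " ".join(label.split()).casefold()


def deduplicate(triples):
    """Keep the first occurrence of each triple, comparing normalized labels."""
    seen = set()
    unique = []
    for subject, predicate, obj in triples:
        key = (normalize(subject), normalize(predicate), normalize(obj))
        if key not in seen:
            seen.add(key)
            # Store a whitespace-cleaned copy but keep the original casing.
            unique.append((" ".join(subject.split()), predicate.strip(), " ".join(obj.split())))
    return unique


raw = [
    ("Harper Lee", "wrote", "To Kill a Mockingbird"),
    ("harper  lee ", "wrote", "to kill a mockingbird"),  # same fact, messy spelling
    ("To Kill a Mockingbird", "published_in", "1960"),
]
cleaned = deduplicate(raw)
print(cleaned)
```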

Case Studies: Successful Knowledge Graph Implementations

Leading enterprises have witnessed remarkable improvements in their Natural Language Processing (NLP) capabilities through strategic knowledge graph implementations. These real-world applications demonstrate how organizations are leveraging graph technologies to enhance language understanding and generate more accurate insights.

AP-HP Healthcare’s implementation showcases how knowledge graphs can transform patient data analysis. By structuring complex medical information into interconnected nodes and relationships, their NLP systems gained the ability to interpret clinical notes with higher accuracy, leading to improved diagnosis suggestions and treatment recommendations.

Deutsche Bank presents another compelling case study in regulatory compliance. Their knowledge graph implementation enables real-time analysis of customer and transaction data, significantly reducing false positives in sanctions screening. The system’s enhanced NLP capabilities allow it to process complex queries across multiple documents, providing rapid insights for compliance officers.

At eBay, knowledge graphs power sophisticated fraud detection systems. The e-commerce giant’s implementation connects user behavior patterns and transaction histories in a graph structure, enabling their NLP models to identify subtle indicators of fraudulent activity. This approach has notably improved the platform’s ability to detect and prevent fraud through natural language analysis of user communications and listing descriptions.

TomTom’s implementation demonstrates how knowledge graphs can enhance geospatial NLP applications. By representing location data in a graph format, their system processes natural language queries about routes and points of interest with greater accuracy. The graph structure allows their NLP models to understand complex spatial relationships and provide more precise navigation instructions.

Knowledge graphs can turn data from a ‘problem to be managed’ into data as a ‘resource to be exploited’ – they provide a framework for data integration, unification, analytics and sharing.

These implementations highlight a crucial pattern: knowledge graphs excel at providing context and relationships that traditional databases cannot capture. By organizing information in a more natural, interconnected way, they enable NLP systems to better understand and process human language, leading to more accurate and contextually aware applications.

Leveraging SmythOS for Knowledge Graph Development

Building robust knowledge graphs demands sophisticated tools and seamless workflow integration—challenges that SmythOS directly addresses through its comprehensive visual development environment. The platform transforms complex knowledge graph creation into an intuitive process through its visual workflow builder, enabling both technical and non-technical teams to construct sophisticated knowledge representations.

At the core of SmythOS’s capabilities lies its debugging environment. The platform’s built-in debugger allows developers to examine knowledge graph workflows in real time, providing visibility into data flows, relationship mappings, and potential issues. This visual debugging approach can shorten the development cycle while helping keep the knowledge graph accurate and reliable.

Integration capabilities set SmythOS apart in the enterprise space. The platform seamlessly connects with major graph databases and semantic technologies, allowing organizations to leverage their existing data investments while building more sophisticated knowledge representations. As noted on G2, ‘SmythOS truly excels in automating chores; its true strength lies in seamlessly connecting with all of your favorite tools.’

Enterprise-grade security features are woven throughout the SmythOS platform, addressing a critical requirement for organizations working with sensitive knowledge bases. The platform implements robust access controls, data encryption, and security monitoring to protect valuable organizational knowledge while still enabling appropriate sharing and collaboration.

For teams new to knowledge graph development, SmythOS offers a unique advantage through its free runtime environment. This allows organizations to prototype and test knowledge graph integrations without significant upfront investment, reducing the barriers to adoption while maintaining professional-grade capabilities.

SmythOS slashes AI agent development time from weeks to minutes, while cutting infrastructure costs by 70%. It’s not just faster – it’s smarter.

Alexander De Ridder, CTO of SmythOS

Future Trends in Knowledge Graphs and NLP

The integration of knowledge graphs and natural language processing is entering an exciting new phase, propelled by significant advances in artificial intelligence. A decade of research and development has laid the groundwork for what promises to be a transformative convergence between these two critical technologies. Recent studies highlight how this fusion is experiencing rapid spread and wide adoption within academia and industry, particularly in applications requiring sophisticated knowledge representation.

The emerging landscape reveals several promising trends. Large language models are increasingly being augmented with structured knowledge from graphs, enabling more accurate and contextual understanding of complex information. This symbiosis addresses historical limitations of both technologies—the rigidity of traditional knowledge graphs and the occasional imprecision of standalone NLP systems.

Particularly noteworthy is the rise of hybrid architectures that combine the semantic precision of knowledge graphs with the flexible reasoning capabilities of advanced NLP models. These systems demonstrate unprecedented abilities in tasks requiring both broad knowledge access and nuanced language understanding, such as answering complex queries and generating accurate, context-aware responses.

Looking ahead, several developments appear on the horizon. More sophisticated frameworks for automatic knowledge graph construction and maintenance through advanced NLP techniques are likely. Improvements in graph neural networks and attention mechanisms promise to enhance how systems traverse and utilize structured knowledge. Additionally, the integration of multimodal data—text, images, and other formats—within knowledge graphs points toward more comprehensive and versatile information systems.

As these technologies continue to mature, their impact on real-world applications grows increasingly significant. From enhancing search engines and recommendation systems to powering more intelligent virtual assistants, the convergence of knowledge graphs and NLP is reshaping how we interact with and derive value from information. This evolution represents not just a technical advancement, but a fundamental shift in how machines understand and process human knowledge.
