Agent Architectures and Reasoning: Building Smarter, More Adaptive AI

Imagine autonomous AI agents as highly skilled workers, each equipped with unique capabilities to tackle complex tasks. These digital professionals don’t just follow simple instructions; they think critically, plan methodically, and execute actions with precision through sophisticated architectures that mirror human decision-making processes.

At the heart of modern AI development lies a fascinating evolution: the shift from basic chatbots to intelligent agents capable of rich reasoning and strategic planning. Whether operating solo or as part of a coordinated team, these agents represent the next frontier in artificial intelligence, pushing boundaries in how machines can understand and solve real-world challenges.

We’ll dissect how different agent architectures—from streamlined single-agent systems to intricate multi-agent networks—leverage advanced reasoning capabilities to complete complex tasks. You’ll discover how these systems plan their actions, make informed decisions, and utilize specialized tools to achieve their goals.

Recent research suggests that agent architectures improve AI applications by adding planning, iteration, and reflection, capabilities that go well beyond simple prompt-response interactions. Through this lens, we’ll examine how these architectures support a greater degree of autonomous problem-solving.

We’ll unravel the intricate world of AI agent architectures and their reasoning systems—from understanding their fundamental building blocks to exploring how they’re reshaping the future of artificial intelligence.

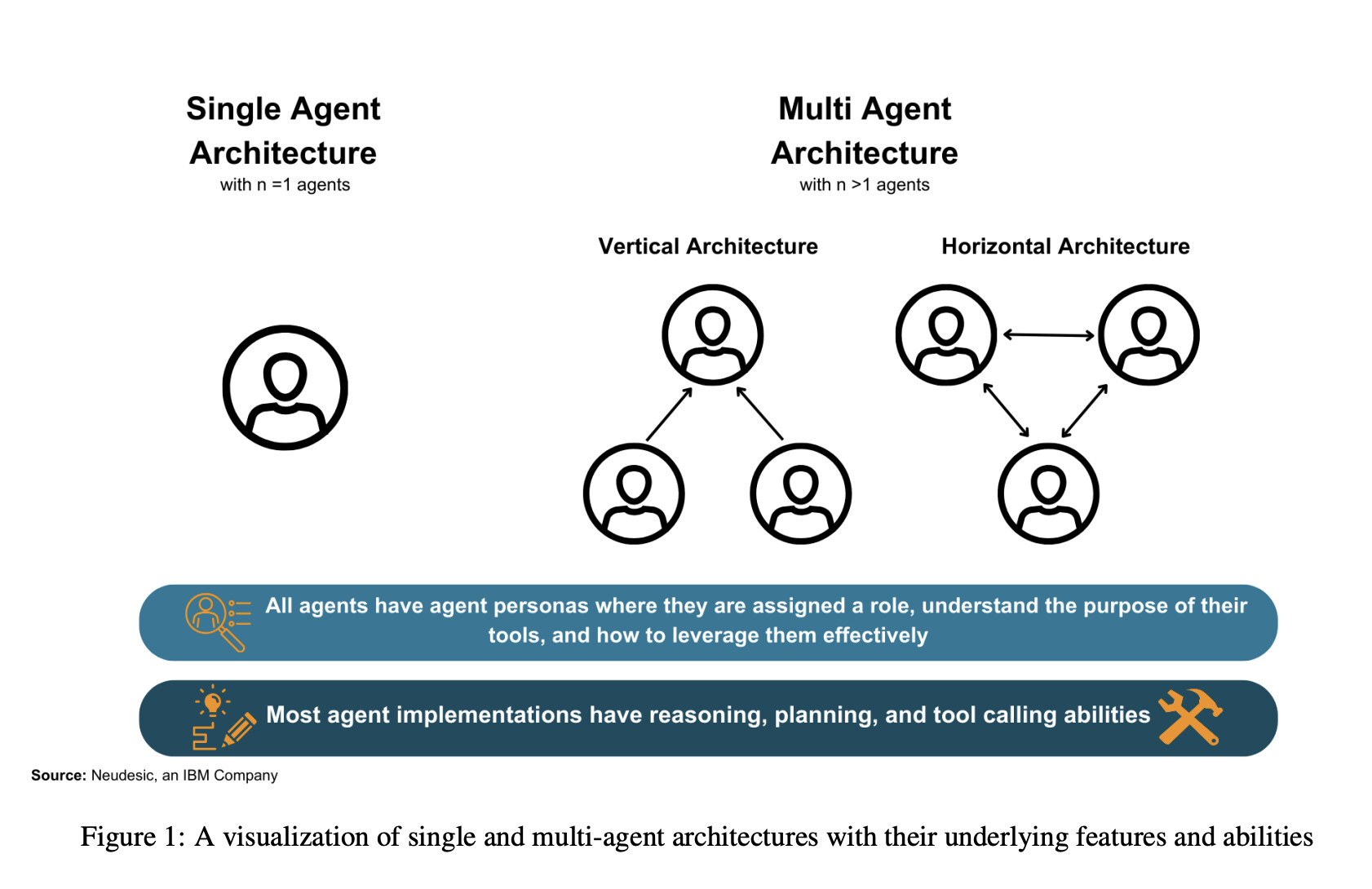

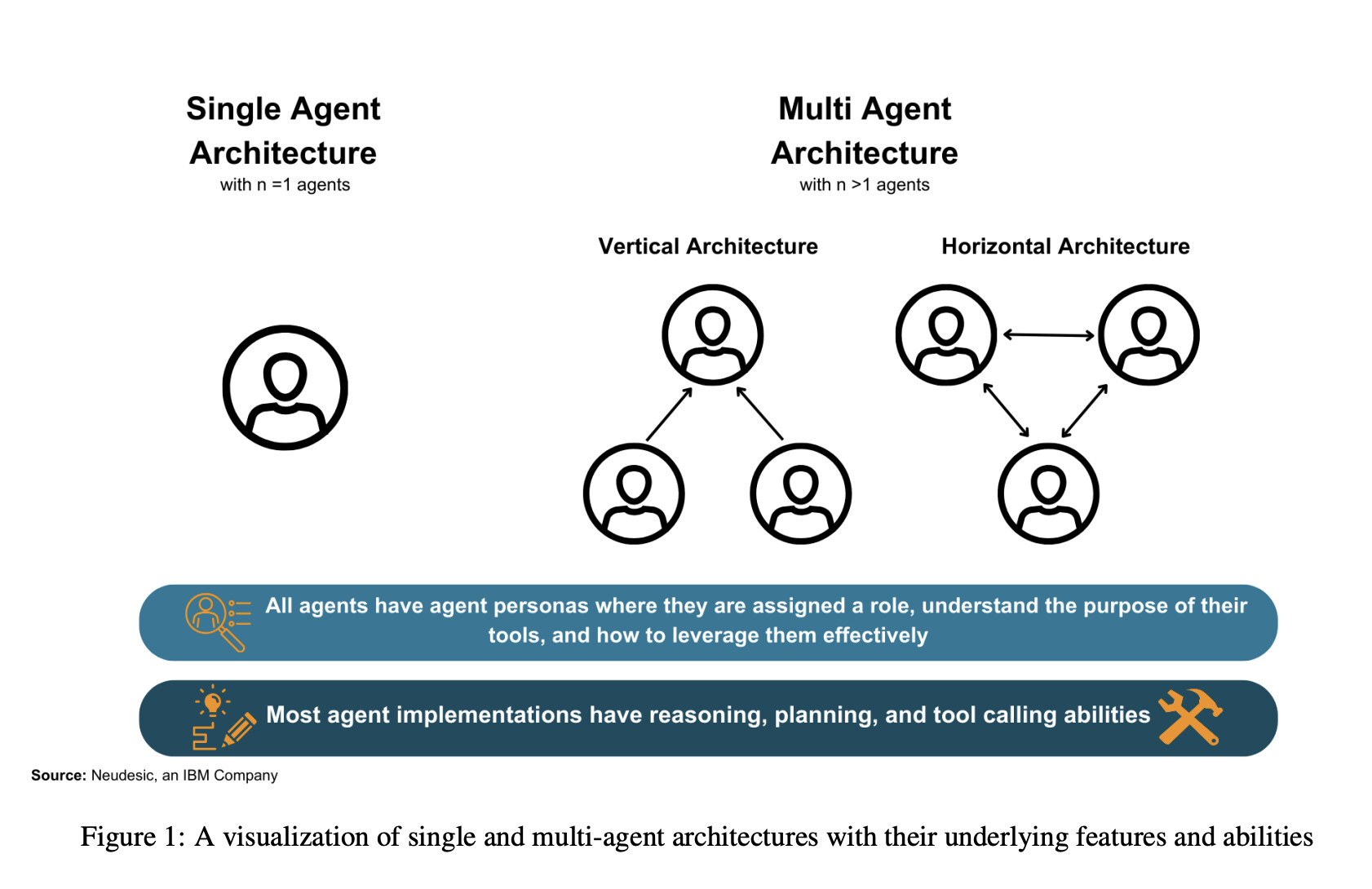

Single-Agent vs. Multi-Agent Architectures

Understanding the distinction between single-agent and multi-agent architectures is crucial for developers building autonomous systems. Each approach offers unique advantages and faces specific challenges in handling complex tasks.

Single-agent architectures rely on one language model to handle all operations independently. These systems excel at straightforward, well-defined tasks where processes have clear boundaries. According to recent research, single-agent systems demonstrate particular strength when feedback from other agents isn’t necessary, and tasks require focused execution with a specific set of tools.

However, single-agent systems face notable limitations. Without external feedback mechanisms, they may become trapped in execution loops, repeatedly attempting the same approach without progress. They also struggle with complex sequences of subtasks, particularly when multiple operations need to occur simultaneously.
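One common mitigation for these execution loops is a hard step budget combined with a check for repeated actions. The sketch below is a minimal illustration under that assumption; `propose_action` is a hypothetical stand-in for the language model and is not tied to any particular framework.

```python
from typing import Callable, List, Optional, Tuple


def run_with_loop_guard(
    propose_action: Callable[[List[str]], Optional[str]],
    max_steps: int = 10,
    max_repeats: int = 2,
) -> Tuple[List[str], str]:
    """Run a single agent until it finishes, repeats itself, or exhausts its budget.

    propose_action (hypothetical) receives the actions taken so far and returns
    the next action, or None when the agent considers the task complete.
    """
    history: List[str] = []
    for _ in range(max_steps):
        action = propose_action(history)
        if action is None:
            return history, "done"
        # Stop if this exact action has already been tried max_repeats times.
        if history.count(action) >= max_repeats:
            return history, "stuck: repeated action"
        history.append(action)
    return history, "stopped: step budget exhausted"


# An agent that keeps issuing the same call is halted after two identical attempts.
hist, status = run_with_loop_guard(lambda h: "search('weather')")
```

Returning a status string rather than raising lets the surrounding system decide what to do next, for example escalating to a human or handing the task to a different agent.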

Multi-agent architectures, in contrast, distribute responsibilities across several specialized agents working in concert. This approach shines when tasks require parallel processing or benefit from diverse perspectives. Each agent can operate independently on subtasks while contributing to the overall goal, enabling more dynamic and flexible problem-solving approaches.

The advantage of multi-agent systems is most evident in their ability to parallelize work. A single agent generally handles operations one after another, whereas a multi-agent system can run independent subtasks in parallel, with each agent managing its own responsibilities without waiting for the others to finish.
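A minimal way to picture this is a coordinator that launches several specialist agents concurrently and waits for all of them. In this sketch the specialists are hypothetical coroutines and `asyncio.sleep` merely simulates model or tool latency; a real system would call a model or API inside each one.

```python
import asyncio
from typing import Dict, List


async def specialist(name: str, subtask: str, seconds: float) -> str:
    """Hypothetical specialist agent; the sleep stands in for model/tool latency."""
    await asyncio.sleep(seconds)
    return f"{name} finished {subtask}"


async def coordinator(assignments: Dict[str, str]) -> List[str]:
    # Start every specialist at once; gather waits for all of them and
    # returns their results in the order the coroutines were passed in.
    jobs = [specialist(name, task, 0.1) for name, task in assignments.items()]
    return list(await asyncio.gather(*jobs))


if __name__ == "__main__":
    results = asyncio.run(coordinator({
        "researcher": "collect sources",
        "analyst": "compute statistics",
        "writer": "draft outline",
    }))
    print(results)
```

Because the three simulated delays overlap, the whole run takes roughly as long as the slowest specialist rather than the sum of all three. This only helps when the subtasks are genuinely independent; subtasks that depend on each other’s output still have to be sequenced.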

Dividing complex tasks among specialized agents can also improve efficiency and productivity. Because each agent focuses on its area of expertise, the system can often produce results faster and more accurately than a single generalist agent.

| Feature | Single-Agent Architecture | Multi-Agent Architecture |
|---|---|---|
| Number of Agents | One | Multiple |
| Task Execution | Sequential | Parallel (for independent subtasks) |
| Feedback Mechanism | No feedback from other agents | Built-in feedback loops between agents |
| Strengths | Simple, well-defined tasks | Complex, parallelizable tasks |
| Weaknesses | May get stuck in execution loops | Requires careful management to avoid unproductive discussions |

Feedback mechanisms represent another crucial difference between these architectures. Multi-agent systems benefit from built-in feedback loops, where agents can critique and refine each other’s work. This collaborative approach often leads to more robust solutions, though it requires careful management to prevent agents from becoming caught in unproductive discussions or incorporating invalid feedback.
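A simple form of this feedback loop pairs a drafting agent with a critic and caps the number of rounds so the exchange cannot continue indefinitely. The sketch below assumes two hypothetical callables, `draft` and `critique`, standing in for separate model-backed agents; the toy versions included only illustrate the control flow.

```python
from typing import Callable, Optional, Tuple


def refine(
    task: str,
    draft: Callable[[str, Optional[str]], str],
    critique: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Tuple[str, bool, int]:
    """Alternate drafting and critiquing until the critic approves or rounds run out.

    critique returns None to approve, or a feedback string requesting changes.
    Returns (final answer, whether it was approved, rounds used).
    """
    feedback: Optional[str] = None
    answer = ""
    for round_no in range(1, max_rounds + 1):
        answer = draft(task, feedback)
        feedback = critique(answer)
        if feedback is None:
            return answer, True, round_no
    # Round cap reached: stop the discussion and report the unapproved draft.
    return answer, False, max_rounds


# Toy agents (hypothetical): the critic insists that units are stated.
def toy_draft(task: str, feedback: Optional[str]) -> str:
    return task if feedback is None else task + " (with units)"


def toy_critique(answer: str) -> Optional[str]:
    return None if "units" in answer else "Please state the units."
```

With these toy agents, `refine("Speed: 42", toy_draft, toy_critique)` is approved on the second round. Returning the approval flag separately means a capped, unapproved answer is never silently treated as a reviewed one.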

The choice between single-agent and multi-agent architectures ultimately depends on the specific requirements of your project. Single-agent systems offer simplicity and directness for well-defined tasks, while multi-agent systems provide enhanced flexibility and collaborative problem-solving capabilities for more complex challenges.

Role of Reasoning in Agent Architectures

AI agents need robust reasoning capabilities to tackle novel challenges, much like humans think critically to handle new situations. These abilities help agents move beyond their initial training and make intelligent decisions when faced with unfamiliar circumstances.

In single-agent architectures, reasoning powers an agent’s decision-making process. When encountering a new situation, the agent analyzes the current state, evaluates potential actions, and determines the most logical path forward. For example, an autonomous reasoning engine might help a delivery robot find an alternative route when its usual path is blocked by unexpected construction work.
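At its core, the delivery-robot example is replanning around an obstacle. A classical breadth-first search over a grid, used here as a deliberately simple stand-in for whatever planner a real robot would run, shows the idea: mark the blocked cell and search again. The grid size and coordinates are illustrative assumptions.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def shortest_route(width: int, height: int, start: Cell, goal: Cell,
                   blocked: Set[Cell]) -> Optional[List[Cell]]:
    """Breadth-first search on a 4-connected grid; returns a shortest path or None."""
    frontier = deque([start])
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    while frontier:
        current = frontier.popleft()
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path: List[Cell] = []
            node: Optional[Cell] = current
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        x, y = current
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            nx, ny = nxt
            if (0 <= nx < width and 0 <= ny < height
                    and nxt not in blocked and nxt not in came_from):
                came_from[nxt] = current
                frontier.append(nxt)
    return None  # goal unreachable


usual = shortest_route(3, 3, (0, 1), (2, 1), blocked=set())
detour = shortest_route(3, 3, (0, 1), (2, 1), blocked={(1, 1)})  # construction at (1, 1)
```

The usual route is the straight three-cell line; with `(1, 1)` blocked, the search returns a five-cell detour around it. If every crossing is blocked the function returns `None`, which is the signal for the agent to escalate rather than keep retrying.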

Reasoning becomes even more fascinating in multi-agent setups, where multiple AI agents collaborate to solve complex problems. Here, agents need to reason about their own actions and consider the intentions and potential moves of other agents. This collaborative reasoning enables effective coordination, much like a team of emergency responders working together during a crisis.

Critical thinking manifests in several key ways within agent architectures. First, agents engage in means-ends reasoning, analyzing what actions they need to take to achieve their goals. Second, they employ causal reasoning to understand how their actions might affect the environment and other agents. Finally, they utilize adaptive reasoning to modify their approaches based on new information and changing circumstances.

The practical impact of reasoning in agent architectures extends beyond simple task completion. Agents with strong reasoning capabilities can explain their decision-making process, adapt to unexpected situations, and identify potential problems before they arise. This makes them more reliable and trustworthy partners in complex real-world applications.

The reasoning processes involved here are distinct from post hoc rationalizations and have a very real impact on countless intuitive judgments in concrete situations.

Frontiers in Integrative Neuroscience

Modern agent architectures continue to evolve, incorporating increasingly sophisticated reasoning methods. These advances are pushing the boundaries of what’s possible in artificial intelligence, producing agents that do more than follow pre-programmed rules and can work through complex challenges in ways that more closely resemble human reasoning.

Planning Techniques for Autonomous Agents

The ability to effectively plan and execute complex tasks is fundamental for autonomous agents operating in real-world environments. Modern planning approaches leverage sophisticated techniques that enable agents to break down complex problems and adapt to changing scenarios.

Task decomposition stands as a cornerstone technique in agent planning. Rather than attempting to solve complex problems in one step, agents break down tasks into manageable sub-tasks. For example, when an agent needs to “prepare coffee”, it decomposes this into discrete steps like locating the coffee maker, adding water, measuring coffee grounds, and initiating the brewing process. This approach significantly reduces complexity while improving success rates in task completion.
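The coffee example can be expressed as recursive decomposition: compound tasks expand into ordered sub-tasks until only primitive, directly executable steps remain. In this sketch the decomposition rules are a hand-written, hypothetical table; in an LLM-based agent the model would typically propose them.

```python
from typing import Dict, List

# Hypothetical decomposition rules: each compound task maps to ordered sub-tasks.
METHODS: Dict[str, List[str]] = {
    "prepare coffee": ["locate coffee maker", "add water",
                       "measure coffee grounds", "initiate brewing"],
    "add water": ["fill carafe", "pour into reservoir"],
}


def decompose(task: str, methods: Dict[str, List[str]] = METHODS) -> List[str]:
    """Recursively expand a task into primitive steps (tasks with no further rule)."""
    if task not in methods:
        return [task]  # primitive step: execute directly
    steps: List[str] = []
    for sub in methods[task]:
        steps.extend(decompose(sub, methods))
    return steps


steps = decompose("prepare coffee")
# ['locate coffee maker', 'fill carafe', 'pour into reservoir',
#  'measure coffee grounds', 'initiate brewing']
```

Keeping the rules as data rather than code makes each level of the hierarchy easy to inspect, and a failed step can be traced back to the compound task it came from.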

Multi-plan selection represents another powerful planning strategy that enhances agent capabilities. Instead of committing to a single course of action, agents generate multiple potential plans and evaluate them based on various criteria. As noted in recent research by Wang et al. (2023), this approach allows agents to explore different solutions and select the most promising one based on factors like efficiency, resource usage, and likelihood of success.
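One simple way to implement the selection step is a weighted score over the criteria named above. The weighted sum, its default weights, and the example plans below are assumptions made for illustration; they are not the scoring method from Wang et al. (2023).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Plan:
    name: str
    est_minutes: float  # efficiency: lower is better
    cost: float         # resource usage: lower is better
    p_success: float    # estimated likelihood of success, 0..1


def select_plan(plans: List[Plan], w_time: float = 0.3, w_cost: float = 0.2,
                w_success: float = 0.5) -> Plan:
    """Pick the candidate with the highest weighted score.

    Time and cost are normalized by the largest value among the candidates
    and treated as penalties; success probability is treated as a reward.
    """
    max_t = max(p.est_minutes for p in plans) or 1.0  # avoid division by zero
    max_c = max(p.cost for p in plans) or 1.0

    def score(p: Plan) -> float:
        return (w_success * p.p_success
                - w_time * p.est_minutes / max_t
                - w_cost * p.cost / max_c)

    return max(plans, key=score)


candidates = [
    Plan("use API", est_minutes=2, cost=1.0, p_success=0.9),
    Plan("scrape website", est_minutes=5, cost=0.2, p_success=0.6),
    Plan("ask human", est_minutes=30, cost=0.0, p_success=0.99),
]
best = select_plan(candidates)
```

With the default weights the API plan wins; setting `w_time=0` and `w_cost=0` makes the agent prefer the most reliable plan ("ask human"). The weights encode priorities, so they are a design decision rather than something the agent can infer on its own.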

Memory-augmented planning takes agent capabilities to the next level by incorporating both short-term and long-term memory components. This technique allows agents to learn from past experiences and apply that knowledge to new situations. For instance, if an agent previously encountered difficulties navigating a particular area, it can reference this information when planning future routes through similar spaces.
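The navigation example can be sketched with a toy two-tier memory: a bounded short-term buffer of recent observations and a long-term tally of areas that caused trouble, which the planner consults when choosing a route. The class, route names, and tie-breaking rule are hypothetical.

```python
from collections import Counter, deque
from typing import Deque, Dict, List, Tuple


class AgentMemory:
    """Toy two-tier memory for a planning agent.

    short_term: bounded buffer of recent observations (e.g. for the next prompt).
    long_term:  running tally of areas where the agent previously had difficulty.
    """

    def __init__(self, short_term_size: int = 5) -> None:
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        self.long_term: Counter = Counter()

    def record(self, area: str, ok: bool) -> None:
        self.short_term.append(f"{area}: {'ok' if ok else 'difficulty'}")
        if not ok:
            self.long_term[area] += 1


def plan_route(routes: Dict[str, List[str]], memory: AgentMemory) -> str:
    """Choose the route with the fewest remembered difficulties, then the shortest."""

    def risk(name: str) -> Tuple[int, int]:
        areas = routes[name]
        return (sum(memory.long_term[a] for a in areas), len(areas))

    return min(routes, key=risk)


ROUTES = {
    "via loading dock": ["hall A", "loading dock"],
    "via atrium": ["hall A", "atrium", "hall B"],
}
```

With an empty memory the shorter loading-dock route is chosen; after `record("loading dock", ok=False)`, the planner switches to the atrium route even though it is longer. Only the long-term tally influences planning here; the short-term buffer is kept for context and discards older entries automatically.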

The effectiveness of these planning techniques relies heavily on the agent’s ability to understand and adapt to environmental feedback. When executing decomposed tasks, agents continuously monitor progress and adjust their plans based on real-time results. This adaptive approach helps maintain progress even when unexpected obstacles arise.
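This monitor-and-adjust cycle can be sketched as an executor that runs steps in order and, when one fails, requests a revised plan for the remaining work. `run_step` and `replan` are hypothetical callables; in practice the first would invoke tools and the second would prompt the model with the failure.

```python
from typing import Callable, List


def execute_with_replanning(
    plan: List[str],
    run_step: Callable[[str], bool],
    replan: Callable[[str, List[str]], List[str]],
    max_replans: int = 2,
) -> List[str]:
    """Execute steps in order; on failure, ask for a revised plan for the remainder."""
    completed: List[str] = []
    queue = list(plan)
    replans = 0
    while queue:
        step = queue.pop(0)
        if run_step(step):  # monitor the real-time result of each step
            completed.append(step)
            continue
        if replans >= max_replans:
            raise RuntimeError(f"giving up after {replans} replans at step {step!r}")
        replans += 1
        queue = replan(step, queue)  # new plan for the failed step plus what remains
    return completed


# Example: the main corridor turns out to be blocked, so the agent reroutes.
blocked = {"take main corridor"}
done = execute_with_replanning(
    ["pick up parcel", "take main corridor", "deliver parcel"],
    run_step=lambda s: s not in blocked,
    replan=lambda failed, remaining: ["take side corridor"] + remaining,
)
```

The replan budget matters: without it, a `replan` function that keeps proposing the same failing step would loop forever.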

Contemporary planning systems often combine multiple techniques to achieve optimal results. For example, an agent might use task decomposition to break down a complex goal, generate multiple potential plans for each sub-task, and leverage memory-augmented planning to inform its decisions based on prior experiences. This integrated approach significantly improves the agent’s ability to handle complex, real-world scenarios.

Memory plays a crucial role in planning, acting like a transient store that can be used to persist elements of a plan. Memory-augmented planning shows particular promise in improving agent performance across diverse tasks.

Zhang et al. (2023)

The development of these planning techniques continues to evolve, with researchers exploring new ways to enhance agent decision-making capabilities. Recent advances in large language models and neural architectures have opened up possibilities for more sophisticated planning strategies that can handle increasingly complex tasks while maintaining reliability and efficiency.

As autonomous agents become more prevalent in real-world applications, the importance of robust planning techniques grows. Whether operating in manufacturing environments, navigating urban spaces, or assisting in healthcare settings, agents must reliably plan and execute tasks while adapting to dynamic conditions and unforeseen challenges.

Tool Calling and External Integration

Large language models gain remarkable new capabilities when equipped with tool calling—the ability to interact with external APIs, data sources, and services to accomplish real-world tasks. This integration transforms AI agents from passive chatbots into dynamic problem solvers that can take meaningful actions.

Tool calling enables agents to break free from the constraints of their training data and access up-to-date information. For example, rather than relying on potentially outdated knowledge, an agent can query external APIs to retrieve current weather data, stock prices, or news headlines. Research has shown that agents with well-implemented tool calling demonstrate significantly improved performance on real-world tasks compared to standalone models.

The implementation of tool calling varies across different agent architectures. Single-agent systems typically handle tool execution sequentially, carefully planning each API call and processing the results before moving forward. Multi-agent systems can parallelize tool calling across specialized agents—for instance, having one agent focused on database queries while another handles external API requests.

Effective tool calling requires thoughtful design of the tool interfaces themselves. Each tool needs clear documentation of its parameters, expected outputs, and potential error cases. The agent must be able to reason about when and how to use each tool appropriately. Some frameworks implement this through structured schemas that define the tools’ capabilities and requirements.
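A minimal sketch of such a schema, written in a JSON-Schema style similar in spirit to what many LLM frameworks use, is shown below, together with a dispatcher that validates a model-proposed call before running it. The exact field names, the `TOOLS` registry, and the `get_weather` tool (which returns a fixed placeholder value instead of calling a real weather API) are all assumptions for illustration.

```python
import json
from typing import Any, Dict


def get_weather(city: str, unit: str = "celsius") -> Dict[str, Any]:
    """Hypothetical tool; a real version would query a weather API."""
    return {"city": city, "temperature": 21, "unit": unit}


# Tool descriptions the agent can reason over (field names are an assumption).
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_weather": {
        "description": "Current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
        "fn": get_weather,
    },
}

_PY_TYPES = {"string": str, "number": (int, float), "integer": int, "boolean": bool}


def call_tool(name: str, arguments_json: str) -> Dict[str, Any]:
    """Validate a proposed call against its schema, then run it.

    Errors are returned as data so the agent can read them and try again.
    """
    if name not in TOOLS:
        return {"error": f"unknown tool {name!r}"}
    spec = TOOLS[name]
    schema = spec["parameters"]
    try:
        args = json.loads(arguments_json)
    except json.JSONDecodeError as exc:
        return {"error": f"arguments are not valid JSON: {exc}"}
    if not isinstance(args, dict):
        return {"error": "arguments must be a JSON object"}
    for req in schema["required"]:
        if req not in args:
            return {"error": f"missing required argument {req!r}"}
    for key, value in args.items():
        prop = schema["properties"].get(key)
        if prop is None:
            return {"error": f"unexpected argument {key!r}"}
        if not isinstance(value, _PY_TYPES[prop["type"]]):
            return {"error": f"argument {key!r} should be {prop['type']}"}
        if "enum" in prop and value not in prop["enum"]:
            return {"error": f"argument {key!r} must be one of {prop['enum']}"}
    return {"result": spec["fn"](**args)}
```

For example, `call_tool("get_weather", '{"city": "Oslo"}')` runs the tool with the default unit, while `'{"city": "Oslo", "unit": "kelvin"}'` is rejected with an explanatory error instead of an exception.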

Security and rate limiting present important considerations when implementing tool calling. Agents need appropriate access controls and monitoring to prevent misuse of external services. Error handling becomes critical—agents must gracefully handle API failures, invalid responses, and usage limits without disrupting the broader conversation flow.
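Both concerns can be sketched with a token-bucket rate limiter plus retries with exponential backoff, where failures come back as error messages rather than exceptions that would end the conversation. The class and function names are hypothetical, and the choice of exceptions treated as transient (`TimeoutError`, `ConnectionError`) is an assumption; a real client library will raise its own error types.

```python
import time
from typing import Any, Callable, Dict, Optional


class TokenBucket:
    """Allow bursts of up to `capacity` calls, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def guarded_call(fn: Callable[[], Any], bucket: TokenBucket, retries: int = 2,
                 base_delay: float = 0.1,
                 sleep: Callable[[float], None] = time.sleep) -> Dict[str, Any]:
    """Call an external service without letting failures crash the agent loop.

    One token is charged per logical call; retries of that call are not charged.
    """
    if not bucket.try_acquire():
        return {"error": "rate limit reached; try again later"}
    last_error: Optional[Exception] = None
    for attempt in range(retries + 1):
        try:
            return {"result": fn()}
        except (TimeoutError, ConnectionError) as exc:  # assumed transient failures
            last_error = exc
            if attempt < retries:
                sleep(base_delay * 2 ** attempt)  # exponential backoff: 0.1 s, 0.2 s, ...
    return {"error": f"service unavailable after {retries + 1} attempts: {last_error}"}
```

Injecting `clock` and `sleep` keeps the limiter testable without real waiting. Whether retries should also consume tokens depends on the provider’s quota rules, so that choice should be revisited for any specific API.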

The evolution of tool calling capabilities has dramatically expanded what AI agents can accomplish. By connecting language models to real-world systems and data sources, we’re moving beyond simple chat interfaces to truly useful AI assistants that can take action on users’ behalf.

Tula Masterman, AI Researcher

Looking ahead, tool calling will likely become even more sophisticated through improved reasoning capabilities and expanded integration options. The ability to dynamically discover and combine tools opens exciting possibilities for more flexible and capable AI agents that can tackle increasingly complex real-world tasks.

Challenges and Solutions in Agent Development

As autonomous agents become more prevalent in our daily lives, developers face significant hurdles in creating reliable and unbiased systems. Two critical challenges stand at the forefront: data bias and integration complexity. Understanding and addressing these issues is crucial for developing trustworthy AI agents that can serve humanity effectively.

Data bias represents perhaps the most pressing concern in agent development today. When AI systems learn from flawed or limited datasets, they can perpetuate and even amplify existing societal prejudices. For instance, as noted in recent research, an AI recruitment tool might favor male candidates if trained primarily on historical data from male-dominated industries, potentially perpetuating workplace gender disparities.

To combat data bias, developers must implement robust bias-checking mechanisms throughout the development process. This includes carefully auditing training data for representation across different demographics and regularly testing agent outputs for unfair patterns. Creating diverse development teams can also help spot potential biases early in the design phase.
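One simple check for unfair patterns in outputs compares selection rates across groups. The sketch below flags any group whose rate falls below 80% of the highest group’s rate, a threshold borrowed from the "four-fifths rule" used as a rule of thumb in US employment-selection guidance. It is a single heuristic rather than a complete fairness audit, and the groups and decisions are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of positive outcomes per group, from (group, selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, chosen in decisions:
        totals[group] += 1
        selected[group] += int(chosen)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_flags(decisions: Iterable[Tuple[str, bool]],
                           threshold: float = 0.8) -> Dict[str, float]:
    """Return groups whose selection rate is below `threshold` x the top group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical screening outcomes from an agent: 5 of 10 in group A, 2 of 10 in group B.
outcomes: List[Tuple[str, bool]] = (
    [("A", i < 5) for i in range(10)] + [("B", i < 2) for i in range(10)]
)
flags = disparate_impact_flags(outcomes)
```

Here group B’s rate (0.2) is 40% of group A’s (0.5), so it is flagged for review. A flag is a prompt for investigation, not proof of bias; small samples in particular can trigger it by chance.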

Integration challenges pose another significant obstacle. Modern AI agents must seamlessly interact with various systems and APIs while maintaining performance and reliability. These technical complexities often lead to compatibility issues and scalability concerns, particularly when deploying agents across different platforms and environments.

There’s a growing focus on developing the necessary infrastructure to ensure the safe and ethical deployment of AI agents. If an LLM has a hallucination rate of even just 0.1% it could never be trusted in any critical process.

Unite.AI Research Report

| Challenge | Solution |
|---|---|
| Data Bias | Implement robust bias-checking mechanisms, audit training data, and create diverse development teams. |
| Integration Complexity | Adopt standardized communication protocols and robust testing frameworks, and use modular architectures. |
| Security | Implement strong encryption, access controls, and continuous monitoring systems. |
| Cloud Security and Compliance | Use solid data encryption, IAM practices, and provide security awareness training. |
| Cloud Architecture Challenges | Adopt a hybrid cloud integration approach and use middleware solutions. |
| Network Latency | Use CDNs, edge computing, and distributed caching. |
| Data Governance | Implement data quality checks and establish a data governance framework. |
| Legacy Systems Integration | Use middleware and data transformation tools, and consider phased integration. |

Solutions to integration challenges include implementing standardized communication protocols and developing robust testing frameworks. Organizations should also consider adopting modular architectures that allow for easier updates and maintenance of individual components without disrupting the entire system.

Security represents another critical concern in agent development. As these systems gain access to sensitive data and critical operations, protecting against potential breaches becomes paramount. Developers must implement strong encryption, access controls, and continuous monitoring systems to ensure agent security without compromising performance.
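For agents, access control often reduces to deciding which tools a given agent role may invoke. A deny-by-default allowlist with an audit log is one minimal way to express this; the roles, tool names, and logger name below are hypothetical.

```python
import logging
from typing import Dict, FrozenSet

audit_log = logging.getLogger("agent.audit")

# Hypothetical role-to-tool grants. Anything not listed is denied by default.
PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "support_agent": frozenset({"search_kb", "get_order_status"}),
    "billing_agent": frozenset({"get_order_status", "issue_refund"}),
}


def authorize(role: str, tool: str) -> bool:
    """Permit a tool call only if the role explicitly grants it; log every decision."""
    allowed = tool in PERMISSIONS.get(role, frozenset())
    audit_log.info("role=%s tool=%s allowed=%s", role, tool, allowed)
    return allowed


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    authorize("support_agent", "search_kb")     # allowed, and logged
    authorize("support_agent", "issue_refund")  # denied, and logged
```

Logging denied requests as well as allowed ones gives monitoring systems something to alert on, such as an agent repeatedly attempting tools outside its role.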

Looking ahead, the key to successful agent development lies in adopting a comprehensive approach that addresses these challenges holistically. This includes investing in diverse training data, implementing robust integration frameworks, and maintaining strict security protocols. By tackling these challenges head-on, developers can create more reliable and effective autonomous agents that truly benefit society.

Conclusion and Future Directions

The evolution of autonomous agents stands at a pivotal moment, with emerging technologies and architectures reshaping our approach to artificial intelligence. Recent developments, particularly in multi-agent systems, show that the landscape of agent development continues to mature and expand in sophistication. Integrating large language models with autonomous capabilities has opened new frontiers in what agents can achieve.

Several key advancements are poised to transform the field. Collaborative multi-agent systems promise more robust problem-solving, with agents working together to tackle complex tasks. These systems are becoming increasingly adept at handling nuanced interactions, learning from experience, and adapting to dynamic environments, in ways loosely inspired by human cognitive processes.

A promising development lies in the realm of agent orchestration. As recent industry analysis suggests, agent orchestration is emerging as a critical bridge between enterprise data and customer engagement, fundamentally changing how businesses approach complex workflows and decision-making processes.

The future of agent architectures will likely see enhanced reasoning capabilities and more sophisticated decision-making mechanisms. We are moving toward systems that can not only execute tasks but also understand context, learn from interactions, and make nuanced judgments about complex situations. This evolution in agent intelligence suggests a future where autonomous systems can handle increasingly sophisticated responsibilities while maintaining reliability and transparency.

