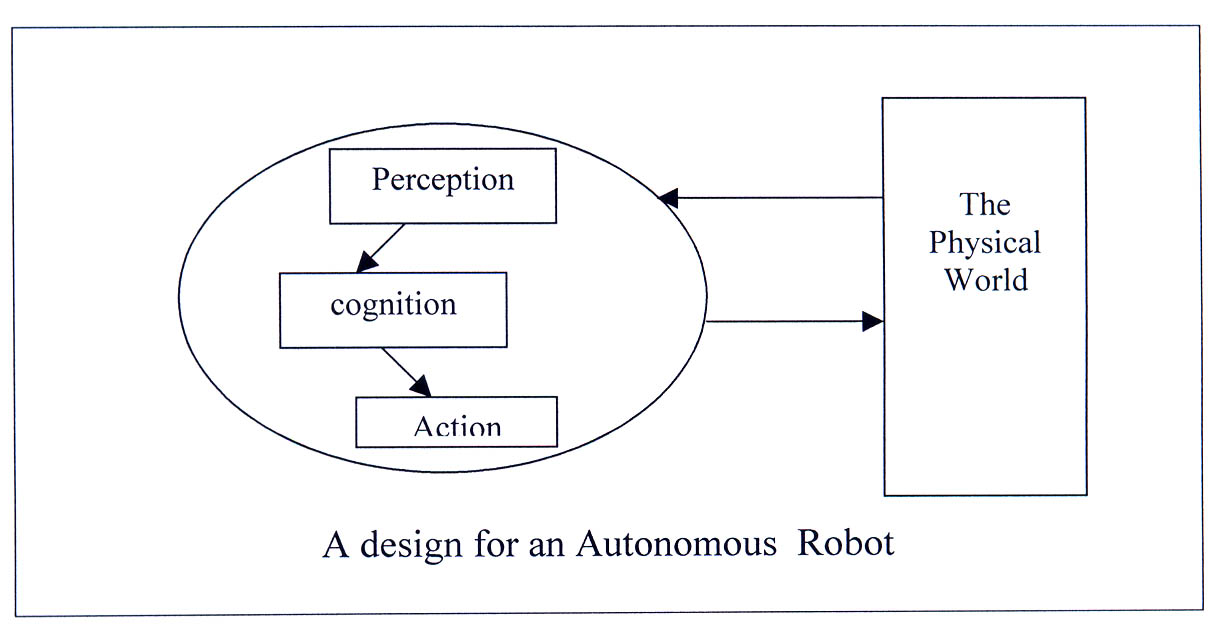

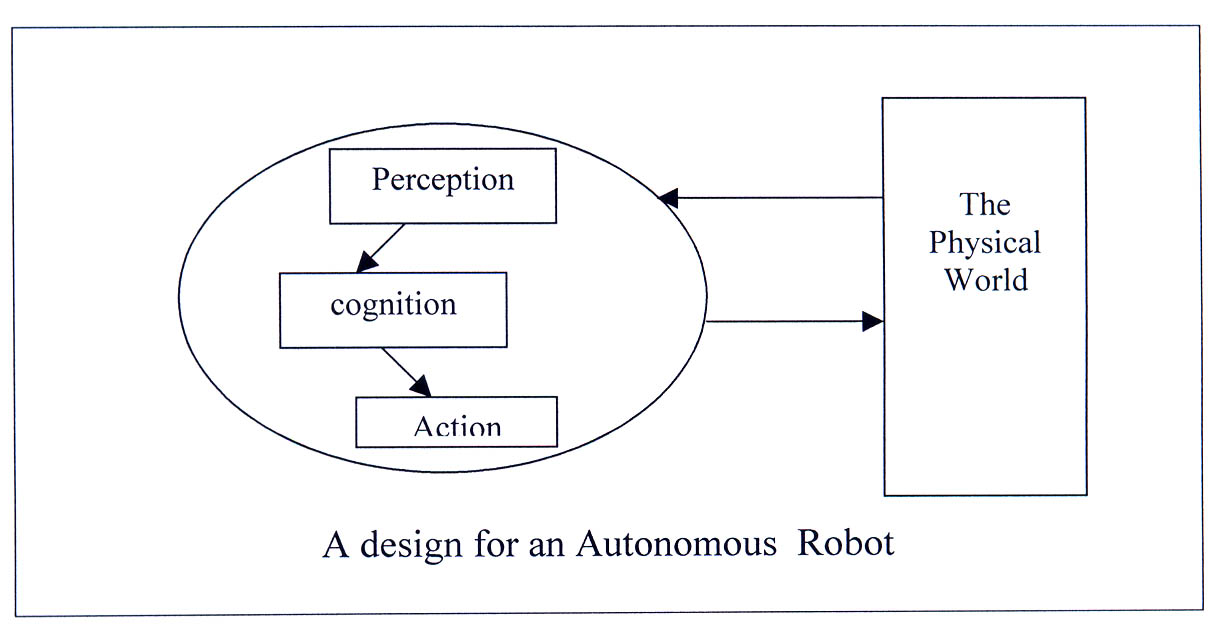

Agent Architectures and Perception

Imagine a smart robot that can see its world, think about what to do, and take action on its own. That’s what agent architectures make possible—they’re like the brain and nervous system of artificial intelligence, giving machines the power to understand and interact with their environment.

Just as humans use their senses to perceive the world around them, AI agents rely on specialized perception modules to gather and process information. These digital eyes and ears help agents make sense of everything from visual data to text and numbers. Whether it’s a self-driving car reading road signs or a chatbot understanding your questions, perception is where it all begins.

At the heart of every agent lies its reasoning engine—the command center that processes information and makes decisions. Think of it as the agent’s brain, carefully weighing options and choosing the best course of action. This isn’t just simple if-then logic; modern reasoning engines can handle complex situations and adapt their strategies based on what they learn.

Speaking of learning, that’s where things get really interesting. Today’s AI agents don’t just follow pre-programmed rules—they get better over time through experience, just like we do. Learning modules allow agents to recognize patterns, remember what works (and what doesn’t), and continuously improve their performance.

We’ll explore three fascinating approaches to building these intelligent systems: symbolic architectures that use logical rules and symbols, connectionist systems inspired by the human brain’s neural networks, and evolutionary designs that learn through a process similar to natural selection. Each offers unique strengths in helping agents become smarter and more capable.

Components of the Perception Module

The perception module serves as the sensory system of an AI agent, enabling it to understand and interact with its environment through sophisticated data processing. This critical component transforms raw sensory input into meaningful information that guides the agent’s decision-making process.

At the foundation of the perception module are sensors that act as the agent’s interface with the physical world. A self-driving car, for example, combines cameras for visual input with LiDAR for measuring distance and depth, collecting environmental data across multiple modalities. These sensors can detect various stimuli, including visual, audio, and other forms of input that the agent needs to process.

The data processing units serve as the next crucial layer, handling the massive streams of raw sensory data. These units filter, normalize, and transform the incoming signals into structured formats that the agent can effectively analyze. For instance, when processing visual data, these units might handle tasks like noise reduction and feature extraction, similar to how the human brain preprocesses visual information before deeper analysis.
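The filter-and-normalize step can be sketched in a few lines of Python. This is a minimal illustration, assuming a one-dimensional numeric signal such as a stream of range readings; `moving_average`, `min_max_normalize`, and `preprocess` are hypothetical names, and production perception stacks typically use more sophisticated filters.

```python
from typing import List


def moving_average(signal: List[float], window: int = 3) -> List[float]:
    """Noise reduction: trailing moving average over the last `window` samples."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed: List[float] = []
    running_sum = 0.0
    for i, value in enumerate(signal):
        running_sum += value
        if i >= window:
            running_sum -= signal[i - window]  # drop the sample leaving the window
        smoothed.append(running_sum / min(i + 1, window))
    return smoothed


def min_max_normalize(values: List[float]) -> List[float]:
    """Normalization: rescale to [0, 1]; a constant signal maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


def preprocess(raw: List[float], window: int = 3) -> List[float]:
    """Hypothetical data-processing unit: smooth first, then normalize."""
    return min_max_normalize(moving_average(raw, window))
```

For example, `preprocess([0.0, 10.0, 0.0, 10.0], window=2)` returns `[0.0, 1.0, 1.0, 1.0]`: smoothing damps the alternating spikes before the values are rescaled.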

Pattern recognition components represent perhaps the most sophisticated element of the perception module. Using advanced algorithms and neural networks, these components identify meaningful patterns and regularities in the processed data. An autonomous robot in a warehouse, for example, uses pattern recognition to identify different types of packages and obstacles, allowing it to navigate safely while performing its tasks.

The decision-making interface acts as the bridge between perception and action, integrating the processed sensory information with the agent’s knowledge base to facilitate informed choices. This component evaluates the recognized patterns and contextual information to determine appropriate responses. For instance, when an AI-powered security system detects unusual activity, the decision-making interface analyzes the pattern against known security threats before triggering any alerts.

Working in concert, these components enable the perception module to process complex environmental data efficiently. The seamless integration of sensors, data processing units, pattern recognition, and decision-making interfaces allows AI agents to maintain awareness of their surroundings and respond appropriately to changing conditions. This sophisticated interplay of components mirrors the human perceptual system, though operating at different scales and with distinct mechanisms.
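To make the hand-offs between these components concrete, here is a toy end-to-end sketch modeled on the security-system example. Everything in it is an assumption for illustration: a sensor frame is a list of numbers, feature extraction keeps only the mean and peak, pattern recognition is nearest-centroid matching, and the decision interface alerts only when the match is both a known threat and close enough. Real systems would use learned models at each stage.

```python
from dataclasses import dataclass
from math import dist
from typing import Dict, Sequence, Set, Tuple

Features = Tuple[float, float]


@dataclass
class Perception:
    label: str       # best-matching pattern
    distance: float  # how far the input is from that pattern's centroid


def extract_features(frame: Sequence[float]) -> Features:
    """Data processing: summarize a raw sensor frame as (mean, peak)."""
    return (sum(frame) / len(frame), max(frame))


def recognize(features: Features, centroids: Dict[str, Features]) -> Perception:
    """Pattern recognition: nearest-centroid matching against known patterns."""
    label = min(centroids, key=lambda name: dist(features, centroids[name]))
    return Perception(label, dist(features, centroids[label]))


def decide(perception: Perception, known_threats: Set[str], max_distance: float) -> str:
    """Decision interface: alert only on a close match to a known threat."""
    if perception.label in known_threats and perception.distance <= max_distance:
        return "alert"
    return "log"


def perceive(frame: Sequence[float], centroids: Dict[str, Features],
             known_threats: Set[str], max_distance: float = 2.0) -> str:
    """Sensor frame -> features -> recognized pattern -> response."""
    return decide(recognize(extract_features(frame), centroids),
                  known_threats, max_distance)
```

With `centroids = {"normal": (1.0, 2.0), "intrusion": (6.0, 9.0)}`, the frame `[5.0, 7.0, 9.0, 3.0]` maps exactly onto the intrusion pattern and returns `"alert"`, while `[20.0, 20.0]` is nearest to the intrusion pattern but too far away to trust, so it is only logged.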

Symbolic, Connectionist, and Evolutionary Architectures

The landscape of artificial intelligence architectures presents three distinct approaches, each offering unique solutions for building intelligent systems. These architectures represent different philosophies about how to create machines that can think and learn.

Symbolic architectures, often called classic AI or rule-based systems, rely on explicit logic and well-defined symbols to make decisions. Much as humans use symbols to assign meaning to objects and concepts, these systems reason over explicit if-then rules, often stored in the knowledge base of an expert system. As industry experts note, symbolic AI excels in applications with clear-cut rules and goals, such as chess or simple decision trees.
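A minimal forward-chaining sketch shows how if-then rules drive a symbolic system. The rules below are hypothetical toy examples, not a real diagnostic knowledge base; the point is that each conclusion is derived from explicit conditions and recorded in a trace, which is what makes this style of reasoning auditable.

```python
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (if all of these facts hold, then conclude this)


def forward_chain(facts: Set[str], rules: List[Rule]) -> Tuple[Set[str], List[str]]:
    """Fire if-then rules until no new fact can be derived.

    Returns the final fact set plus a trace of every rule that fired,
    which is what makes symbolic reasoning easy to explain and audit.
    """
    known = set(facts)
    trace: List[str] = []
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                trace.append(" & ".join(sorted(conditions)) + " -> " + conclusion)
                changed = True
    return known, trace


# Hypothetical toy rules, for illustration only.
RULES: List[Rule] = [
    (frozenset({"has_fever", "has_cough"}), "possible_flu"),
    (frozenset({"possible_flu", "high_risk_patient"}), "refer_to_doctor"),
]
```

Starting from `{"has_fever", "has_cough", "high_risk_patient"}`, both rules fire and the trace lists them in order; starting from `{"has_fever"}` alone, nothing new is derived.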

Connectionist architectures take an entirely different approach, drawing inspiration from the human brain’s neural structure. These systems utilize artificial neural networks composed of interconnected nodes, or ‘neurons,’ that process and transmit information. Unlike symbolic systems, connectionist architectures learn through exposure to data, identifying patterns and relationships without explicitly programmed rules. This approach has proven particularly effective in tasks involving pattern recognition and data-driven decision making.
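The contrast with symbolic systems is easiest to see in the smallest possible neural network: a single perceptron. In this sketch, which assumes the classic perceptron learning rule with a step activation, the network is only shown input/output examples of logical AND and adjusts its weights until its outputs match; no if-then rule for AND appears anywhere in the code.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """A single artificial neuron: weighted sum followed by a step activation."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples: List[Sample], epochs: int = 20,
                     lr: float = 1.0) -> Tuple[List[float], float]:
    """Perceptron learning rule: nudge weights toward each misclassified example."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
    return weights, bias


# Examples of logical AND; the rule itself is never written down.
AND_DATA: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_DATA)
```

A single perceptron can only learn linearly separable functions, so it cannot represent XOR; stacking layers of such units with nonlinear activations is what gives modern neural networks their expressive power.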

Evolutionary architectures represent the third major paradigm, mimicking the principles of biological evolution to develop adaptive solutions. These systems employ algorithms that generate, test, and refine potential solutions through processes analogous to natural selection. The strength of evolutionary architectures lies in their ability to discover novel solutions to complex problems through iterative improvement and adaptation.
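A genetic algorithm is one common evolutionary technique. The sketch below, assuming the toy "OneMax" objective (maximize the number of 1 bits in a bit string), shows the generate, test, and refine loop: tournament selection keeps fitter candidates, crossover recombines them, and mutation introduces variation. The parameter values are illustrative, not tuned.

```python
import random
from typing import List

Genome = List[int]


def fitness(genome: Genome) -> int:
    """OneMax toy objective: count the 1 bits (higher is better)."""
    return sum(genome)


def tournament(population: List[Genome], rng: random.Random, k: int = 3) -> Genome:
    """Selection: the fittest of k randomly chosen individuals wins."""
    return max(rng.sample(population, k), key=fitness)


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """Recombination: splice two parents at a random cut point."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def mutate(genome: Genome, rng: random.Random, rate: float) -> Genome:
    """Variation: flip each bit with a small probability."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def evolve(length: int = 20, pop_size: int = 30, generations: int = 100,
           seed: int = 0) -> Genome:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    best = max(population, key=fitness)  # best genome seen so far
    for _ in range(generations):
        population = [
            mutate(crossover(tournament(population, rng), tournament(population, rng), rng),
                   rng, 1.0 / length)
            for _ in range(pop_size)
        ]
        best = max(population + [best], key=fitness)
        if fitness(best) == length:
            break  # perfect solution found
    return best
```

Because `best` only ever changes to an equal or fitter genome, running more generations with the same seed never returns a worse result than the initial population's best.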

Strengths and Applications

Each architectural approach brings distinct advantages to different types of problems. Symbolic architectures excel in scenarios requiring explicit reasoning and transparent decision-making processes. Their rule-based nature makes them particularly valuable in applications where decisions must be explainable and verifiable, such as medical diagnosis systems or financial compliance tools.

Connectionist systems shine in handling noisy or incomplete data, making them ideal for tasks like image recognition, natural language processing, and other pattern-matching applications. Their ability to learn from examples rather than explicit programming makes them highly adaptable to new situations.

Evolutionary architectures prove most valuable when tackling optimization problems or situations where the solution space is too vast for traditional approaches. They excel at finding creative solutions to complex problems, particularly in scenarios where multiple competing objectives must be balanced.

Integration and Future Directions

Modern AI development increasingly recognizes that these architectures need not be mutually exclusive. In fact, some of the most promising advances come from hybrid approaches that combine elements from multiple architectural paradigms. For instance, neuro-symbolic systems merge the learning capabilities of connectionist networks with the explicit reasoning of symbolic systems.

The future of AI architecture likely lies in finding innovative ways to leverage the strengths of each approach while minimizing their individual limitations. Through careful integration and continued refinement, these architectural approaches will continue to evolve, pushing the boundaries of what artificial intelligence can achieve.

The key to advancing AI lies not in choosing between these architectures, but in understanding how to combine their strengths to create more capable and adaptable systems.

Dr. Rebecca Parsons, ThoughtWorks CTO

The Role of Reasoning Engines

At the core of every autonomous AI agent lies a sophisticated reasoning engine—the computational brain that powers intelligent decision-making. These specialized software components go far beyond simple data processing, employing advanced techniques to analyze information, draw logical conclusions, and determine optimal courses of action. Modern reasoning engines leverage multiple approaches to replicate human-like decision making.

Rule-based systems form the foundation, using explicit if-then logic to evaluate scenarios and reach conclusions. This provides a transparent framework for understanding how the engine arrives at specific decisions, which is crucial for applications where accountability matters.

Machine learning models add another powerful dimension, enabling reasoning engines to learn from experience and improve their decision-making capabilities over time. Rather than relying solely on predefined rules, these systems can identify patterns in data and adapt their reasoning strategies accordingly. This flexibility allows them to handle novel situations and evolving requirements with increasing sophistication.

Search algorithms represent yet another critical tool in the reasoning engine’s arsenal. These computational methods help AI agents efficiently explore possible solutions and identify optimal paths forward, even in complex problem spaces with numerous variables. By systematically evaluating different options against defined criteria, search algorithms ensure the reasoning process remains both thorough and practical.

The true power of modern reasoning engines comes from their ability to combine these various techniques synergistically. For instance, a reasoning engine might use rule-based logic to establish fundamental constraints, leverage machine learning to optimize within those boundaries, and employ search algorithms to efficiently explore the solution space. This multi-faceted approach enables AI agents to tackle increasingly sophisticated challenges while maintaining reliable and explicable decision-making processes.
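The division of labor described above can be sketched as a tiny grid planner. This is an illustrative assumption, not a reference design: `allowed` plays the role of the rule layer (hard constraints), `heuristic` is the slot where a learned scoring model could plug in, and A* search explores the solution space. Manhattan distance is used as the default because A* is only guaranteed to return a shortest path when the heuristic never overestimates the remaining cost, a property a learned model would not automatically have.

```python
import heapq
from typing import Callable, Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def allowed(cell: Cell, width: int, height: int, blocked: Set[Cell]) -> bool:
    """Rule layer: hard constraints no plan may violate (stay on the map, avoid obstacles)."""
    x, y = cell
    return 0 <= x < width and 0 <= y < height and cell not in blocked


def manhattan(a: Cell, b: Cell) -> int:
    """Scoring layer stand-in: estimated remaining cost to the goal."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def plan(start: Cell, goal: Cell, width: int, height: int, blocked: Set[Cell],
         heuristic: Callable[[Cell, Cell], float] = manhattan) -> Optional[List[Cell]]:
    """Search layer: A* over grid cells, expanding the most promising cell first."""
    frontier = [(heuristic(start, goal), 0, start)]
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    cost: Dict[Cell, int] = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > cost[cell]:
            continue  # stale entry; a cheaper route to this cell was already found
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if not allowed(nxt, width, height, blocked):
                continue  # the rules prune this move before search considers it
            new_cost = g + 1
            if new_cost < cost.get(nxt, float("inf")):
                cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(frontier, (new_cost + heuristic(nxt, goal), new_cost, nxt))
    return None  # no plan satisfies the constraints
```

On a 3x3 grid with cells `(1, 0)` and `(1, 1)` blocked, `plan((0, 0), (2, 0), 3, 3, {(1, 0), (1, 1)})` routes around the obstacle through the row at `y = 2`; blocking the entire middle column makes it return `None`.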

Learning Modules for Continuous Improvement

Learning modules are central to intelligent AI systems, enabling agents to continuously evolve and refine their capabilities. Many rely on reinforcement learning techniques to transform raw experiences into optimized decision-making patterns, much as a child learns through trial and error.

Through reinforcement learning, AI agents develop sophisticated policies for action selection by interacting with their environment. When an agent takes an action, it receives feedback in the form of rewards or penalties. This feedback mechanism allows the agent to understand which actions lead to positive outcomes in different situations. For example, an autonomous vehicle’s learning module might receive positive rewards for maintaining safe distances from other cars and negative feedback for unsafe maneuvers.
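The reward-driven loop can be illustrated with tabular Q-learning on a toy problem. In this sketch, the environment is a hypothetical five-cell corridor (not a driving simulator): the agent starts at cell 0, earns +1 for reaching cell 4, and pays a small penalty of 0.01 per step, a stand-in for the positive and negative feedback described above. Epsilon-greedy action selection balances trying new actions against exploiting what has already been learned.

```python
import random
from typing import List, Tuple

N_STATES = 5        # corridor positions 0..4; position 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state: int, move: int) -> Tuple[int, float, bool]:
    """Toy environment: reward +1 for reaching the goal, small penalty per step."""
    nxt = min(max(state + move, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else -0.01), done


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))
            else:
                action = max(range(len(ACTIONS)), key=lambda a: q[state][a])
            nxt, reward, done = step(state, ACTIONS[action])
            # Q-learning update toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q
```

After training, `q[s][1] > q[s][0]` for every non-goal cell, meaning the learned policy is simply to move right toward the goal.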

Research has shown that these learning modules excel at handling complex, dynamic environments where predefined rules would be insufficient. Through continuous interaction and feedback, agents can discover optimal strategies for achieving their objectives while adapting to changing conditions. This adaptability is crucial in real-world applications where environments are rarely static.

The strength of learning modules lies in their ability to generalize from past experiences to new situations. Rather than simply memorizing specific scenarios, agents learn underlying patterns and principles that can be applied broadly. For instance, a robotic manufacturing system might learn general principles about handling different materials safely, allowing it to adapt its behavior when encountering new materials with similar properties.

Traditional programming relies on explicit instructions, whereas learning modules enable AI agents to discover solutions autonomously. This self-improving capability is particularly valuable in scenarios where optimal strategies are not obvious to human designers or when conditions change unexpectedly. A trading agent, for example, can continuously refine its strategy based on market conditions without requiring constant human intervention.

As an interdisciplinary field of trial-and-error learning and optimal control, reinforcement learning resembles how humans reinforce their intelligence by interacting with the environment and provides a principled solution for sequential decision making and optimal control in large-scale and complex problems.

Shengbo Eben Li, Professor at Tsinghua University

Modern learning modules often combine multiple learning approaches to enhance their effectiveness. They might integrate supervised learning for pattern recognition with reinforcement learning for decision-making, creating more robust and capable systems. This hybrid approach allows agents to leverage different types of learning for different aspects of their operation, leading to more sophisticated and nuanced behavior.

| Aspect | Traditional Reinforcement Learning | Deep Reinforcement Learning |
|---|---|---|
| Core Algorithms | Q-learning, Policy Gradient Methods | Deep Q-Networks (DQN), Deep Deterministic Policy Gradient (DDPG), Proximal Policy Optimization (PPO) |
| Data Handling | Lookup tables for values/policies | Neural networks for approximating values/policies |
| Scalability | Effective for smaller problems | Handles large, unstructured environments |
| Computation and Resource Demand | Modest computational setups | Requires significant computational power and memory, often GPUs or TPUs |
| Learning from Raw Data | Needs structured data | Can learn from raw, unstructured data like images |
| Applications | Basic robotics, inventory management, simple board games | Autonomous driving, healthcare diagnostics, complex video games |
| Exploration Methods | Simple strategies such as epsilon-greedy | Epsilon-greedy (e.g. DQN), action noise (e.g. DDPG), or sampling from a stochastic policy (e.g. PPO); experience replay is often used alongside these, but it reuses past data rather than driving exploration |
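Experience replay, which appears in the deep reinforcement learning column above, is easier to understand in code: it stores past transitions and samples them at random for training updates, so it reuses data rather than deciding which actions to try. The sketch below assumes integer states and actions for simplicity; deep RL libraries provide their own, more elaborate buffers.

```python
import random
from collections import deque
from typing import Deque, List, NamedTuple


class Transition(NamedTuple):
    state: int
    action: int
    reward: float
    next_state: int
    done: bool


class ReplayBuffer:
    """Fixed-size store of past transitions, sampled uniformly for training."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.buffer: Deque[Transition] = deque(maxlen=capacity)  # oldest entries drop off
        self.rng = random.Random(seed)

    def push(self, transition: Transition) -> None:
        self.buffer.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Random minibatches break up the correlation between consecutive steps.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)
```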

Applications of AI Agent Architectures

AI agent architectures have significantly impacted multiple industries through their ability to perceive, reason, and learn from their environments. In autonomous vehicles, companies such as Cruise, a subsidiary of General Motors, have deployed sophisticated AI agents that continuously process sensor data to navigate complex urban environments safely and efficiently. These self-driving systems leverage computer vision and decision-making algorithms to analyze road conditions, predict other vehicles’ movements, and make split-second driving decisions.

In healthcare, AI agents are enhancing diagnostic capabilities. Aidoc’s AI system analyzes medical imaging scans, helping radiologists identify anomalies like tumors or fractures with greater speed and accuracy. The agent’s perception components process medical images, while its reasoning modules apply learned patterns from thousands of previous cases to assist in diagnosis.

Financial markets benefit from AI agents’ analytical capabilities in market analysis. These systems process vast amounts of market data, news feeds, and economic indicators in real time. The agents learn from historical patterns and adapt their strategies based on changing market conditions, enabling more informed trading decisions and risk management.

In consumer services such as streaming and online retail, recommendation engines showcase the power of personalized AI agents. Netflix’s recommendation system, for example, analyzes viewing patterns, preferences, and user behavior to suggest relevant content. The agent continuously learns from user interactions, refining its understanding of individual preferences to deliver increasingly accurate recommendations.
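A toy version of this feedback loop fits in a short class. It is a hypothetical illustration only, not how Netflix's system works: each interaction nudges the user's affinity for a genre toward an engagement score between 0 and 1, and unseen titles are ranked by that affinity. Genres without any history fall back to a neutral prior of 0.5, which is also an assumption.

```python
from typing import Dict, List, Set


class PreferenceModel:
    """Per-user genre affinities, updated after every interaction."""

    def __init__(self, learning_rate: float = 0.3, prior: float = 0.5) -> None:
        self.lr = learning_rate
        self.prior = prior  # affinity assumed for genres with no history
        self.affinity: Dict[str, float] = {}

    def record(self, genre: str, engagement: float) -> None:
        """engagement in [0, 1], e.g. the fraction of a title the user watched."""
        old = self.affinity.get(genre, self.prior)
        # Exponential moving average: recent behavior counts more than old behavior.
        self.affinity[genre] = old + self.lr * (engagement - old)

    def recommend(self, catalog: Dict[str, str], seen: Set[str], k: int = 2) -> List[str]:
        """Rank unseen titles (title -> genre) by the user's affinity for their genre."""
        unseen = [title for title in catalog if title not in seen]
        return sorted(unseen, key=lambda t: self.affinity.get(catalog[t], self.prior),
                      reverse=True)[:k]
```

After one fully watched documentary and one abandoned horror title, documentaries rank first, untouched genres sit in the middle, and horror drops to the bottom.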

Navigation systems represent another crucial application, where AI agents optimize route planning and traffic management. These systems process real-time traffic data, weather conditions, and historical patterns to suggest optimal routes. The networks behind such connected services benefit as well: companies like Nokia employ AI agents to monitor network performance and minimize system downtime.
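Route planning with live traffic is commonly framed as a shortest-path problem. This sketch uses Dijkstra's algorithm, assuming each road segment has a free-flow travel time in minutes that is multiplied by a congestion factor from real-time data; the graph and factors are made up for illustration, and commercial navigation systems use considerably more elaborate models.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbor, free-flow minutes)]
Congestion = Dict[Tuple[str, str], float]   # (from, to) -> slowdown multiplier


def fastest_route(graph: Graph, congestion: Congestion, start: str,
                  goal: str) -> Optional[Tuple[float, List[str]]]:
    """Dijkstra's algorithm where each edge costs free-flow time x live congestion."""
    best: Dict[str, float] = {start: 0.0}
    previous: Dict[str, str] = {}
    heap: List[Tuple[float, str]] = [(0.0, start)]
    settled = set()
    while heap:
        minutes, node = heapq.heappop(heap)
        if node in settled:
            continue
        settled.add(node)
        if node == goal:
            path = [goal]
            while path[-1] != start:
                path.append(previous[path[-1]])
            return minutes, path[::-1]
        for neighbor, free_flow in graph.get(node, []):
            cost = minutes + free_flow * congestion.get((node, neighbor), 1.0)
            if cost < best.get(neighbor, float("inf")):
                best[neighbor] = cost
                previous[neighbor] = node
                heapq.heappush(heap, (cost, neighbor))
    return None  # goal unreachable
```

With sample roads `A -> B -> D` (20 minutes) and `A -> C -> D` (25 minutes), doubling the travel time on `A -> B` flips the recommendation to the route via `C`.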

Summary of AI agent applications in various industries

How SmythOS Enhances AI Agent Development

Humanoid robots interact in a high-tech workspace. – Via smythos.com

SmythOS represents a significant leap forward in autonomous AI agent development, offering developers a comprehensive platform that transforms complex coding tasks into intuitive workflows. Through its innovative visual builder, teams can create sophisticated AI agents in minutes rather than weeks, dramatically accelerating the development cycle while maintaining robust security and scalability.

At the heart of SmythOS lies a powerful visual workflow builder that changes how developers approach AI agent creation. Rather than wrestling with complex code, developers can craft sophisticated AI workflows through simple drag-and-drop actions. This visual approach doesn’t just speed up development; it opens doors for subject matter experts who possess valuable domain knowledge but may lack deep coding expertise.

The platform’s built-in monitoring and logging capabilities provide unprecedented visibility into AI agent operations. Developers can track performance metrics in real-time, identify potential issues before they impact production, and ensure their agents operate at peak efficiency. This comprehensive oversight means teams can deploy AI agents with confidence, knowing they have the tools to maintain and optimize performance.

SmythOS excels in scalability, handling everything from small-scale deployments to enterprise-level operations. The platform automatically manages resource allocation, ensuring AI agents can scale seamlessly as demand grows. This built-in scalability eliminates many of the technical hurdles traditionally associated with AI deployment, allowing developers to focus on innovation rather than infrastructure management.

Integration capabilities stand as another cornerstone of the SmythOS platform. With pre-built API integrations for popular services like Slack, Trello, and GitHub, developers can quickly connect their AI agents to existing tools and workflows. This extensive integration ecosystem ensures AI agents can seamlessly interact with the broader technology stack, maximizing their utility and impact.

Security remains paramount in the SmythOS architecture. The platform implements robust data encryption, OAuth integration, and IP control features, providing enterprise-grade protection for sensitive information. These security measures give organizations the confidence to deploy AI agents in production environments, knowing their data and operations remain protected.

SmythOS democratizes AI, putting the power of autonomous agents into the hands of businesses of all sizes. It breaks down barriers, speeds up development, and opens new frontiers of what’s possible with AI.

Alexander De Ridder, Co-Founder and CTO of SmythOS

The platform’s visual debugging environment represents another significant advancement in AI agent development. This innovative tool allows developers to identify and resolve issues quickly, ensuring their AI agents run smoothly and efficiently. By providing clear visibility into the agent’s decision-making process, SmythOS enables faster troubleshooting and more reliable deployments.

Addressing Challenges in AI Agent Architectures

AI agents promise significant advances in automation and decision-making, but implementing them effectively involves overcoming complex technical and operational hurdles. Organizations must address these challenges thoughtfully to realize the full potential of autonomous systems.

Integration with existing IT infrastructure presents one of the most significant obstacles. According to research by TechTarget, many enterprises struggle when their development lifecycles don’t align with AI deployment needs. Legacy systems often lack the flexibility and processing capabilities required for AI agents, leading to compatibility issues and performance bottlenecks.

Bias in training data is another critical concern that can severely impact AI agent performance. Historical prejudices and unbalanced datasets can cause AI systems to perpetuate existing inequities. A collaborative study found that even well-intentioned AI models can inadvertently discriminate against certain demographic groups when trained on biased historical data.
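One common way to check a model for the kind of disparity described above is to compare selection rates across groups, a metric known as demographic parity. This is a sketch under simple assumptions (binary outcomes, one group label per decision); it is not the method used in the study mentioned here, and a large gap is a signal to investigate rather than proof of discrimination on its own.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive outcomes (1) per group, from (group, outcome) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions: Iterable[Tuple[str, int]]) -> float:
    """Largest difference in selection rate between any two groups (0 = parity)."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())
```

In a sample where group `a` receives positive outcomes 75% of the time and group `b` 25%, the gap is 0.5. What gap is acceptable is a policy decision that depends on the application.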

Continuous monitoring poses its own set of challenges, requiring sophisticated feedback mechanisms and regular performance assessments. Organizations must establish robust monitoring frameworks to detect issues early and ensure AI agents operate within intended parameters. This involves tracking not just technical metrics but also the broader impact of AI decisions on business outcomes.
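A basic building block for such a monitoring framework is a drift check on a rolling window. The sketch assumes a single numeric metric (for example, accuracy measured against labeled feedback) and a fixed tolerance around a baseline; the class name and thresholds are illustrative, and real deployments usually track many metrics and route alerts to people who can act on them.

```python
from collections import deque
from typing import Deque


class MetricMonitor:
    """Flags drift when the rolling mean of a metric leaves a tolerance band."""

    def __init__(self, baseline: float, tolerance: float, window: int = 50) -> None:
        self.baseline = baseline    # expected value, e.g. accuracy at launch
        self.tolerance = tolerance  # allowed deviation before alerting
        self.recent: Deque[float] = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        """Record one measurement; return True if an alert should fire."""
        self.recent.append(value)
        rolling_mean = sum(self.recent) / len(self.recent)
        return abs(rolling_mean - self.baseline) > self.tolerance
```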

The focus needs to shift from keeping the data constant and endlessly tweaking the model to cleaning and prepping the data and building a model based on that information.

Dr. Manjeet Rege, Director of the Center for Applied Artificial Intelligence

Interdisciplinary collaboration proves essential for addressing these challenges effectively. Technical teams must work closely with domain experts, ethicists, and business stakeholders to develop comprehensive solutions. This collaborative approach helps ensure AI agents meet technical requirements while adhering to ethical principles and business objectives.

Organizations are finding success by implementing iterative development processes that incorporate continuous feedback loops. Regular testing, validation, and refinement of AI models help identify and correct issues before they impact production systems. This approach allows for more robust and reliable AI agent architectures that can adapt to changing requirements and conditions.

Conclusion and Future Directions in AI Agents

The landscape of autonomous AI agents is evolving rapidly, with advances in perception, reasoning, and learning capabilities reshaping possibilities. These intelligent systems have grown from simple rule-based programs to sophisticated agents capable of understanding context, making complex decisions, and learning from their experiences. Recent research indicates that integrating enhanced reasoning frameworks and adaptive learning modules will drive the next wave of innovation.

Several key developments will define the future of AI agents. Advances in perception systems will enable agents to better understand and interpret their environment, while improvements in reasoning capabilities will lead to more nuanced decision-making processes. According to recent research, the combination of these enhanced capabilities will create more resilient and adaptable autonomous systems.

The evolution of learning modules presents exciting possibilities. Future AI agents will likely demonstrate improved abilities to learn from experience, adapt to new situations, and handle increasingly complex tasks. This progression towards more sophisticated learning capabilities will enable agents to tackle challenges that currently require significant human oversight.

Multi-agent systems represent another promising direction, where multiple specialized agents collaborate to solve complex problems. These systems show potential for handling intricate tasks that single agents struggle with, offering new possibilities for enterprise automation and problem-solving.
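A classic way for agents to divide work is a bidding protocol, loosely in the spirit of the Contract Net Protocol: a coordinator announces each task, capable agents bid their estimated finish time, and the earliest finisher wins. The agents, skills, and costs below are hypothetical, and ties are broken by agent name purely to keep the sketch deterministic.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Task = Tuple[str, str, float]  # (task id, required skill, amount of work)


@dataclass
class Agent:
    name: str
    skills: Dict[str, float]  # skill -> time per unit of work
    busy_until: float = 0.0   # when the agent finishes its current assignments

    def bid(self, skill: str, work: float) -> Optional[float]:
        """Offer an estimated finish time, or decline if the skill is missing."""
        if skill not in self.skills:
            return None
        return self.busy_until + self.skills[skill] * work


def allocate(tasks: List[Task], agents: List[Agent]) -> Dict[str, Optional[str]]:
    """Announce each task, collect bids, and award it to the earliest finisher."""
    assignment: Dict[str, Optional[str]] = {}
    for task_id, skill, work in tasks:
        bids = [(agent.bid(skill, work), agent) for agent in agents]
        valid = [(finish, agent) for finish, agent in bids if finish is not None]
        if not valid:
            assignment[task_id] = None  # no agent can do this task
            continue
        finish, winner = min(valid, key=lambda b: (b[0], b[1].name))  # ties -> name order
        winner.busy_until = finish
        assignment[task_id] = winner.name
    return assignment
```

With a fast `vision` specialist and a slower `generalist`, the first two image tasks go to the specialist; for the third, both would finish at the same time, so the tie-break hands it to the generalist, and a task nobody can perform is left unassigned.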

As the field advances, platforms like SmythOS are emerging as essential tools for developing and deploying autonomous agents. By providing robust development environments and simplified deployment processes, these platforms are making it easier for organizations to harness the power of AI agents while navigating the complexities of implementation.


Disclaimer: The information presented in this article is for general informational purposes only and is provided as is. While we strive to keep the content up-to-date and accurate, we make no representations or warranties of any kind, express or implied, about the completeness, accuracy, reliability, suitability, or availability of the information contained in this article.

Any reliance you place on such information is strictly at your own risk. We reserve the right to make additions, deletions, or modifications to the contents of this article at any time without prior notice.

In no event will we be liable for any loss or damage including without limitation, indirect or consequential loss or damage, or any loss or damage whatsoever arising from loss of data, profits, or any other loss not specified herein arising out of, or in connection with, the use of this article.

Despite our best efforts, this article may contain oversights, errors, or omissions. If you notice any inaccuracies or have concerns about the content, please report them through our content feedback form. Your input helps us maintain the quality and reliability of our information.