Imagine a robot that can think and act on its own. That’s what an autonomous agent is all about. These smart systems can make choices without someone telling them what to do every step of the way. Let’s break down how they work!

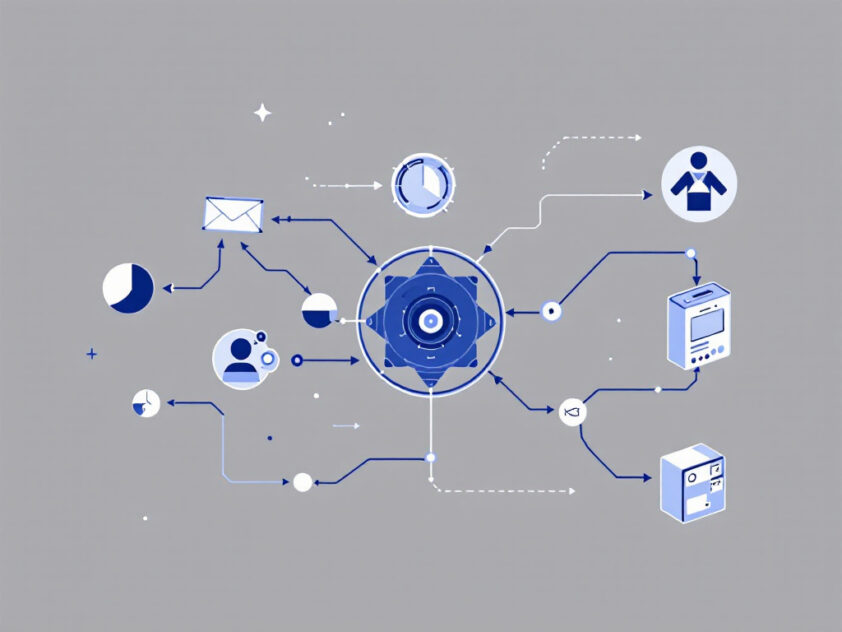

At the heart of every autonomous agent is its architecture. This is like the brain and nervous system of the agent. It has three main parts: memory, planning, and action. Each part plays a big role in helping the agent make good decisions.

Memory: The Agent’s Knowledge Bank

Just like how we remember things, agents need memory too. This is where they store all the info they learn about their world. When an agent sees or does something new, it saves that experience. Later, it can use this info to make better choices.

For example, if an agent is cleaning a house, it might remember where it found dirt last time. This helps it clean more efficiently next time.

Planning: Thinking Ahead

Planning is how agents figure out what to do next. They look at their goal and think about the steps to get there. It’s like when you plan a trip – you think about where you want to go and how to get there.

Agents use search algorithms and logical rules to build plans, comparing the options and picking the one most likely to reach the goal. Sometimes, they might even prepare backup plans in case something goes wrong.

Action: Getting Things Done

After planning, it’s time for action! This is when the agent actually does something in its world. It might move, speak, or change something around it. The action part is super important because it’s how the agent makes real changes.

For a robot exploring Mars, actions might include moving its wheels, taking pictures, or picking up rocks to study.

Autonomous agents are like mini-brains that can think, plan, and act on their own. They’re changing the way we build smart machines!

By working together, these parts – memory, planning, and action – let agents tackle all sorts of tasks. They can drive cars, play chess, or even help doctors! As we make these systems better, they’ll be able to do even more amazing things.
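To make the three parts concrete, here is a minimal sketch of the house-cleaning example from earlier. The class name, method names, and room names are all made up for illustration; real agents are far more involved.

```python
from dataclasses import dataclass, field


@dataclass
class CleaningAgent:
    """Toy agent with memory, planning, and action (all names hypothetical)."""
    # Memory: rooms where dirt was found on earlier runs.
    dirty_before: set = field(default_factory=set)

    def plan(self, rooms):
        # Planning: visit rooms that were dirty last time first.
        # sorted() is stable, so the remaining rooms keep their given order.
        return sorted(rooms, key=lambda room: room not in self.dirty_before)

    def act(self, room, world):
        # Action: clean the room and report whether dirt was found.
        found_dirt = world.get(room, False)
        world[room] = False
        return found_dirt

    def run(self, rooms, world):
        visited = []
        for room in self.plan(rooms):
            if self.act(room, world):
                self.dirty_before.add(room)  # save the experience
            visited.append(room)
        return visited


agent = CleaningAgent()
world = {"hall": False, "kitchen": True, "study": False}
first_run = agent.run(["hall", "kitchen", "study"], world)

world["kitchen"] = True  # the kitchen gets dirty again
second_run = agent.run(["hall", "kitchen", "study"], world)
print(first_run)   # rooms in the order given
print(second_run)  # kitchen first, because the agent remembers it
```

On the second run the agent heads to the kitchen first, because memory changed what the plan looks like.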

Next time you hear about a smart robot or AI system, think about these parts. How might they be using memory to learn? What kind of planning do they do? And what actions can they take to reach their goals? Understanding these basics helps us see how these cool technologies work!

The Role of Memory in Autonomous Agents

Memory is the backbone of intelligent agents, enabling them to learn from experiences and make smarter choices over time. Without it, an agent would start from scratch on every task. Let’s explore how memory shapes these digital decision-makers.

Imagine an AI assistant trying to help you plan a trip. If it can’t remember your preferences or past conversations, you’d have to repeat yourself constantly. That’s why developers craft memory systems that mimic human recall.

Short-Term Memory: The Agent’s Immediate Focus

Short-term memory acts like a digital scratch pad. It holds recent information the agent needs right now. For example, when you ask a chatbot a question, it keeps your query and its current train of thought in short-term memory. This allows for fluid conversations without constant backtracking.

However, short-term memory has limits. Just like humans can only juggle so many thoughts at once, agents built on language models have a finite ‘context window’: the maximum amount of text the model can take in at one time. Once it’s full, older information has to be dropped or summarized to make room for new input.
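A rough sketch of that "pushed out" behavior is shown below. It assumes the limit is counted in messages, which is a simplification: real context windows are measured in tokens. The class and method names are hypothetical.

```python
from collections import deque


class ShortTermMemory:
    """Keeps only the most recent messages (limit counted in messages here)."""

    def __init__(self, max_items):
        # A deque with maxlen silently discards the oldest item when full.
        self.items = deque(maxlen=max_items)

    def add(self, message):
        self.items.append(message)

    def context(self):
        return list(self.items)


memory = ShortTermMemory(max_items=3)
for message in ["hi", "I want a beach trip", "in June", "under $2000"]:
    memory.add(message)

window = memory.context()
print(window)  # the greeting "hi" has been pushed out
```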

Long-Term Memory: The Agent’s Knowledge Bank

Long-term memory is where the real magic happens. It’s a vast storage system for everything the agent has learned. This includes facts, skills, and past experiences. When an agent encounters a new situation, it can draw on this repository to make informed decisions.

Many current AI systems use ‘vector databases’ for long-term storage. These databases store each piece of information as an embedding, a list of numbers that captures its meaning, so that items with similar meanings sit close together and can be retrieved by similarity rather than by exact keywords. It’s like having a librarian who instantly knows which book you need, even if you’re not sure how to ask for it.
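The retrieval idea can be sketched in a few lines. The three-number vectors below are hand-written stand-ins, not output from a real embedding model, and a plain dictionary stands in for the database. Cosine similarity is one common way to compare vectors, used here as an assumption.

```python
import math


def cosine_similarity(a, b):
    # 1.0 means "same direction"; values near 0 mean "unrelated".
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings; the dimensions loosely mean (outdoors, food, city).
memories = {
    "Loved hiking in Norway": [0.9, 0.1, 0.0],
    "Enjoyed street food in Bangkok": [0.1, 0.9, 0.3],
    "Visited museums in Paris": [0.0, 0.2, 0.9],
}


def recall(query_vector, store, top_k=1):
    # Rank stored memories by similarity to the query, best first.
    ranked = sorted(
        store,
        key=lambda text: cosine_similarity(query_vector, store[text]),
        reverse=True,
    )
    return ranked[:top_k]


query = [0.8, 0.0, 0.1]  # stand-in for a query like "mountain trails"
best = recall(query, memories)
print(best)
```

The query never mentions Norway or hiking, yet the hiking memory comes back first because its vector points in nearly the same direction.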

Putting It All Together: Memory in Action

When an agent tackles a task, it blends short-term focus with long-term knowledge. Let’s say you’re using an AI travel planner. As you chat, it keeps your current preferences in short-term memory. Simultaneously, it taps into long-term memory to recall your past trips, general travel tips, and up-to-date information about destinations.

This combination allows the agent to offer personalized, contextually relevant advice. It might suggest, ‘Based on your love for hiking in Norway last year, you might enjoy the trails in New Zealand’s South Island.’

Memory in AI isn’t just about storing data—it’s about creating experiences that feel seamless and personal, just like talking to a knowledgeable friend.

The Impact on Performance

Well-designed memory systems dramatically boost an agent’s capabilities:

- Consistency: Agents remember past interactions, providing coherent long-term assistance.

- Learning: They improve over time by recalling what worked (or didn’t) in similar situations.

- Efficiency: Quick access to relevant information means faster, more accurate responses.

- Personalization: Stored preferences and habits allow for tailored interactions.

However, memory isn’t without challenges. Agents must balance retaining useful information against the risk of being bogged down by irrelevant details. They also need safeguards against remembering incorrect or biased information.

As memory architectures evolve, we’re seeing agents that can handle increasingly complex tasks. From virtual assistants that truly know you, to robots that learn and adapt to new environments, memory is what transforms a simple program into a helpful companion.

The future of autonomous agents is bright, and memory systems are the unsung heroes making it possible. As these systems grow more sophisticated, we can expect AI that not only responds to our needs but anticipates them, creating a world where technology feels less like a tool and more like a collaborative partner.

Planning Mechanisms in Intelligent Agents

Imagine trying to navigate a complex maze without any strategy. That challenge is what intelligent agents face when tackling real-world problems. Planning guides these digital explorers through the labyrinth of possibilities, breaking down large tasks into manageable steps.

At its core, planning in AI is about foresight and adaptability. It involves charting a course of action before execution while remaining flexible enough to pivot when new information arises. But how exactly do these silicon-based thinkers plot their paths?

Task Decomposition: Divide and Conquer

One of the most powerful tools in an agent’s arsenal is task decomposition. It’s the art of breaking down complex problems into smaller, more digestible chunks. Think of it as the AI equivalent of eating an elephant one bite at a time.

Recent advancements, like the Task Decomposition and Agent Generation (TDAG) framework, take this concept further. TDAG dynamically splits tasks and assigns each subtask to a specially generated subagent. This approach enhances adaptability, allowing agents to tackle diverse and unpredictable scenarios with greater finesse.
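The general idea of splitting a task and handing each piece to its own subagent can be sketched as follows. This is not the TDAG implementation: the lookup table stands in for what would normally be a language model call, and every function name is hypothetical.

```python
def decompose(task):
    """Split a task into subtasks using a hard-coded table (an assumption;
    a real system would typically ask a language model to do this)."""
    table = {
        "plan a trip": ["book flights", "reserve hotel", "draft itinerary"],
    }
    # Tasks we cannot split are treated as a single subtask.
    return table.get(task, [task])


def make_subagent(subtask):
    """Generate a tiny 'subagent' dedicated to one subtask."""
    def subagent():
        return f"done: {subtask}"
    return subagent


def solve(task):
    # One subagent per subtask, run in order.
    subagents = [make_subagent(subtask) for subtask in decompose(task)]
    return [agent() for agent in subagents]


results = solve("plan a trip")
print(results)
```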

“Dynamic task decomposition significantly improves system responsiveness and scalability, particularly in handling complex, multi-step tasks.” (Source: Advancing Agentic Systems Research)

Multi-Step Reasoning: Thinking Ahead

While task decomposition breaks problems down, multi-step reasoning puts the pieces back together. It’s the agent’s ability to chain multiple actions and inferences to reach a goal. This process often involves:

- Generating intermediate goals

- Predicting outcomes of actions

- Adjusting plans based on new information

- Balancing short-term and long-term objectives

Frameworks like ReAct exemplify this approach, using an iterative process where agents generate thoughts and actions based on current observations until the task is complete.
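Here is a stripped-down sketch of that thought, action, observation loop. It is written in the spirit of ReAct rather than copied from it: a scripted function stands in for the language model, and the single tool is a made-up lookup table.

```python
def lookup_capital(country):
    # Hypothetical tool: a tiny in-memory lookup table.
    return {"France": "Paris", "Norway": "Oslo"}.get(country, "unknown")


TOOLS = {"lookup_capital": lookup_capital}


def scripted_policy(question, observations):
    """Stand-in for a language model: returns (thought, action, argument)."""
    if not observations:
        return ("I need the capital of Norway.", "lookup_capital", "Norway")
    return (f"The lookup returned {observations[-1]}.", "finish", observations[-1])


def react(question, policy, max_steps=5):
    observations, trace = [], []
    for _ in range(max_steps):
        thought, action, argument = policy(question, observations)
        trace.append((thought, action, argument))
        if action == "finish":
            return argument, trace
        # Run the chosen tool and feed the result back in as an observation.
        observations.append(TOOLS[action](argument))
    return None, trace  # gave up after max_steps


answer, trace = react("What is the capital of Norway?", scripted_policy)
print(answer)
for step in trace:
    print(step)
```

The `max_steps` cap is a design choice worth keeping in any real loop, since a confused model could otherwise cycle forever.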

Adapting on the Fly

The real world is messy and unpredictable. That’s why the best planning mechanisms in AI don’t just set a course—they constantly recalibrate. Adaptive planning allows agents to respond to changes in their environment, unexpected outcomes, or new constraints.

For instance, the ADaPT framework employs a recursive strategy: the agent first tries to carry out a task directly and breaks it into subtasks only when that attempt fails, decomposing further as needed. This flexibility ensures that agents can navigate complex scenarios without getting stuck on a single, rigid plan.
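A small sketch of recursive, as-needed decomposition is shown below. It only illustrates the pattern and is not the framework’s own code: the executor and planner are hard-coded stand-ins, and the cooking tasks are invented.

```python
def try_execute(task):
    """Hypothetical executor: succeeds only on tasks it can do directly."""
    return task in {"boil water", "add pasta", "drain pasta", "heat sauce"}


def split(task):
    """Hypothetical planner: returns subtasks, or an empty list if it has none."""
    plans = {
        "make dinner": ["cook pasta", "heat sauce"],
        "cook pasta": ["boil water", "add pasta", "drain pasta"],
    }
    return plans.get(task, [])


def solve(task, depth=0, max_depth=3):
    # Try the task as-is first; decompose only if that fails.
    if try_execute(task):
        return [task]
    if depth >= max_depth:
        raise RuntimeError(f"gave up on: {task}")
    subtasks = split(task)
    if not subtasks:
        raise RuntimeError(f"cannot execute or split: {task}")
    steps = []
    for subtask in subtasks:
        steps.extend(solve(subtask, depth + 1, max_depth))
    return steps


steps = solve("make dinner")
print(steps)
```

Notice that "heat sauce" is never split, because it succeeds on the first try, while "cook pasta" is broken down one level further.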

The Role of Memory and Learning

Effective planning isn’t just about the present—it’s about learning from past experiences. Advanced agent frameworks are incorporating memory systems and incremental skill libraries. These allow agents to store successful strategies and apply them to future challenges, much like how human experts draw on their wealth of experience.
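As a rough illustration, a skill library can be as simple as a dictionary of strategies that worked. The class and the travel-booking steps below are hypothetical; real frameworks store far richer skills, often as reusable code.

```python
class SkillLibrary:
    """Remembers the steps that worked for each kind of task."""

    def __init__(self):
        self._skills = {}

    def record(self, task_type, steps, succeeded):
        # Only successful strategies are worth keeping.
        if succeeded:
            self._skills[task_type] = list(steps)

    def lookup(self, task_type):
        # Returns None when the agent has no proven strategy yet.
        return self._skills.get(task_type)


library = SkillLibrary()
library.record("book flight", ["search fares", "compare", "pay"], succeeded=True)
library.record("book hotel", ["pick first result"], succeeded=False)

print(library.lookup("book flight"))
print(library.lookup("book hotel"))
```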

“Our proposed evaluation framework fills these gaps by introducing detailed metrics—such as Node F1 Score, Structural Similarity Index, and Tool F1 Score—paired with specialized datasets to assess task decomposition, tool selection, and execution through task graph metrics.” (Source: Advancing Agentic Systems Research)

As AI planning mechanisms continue to evolve, we’re seeing a shift towards more dynamic, adaptable, and context-aware systems. These advancements are pushing the boundaries of what autonomous agents can achieve, from optimizing complex logistics operations to assisting in scientific research.

The next time you’re breaking down a big project into smaller tasks or adjusting your plans on the fly, remember that you’re employing strategies similar to those powering some of the most sophisticated AI agents. The future of planning in AI is not just about making decisions; it’s about making the right decisions at the right time.

Action Execution in Autonomous Agents

Action execution is the core of autonomous agents, enabling them to translate plans into real-world impact. It’s where algorithms meet APIs. Let’s explore how these digital decision-makers handle the complexities of task execution.

At its core, action execution involves an agent selecting and carrying out specific actions to achieve its goals. But it’s not as simple as pressing a button. Agents must contend with dynamic environments, imperfect information, and the potential for failure at every turn.

Action Policies

Imagine navigating a bustling city with a destination in mind, but the path isn’t always clear. This is the challenge autonomous agents face when executing actions. They rely on action policies—sets of rules and strategies that guide their decision-making process.

These policies can range from simple if-then statements to complex algorithms that weigh multiple factors. For example, a customer service agent might always greet the customer before addressing their query. A more advanced agent could dynamically adjust its communication style based on the customer’s mood and past interactions.
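The two ends of that range might look like this in code. Both functions, the mood labels, and the complaint threshold are invented for illustration; a production policy would be learned or far more detailed.

```python
def simple_policy(message):
    # Fixed rule: always greet first, then address the query.
    return ["greet", "answer_query"]


def adaptive_policy(message, mood, past_complaints):
    # Adjusts behavior using the customer's mood and history.
    actions = ["greet"]
    if mood == "frustrated" or past_complaints > 2:
        actions.append("apologise")
        actions.append("escalate_to_human")
    else:
        actions.append("answer_query")
    return actions


print(simple_policy("Where is my order?"))
print(adaptive_policy("Where is my order?", mood="calm", past_complaints=0))
print(adaptive_policy("Where is my order?!", mood="frustrated", past_complaints=0))
```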

Tools of the Trade

Just as we use smartphones, maps, and vehicle GPS to navigate a city, autonomous agents leverage a variety of tools to execute their tasks. These could include:

- APIs for accessing external data sources

- Natural language processing libraries for understanding user input

- Machine learning models for making predictions

- Robotic actuators for physical tasks

The ability to seamlessly integrate and utilize these tools is crucial for effective action execution. It’s not just about having the right tools, but knowing when and how to use them.
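One common way to wire tools into an agent is a simple registry that maps a tool name to a function. The sketch below assumes that design; the two tools are stand-ins (the weather function returns a canned string instead of calling a real API).

```python
def get_weather(city):
    # Stand-in for a call to an external weather API.
    return f"Sunny in {city}"


def add_numbers(a, b):
    return a + b


# The registry: the agent picks a tool by name and passes arguments.
TOOLS = {
    "weather": get_weather,
    "add": add_numbers,
}


def call_tool(name, *args):
    if name not in TOOLS:
        # Fail loudly rather than letting the agent invent a tool.
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](*args)


print(call_tool("weather", "Oslo"))
print(call_tool("add", 2, 3))
```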

The true power of autonomous agents lies not in their individual capabilities, but in their ability to orchestrate a symphony of tools and actions to achieve complex goals.

Challenges in the Real World

Action execution isn’t without its hurdles. Autonomous agents must grapple with:

- Uncertainty: The real world is messy and unpredictable. Agents need robust strategies to handle unexpected situations.

- Partial observability: Agents rarely have complete information about their environment. They must make decisions based on limited data.

- Time constraints: Many tasks require quick reactions. Agents need to balance thoroughness with speed.

- Resource limitations: Whether it’s computational power, battery life, or API call limits, agents must manage finite resources.

Overcoming these challenges requires a delicate balance of planning, adaptability, and learning from experience. It’s a constant process of refinement and improvement.
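Two of the hurdles above, uncertainty and resource limits, can be handled together with a retry loop that draws on a fixed call budget. This is a minimal sketch with hypothetical names; the "flaky sensor" fails twice on purpose to simulate an unreliable environment.

```python
class Budget:
    """Tracks a finite number of allowed calls (for example, an API quota)."""

    def __init__(self, max_calls):
        self.remaining = max_calls

    def spend(self):
        if self.remaining <= 0:
            raise RuntimeError("call budget exhausted")
        self.remaining -= 1


def run_with_retries(action, budget, max_attempts=3):
    last_error = None
    for _ in range(max_attempts):
        budget.spend()  # every attempt costs one call
        try:
            return action()
        except ConnectionError as error:
            last_error = error  # remember the failure and try again
    raise last_error


attempts = {"count": 0}


def flaky_sensor():
    # Simulated unreliable action: fails on the first two tries.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("sensor timeout")
    return 21.5


budget = Budget(max_calls=5)
reading = run_with_retries(flaky_sensor, budget)
print(reading, budget.remaining)
```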

The Future of Action Execution

As technology advances, we’re seeing exciting developments in action execution capabilities. Reinforcement learning is enabling agents to develop more sophisticated action policies through trial and error. Advances in natural language processing are allowing for more nuanced interactions between agents and humans.
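Trial-and-error learning can be shown with a very small example: an epsilon-greedy learner choosing between two routes. The rewards are fixed, made-up numbers so the outcome is easy to follow; real reinforcement learning deals with noisy rewards and many more states.

```python
import random

# Hypothetical, deterministic rewards for two possible actions.
REWARDS = {"slow_route": 0.2, "fast_route": 1.0}


def learn(steps=200, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    actions = list(REWARDS)
    estimates = {action: 0.0 for action in actions}
    counts = {action: 0 for action in actions}
    for step in range(steps):
        if step < len(actions):
            action = actions[step]  # try every action once first
        elif rng.random() < epsilon:
            action = rng.choice(actions)  # explore occasionally
        else:
            action = max(estimates, key=estimates.get)  # exploit the best guess
        reward = REWARDS[action]
        counts[action] += 1
        # Running average of the rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = learn()
best_action = max(estimates, key=estimates.get)
print(best_action, estimates)
```

The learner is never told which route is better; it works that out from the rewards it collects.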

The line between digital and physical action execution is also blurring. With the rise of the Internet of Things and robotics, autonomous agents are increasingly able to affect the physical world directly. Imagine a home assistant that not only tells you the weather but also adjusts your thermostat and closes your windows in response.

As we continue to push the boundaries of what’s possible, it’s crucial to consider the ethical implications of autonomous action execution. How do we ensure these agents act in ways that align with human values and societal norms?

Autonomous agents are getting better at executing complex tasks, but the real challenge is ensuring they do so ethically and safely. We need robust frameworks for AI governance now more than ever.

Action execution in autonomous agents is a field ripe with potential and challenges. As these digital assistants become more integrated into our daily lives, understanding how they translate decisions into actions will be crucial for developers, users, and policymakers alike.

So the next time you interact with an AI, whether it’s a chatbot or a self-driving car, appreciate the complex dance of algorithms, tools, and decision-making that goes into every action it takes. It’s a glimpse into a future where the line between human and machine intelligence continues to blur, opening up new possibilities and questions for us all to explore.

Challenges and Future Directions in Autonomous Agent Architecture

The journey towards creating truly autonomous agents is filled with exciting possibilities, but it has its hurdles. As we push the boundaries of artificial intelligence, we encounter complex challenges requiring innovative solutions. Here are some key obstacles facing researchers and developers in this field.

Multimodal Data Integration

One significant challenge in developing autonomous agents is enabling them to process and understand multimodal data, that is, information from various sources and formats. Imagine an agent trying to make sense of visual cues, spoken commands, and textual information all at once. It’s like asking someone to juggle while riding a unicycle and singing opera!

Current approaches often struggle to integrate these diverse data streams effectively. However, promising research is underway to create more sophisticated neural network architectures that can handle multiple input types simultaneously. For example, some teams are exploring ‘fusion’ techniques that align elements from different unimodal models, allowing for a more holistic understanding of the environment.

Aligning Agents with Human Values

Another crucial challenge is ensuring that autonomous agents remain aligned with human values and ethical considerations. As these systems become more complex and capable of making independent decisions, there’s a growing concern about potential misalignment between their objectives and what we consider important.

Researchers are tackling this issue through various means, including:

- Developing more robust reward functions that better capture human preferences

- Implementing oversight mechanisms that allow for human intervention when necessary

- Exploring techniques like inverse reinforcement learning to infer human values from observed behavior

The goal is to create agents that not only perform tasks efficiently but do so in a way that respects and upholds human ethical standards.
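Of the approaches listed above, the oversight mechanism is the easiest to sketch. Below, risky actions must pass through an approval callback that stands in for a human reviewer. The list of risky actions and all names are assumptions made for the example.

```python
# Hypothetical set of actions that need a human sign-off.
RISKY_ACTIONS = {"delete_files", "send_payment"}


def execute_with_oversight(action, approve):
    """Run an action, asking `approve` (a stand-in for a human) if it is risky."""
    if action in RISKY_ACTIONS and not approve(action):
        return f"blocked: {action}"
    return f"executed: {action}"


def cautious_reviewer(action):
    # This reviewer refuses every risky request.
    return False


print(execute_with_oversight("read_calendar", cautious_reviewer))
print(execute_with_oversight("send_payment", cautious_reviewer))
```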

Addressing the Hallucination Problem

One of the most perplexing challenges in AI development is the phenomenon of ‘hallucinations’—where agents generate or act upon information that isn’t grounded in reality. This issue is particularly prevalent in large language models and can lead to unreliable or even dangerous behavior in autonomous systems.

To combat this, researchers are exploring several avenues:

- Improving the quality and diversity of training data to reduce biases and gaps in knowledge

- Developing better fact-checking and verification mechanisms within AI systems

- Investigating new architectures that are inherently more resistant to hallucinations

The quest to create more ‘grounded’ AI that can distinguish between factual information and its own generated content is ongoing and crucial for developing trustworthy autonomous agents.
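A very simple form of verification is to check each claim against a trusted source before acting on it. The sketch below uses a tiny hand-written fact table as that source, which is an assumption; real systems check against documents, databases, or search results.

```python
# Hypothetical trusted reference data.
TRUSTED_FACTS = {
    "capital of Norway": "Oslo",
    "capital of France": "Paris",
}


def verify(claim_key, claimed_value):
    known = TRUSTED_FACTS.get(claim_key)
    if known is None:
        return "unverified"  # nothing to check against, so do not trust it yet
    return "supported" if known == claimed_value else "contradicted"


print(verify("capital of Norway", "Oslo"))
print(verify("capital of Norway", "Bergen"))
print(verify("capital of Atlantis", "Poseidonis"))
```

Keeping "unverified" separate from "contradicted" lets the agent ask for more evidence instead of guessing.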

Future Directions

The future of autonomous agent development looks both exciting and daunting. Emerging research directions include:

- Exploring ‘embodied AI’ approaches that allow agents to learn through interaction with physical or simulated environments

- Developing more sophisticated common-sense reasoning capabilities

- Creating agents with better explainability, allowing humans to understand their decision-making processes

These advancements could lead to agents that are not only more capable but also more reliable and aligned with human needs.

“The development of autonomous agents is not just a technological challenge, but a societal one. As we push forward, we must consider the broader implications of these systems on privacy, employment, and even the nature of human-AI interaction.” (Dr. Ava Richardson, AI Ethics Researcher)

As we stand on the brink of a new era in artificial intelligence, the challenges we face in developing autonomous agents are as complex as they are fascinating. By tackling multimodal data processing, human value alignment, and hallucinations, we’re not just creating smarter machines; we’re shaping the future of human-AI collaboration.

What do you think about these challenges? How might they impact the role of AI in your daily life? Let’s keep the conversation going!

The future of AI is not just about creating intelligent machines, but about fostering a symbiotic relationship between human creativity and artificial capabilities.

Conclusion: Enhancing Autonomous Agents with SmythOS

Autonomous agent architecture is driving innovation across industries. Despite challenges in scaling, managing uncertainty, and adapting to evolving goals, ongoing research is producing increasingly sophisticated agents. For technical architects and developers exploring intelligent agent capabilities, SmythOS stands out. Its visual workflow builder simplifies complex agent design, allowing for rapid prototyping and iteration. With support for multiple AI models, SmythOS enables the customization of agents for specific tasks.

A key feature of SmythOS is its advanced debugging tools, which provide insight into an agent’s “thought process.” This transparency is invaluable for fine-tuning performance and ensuring reliability. By lowering technical barriers and streamlining development, SmythOS empowers teams to leverage autonomous agents fully. As digital assistants integrate more seamlessly into workflows, platforms like SmythOS will play a pivotal role in shaping the future of intelligent automation.