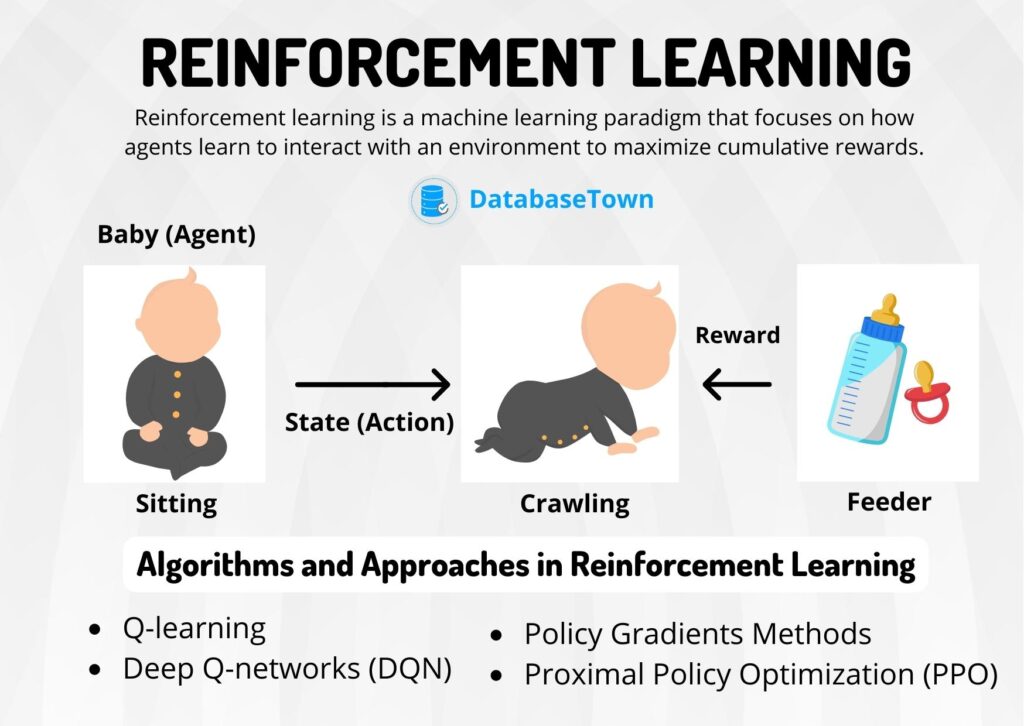

Reinforcement learning is a powerful approach to machine learning that mirrors how humans and animals naturally learn through interaction with their environment. Unlike methods that rely on pre-labeled datasets, reinforcement learning involves an agent learning to make decisions by performing actions and receiving feedback in the form of rewards or penalties.

This trial-and-error method helps the agent develop strategies to maximize cumulative rewards over time without explicit instructions.

Imagine a robot learning to navigate a maze. Instead of following programmed steps, the robot explores different paths. It receives penalties for hitting dead ends and rewards for moving closer to or reaching the exit. Through repeated attempts and adjustments based on these signals, the robot’s navigation strategy improves.

Reinforcement learning is unique because it focuses on making sequential decisions in an interactive environment rather than finding patterns in static data. This makes it suitable for tasks involving long-term planning, real-time decision-making, and adaptability to changing conditions.

We will explore the fundamental concepts, key algorithms, and applications of reinforcement learning in fields like robotics, autonomous vehicles, finance, and healthcare. Understanding how machines learn to make decisions through experience provides insights into both artificial intelligence and the nature of learning and decision-making.

Key Concepts in Reinforcement Learning

Reinforcement learning (RL) is a branch of artificial intelligence in which agents learn effective behaviors through interaction with their environment. A handful of key concepts form its backbone:

The Core Players: Agents and Environments

At the heart of reinforcement learning lies the interplay between two main actors:

- Agent: This is our artificial learner, the protagonist of our RL story. It’s the entity we’re training to perform a specific task or make decisions. Think of a robot learning to navigate a maze or an AI mastering a complex video game.

- Environment: This is the world in which our agent operates. It could be a simulated game world, a physical space for a robot, or even a complex business system. The environment responds to the agent’s actions and provides feedback.

The Building Blocks: States, Actions, and Rewards

These three elements form the core language through which agents and environments communicate:

- States: A state represents the current situation of the environment. It’s the information the agent uses to make decisions. In a chess game, the state would be the current position of all pieces on the board.

- Actions: These are the choices an agent can make to influence its environment. In our chess example, actions would be the legal moves a player can make.

- Rewards: The feedback mechanism of RL. After taking an action, the agent receives a reward signal, indicating how good or bad that action was. This could be points in a game or a measure of how close a robot is to its goal.
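The exchange of states, actions, and rewards can be sketched as a simple loop. This is a minimal illustration, not a standard API: the `CorridorEnv` class, its reward values (a small step cost and a bonus at the exit), and the two example policies are all assumptions made up for this sketch.

```python
import random


class CorridorEnv:
    """Hypothetical 1-D corridor: cells 0..length-1, with the exit at the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        """Put the agent back at the start and return the initial state."""
        self.state = 0
        return self.state

    def step(self, action):
        """Apply an action (-1 = left, +1 = right) and return (state, reward, done)."""
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        # Assumed reward scheme: small cost per step, bonus for reaching the exit.
        reward = 1.0 if done else -0.1
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode with the given policy and return the total reward."""
    state = env.reset()
    total = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent chooses an action
        state, reward, done = env.step(action)  # environment responds
        total += reward
        if done:
            break
    return total


def random_policy(state):
    """Explore by picking a direction at random."""
    return random.choice([-1, 1])


def always_right(state):
    """Head straight for the exit."""
    return 1
```

With these assumed rewards, `run_episode(CorridorEnv(5), always_right)` takes four steps and returns roughly 0.7 (three step costs plus the exit bonus), while `random_policy` usually scores lower because it wanders.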

The Decision-Making Framework: Policies and Value Functions

These concepts help the agent determine the best course of action:

- Policy: This is the agent’s strategy or behavior. It defines how the agent chooses actions in different states. A policy could be as simple as ‘always move forward’ or as complex as a neural network making nuanced decisions.

- Value Functions: These help the agent evaluate the desirability of states or actions. They estimate the expected cumulative reward the agent can obtain from a given state or by taking a specific action. Value functions are crucial for the agent to plan ahead and make optimal decisions.
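To make "expected cumulative reward" concrete, here is a small sketch of the quantity a value function estimates, together with the simplest possible policy built on top of value estimates. The discount factor `gamma` and the function names are assumptions for illustration; discounting is a common convention for weighting near-term rewards more heavily, but the right setting depends on the task.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards where the reward t steps ahead is weighted by gamma**t."""
    g = 0.0
    # Work backwards so each step only needs one multiply and one add.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def greedy_policy(action_values):
    """Pick the action with the highest estimated value from a {action: value} dict."""
    return max(action_values, key=action_values.get)
```

For example, `discounted_return([1, 1, 1], gamma=0.5)` evaluates to 1.75, and `greedy_policy({"left": 0.2, "right": 0.7})` returns `"right"`. A value function is, in effect, the agent's running estimate of `discounted_return` averaged over the futures it expects to see.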

The Predictive Element: Models

Some RL approaches use models to enhance learning:

- Model: A representation of how the environment works. It allows the agent to predict future states and rewards based on its actions. Models can speed up learning by enabling the agent to ‘imagine’ scenarios without actually experiencing them.
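One very simple way to picture a model is as a lookup table of remembered transitions that the agent can replay in its head. The sketch below assumes a deterministic environment, and `TabularModel` and `imagine_rollout` are hypothetical names; real model-based methods typically learn probabilistic or neural models instead.

```python
class TabularModel:
    """Hypothetical deterministic model: remembers the last outcome seen for each (state, action)."""

    def __init__(self):
        self.transitions = {}

    def update(self, state, action, next_state, reward):
        """Record what happened when `action` was taken in `state`."""
        self.transitions[(state, action)] = (next_state, reward)

    def predict(self, state, action):
        """Return (next_state, reward), or None if this pair was never observed."""
        return self.transitions.get((state, action))


def imagine_rollout(model, state, actions):
    """Simulate a sequence of actions using only the model, without touching the real environment."""
    total = 0.0
    for action in actions:
        prediction = model.predict(state, action)
        if prediction is None:
            break  # the model has no experience here, so stop imagining
        state, reward = prediction
        total += reward
    return state, total
```

After recording a few real transitions with `update`, the agent can call `imagine_rollout` to compare candidate action sequences before committing to one.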

Understanding these key concepts is crucial for grasping how reinforcement learning agents navigate complex decision-making processes. By leveraging these components, RL systems can tackle a wide range of challenging tasks, from game playing to robotic control and beyond.

Reinforcement learning weaves together agents, environments, and rewards to create systems that can learn, adapt, and excel in complex, dynamic worlds.

| Concept | Description | Example |
|---|---|---|
| Agent | The learner and decision-maker | A chess-playing AI |
| Environment | The world the agent interacts with | The chess board and rules |
| State | Current situation of the environment | Position of chess pieces |
| Action | Choices the agent can make | Moving a chess piece |
| Reward | Feedback on the action taken | Points for capturing a piece |
| Policy | Strategy for choosing actions | Always attack when possible |
| Value Function | Estimation of future rewards | Assessing board positions |
| Model | Agent’s representation of the environment | Predicting opponent’s moves |

By mastering these fundamental concepts, we can begin to appreciate the elegance and power of reinforcement learning. As we delve deeper into this field, we’ll see how these building blocks come together to create AI systems capable of remarkable feats of learning and adaptation.

Types of Reinforcement Learning Algorithms

There are three main types of reinforcement learning algorithms: dynamic programming, Monte Carlo methods, and temporal difference learning. Each one works differently and is suited for certain kinds of problems.

Dynamic programming methods use a complete model of the environment to find the best actions. They work well when we know everything about how the world behaves but require significant computing power for large problems.
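Value iteration is one well-known dynamic programming method. The sketch below assumes a tiny deterministic environment described by a `transitions` dictionary; that data layout, the discount factor `gamma`, and the three-state example are illustrative assumptions, not a standard format.

```python
def value_iteration(transitions, gamma=0.9, tol=1e-8):
    """Compute optimal state values for a known, deterministic environment.

    transitions[state][action] = (next_state, reward).
    A state with no actions is treated as terminal (value 0).
    """
    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, actions in transitions.items():
            if not actions:
                continue  # terminal state
            # Best achievable value: immediate reward plus discounted value of where we land.
            best = max(r + gamma * values[s2] for s2, r in actions.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


# Hypothetical three-state chain: 0 -> 1 -> 2, where reaching state 2 pays 1.0.
chain = {
    0: {"left": (0, 0.0), "right": (1, 0.0)},
    1: {"left": (0, 0.0), "right": (2, 1.0)},
    2: {},
}
```

Here `value_iteration(chain)` assigns state 1 a value of 1.0 and state 0 a value of 0.9, reflecting the one-step delay before the reward. Note that every sweep touches every state, which is why the cost grows quickly with the size of the problem.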

Monte Carlo methods learn from complete episodes of experience. They don’t need to know how the world works beforehand. These methods are simple to understand and use, but they can be slow because they wait until the end of an episode to learn.
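As a rough illustration, a Monte Carlo value estimate can be computed by averaging the returns observed after each state across finished episodes. The episode format used here (a list of `(state, reward)` pairs) and the choice of the every-visit variant are assumptions for this sketch.

```python
from collections import defaultdict


def mc_value_estimate(episodes, gamma=1.0):
    """Every-visit Monte Carlo: average the return that followed each visit to a state.

    Each episode is a list of (state, reward) pairs, where `reward` is what the
    agent received after acting in `state`.
    """
    returns = defaultdict(list)
    for episode in episodes:
        g = 0.0
        # Walk backwards so the return from each step is built up incrementally.
        for state, reward in reversed(episode):
            g = reward + gamma * g
            returns[state].append(g)
    return {s: sum(gs) / len(gs) for s, gs in returns.items()}
```

Because the return for a step is only known once the episode has ended, nothing can be learned mid-episode, which is the source of the slowness mentioned above.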

Temporal difference learning combines ideas from dynamic programming and Monte Carlo methods. It learns from parts of experiences and updates its estimates frequently, making it faster than Monte Carlo methods in many cases. It also doesn’t need a complete model of the environment like dynamic programming does.
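The simplest temporal difference method, usually called TD(0), updates a state's value after every single step by nudging it toward the reward plus the current estimate of the next state's value. In this sketch the step size `alpha`, discount `gamma`, and the dictionary-based value table are illustrative assumptions.

```python
def td0_update(values, state, reward, next_state, alpha=0.1, gamma=0.9, terminal=False):
    """Apply one TD(0) update in place and return the TD error.

    `values` maps states to current value estimates; unseen states default to 0.
    """
    # Bootstrapped target: observed reward plus the *estimated* value of what comes next.
    next_value = 0.0 if terminal else values.get(next_state, 0.0)
    target = reward + gamma * next_value
    td_error = target - values.get(state, 0.0)
    values[state] = values.get(state, 0.0) + alpha * td_error
    return td_error
```

Unlike the Monte Carlo approach, this update needs only one transition, so learning can happen during an episode rather than after it.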

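Popular algorithms that build on or extend these ideas include:

- Q-learning: An off-policy temporal difference method that learns the value of each action in each state, assuming the best action is taken afterward

- SARSA: An on-policy temporal difference method that learns the value of actions under the policy the agent is actually following

- Policy gradient methods: These adjust the policy’s parameters directly to increase expected reward, rather than deriving the policy from a value function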

- Actor-critic methods: These combine policy learning with value function estimation
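The difference between Q-learning and SARSA comes down to one term in the update target. The sketch below shows both tabular updates side by side; the function names, the `(state, action)` key layout, and the default `alpha` and `gamma` values are assumptions for illustration.

```python
from collections import defaultdict


def q_learning_update(q, s, a, r, s2, actions, alpha=0.1, gamma=0.9):
    """Q-learning: bootstrap from the best action available in the next state."""
    target = r + gamma * max(q[(s2, a2)] for a2 in actions)
    q[(s, a)] += alpha * (target - q[(s, a)])


def sarsa_update(q, s, a, r, s2, a2, alpha=0.1, gamma=0.9):
    """SARSA: bootstrap from the action the agent actually takes next."""
    target = r + gamma * q[(s2, a2)]
    q[(s, a)] += alpha * (target - q[(s, a)])


# Value table keyed by (state, action); unseen pairs default to 0.
q = defaultdict(float)
```

If the agent's next action is exploratory rather than the best known one, SARSA's target reflects that choice while Q-learning's does not, which is why the two can learn different behaviors in the same environment.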

Each type of algorithm has its strengths and weaknesses. The best choice depends on the specific problem and the information available about the environment.

Challenges with Reinforcement Learning

Reinforcement learning (RL) has shown promise in areas like game-playing and robotics, but it faces several key challenges that researchers are working to overcome. Addressing these challenges is crucial for broader real-world applications of RL.

A significant hurdle is the need for large amounts of training data. Unlike supervised learning, which learns from labeled datasets, RL agents require extensive trial-and-error interactions with an environment to discover effective strategies. This sample inefficiency can make training slow and computationally expensive, especially for complex tasks. Researchers are exploring techniques like model-based RL and transfer learning to reduce data requirements.

Another challenge is deploying RL algorithms in unpredictable real-world environments. Most current RL systems are trained and tested in controlled simulations but struggle to generalize to novel scenarios. As Robert Kirk and colleagues note in their survey, “Tackling this is vital if we are to deploy reinforcement learning algorithms in real-world scenarios, where the environment will be diverse, dynamic, and unpredictable.” Improving the robustness and adaptability of RL agents to handle unexpected situations remains an active area of research.

Additionally, delayed rewards make it difficult to ensure that RL agents pursue actions aligned with long-term goals. In many real-world applications, the consequences of an action are not immediately apparent, so the agent struggles to work out which earlier decisions led to a later outcome. The stakes are highest in safety-critical applications like autonomous driving or healthcare, where mistakes could have severe consequences. Researchers are developing safe RL approaches that incorporate constraints and risk awareness to address these concerns.

Despite these challenges, ongoing work in areas like sample-efficient algorithms, sim-to-real transfer, and safe RL is steadily advancing the field. As these hurdles are overcome, reinforcement learning has the potential to enable more capable and reliable autonomous systems across a wide range of domains.

Conclusion: Leveraging Reinforcement Learning with SmythOS

Reinforcement learning’s potential to enhance decision-making across industries is significant. From optimizing workflows to improving customer experiences, this AI technique offers a gateway to unprecedented automation and efficiency.

Traditionally, harnessing its capabilities required extensive technical expertise. However, innovative platforms like SmythOS are changing that.

SmythOS is democratizing reinforcement learning with a no-code solution, enabling domain experts to create sophisticated AI agents without implementing complex algorithms themselves. By offering intuitive drag-and-drop interfaces and pre-built components, the platform allows businesses to prototype and deploy customized reinforcement learning solutions rapidly.

SmythOS bridges the gap between advanced AI capabilities and practical business applications. Whether streamlining internal processes, developing intelligent chatbots, or optimizing resource allocation, SmythOS provides tools to transform reinforcement learning concepts into tangible business value.

The AI revolution is here, with reinforcement learning at its core. Platforms like SmythOS lower the barriers to entry, making it an ideal time to explore how this technology can drive innovation and growth in your organization. The question isn’t whether to leverage reinforcement learning but how quickly you can start reaping its benefits. Are you ready to unlock the full potential of AI automation in your business?